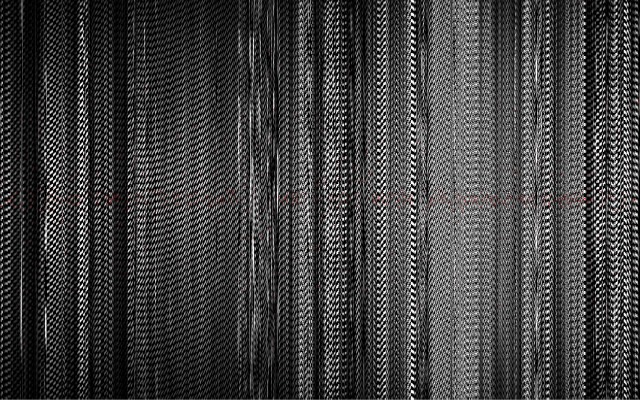

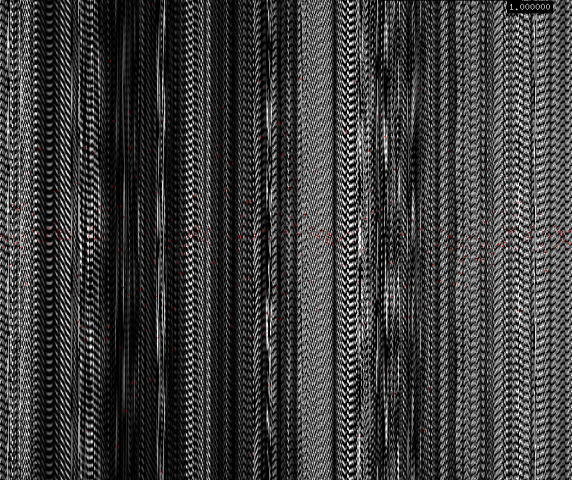

I built an ultrasonic interferometer to map and visualize the sonic texture and echo-pathways of a place. Here is an example output:

In the above image, the x-axis corresponds to time. Each position on the y-axis represents a correlation bin (a time delay between the two microphone signals); a bright spot means there was an echo at that time delay. The above image was produced from the ceiling of the STUDIO for Creative Inquiry.

why

I wanted to create sonic maps of a space, mapping sound qualia that we wouldn’t normally pay attention to.

the final setup

The setup is a SoundLazer parametric speaker, connected to a moto pre4 usb audio digitizer. I use two AKG C542BL boundary-effect microphones connected to the digitizer as the sound input. The microphones are mounted 1.6 – 2 meters apart. Note that the SoundLazer is only powered up, not plugged into any audio output. I then feed the sound input to openFrameworks, using the Eigen C++ scientific library to compute the cross-correlation of the two signals. For each short window of audio, I plot the cross-correlation of the two signals along the y-axis (one pixel per lag), sweeping this ‘cross-correlation brush’ across the x-axis as time passes. I also plot a small red dot at the lag with the maximum cross-correlation.
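As a rough illustration of what one column of the image contains, here is a minimal sketch of a cross-correlation over a range of lags for a single window of audio. It uses only the C++ standard library rather than Eigen, and the names `crossCorrelate`, `peakLag` and `maxLag` are hypothetical, not taken from the project code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Cross-correlation of two equal-length frames for lags in [-maxLag, +maxLag].
// out[k] holds the value for lag (k - maxLag): sum over i of a[i] * b[i + lag].
// A peak at a positive lag means b is a delayed copy of a.
std::vector<double> crossCorrelate(const std::vector<double>& a,
                                   const std::vector<double>& b,
                                   int maxLag) {
    assert(a.size() == b.size());
    assert(maxLag >= 0);
    const int n = static_cast<int>(a.size());
    std::vector<double> out(2 * maxLag + 1, 0.0);
    for (int lag = -maxLag; lag <= maxLag; ++lag) {
        double sum = 0.0;
        for (int i = 0; i < n; ++i) {
            const int j = i + lag;
            if (j >= 0 && j < n) sum += a[i] * b[j];  // skip samples outside the frame
        }
        out[lag + maxLag] = sum;
    }
    return out;
}

// Lag (in samples) of the largest correlation value, i.e. where the red dot goes.
int peakLag(const std::vector<double>& corr, int maxLag) {
    assert(!corr.empty());
    std::size_t best = 0;
    for (std::size_t k = 1; k < corr.size(); ++k) {
        if (corr[k] > corr[best]) best = k;
    }
    return static_cast<int>(best) - maxLag;
}
```

Each call to `crossCorrelate` on a new window would give one vertical strip of brightness values; drawing successive strips left to right gives the sweeping effect described above.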

It is also possible to send the microphone input back into the ultrasonic speaker, creating feedback effects that let you hear (in some way) what texture is there as you scan it (although then it’s just a sonic interferometer).

the process

Originally, my project was going to be audio-source localisation of footsteps using contact microphones. The audio-source localisation works by computing the cross-correlation of the two microphones’ signals. The cross-correlation will have a peak at the predicted lag time. From this, we can calculate the extra distance the signal travelled to reach one microphone compared with the other, from which we can calculate the two possible angles of the speaker relative to the baseline of the microphones. Using three microphones, we can figure out two angles from two different known baselines, giving us the approximate location of the speaker (including virtual speakers created from echoes).
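The lag-to-angle step can be sketched as follows. This is a minimal far-field approximation, assuming the source is far enough away that the extra path length is roughly the baseline times the cosine of the angle; the function names, the sample rate passed in, and the default speed of sound of 343 m/s are all assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>

// Extra distance (metres) the sound travelled to reach the later microphone,
// given the peak lag in samples.
double lagToPathDifference(double lagSamples, double sampleRate,
                           double speedOfSound = 343.0) {
    assert(sampleRate > 0.0);
    return lagSamples / sampleRate * speedOfSound;
}

// Far-field angle (radians) between the source direction and the microphone
// baseline. The second possible angle is the mirror image across the baseline,
// i.e. the negative of the returned value.
double lagToAngle(double lagSamples, double sampleRate, double baseline,
                  double speedOfSound = 343.0) {
    assert(baseline > 0.0);
    double ratio =
        lagToPathDifference(lagSamples, sampleRate, speedOfSound) / baseline;
    // Noise can push the ratio slightly outside [-1, 1]; clamp before acos.
    if (ratio > 1.0) ratio = 1.0;
    if (ratio < -1.0) ratio = -1.0;
    return std::acos(ratio);
}
```

A lag of zero gives an angle of 90 degrees (the source is broadside to the microphones), while a lag equal to the full travel time along the baseline gives 0 degrees (the source is in line with them).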

To improve the results, I whiten the cross-correlation using Roth whitening.

Although I could get the localisation working in the air using the AKG boundary microphones, the contact microphones were not sensitive enough in the low frequencies to pick up footsteps at any distance. Although the boundary microphones could very easily pick up footsteps and movement across CFA, the output wasn’t satisfactory to me (and, every time I explained the idea to someone it seemed less and less interesting).

I realised that by computing the cross-correlation of a signal I send out myself, I would be creating a sonar. I also remembered I had a SoundLazer, and at 2am thought to change my project to using the SoundLazer’s beam to scan the acoustic reflections of a location.

The change of idea required almost no change in the code (I ended up using PHAT whitening rather than Roth whitening).
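For readers unfamiliar with PHAT whitening, here is a minimal sketch of the idea: the cross-correlation is computed in the frequency domain, and each frequency bin is divided by its own magnitude so that only phase (that is, delay) information remains, which sharpens the peaks. This is not the project code. It assumes a naive O(N²) DFT from the standard library instead of Eigen or a real FFT, computes a circular correlation, and uses the hypothetical names `dft`, `gccPhat` and `phatPeakLag`.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

using cd = std::complex<double>;

// Naive DFT. sign = -1 gives the forward transform, +1 the unscaled inverse.
std::vector<cd> dft(const std::vector<cd>& x, int sign) {
    const std::size_t n = x.size();
    const double pi = std::acos(-1.0);
    std::vector<cd> out(n);
    for (std::size_t k = 0; k < n; ++k) {
        cd sum(0.0, 0.0);
        for (std::size_t t = 0; t < n; ++t) {
            const double angle = sign * 2.0 * pi *
                                 static_cast<double>((k * t) % n) /
                                 static_cast<double>(n);
            sum += x[t] * cd(std::cos(angle), std::sin(angle));
        }
        out[k] = sum;
    }
    return out;
}

// PHAT-weighted circular cross-correlation of two equal-length frames.
// out[m] peaks at m = delay of b relative to a (modulo the frame length).
std::vector<double> gccPhat(const std::vector<double>& a,
                            const std::vector<double>& b) {
    assert(!a.empty() && a.size() == b.size());
    const std::size_t n = a.size();
    const std::vector<cd> A = dft(std::vector<cd>(a.begin(), a.end()), -1);
    const std::vector<cd> B = dft(std::vector<cd>(b.begin(), b.end()), -1);
    std::vector<cd> G(n);
    for (std::size_t k = 0; k < n; ++k) {
        const cd cross = std::conj(A[k]) * B[k];
        // Whitening step: keep the phase, discard the magnitude.
        // The small constant avoids dividing by zero in empty bins.
        G[k] = cross / (std::abs(cross) + 1e-12);
    }
    const std::vector<cd> r = dft(G, +1);
    std::vector<double> out(n);
    for (std::size_t m = 0; m < n; ++m) {
        out[m] = r[m].real() / static_cast<double>(n);
    }
    return out;
}

// Index of the largest value, mapped to a signed lag in samples.
int phatPeakLag(const std::vector<double>& r) {
    assert(!r.empty());
    std::size_t best = 0;
    for (std::size_t m = 1; m < r.size(); ++m) {
        if (r[m] > r[best]) best = m;
    }
    const int n = static_cast<int>(r.size());
    const int idx = static_cast<int>(best);
    return idx > n / 2 ? idx - n : idx;
}
```

Because every bin ends up with unit magnitude, a pure delay between the two inputs produces a single sharp spike at that delay, rather than the broad hump an unweighted cross-correlation gives for band-limited sound.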

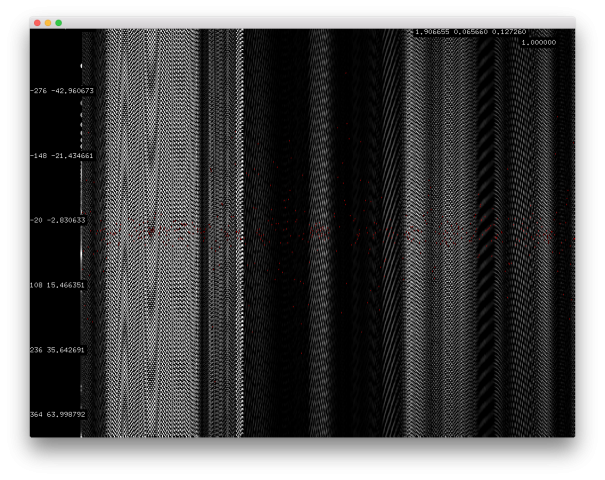

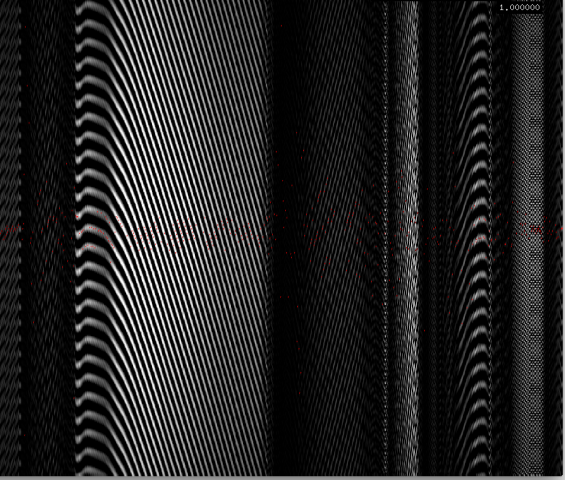

The following is a debug screenshot; the vertical text on the left marks the lag (in samples, and in projected speaker angle) that the y-axis corresponds to.

the results

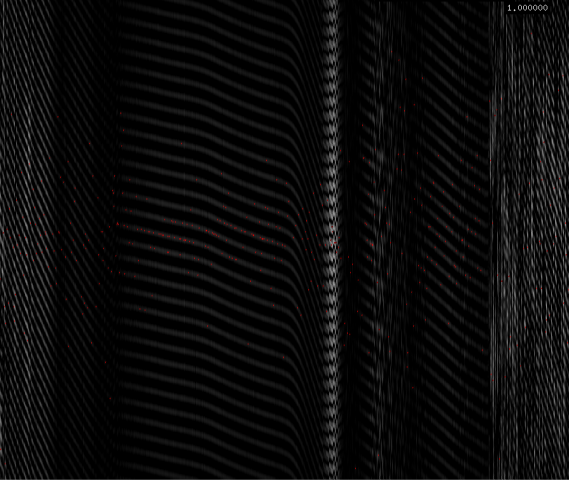

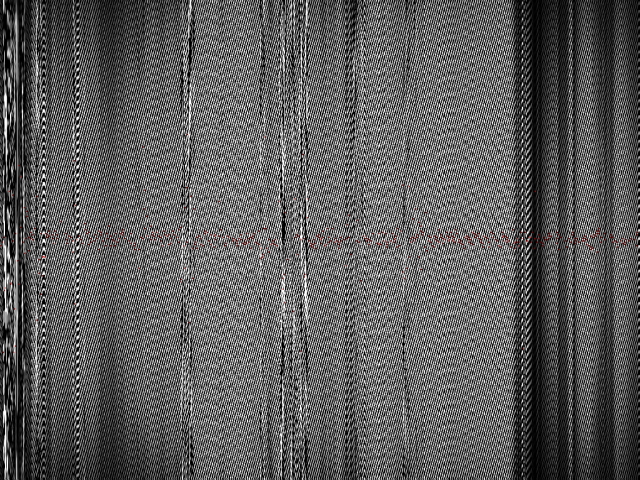

The following is produced from scanning my room.

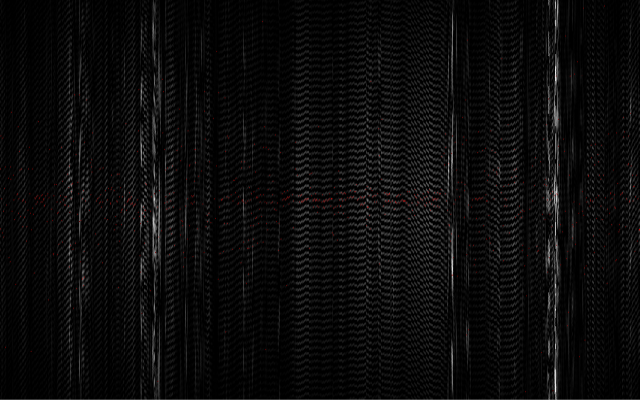

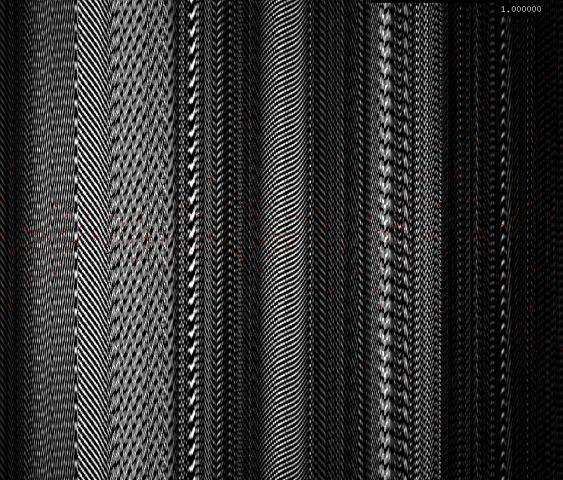

The following is produced from the entrance to the STUDIO; the bright white smudges come from people’s speech.

The following is produced from scanning the scene outside the window of the STUDIO for Creative Inquiry at noon.

The following is produced from scanning a hardwood table with other people sat around it.

The following were produced from scanning the north foyer of the College of Fine Arts.

Comments from the review.

general : awesome. Amazing. Predictability ?

COOL. YOU ARE COOL AND THIS IS COOL.

There’s potential for live performance

#unpleasantArt but in the best way +

“Compute the cross correlation” ??? Needs to be better explained. How do the images you’re presenting represent an encoding of a texture? I feel like you’re missing an opportunity to better educate us, especially in your blog post.

#Hex.sounds of the textures of the walls

What type of more interesting space could you map???? Where would you take a future iteration? – a glass box

Your capture method is very strong. Did you give any thought to the place you were scanning? I think it works best live with the spatial audio

The images you produced can’t be compared to each other. Can you move the rig in a systematic way; remove a variable. Measuring it over time is… not informative in any way.

^I feel like this is more powerful as an experience rather than the actual audio artifacts after.

I think I am going to need another explanation on how this gets the texture? This is awesome.

+1 → better documentation of your project!!

You made something that is insufferable to experience.+1+1

Would a stone room sound different? Would it absorb a lot of it?

^^Curious to see what happens if you try different materials (Glass/wood/stone/etcetc)

^yes

What about CnC routing those textures into a physical material?

Please don’t play with toys that can cause permanent hearing damage without understanding that that is the case, waving them at the class wildly. +++