Foot Classifier

Our event is stepping. We were inspired by the many tools shown during lecture, including the SenselMorph and the openframeworks eye and face trackers. We liked the idea of classifying feet, a part of the body that is often forgotten about yet is unique to every individual. We also wanted to capture the event of stepping, to see how individuals “step” and distribute pressure through their feet. As a result, we decided to make a foot classifier by training a convolutional neural network.

Process

Openframeworks and ofxSenselMorph2

First, we used Aman’s openframeworks addon ofxSenselMorph2 to get the SenselMorph working and to display some footprints. Next, we adapted the addon’s example project so that the app takes a picture of the window for every “step” on the Sensel.
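The post does not say how a “step” is detected, so the following is only a minimal sketch of one common approach: watching the total force per frame and using two thresholds so that a single footfall is counted once. The function name, the thresholds, and the force units are all hypothetical, and the real app is written in openframeworks rather than Python.

```python
def detect_steps(total_forces, press_threshold=50.0, release_threshold=10.0):
    """Return the frame indices at which a new step begins.

    total_forces: per-frame total force readings (hypothetical units).
    A step starts when the force rises to press_threshold or above, and
    no new step can start until the force has dropped to
    release_threshold or below (simple hysteresis).
    """
    steps = []
    foot_down = False
    for i, force in enumerate(total_forces):
        if not foot_down and force >= press_threshold:
            foot_down = True
            steps.append(i)      # this is where a screenshot could be triggered
        elif foot_down and force <= release_threshold:
            foot_down = False    # foot lifted, ready for the next step
    return steps


# Made-up readings: two separate footfalls.
readings = [0, 5, 60, 80, 40, 5, 0, 70, 90, 3]
step_frames = detect_steps(readings)
```

Using a lower release threshold than press threshold keeps a wobbling foot from being counted as several steps.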

Gathering Data

To train our neural network, we needed a lot of data. We got 4 volunteers (including ourselves) and collected around 200-250 training images for each person our neural net would train on.
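The post does not say whether any of these images were held out for checking the model. As a hedged sketch, assuming the images are grouped by person, one way to set aside a validation portion per person could look like this. The function name, the file names, and the 20% fraction are illustrative assumptions, not the authors' setup.

```python
import random


def split_per_person(images_by_person, val_fraction=0.2, seed=0):
    """Split each person's images into training and validation lists.

    images_by_person: dict mapping a person label to a list of image paths.
    Returns two lists of (path, label) pairs. Splitting per person keeps
    every individual represented in both sets.
    """
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    train, val = [], []
    for person, paths in sorted(images_by_person.items()):
        shuffled = list(paths)
        rng.shuffle(shuffled)
        n_val = int(len(shuffled) * val_fraction)
        val += [(p, person) for p in shuffled[:n_val]]
        train += [(p, person) for p in shuffled[n_val:]]
    return train, val


# Hypothetical file names, sized like the 200-250 images per person above.
images = {
    "person_a": [f"a_{i}.png" for i in range(200)],
    "person_b": [f"b_{i}.png" for i in range(250)],
}
train_set, val_set = split_per_person(images)
```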

Training our Neural Net

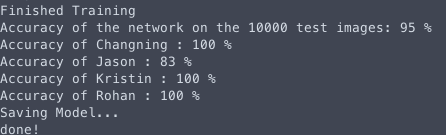

We used PyTorch, a machine learning library for Python. It took us a while to download and run the sample code, but with some help from Aman we managed to get it to train on some of our own data sets. We ran a small piece of code with the Sensel that captures each footprint through a simple gesture, which allowed us to gather our data much faster. We trained the neural net on our friend’s GPU-enabled desktop, which greatly reduced the overall time we spent developing the model.
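The sample code and network architecture are not given in the post, so the following is only a minimal sketch of what a small convolutional classifier and one training step can look like in PyTorch. The class name `FootNet`, the layer sizes, the 64x64 grayscale input, and the random stand-in data are all assumptions made for illustration.

```python
import torch
import torch.nn as nn


class FootNet(nn.Module):
    """Tiny CNN: two conv layers, then a linear layer over 4 people."""

    def __init__(self, num_classes=4):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 8, kernel_size=3, padding=1),  # 1 channel: grayscale
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(8, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d((4, 4)),  # fixed-size output for any input size
        )
        self.classifier = nn.Linear(16 * 4 * 4, num_classes)

    def forward(self, x):
        x = self.features(x)
        return self.classifier(torch.flatten(x, 1))


def train_step(model, optimizer, images, labels):
    """Run one gradient update and return the batch loss as a float."""
    model.train()
    optimizer.zero_grad()
    loss = nn.functional.cross_entropy(model(images), labels)
    loss.backward()
    optimizer.step()
    return loss.item()


# Use the GPU when one is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = FootNet().to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# Random stand-in batch: 8 fake footprint images with fake labels 0..3.
images = torch.randn(8, 1, 64, 64, device=device)
labels = torch.randint(0, 4, (8,), device=device)
loss = train_step(model, optimizer, images, labels)
```

In a real run, the random batch would be replaced by batches of saved footprint images, and `train_step` would be called in a loop over the whole data set for several epochs.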

Putting Everything Together

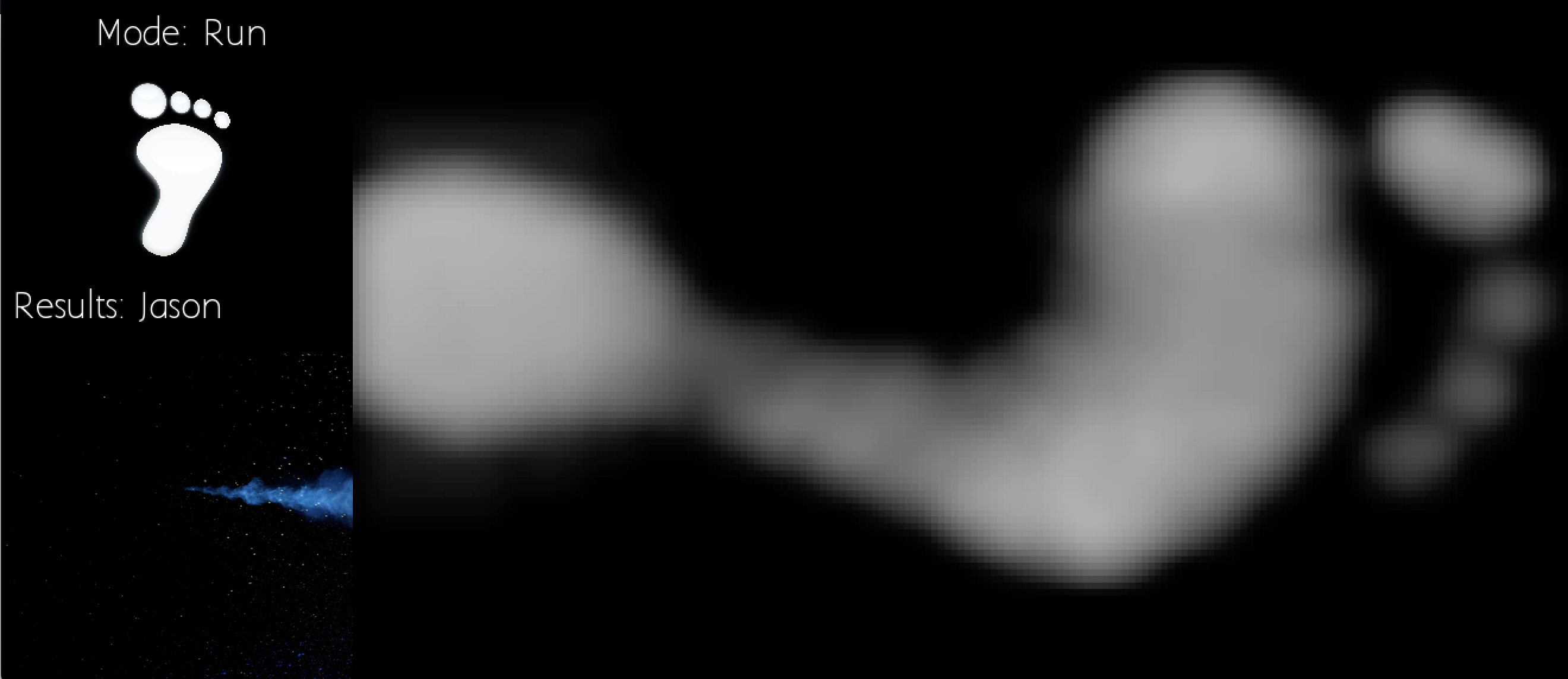

To put everything together, we combined our openframeworks app with our Python script, which takes an image and detects whose foot it is. We created 2 modes in the app: train mode, for collecting data, and run mode, for classifying someone’s foot in real time using a saved trained model. In train mode, the app saves a training image after every “step”. In run mode, the app displays its prediction after every “step”.
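The two modes can be sketched as a single handler that is called once per step. This is a Python illustration only, since the real app is an openframeworks project. The function `handle_step`, the folder layout, the file naming, and the stand-in classifier are hypothetical.

```python
import tempfile
from pathlib import Path


def handle_step(mode, image_bytes, out_dir, label=None, classify=None):
    """Handle one captured step according to the app mode."""
    if mode == "train":
        # Train mode: save the image in a folder named after the person.
        person_dir = Path(out_dir) / label
        person_dir.mkdir(parents=True, exist_ok=True)
        index = len(list(person_dir.glob("*.png")))
        path = person_dir / f"step_{index:04d}.png"
        path.write_bytes(image_bytes)
        return path
    if mode == "run":
        # Run mode: pass the image to the classifier and return its guess.
        return classify(image_bytes)
    raise ValueError(f"unknown mode: {mode!r}")


def fake_classify(image_bytes):
    """Stand-in for the trained model; always answers 'person_a'."""
    return "person_a"


with tempfile.TemporaryDirectory() as tmp:
    saved = handle_step("train", b"fake image bytes", tmp, label="person_a")
    saved_name = saved.name
    prediction = handle_step("run", b"fake image bytes", tmp, classify=fake_classify)
```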

Running Our Classifier

Overall, we were really happy with our results. Although the app did not predict every footprint with 100% accuracy, it was correct about 85-90% of the time across 4 people, and this is with 200-250 training images for each person, which is pretty darn good.
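The post does not describe how the 85-90% figure was measured. As a hedged sketch, an accuracy number like this can be computed by comparing the app's predictions against who actually stepped, and the same tally shows which people get mixed up with each other. The function name and the labels below are made up for illustration and are not the project's results.

```python
from collections import Counter


def accuracy_and_confusions(true_labels, predicted_labels):
    """Return (accuracy, confusions) for two equal-length label lists.

    confusions maps (true, predicted) pairs to counts, for wrong guesses only.
    """
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("need at least one label")
    pairs = Counter(zip(true_labels, predicted_labels))
    correct = sum(n for (t, p), n in pairs.items() if t == p)
    confusions = {pair: n for pair, n in pairs.items() if pair[0] != pair[1]}
    return correct / len(true_labels), confusions


# Made-up example: 8 steps from 4 hypothetical people.
true = ["a", "a", "b", "b", "c", "d", "d", "d"]
pred = ["a", "b", "b", "b", "c", "d", "d", "a"]
accuracy, confusions = accuracy_and_confusions(true, pred)
```

For comparison, guessing at random among 4 equally represented people would be right about 25% of the time.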

Anonymous notes from the group critique, 4/13/2017:

You need to state in a clear way what your objectives were, in a single sentence.

Overall this presentation suffered from poor explanation. The verbal presentation was poorly explained, unfortunately, and the visual explanations (diagrams, videos, images) in the blog post were very weak as well.

You make so many assumptions about what your audience knows. You assume your audience already knows what a Sensel morph is. The fact that Austin found it necessary to ask what the device is, after your six-minute presentation, is evidence of the kind of assumptions you’re making.

In your documentation video, you seem to take for granted that the viewer knows who is who. However, believe it or not, most viewers who watch this video do not know the names of the people who appear in it. Therefore it is impossible to know if your algorithm is working correctly.

Dorm-room-style documentation (again!??) is a real shame. Almost no care was taken to make this clear, legible, informative and (maybe, even) entertaining. There could have been a great video of stepping w/ a nice camera and a clean background. Instead, the dorm-room documentation video is cluttered, drab, unmemorable, and, above all, comes across as unconsidered. That 2-second animated GIF with the shaky camera (https://ems.andrew.cmu.edu/excap17/wp-content/uploads/2017/04/giphy-1.gif ) literally could not be worse!

Would have been nice to show an animated GIF of the pressure-video image early in your blog post; it would explain what kind of data you were dealing with.

Sean Follmer’s KidCad: Malleable gel surface / co-located projection, 2.5 depth. Please check out the hardware configuration and compare with the high-end pressure sensor (Sensel morph).

It’s a little hard to recognize the results.

Maybe some introduction about sensel-morph?

Cool project, very computer sciencey though. Seems like more of a machine learning class project. I’m interested in why you did this?

What is interesting about knowing or detecting someone by their foot? → Push concept further

The fact that it’s supposed to be an art interface is the most interesting thing to me

Where do you see this in context?

You omitted Golan’s request for some sort of context or inspirational prior work in your blog post and oral presentation.

Lots of missing information here. What is that device? Why did you make this? What interested you about footprints? Has anyone done this before?