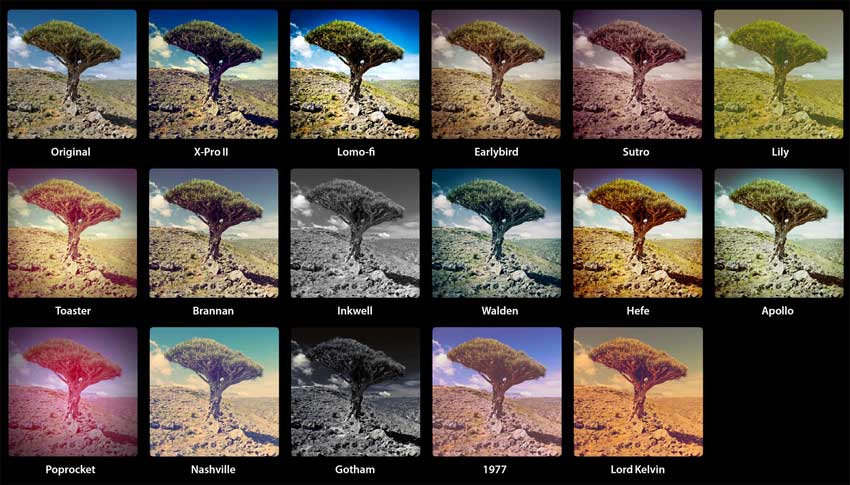

In simplest terms, I want to create an applet that “applies Instagram filters to music” or other sound files. I did a lot of research into the methods and techniques that could do this, and I have a few options, each of which would yield different results. I would have to find the right balance between what I want and what is possible.

Method 1 – FFT (spectrum analysis)

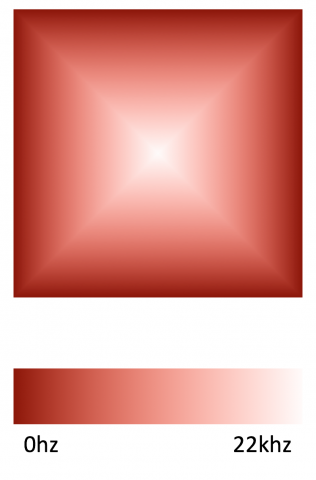

This is the original method I thought of. Human hearing covers roughly 20 Hz to 20,000 Hz, and a sound file sampled at 44.1 kHz can represent frequencies up to 22,050 Hz (half the sample rate). The applet would, ideally in real time, take each “moment” of sound (a short frame of samples) from a sound file (or mic input?), run an FFT on it to get its frequency spectrum, convert that spectrum into an RGB representation plotted as an image, apply the selected filter to the image, and read the new RGB values back in pixel by pixel to “rebuild” the sound. An FFT of N samples actually gives N/2 + 1 frequency bins rather than one value per hertz, so the image does not need 22,050 pixels.

To keep the image somewhat recognizable, I was thinking it would be organized in spiral form: the image is drawn inward starting with the outermost border of pixels, moving up the frequency range as it goes. This way the image would have bass tones on the outside, and the higher the pitch, the closer to the center. With this layout, an Instagram filter that applies a vignette effect would mainly change the bass, acting as a bass boost or cut depending on how I choose to map brightness to volume. Of course, the direction can be reversed as well (bass on the inside).
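Here is a minimal sketch of the spiral layout, written in Python rather than Processing for brevity. It assumes the spectrum has already been reduced to one number per FFT bin, such as an amplitude. It only decides which pixel each bin lands on. The function names are hypothetical. Bin 0 (the lowest frequency) goes to the top-left corner, and the walk continues clockwise and inward.

```python
def spiral_order(width, height):
    """Return (row, col) pairs walking a width x height grid from the outer
    border inward, clockwise, starting at the top-left corner."""
    top, bottom, left, right = 0, height - 1, 0, width - 1
    order = []
    while top <= bottom and left <= right:
        for c in range(left, right + 1):              # top edge, left to right
            order.append((top, c))
        for r in range(top + 1, bottom + 1):          # right edge, downward
            order.append((r, right))
        if top < bottom:
            for c in range(right - 1, left - 1, -1):  # bottom edge, right to left
                order.append((bottom, c))
        if left < right:
            for r in range(bottom - 1, top, -1):      # left edge, upward
                order.append((r, left))
        top, bottom, left, right = top + 1, bottom - 1, left + 1, right - 1
    return order


def spectrum_to_grid(values, width, height, fill=0):
    """Place values[0] (lowest frequency) on the outer border and the highest
    frequencies toward the center; pixels with no bin get `fill`."""
    grid = [[fill] * width for _ in range(height)]
    for value, (r, c) in zip(values, spiral_order(width, height)):
        grid[r][c] = value
    return grid


def grid_to_spectrum(grid, count):
    """Inverse mapping: read the first `count` values back in spiral order."""
    height, width = len(grid), len(grid[0])
    return [grid[r][c] for r, c in spiral_order(width, height)[:count]]
```

For example, a 1024-sample frame gives 513 bins, which fit in a 23 × 23 image (529 pixels) with a few unused pixels left in the middle. Reversing the list of values before placing it gives the “bass on the inside” variant.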

PROS:

- Since the filter is applied to the entire sound spectrum, and the spectrum is laid out in a human-readable, data-vis kind of way, it would act as expected and could yield recognizable, fun and understandable results (deeper bass, modified treble, etc.).

- Full control over how sound is visualized and applied. The FFT gives one complex value per frequency bin, from which an amplitude (volume) and a phase can be computed. The amplitude can be mapped to an RGBA value in any way.
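One way to turn an amplitude into a pixel value, and back again, is to use a decibel scale, since loudness is perceived roughly logarithmically. This is a sketch under two assumptions: a 60 dB range (`FLOOR_DB`), and magnitudes normalized so that `ref` is the loudest expected value.

```python
import math

FLOOR_DB = -60.0  # assumption: anything quieter than this maps to black


def magnitude_to_brightness(mag, ref=1.0):
    """Map an FFT magnitude to a 0-255 brightness on a decibel scale."""
    db = 20 * math.log10(max(mag, 1e-12) / ref)  # avoid log10(0)
    db = min(0.0, max(FLOOR_DB, db))             # clamp to [FLOOR_DB, 0]
    return round(255 * (db - FLOOR_DB) / -FLOOR_DB)


def brightness_to_magnitude(brightness, ref=1.0):
    """Inverse of magnitude_to_brightness (exact only up to rounding)."""
    db = FLOOR_DB + (brightness / 255) * -FLOOR_DB
    return ref * 10 ** (db / 20)
```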

CONS:

- Real-time processing may be slow. Rebuilding sound from modified FFT values is possible with an inverse FFT, since the FFT of a block of samples is an exact, invertible transform. However, this only works if each bin's phase is kept alongside its amplitude. Sound is also usually processed in short, overlapping, windowed frames that are summed back together (overlap-add), which adds complexity.

- The FFT gives one amplitude (volume) per frequency bin. A pixel is made up of RGB (or HSB) values, and optionally an alpha value. An Instagram filter will modify not only brightness but possibly hue as well. How do I convert all those variables back and forth with amplitude? Would a hue shift result in a tonal shift too? Is brightness volume? Are all “tones/similar hues” averaged for volume? Added? Mean? Mode?
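On rebuilding the sound: a Fourier transform of a block of samples can be inverted exactly. Edited audio can therefore be recovered as long as each bin keeps its phase and only its magnitude is scaled. The sketch below uses a naive DFT; an FFT library gives the same numbers, much faster. It takes one non-negative gain per bin. Where that gain comes from is a design assumption, for example the ratio of a pixel's brightness after the filter to its brightness before.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform (use an FFT library in practice)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse DFT; returns only the real part, since our frames are real audio."""
    n = len(X)
    return [(sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def apply_bin_gains(frame, gains):
    """Scale each frequency bin's magnitude by gains[k] (keeping its phase),
    then rebuild the time-domain frame.

    gains needs len(frame) // 2 + 1 entries (bins 0..Nyquist); the mirrored
    negative-frequency bin gets the same gain so the output stays real.
    """
    n = len(frame)
    X = dft(frame)
    for k, g in enumerate(gains):
        X[k] *= g                 # a positive real gain leaves the phase unchanged
        mirror = (n - k) % n
        if mirror != k:
            X[mirror] *= g
    return idft(X)
```

For a continuous stream, frames would normally be windowed and overlapped, then added back together (overlap-add), so that gain changes between frames do not cause clicks.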

Method 2 – Equalizer

This is similar to Method 1 in that it applies the effect to the sound at each moment as it plays, preferably in real time. However, instead of using an FFT to analyze the full spectrum, it would go the route of sound equalizers, like the ones you'd see in DJ software or HiFi systems. I tried to find examples of this in Python and Java, but they're hard to find online and I don't quite understand how I'd do this. The Minim library for Processing has “low-pass” and “high-pass” filters, but I'm not quite sure how to adjust them. I also don't know how to apply them to specific frequencies rather than just “high end” and “low end” sound (that is the job of a band-pass or peaking filter, which works around a chosen center frequency).

The use of Instagram filters would also be different than in Method 1. I'm not quite sure how it would work, but my thought is to apply the Instagram filter to a constant original image and “calculate the difference” between before and after, pixel by pixel. I would then apply those differences to specific frequencies or frequency ranges on the equalizer. Essentially, the Instagram filters would be run once, perhaps loaded as variables in setup(), and the difference mentioned above would be reduced to a simple mathematical expression (such as a gain per band) to be performed on the equalizer.
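The “calculate the difference once in setup()” idea could look something like this. It is a hypothetical mapping. The reference image is split into equal vertical strips, with the lowest band on the left. Each strip's change in average HSB brightness becomes one equalizer gain in decibels. Hue is ignored here, and the function names are illustrative only.

```python
import math


def strip_brightness(image, bands):
    """Average HSB brightness (max of R, G, B, scaled to 0..1) of each of
    `bands` equal-width vertical strips; image is rows of (r, g, b) tuples."""
    width = len(image[0])
    totals = [0.0] * bands
    counts = [0] * bands
    for row in image:
        for x, (r, g, b) in enumerate(row):
            band = x * bands // width  # which strip this column falls in
            totals[band] += max(r, g, b) / 255
            counts[band] += 1
    return [t / c if c else 0.0 for t, c in zip(totals, counts)]


def band_gains_db(before, after, bands, floor=1e-6):
    """One EQ gain (in dB) per band: how much brighter (+) or darker (-)
    the filtered image got in that strip."""
    b0 = strip_brightness(before, bands)
    b1 = strip_brightness(after, bands)
    return [20 * math.log10(max(a, floor) / max(b, floor)) for b, a in zip(b0, b1)]
```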

PROS:

- Since the filter is applied to the entire sound spectrum, and the spectrum is laid out in a human-readable, data-vis kind of way, it would act as expected and could yield recognizable, fun and understandable results (deeper bass, modified treble, etc.).

- Full control over how sound is visualized and applied. An equalizer applies one gain change (amplitude/volume) per frequency band, which can be derived from an RGBA value in any way.

- Real-time processing is possible, depending on the efficiency of my code. Other software does this, so why can't mine?
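Each equalizer band can be a peaking filter: a biquad that boosts or cuts a region around a chosen center frequency. This addresses the “specific frequencies rather than just high end and low end” problem. This sketch uses the peaking-EQ coefficients from Robert Bristow-Johnson's Audio EQ Cookbook and processes one sample at a time, which is cheap enough for real time. The `q` parameter controls how wide the band is.

```python
import math


class PeakingEQ:
    """One band of a parametric equalizer: a peaking biquad filter using the
    formulas from Robert Bristow-Johnson's Audio EQ Cookbook."""

    def __init__(self, sample_rate, center_hz, gain_db, q=1.0):
        a = 10 ** (gain_db / 40)
        w0 = 2 * math.pi * center_hz / sample_rate
        alpha = math.sin(w0) / (2 * q)
        cos_w0 = math.cos(w0)
        a0 = 1 + alpha / a
        # Coefficients normalized by a0.
        self.b0 = (1 + alpha * a) / a0
        self.b1 = (-2 * cos_w0) / a0
        self.b2 = (1 - alpha * a) / a0
        self.a1 = (-2 * cos_w0) / a0
        self.a2 = (1 - alpha / a) / a0
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0  # previous inputs/outputs

    def process(self, x):
        """Filter one sample (Direct Form I)."""
        y = (self.b0 * x + self.b1 * self.x1 + self.b2 * self.x2
             - self.a1 * self.y1 - self.a2 * self.y2)
        self.x2, self.x1 = self.x1, x
        self.y2, self.y1 = self.y1, y
        return y
```

A whole equalizer is then just a chain of these, one per band, with each band's gain set from the image. Each sample goes through every band's `process` in turn.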

CONS:

- A pixel is made up of RGB (or HSB) values, and optionally an alpha value. An Instagram filter will modify not only brightness but possibly hue as well. How do I convert all those variables into equalizer adjustments? How would hue shifts and brightness shifts change things separately?
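One possible way to separate the two effects is to convert each before/after pixel to HSV with Python's `colorsys` module. The brightness change can then be treated as a gain, and the hue change as something else. Here, a full turn of the hue wheel shifts the band's center frequency by one octave. That mapping is purely a hypothetical choice, as are the function names.

```python
import colorsys
import math


def pixel_change(before_rgb, after_rgb):
    """Split a before/after pixel change (0-255 RGB) into a brightness
    change in dB and a hue shift in turns, in the range [-0.5, 0.5)."""
    h0, _, v0 = colorsys.rgb_to_hsv(*(c / 255 for c in before_rgb))
    h1, _, v1 = colorsys.rgb_to_hsv(*(c / 255 for c in after_rgb))
    gain_db = 20 * math.log10(max(v1, 1e-6) / max(v0, 1e-6))
    hue_shift = (h1 - h0 + 0.5) % 1.0 - 0.5  # shortest way round the color wheel
    return gain_db, hue_shift


def adjust_band(center_hz, before_rgb, after_rgb):
    """Hypothetical mapping: brightness -> band gain, and one full turn of
    hue -> moving the band's center frequency by one octave."""
    gain_db, hue_shift = pixel_change(before_rgb, after_rgb)
    return center_hz * 2 ** hue_shift, gain_db
```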

Method 3 – Song File

This is perhaps my least favorite idea because it applies to the entire song at once, which may yield results that don't quite make sense. In this method I would read a sound file's encoding, such as .wav or .mp3. I would then either decode it into raw audio samples or directly convert the file's bytes linearly into some kind of image (hexadecimal to RGB hex?), and that image would represent the music file as a whole. However, applying an Instagram filter to it would yield weird results. In Method 1, a vignette could act as a bass boost. In this method, it may just affect the volume at the beginning and end of a song, for example. The other glaring problem is that messing with the encoding directly may just result in absolute gibberish noise.
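Decoding first, rather than editing raw file bytes, avoids the corrupted-header problem. For WAV this is straightforward with Python's standard `wave` module, which parses the header and hands back only the audio data. This sketch assumes 16-bit PCM; stereo samples come back interleaved. It uses a hypothetical, lossy mapping of each sample to one grey level. MP3 would need a separate decoder library.

```python
import struct
import wave


def read_wav_samples(path_or_file):
    """Return (params, samples) for a 16-bit PCM WAV. The wave module parses
    the header, so only the audio data ends up in `samples`."""
    with wave.open(path_or_file, "rb") as w:
        params = w.getparams()
        if params.sampwidth != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        raw = w.readframes(params.nframes)
    count = len(raw) // 2
    samples = list(struct.unpack("<%dh" % count, raw))  # WAV data is little-endian
    return params, samples


def samples_to_pixels(samples):
    """Hypothetical mapping: each signed 16-bit sample becomes a 0-255 grey
    level (its high byte), so this direction is lossy."""
    return [(s + 32768) >> 8 for s in samples]
```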

PROS:

- Potentially very fast execution, and it could spit out a new, savable file as well (or just play it back, which probably requires saving the file anyway and re-loading it with a proper MP3/WAV reader library).
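For WAV, saving the result as a new, playable file is also possible with the standard `wave` module. This sketch assumes the edited image is a list of 0-255 grey levels, each standing for one 16-bit sample. That is a hypothetical mapping in which the grey level becomes the sample's high byte.

```python
import struct
import wave


def pixels_to_samples(pixels):
    """Hypothetical mapping: a 0-255 grey level becomes the high byte of a
    signed 16-bit sample (the low byte is set to the middle of each step)."""
    return [(int(p) << 8) - 32768 + 128 for p in pixels]


def write_wav_samples(path_or_file, samples, nchannels=1, framerate=44100):
    """Save 16-bit PCM samples as a normal WAV file that any player can open."""
    clipped = [max(-32768, min(32767, s)) for s in samples]
    with wave.open(path_or_file, "wb") as w:
        w.setnchannels(nchannels)
        w.setsampwidth(2)
        w.setframerate(framerate)
        w.writeframes(struct.pack("<%dh" % len(clipped), *clipped))
```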

CONS:

- Potentially garbage noise, or breaking the encoding/file entirely

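- Encoding/decoding compressed formats like MP3 is extremely complicated. Uncompressed WAV is simpler (a header followed by raw PCM samples), but even reading it correctly still means parsing that header.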