For this project I am working with Quan. After getting to know each other and brainstorming many ideas, some general and some specific to each other’s interests and attributes, we settled on creating a portrait of ourselves through our portraits of the world. We are both photographers, and we want to use the metadata and other information available in our photographs to paint a picture of how we photograph the world, what our interests and habits are when it comes to photography, etc.

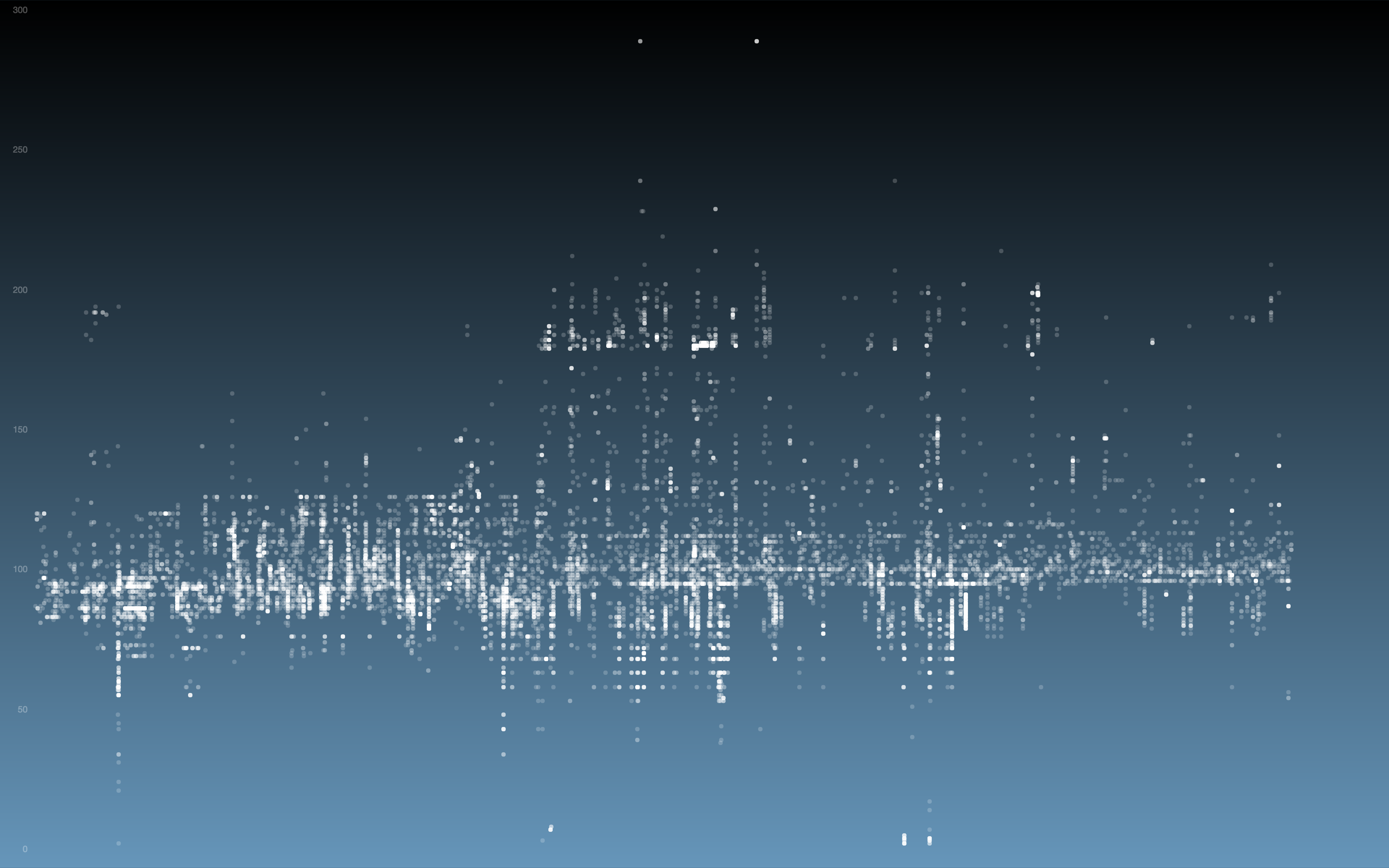

We are still coming up with variations and implementations of our chosen subject, but one that we are sure to do is creating an “illumination portrait,” a visualization of the lighting conditions we generally shoot in, based on a value derived from the combination of shutter speed, aperture and ISO (grabbed from the EXIF data of all available .jpg images). An example, my own portrait (pictured), is built from the 20k+ images on my computer. Quan, whose photo count is much higher (he never deletes any photos), is currently converting all of his RAW images to JPG for analysis, which will hopefully be done within the next few days.
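One common way to fold shutter speed, aperture and ISO into a single brightness number is the exposure value normalized to ISO 100. The sketch below assumes that formula, which may differ from the exact calculation behind the portrait. The function names and the sample settings are made up for illustration, and reading the values out of the EXIF data is left out.

```python
import math
from collections import Counter

def light_value(f_number, exposure_time_s, iso):
    """Estimate scene brightness in stops (exposure value normalized to ISO 100)."""
    ev = math.log2(f_number ** 2 / exposure_time_s)  # EV of the camera settings
    return ev - math.log2(iso / 100)  # a higher ISO implies a darker scene

def illumination_histogram(shots):
    """Count shots per whole-stop bin; `shots` holds (f_number, time_s, iso) tuples."""
    return Counter(round(light_value(n, t, s)) for n, t, s in shots)

# Hypothetical settings for illustration, not values from our photos.
sample = [(16, 1 / 125, 100), (2.8, 1 / 60, 800), (1.8, 1 / 30, 3200)]
for stop, count in sorted(illumination_histogram(sample).items()):
    print(f"EV {stop:>3}: {'#' * count}")
```

Higher values correspond to brighter scenes (full daylight lands around 15), so the shape of the histogram hints at whether someone mostly shoots outdoors in the sun or indoors and at night.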

Another idea involves running our images through Google Vision to get an idea of the subject matter, but this gets very tricky on multiple levels. Some problems we’ve run into with this idea are:

- Google Vision spits out too many variations of subjects, and they often don’t align with “photography categories”

- Running all of our images through Google Vision will cost a lot of resources (time, money)

- Sometimes it is wrong. Or weird.