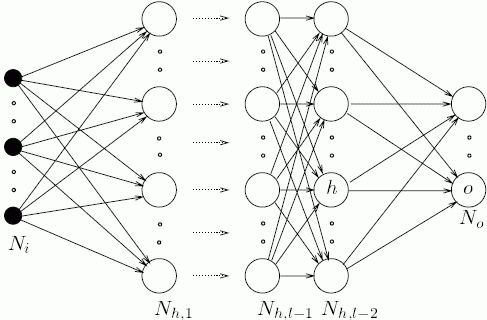

An artificial neural network is a

which

thus, we can then

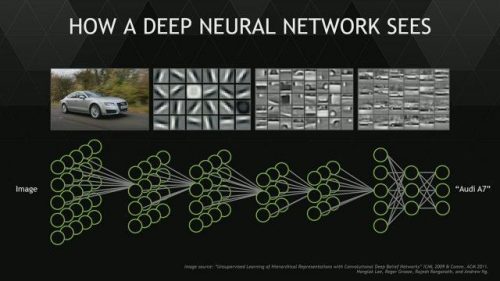

Now that we’re clear on what a neural network is in general, we can look at what neural networks can do. Deep learning is integral to emerging technologies such as autonomous vehicles, computer vision, statistical analysis, and artificial intelligence.

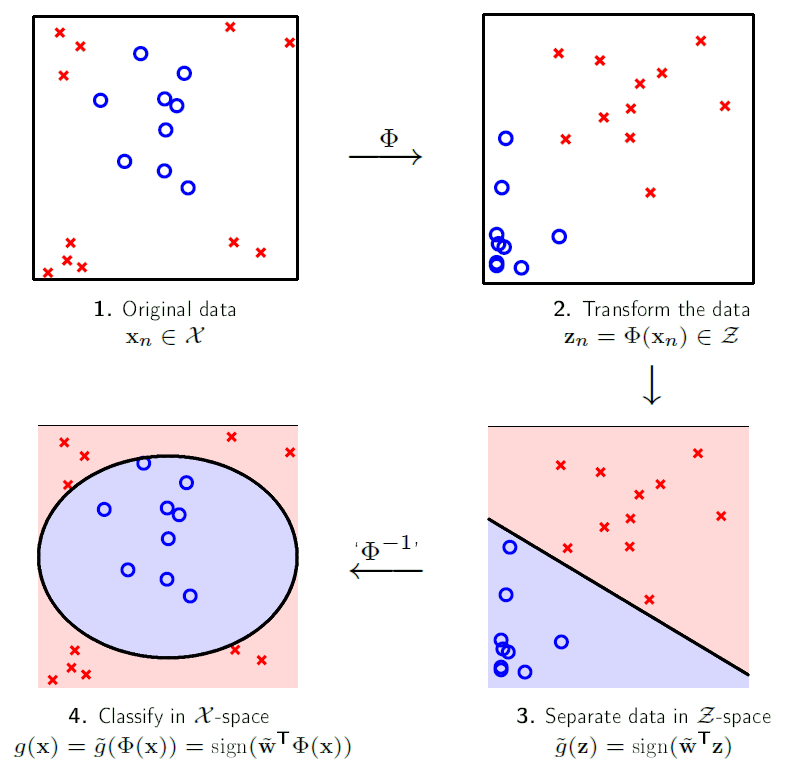

Recently, a research paper was published that details using neural networks to manipulate images. The basic process is as follows: the network is given an image as input and tries to change it to match a second image.

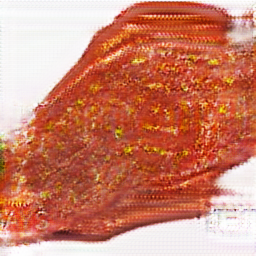
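To make the idea of learning from image pairs concrete, here is a minimal sketch in plain Python. It is a toy stand-in, not the paper's network: an "image" is assumed to be a flat list of brightness values, and the whole "network" is a single gain and bias applied to every pixel. The function names and the training data are hypothetical, invented only for illustration. What it shares with the real process is the loop: transform the input, compare the result with the paired target, and nudge the parameters to shrink the difference.

```python
# Toy stand-in for paired image translation (NOT the paper's network):
# an "image" is a flat list of brightness values in [0, 1], and the
# "network" is just output = gain * pixel + bias.

def apply_mapping(image, gain, bias):
    """Transform every pixel of an image with the learned mapping."""
    return [gain * x + bias for x in image]


def train_pixel_mapping(pairs, steps=5000, lr=0.1):
    """Fit gain and bias by gradient descent on mean squared pixel error."""
    gain, bias = 1.0, 0.0  # start from the identity mapping
    n = sum(len(source) for source, _ in pairs)  # total pixel count
    for _ in range(steps):
        grad_gain = grad_bias = 0.0
        for source, target in pairs:
            for x, y in zip(source, target):
                err = (gain * x + bias) - y  # how far the output is from the target
                grad_gain += 2.0 * err * x / n
                grad_bias += 2.0 * err / n
        gain -= lr * grad_gain
        bias -= lr * grad_bias
    return gain, bias


# Hypothetical training data: each "after" image is a darker copy of its "before".
before_images = [
    [0.9, 0.8, 0.7, 0.95],
    [0.2, 0.6, 0.4, 0.85],
    [0.1, 0.3, 0.5, 0.75],
]
after_images = [[0.5 * x + 0.1 for x in image] for image in before_images]
pairs = list(zip(before_images, after_images))

gain, bias = train_pixel_mapping(pairs)

# Apply the learned transformation to an image that was not in the training set.
print(apply_mapping([1.0, 0.0, 0.5], gain, bias))
```

Because the mapping is learned from pairs rather than written by hand, it can be applied to any new input, including inputs unlike anything it was trained on. A real image-translation network has vastly more parameters than this sketch, but that same property is what makes it possible to feed it pictures it has never seen.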

I trained a neural network on images of fresh fruit paired with images of rotten fruit, so it learned to rot or unrot any image it is given. I then fed the network images that were not fruit. The results are mixed:

Net #2:

indreams from David Gordon on Vimeo.

Net #3:

training images:

which is ‘unrotted’ to:

sometimes it makes mistakes:

which is ‘rotted’ to:

actual rotten cucumber:

mistakenly ‘unrotted’ into a strawberry:

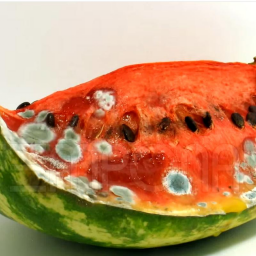

but it really knew how to rot watermelons:

Fresh MJ from David Gordon on Vimeo.

Anonymous feedback from group critique, 4/13/2017:

NEURAL NETS, RIGHT?

Great public speaker!

In order to explain what you’re doing,

1. You need to show visual examples of Pix2Pix, so that an intelligent layperson understands that a neural net can be trained to reproduce a visual transformation (such as “before/after”). A good example people might recognize is the lines2cats example from online.

2. You need to show specific examples of the fresh and rotten images that you collected. You mention that there are huge communities who do this; in explaining your project, you need to show this to us, so that we understand the kinds of materials you’re using to train your network.

Show a video from youtube of the rotting fruit you’re using

I don’t think you should touch the King of Pop — it’s enough to make a fruit-rotter, and then rot some other everyday objects (such as a soccerball!). I just don’t get why you’re using Michael Jackson, it seems arbitrary. If you really want to show the rotting of a face, I recommend choosing a still image (such as a self-portrait) rather than a video, so that you don’t have temporal coherence to deal with.

→ I disagree, I think it adds to the project. If he hadn’t taken it to another level, it would have just been a study instead of an art project.

Oh my god I love this.

Nicely documented and good final form

The unrotting of the watermark is funny

Your GAN suffered some mode-collapse, try using a WGAN, BEGAN or Least-Squares GAN as the internal GAN in pix2pix.

Can you collaborate with Caroline’s portrait of you, to turn your sketchbook drawings rotten??? Can you rot still images of faces? (yes he can, he did the rotting frame by frame)

Pix2pix is fast enough to run live in feedforward mode too, you could have a live webcam that rots things

What if u unrotted zombies from movies

What if u applied it to corpses

What if u applied it to David Wojnarowicz’s A Fire in My Belly

What if u trained it on decaying bodies instead (ew)

I want to know what David Lynch’s reaction to your Blue Velvet rot would be. +

Charlie White’s “Picture of Dorian Gray” comment was very intelligent and applicable! You should look into that ++++ ++++

Reminds me of:

http://www.popularmechanics.com/technology/a25344/draw-your-own-nightmare-cats-with-this-ai-program/