THE PULSE OF SHIBUYA

I decided to capture a place that has fascinated me ever since my first trip to Japan: Shibuya Crossing in Tokyo. The number of people crossing the road in every direction (which almost feels too disorganized for Japan) is huge, and there, more than anywhere else, you can feel that Tokyo is one of the most densely populated cities in the world. I wanted to somehow capture the motion, the dynamics, the hustle and bustle of that place.

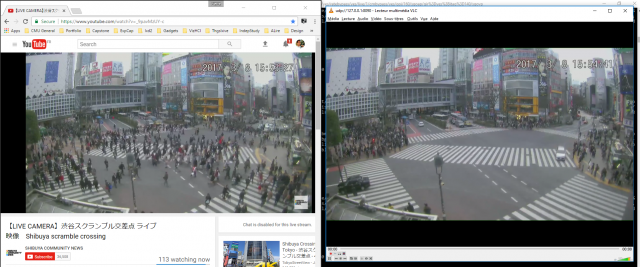

I quickly discovered that a live stream of the crossing could be accessed on YouTube at any time at the following link.

I was inspired by the work of Kyle McDonald, who managed to capture a sense of life in Piccadilly Circus in London using a webcam (http://www.exhaustingacrowd.com/london), and decided to use this feed as my input.

Getting the Live Cam… On Windows!

The first part of my work, which actually took far longer than I expected, consisted of getting the live stream from Shibuya onto my computer. This was not trivial: the camera's IP address was not available to the public, and YouTube doesn't provide a way to access the raw stream due to its privacy policy. I eventually managed to get the HLS URL of the video through the command-line program youtube-dl. However, this link didn't seem to work in regular video players such as VLC (only the first frame showed up). After days of tweaking, I finally used another command-line tool, FFmpeg, to re-stream this link to a local port on my computer over UDP.
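The re-streaming step can be sketched as a small C++ helper that assembles the FFmpeg command line. This is a minimal sketch, not the exact command used in this project: the helper names `buildRestreamCommand` and `startRestream` are hypothetical, and copying the streams without re-encoding (`-c copy`) into an MPEG-TS container (`-f mpegts`) are assumptions. The HLS URL is assumed to come from `youtube-dl -g`, which prints the direct media URL of a video.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>

// Hypothetical helper: builds an FFmpeg command that reads an HLS playlist URL
// and re-streams it, without re-encoding, as MPEG-TS over UDP to host:port.
std::string buildRestreamCommand(const std::string& hlsUrl,
                                 const std::string& host, int port) {
    assert(port > 0 && port < 65536);
    return "ffmpeg -i \"" + hlsUrl + "\""  // input: the HLS playlist (quoted for the shell)
           " -c copy"                       // copy audio/video streams as-is
           " -f mpegts"                     // assumed container, suited to UDP
           " udp://" + host + ":" + std::to_string(port);
}

// Hypothetical launcher: runs the command through the shell and returns
// the status reported by std::system (FFmpeg keeps running while streaming).
int startRestream(const std::string& hlsUrl, const std::string& host, int port) {
    return std::system(buildRestreamCommand(hlsUrl, host, port).c_str());
}
```

For example, with host 127.0.0.1 and port 1234, VLC could then open `udp://@:1234` as a network stream.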

I could now watch the stream on my computer by decoding it in VLC. You can see in the following picture that I actually received the camera feed more than a minute before YouTube published it.

While VLC was able to decode the video over UDP, I now had to do the same in openFrameworks. After a few unsuccessful attempts, and more time spent than I should have, I decided to move on to the computer vision part and to use a 90-minute recording of the webcam that I had captured with FFmpeg.

Future work will consist of either finding a better protocol than UDP to re-stream the camera feed, or decoding the UDP stream in openFrameworks, perhaps using the same method as ofxIpVideoGrabber (https://github.com/bakercp/ofxIpVideoGrabber) but with UDP (and ofxUDPManager) instead of HTTP.
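As a first step toward decoding the UDP stream in openFrameworks, the bytes of each received datagram (for example from ofxUDPManager) would have to be split into transport-stream packets before being handed to a decoder. The sketch below assumes the feed is MPEG-TS, as it would be if FFmpeg muxed it with `-f mpegts`; MPEG-TS packets are 188 bytes long and start with the sync byte 0x47. The names `parseDatagram` and `TsPacketInfo` are hypothetical, and the later steps (reassembling the payloads and decoding video frames) are not shown.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumption: each UDP datagram carries whole 188-byte MPEG-TS packets.
constexpr std::size_t kTsPacketSize = 188;
constexpr std::uint8_t kTsSyncByte = 0x47;

// Hypothetical summary of one transport-stream packet header.
struct TsPacketInfo {
    std::uint16_t pid;      // 13-bit packet identifier (which elementary stream)
    bool payloadUnitStart;  // true when a new payload unit begins in this packet
};

// Splits one datagram into TS packets and reads each header. Packets that do
// not start with the sync byte are skipped; trailing partial data is ignored.
std::vector<TsPacketInfo> parseDatagram(const std::uint8_t* data, std::size_t size) {
    assert(data != nullptr || size == 0);
    std::vector<TsPacketInfo> packets;
    for (std::size_t offset = 0; offset + kTsPacketSize <= size; offset += kTsPacketSize) {
        const std::uint8_t* p = data + offset;
        if (p[0] != kTsSyncByte) continue;
        TsPacketInfo info;
        info.pid = static_cast<std::uint16_t>(((p[1] & 0x1F) << 8) | p[2]);
        info.payloadUnitStart = (p[1] & 0x40) != 0;
        packets.push_back(info);
    }
    return packets;
}
```

The PID tells which elementary stream (video, audio, or tables) a packet belongs to, so the video packets could be filtered out before decoding.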

What to do with it

– Getting the positions of people in the video? I took measurements of the crossing in Google Maps.

– Played with the regular OpenCV addon, but its contour finder didn't provide the information I wanted: it was too complex to filter out the important information.

– Switched to Kyle McDonald's ofxCv addon. Its current version didn't work well on Windows (GLM issues), so I installed a previous version of the addon, tweaked it, and got it to compile.

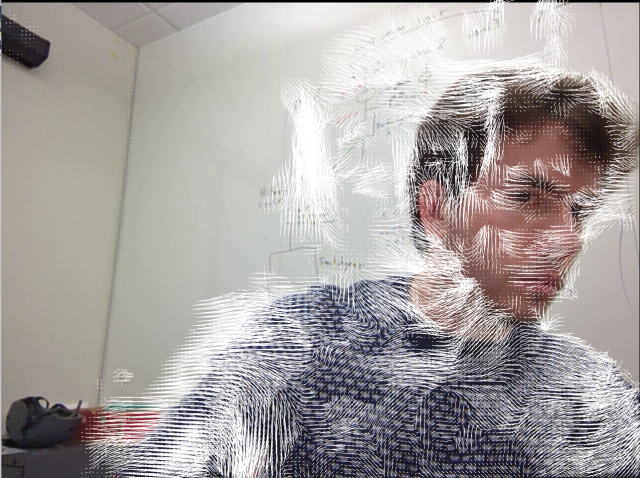

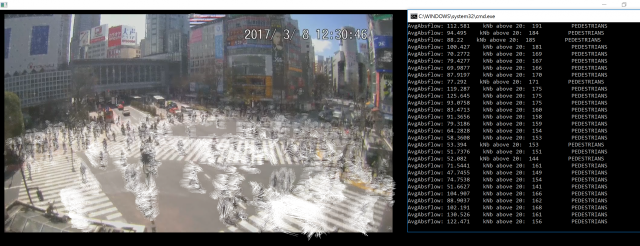

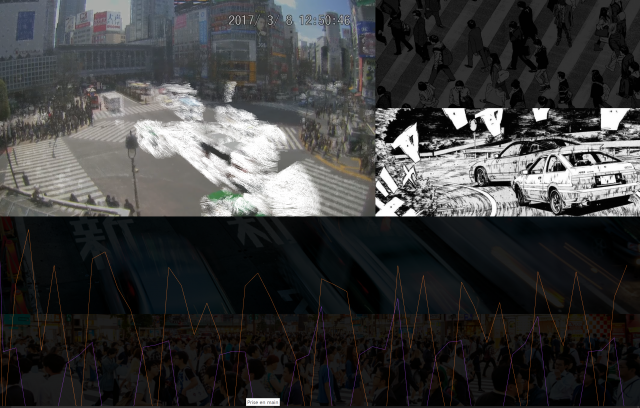

– Started monitoring two factors, speed and movement, to differentiate cars from people. The settings will have to be adjusted based on the time of day, but this already gives a nice overview.

– Output: a pulse, plus ghostly movements.
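The speed-based split between cars and people can be sketched as follows, assuming the optical flow has already been computed as one displacement vector per sampled pixel (ofxCv offers a dense Farneback-based flow, for instance). This is a hypothetical illustration rather than the project's code: `summarizeFrame` and both thresholds are made up and would need tuning for the camera and the time of day. The per-frame `pulse`, the mean speed of the moving vectors, is one possible signal to plot over time as the crossing's pulse.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// One optical-flow vector, in pixels per frame (hypothetical input format).
struct Flow {
    float dx;
    float dy;
};

struct FrameStats {
    int people = 0;      // moving vectors slower than carMinSpeed
    int cars = 0;        // moving vectors at or above carMinSpeed
    float pulse = 0.0f;  // mean speed of all moving vectors (0 if nothing moves)
};

// Classifies each flow vector by its speed. Both thresholds are hypothetical
// and would need tuning for the camera position and the time of day.
FrameStats summarizeFrame(const std::vector<Flow>& flow,
                          float minSpeed = 0.5f, float carMinSpeed = 4.0f) {
    assert(minSpeed >= 0.0f && carMinSpeed >= minSpeed);
    FrameStats stats;
    float totalSpeed = 0.0f;
    for (const Flow& f : flow) {
        const float speed = std::hypot(f.dx, f.dy);
        if (speed < minSpeed) continue;  // treat as static background or noise
        if (speed >= carMinSpeed) {
            ++stats.cars;
        } else {
            ++stats.people;
        }
        totalSpeed += speed;
    }
    const int moving = stats.people + stats.cars;
    if (moving > 0) stats.pulse = totalSpeed / static_cast<float>(moving);
    return stats;
}
```

A single speed threshold is the simplest possible split; it will misclassify slow-moving cars and running pedestrians, which is one reason the settings depend on the time of day.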

Comments from the Group Review

Examination of an intersection in Japan: Shibuya Crossing in Tokyo. He used a YouTube webcam stream, but it was difficult to acquire. He used a recording instead and analyzed its optical flow.

General: look at the wind map of the US. Consider stripping the background and using only movement patterns. Potential generator of audio? Do you want to impose a pulse, or allow people to find the pulse themselves? This is very close to developing a fingerprint of a place, derived from the motion a camera captures. Flow over the course of a day?

It would be good to make your velocity diagram more legible: the purple graph is very hard to read. Labels?

Generator for audio

There are some tools for siphoning YouTube videos into OF. Ben Snell here at CMU did this in his Bald Trump project: https://www.youtube.com/watch?v=5Ce1EAZ3vE0, http://www.ben-snell.com/Samson, https://github.com/bensnell/samson

Not sure if this would work in your case, but Syphon tools can be very useful for getting video from one app to another. Syphoner (although not great) is pretty easy to work with, especially with OSC.

I feel like you have created a capture method, but what is the media artifact that you are making?

The car ghosts and their interaction with the people ghosts are cool!

I love how people just look like they are part of a weather forecast.

Optical Flow is a great/interesting way to find the data hidden in this stream

If you do median blending over a lot of pictures, you should be able to remove all the humans and then subtract the noisy background if you wanted to. +
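The median-blending suggestion can be sketched as a per-pixel temporal median. This minimal sketch assumes grayscale frames of identical size stored as byte arrays; `medianBackground` and `subtractBackground` are hypothetical helpers. Because people and cars cover any given pixel only part of the time, the median across many frames tends to keep the static background, and subtracting it leaves mostly the moving parts.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// A grayscale frame stored row by row, one byte per pixel (assumed format).
using Frame = std::vector<std::uint8_t>;

// Per-pixel temporal median over many frames (upper median for even counts).
// If a pixel is covered by people or cars more than half of the time,
// the median will still contain them.
Frame medianBackground(const std::vector<Frame>& frames) {
    assert(!frames.empty());
    const std::size_t pixelCount = frames[0].size();
    Frame background(pixelCount);
    std::vector<std::uint8_t> samples(frames.size());
    for (std::size_t i = 0; i < pixelCount; ++i) {
        for (std::size_t k = 0; k < frames.size(); ++k) {
            assert(frames[k].size() == pixelCount);
            samples[k] = frames[k][i];
        }
        auto middle = samples.begin() + static_cast<std::ptrdiff_t>(samples.size() / 2);
        std::nth_element(samples.begin(), middle, samples.end());
        background[i] = *middle;
    }
    return background;
}

// Absolute difference from the background: bright where something moved.
Frame subtractBackground(const Frame& frame, const Frame& background) {
    assert(frame.size() == background.size());
    Frame difference(frame.size());
    for (std::size_t i = 0; i < frame.size(); ++i) {
        const int delta = static_cast<int>(frame[i]) - static_cast<int>(background[i]);
        difference[i] = static_cast<std::uint8_t>(std::abs(delta));
    }
    return difference;
}
```

On a crossing as crowded as Shibuya, frames sampled over a long period (for example across several light cycles) would likely give a cleaner background than consecutive frames.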

Did you try showing the optical flow over a black background?

I’d like to see the cars and people as waves or currents. They almost look like water now.

^+1

I want to understand more about the concept – what is this exploring?+

The pulse from one minute to the next (each beat) is neat, and the diurnal elements are nifty… but what is the health of the city over the course of a month? 3 years? 10 years? How long does this need to run for us to detect some otherwise hidden change in the city's ecosystem?

I like how you connect a physical, bodily process to an urban environment. +

When you say pulse, I connect it with sound. It would be interesting to turn these graphs into sound.
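One way to turn the pulse graph into sound is to map each value to the pitch of a short tone. The sketch below is hypothetical: `sonify`, the linear value-to-frequency mapping, and the plain sine oscillator are all illustrative choices, not part of the project. The result is a buffer of samples in [-1, 1] that could be written to an audio file or played through an audio output.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sonification: each pulse value becomes a short sine tone whose
// pitch grows linearly from minHz (lowest value) to maxHz (highest value).
std::vector<float> sonify(const std::vector<float>& pulse, float minHz, float maxHz,
                          float sampleRate, std::size_t samplesPerValue) {
    assert(sampleRate > 0.0f && minHz > 0.0f && maxHz >= minHz);
    std::vector<float> samples;
    if (pulse.empty()) return samples;

    // Range of the data, used to normalize each value to [0, 1].
    float lo = pulse[0];
    float hi = pulse[0];
    for (float v : pulse) {
        lo = std::min(lo, v);
        hi = std::max(hi, v);
    }

    const float twoPi = 6.28318530718f;
    float phase = 0.0f;  // kept continuous between tones to avoid clicks
    samples.reserve(pulse.size() * samplesPerValue);
    for (float v : pulse) {
        const float t = (hi > lo) ? (v - lo) / (hi - lo) : 0.0f;
        const float hz = minHz + t * (maxHz - minHz);
        for (std::size_t i = 0; i < samplesPerValue; ++i) {
            samples.push_back(std::sin(phase));
            phase = std::fmod(phase + twoPi * hz / sampleRate, twoPi);
        }
    }
    return samples;
}
```

Mapping the pulse to pitch is only one option; the same values could instead drive tempo or loudness, which may feel closer to a heartbeat.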