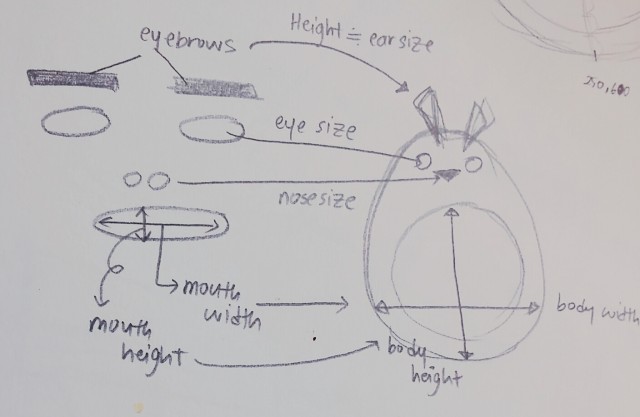

Ghost egg avatar clair

I wanted to create a simple avatar while learning how to work with FaceOSC. I also wanted to control how long each image stays on the screen, and one of my main goals was to exaggerate the movement of the mouth and eyebrows. The avatar's expressions don't range too widely; they mostly show varying intensities of anger. This was really fun to make.
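How long a mark lingers depends on the alpha of the translucent rectangle that the sketch paints over the canvas every frame (`fill(167,41,41,12)` at `frameRate(30)`). As a rough, idealized estimate, each overlay keeps a fraction `1 - alpha/255` of the old mark, so the fade is exponential. The small program below works that out; the class and method names are made up for illustration, and it ignores the 8-bit rounding that in practice can leave faint ghost trails on screen.

```java
public class TrailFade {
    // Fraction of an old mark's contrast left after `frames` overlays,
    // each drawn with the given alpha (assumed to be between 1 and 254).
    // Idealized model: ignores 8-bit rounding in the real frame buffer.
    static double remaining(int alpha, int frames) {
        double keep = 1.0 - alpha / 255.0;
        return Math.pow(keep, frames);
    }

    // Smallest number of frames after which the mark has dropped
    // to `threshold` (a fraction such as 0.05) or below.
    static int framesToFade(int alpha, double threshold) {
        double keep = 1.0 - alpha / 255.0;
        return (int) Math.ceil(Math.log(threshold) / Math.log(keep));
    }

    public static void main(String[] args) {
        int alpha = 12;     // alpha used by fill(167,41,41,12) in the sketch
        double fps = 30.0;  // frameRate(30) in the sketch
        int frames = framesToFade(alpha, 0.05);
        System.out.printf("alpha %d: about %d frames (%.1f s) to fade to 5%%%n",
            alpha, frames, frames / fps);
    }
}
```

Raising the alpha shortens the trail and lowering it makes the ghost linger longer, which is the knob to turn when tuning how long the image stays visible.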

//ghost egg gonna fuck you up!!!!!!

import oscP5.*;

OscP5 oscP5;

// num faces found

int found;

// pose

float poseScale;

PVector posePosition = new PVector();

PVector poseOrientation = new PVector();

// gesture

float mouthHeight;

float mouthWidth;

float eyeLeft;

float eyeRight;

float eyebrowLeft;

float eyebrowRight;

float jaw;

float nostrils;

void setup() {

// size() has to be the first call in setup()

size(640, 480);

frameRate(30);

background(167,41,41,12);

fill(0, 12);

rect(0, 0, width, height);

oscP5 = new OscP5(this, 8338);

oscP5.plug(this, "found", "/found");

oscP5.plug(this, "poseScale", "/pose/scale");

oscP5.plug(this, "posePosition", "/pose/position");

oscP5.plug(this, "poseOrientation", "/pose/orientation");

oscP5.plug(this, "mouthWidthReceived", "/gesture/mouth/width");

oscP5.plug(this, "mouthHeightReceived", "/gesture/mouth/height");

oscP5.plug(this, "eyeLeftReceived", "/gesture/eye/left");

oscP5.plug(this, "eyeRightReceived", "/gesture/eye/right");

oscP5.plug(this, "eyebrowLeftReceived", "/gesture/eyebrow/left");

oscP5.plug(this, "eyebrowRightReceived", "/gesture/eyebrow/right");

oscP5.plug(this, "jawReceived", "/gesture/jaw");

oscP5.plug(this, "nostrilsReceived", "/gesture/nostrils");

}

//ghost egg is gonna fuck you up

void draw() {

fill(167,41,41,12);

rect(0, 0, width*2, height*2);

stroke(255);

if(found > 0) {

translate(posePosition.x, posePosition.y);

scale(poseScale);

noFill();

ellipse(-20, eyeLeft * -9, 10, 8);

ellipse(20, eyeRight * -9, 10, 8);

ellipse(0, 10, mouthWidth* 1.5, mouthHeight * 5);

ellipse(-5,eyeLeft+-11,80,100);

line(-30,eyebrowLeft*-6,0,-40);

line(30,eyebrowRight*-6,0,-40);

rectMode(CENTER);

fill(0);

}

}

// OSC CALLBACK FUNCTIONS

public void found(int i) {

println("found: " + i);

found = i;

}

public void poseScale(float s) {

println("scale: " + s);

poseScale = s;

}

public void posePosition(float x, float y) {

println("pose position\tX: " + x + " Y: " + y);

posePosition.set(x, y, 0);

}

public void poseOrientation(float x, float y, float z) {

println("pose orientation\tX: " + x + " Y: " + y + " Z: " + z);

poseOrientation.set(x, y, z);

}

public void mouthWidthReceived(float w) {

println("mouth width: " + w);

mouthWidth = w;

}

public void mouthHeightReceived(float h) {

println("mouth height: " + h);

mouthHeight = h;

}

public void eyeLeftReceived(float f) {

println("eye left: " + f);

eyeLeft = f;

}

public void eyeRightReceived(float f) {

println("eye right: " + f);

eyeRight = f;

}

public void eyebrowLeftReceived(float f) {

println("eyebrow left: " + f);

eyebrowLeft = f;

}

public void eyebrowRightReceived(float f) {

println("eyebrow right: " + f);

eyebrowRight = f;

}

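public void jawReceived(float f) {

println("jaw: " + f);

jaw = f;

}

// setup() plugs "/gesture/nostrils" into nostrilsReceived, so the callback must exist

public void nostrilsReceived(float f) {

println("nostrils: " + f);

nostrils = f;

}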

// all other OSC messages end up here

void oscEvent(OscMessage m) {

/* print the address pattern and the typetag of the received OscMessage */

println("#received an osc message");

println("Complete message: " + m);

println(" addrpattern: " + m.addrPattern());

println(" typetag: " + m.typetag());

// guard against messages that carry no arguments

if (m.arguments().length > 0) {

println(" arguments: " + m.arguments()[0].toString());

}

if(m.isPlugged() == false) {

println("UNPLUGGED: " + m);

}

}
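The multipliers in `draw()` (for example `eyebrowLeft*-6` and `mouthHeight * 5`) scale the raw gesture values so small facial movements read as big ones. One alternative worth trying, sketched here as an assumption and not as what the sketch above does, is to exaggerate only the change away from a resting value, so the feature stays put when the face is neutral. The helper name `exaggerate` and the resting reading of 8.0 are hypothetical; real readings would have to be checked in the console output.

```java
public class Exaggerate {
    // Push `value` away from `rest` by a factor of `gain`.
    // gain = 1 leaves the value unchanged; larger gains amplify the movement.
    static double exaggerate(double value, double rest, double gain) {
        return rest + (value - rest) * gain;
    }

    public static void main(String[] args) {
        double rest = 8.0;  // hypothetical neutral eyebrow reading
        double gain = 3.0;  // hypothetical amplification factor
        double[] readings = {7.5, 8.0, 8.5, 9.0};
        for (double r : readings) {
            System.out.println(r + " -> " + exaggerate(r, rest, gain));
        }
    }
}
```

In the Processing sketch the same one-line formula could be applied to `eyebrowLeft`, `eyebrowRight`, or `mouthHeight` before they are passed to `line()` and `ellipse()`.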

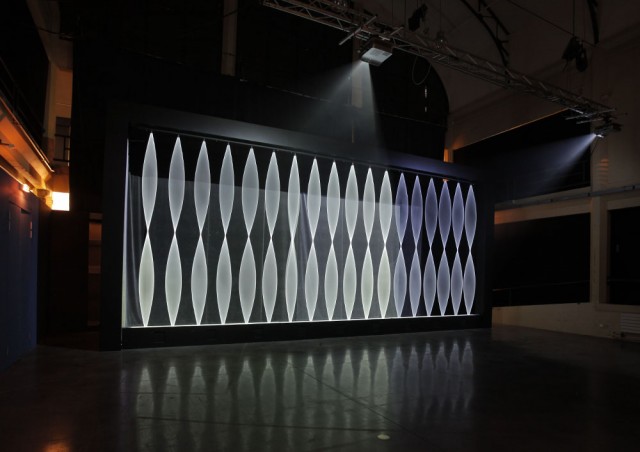

Tripwire by Jean-Michel Albert and Ashley Fure
