FaceOSC: The Orange Gobbler

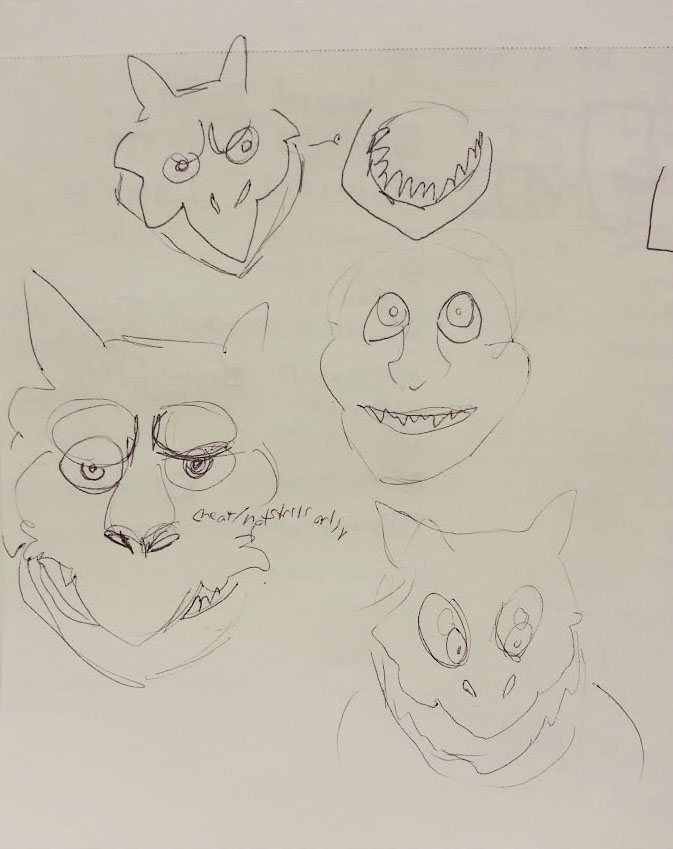

My goal was to create a creature with expressive eyes and an expressive mouth. I tracked the positions of the left eye, right eye, left eyebrow, right eyebrow, the nostrils, and the jaw. I decided to work with primitives so I could easily see which motions were changing which shapes.

The body was a bit of an afterthought, and it acts a bit like a slinky because each body shape is placed at a different multiple of the jaw position. If I were to revisit this project, I’d try to use some of the mouth parameters to make the creature more expressive. However, I’m undecided on whether or not to give “The Orange Gobbler” a set of teeth.
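To make the slinky effect concrete, here is a minimal plain-Java sketch of the arithmetic, separate from the Processing sketch itself. The multipliers 3, 5, 7, 9, and 11 are copied from the dark neck ellipses in the code below; the class name, the method names, and the jaw readings of 20 and 25 are made up for illustration and are not values reported by FaceOSC.

```java
import java.util.Arrays;

// Illustrative only: reproduces the y-offset arithmetic of the neck ellipses.
public class SlinkySketch {
    // Multipliers taken from the neck ellipses: jaw * 3, 5, 7, 9, 11.
    public static final float[] NECK_MULTIPLIERS = {3f, 5f, 7f, 9f, 11f};

    // Returns the y offset of each neck ellipse for a given jaw value.
    public static float[] neckOffsets(float jaw) {
        float[] ys = new float[NECK_MULTIPLIERS.length];
        for (int i = 0; i < ys.length; i++) {
            ys[i] = jaw * NECK_MULTIPLIERS[i];
        }
        return ys;
    }

    // Returns the gap between the first two neck ellipses.
    public static float spacing(float jaw) {
        float[] ys = neckOffsets(jaw);
        return ys[1] - ys[0];
    }

    public static void main(String[] args) {
        // Hypothetical jaw readings, chosen only to show the trend.
        for (float jaw : new float[] {20f, 25f}) {
            System.out.println("jaw " + jaw + " -> offsets "
                + Arrays.toString(neckOffsets(jaw))
                + ", spacing " + spacing(jaw));
        }
    }
}
```

Because neighboring multipliers differ by 2, the gap between neighboring ellipses is always twice the jaw value. Opening the jaw therefore moves the whole body down and also spreads the segments apart, which is what reads as a slinky.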

At one point, The Orange Gobbler did have highlights in its eyes, but for some reason the highlights made the Gobbler look a bit too unsettling.

//Miranda Jacoby

//EMS Interactivity Section A

//majacoby@andrew.cmu.edu

//Creature design is copyright Miranda Jacoby 2014

//Adapted from Golan Levin's "FaceOSCReceiver" program

// Don't forget to install OscP5 library for Processing,

// or nothing will work!

//

// A template for receiving face tracking osc messages from

// Kyle McDonald's FaceOSC https://github.com/kylemcdonald/ofxFaceTracker

import oscP5.*;

OscP5 oscP5;

// num faces found

int found;

// pose

float poseScale;

PVector posePosition = new PVector();

PVector poseOrientation = new PVector();

// gesture

float mouthHeight;

float mouthWidth;

float eyeLeft;

float eyeRight;

float eyebrowLeft;

float eyebrowRight;

float jaw;

float nostrils;

//dimensions

float nostrilHeight;

void setup() {

size(640, 480);

frameRate(30);

oscP5 = new OscP5(this, 8338);

oscP5.plug(this, "found", "/found");

oscP5.plug(this, "poseScale", "/pose/scale");

oscP5.plug(this, "posePosition", "/pose/position");

oscP5.plug(this, "poseOrientation", "/pose/orientation");

oscP5.plug(this, "mouthWidthReceived", "/gesture/mouth/width");

oscP5.plug(this, "mouthHeightReceived", "/gesture/mouth/height");

oscP5.plug(this, "eyeLeftReceived", "/gesture/eye/left");

oscP5.plug(this, "eyeRightReceived", "/gesture/eye/right");

oscP5.plug(this, "eyebrowLeftReceived", "/gesture/eyebrow/left");

oscP5.plug(this, "eyebrowRightReceived", "/gesture/eyebrow/right");

oscP5.plug(this, "jawReceived", "/gesture/jaw");

oscP5.plug(this, "nostrilsReceived", "/gesture/nostrils");

nostrilHeight = 7;

}

void draw() {

background(249, 245, 255);

stroke(0);

if(found > 0) {

translate(posePosition.x, posePosition.y);

scale(poseScale);

noStroke();

//Legs

fill(155, 84, 14);

pushMatrix();

rotate(6.25);

ellipse(20, jaw * 20, 125, 200);

popMatrix();

pushMatrix();

rotate(-6.25);

ellipse(-20, jaw * 20, 125, 200);

popMatrix();

//Neck and Body

fill(155, 84, 14);

ellipse(0, jaw * 3, 110, 180);

ellipse(0, jaw * 5, 120, 190);

ellipse(0, jaw * 7, 130, 200);

ellipse(0, jaw * 9, 140, 210);

ellipse(0, jaw * 11, 150, 220);

ellipse(0, jaw * 15, 275, 375);

fill(206, 119, 48);

ellipse(0, jaw * 3, 100, 150);

ellipse(0, jaw * 7, 100, 150);

ellipse(0, jaw * 11, 100, 150);

ellipse(0, jaw * 15, 200, 275);

//Arms

fill(155, 84, 14);

pushMatrix();

rotate(6);

ellipse(20, jaw * 15, 125, 200);

popMatrix();

pushMatrix();

rotate(-6);

ellipse(-20, jaw * 15, 125, 200);

popMatrix();

//Mouth Lower Jaw

fill(155, 84, 14);

ellipse(0, jaw * .5, 100, 100);

fill(58, 42, 72);

ellipse(0, jaw * .5, 73, 80);

//Mouth Lower Jaw Tip

fill(155, 84, 14);

ellipse(0, jaw * 2.25, 50, 30);

//Space Between the Eyes (CHECK FOR OVERLAP PROBLEMS)

fill(155, 84, 14);

ellipse(0, 10 * -2.7, 35, 20);

ellipse(-20, 10 * -2.7, 35, 20);

ellipse(20, 10 * -2.7, 35, 20);

ellipse(-20, 5 * -2.7, 35, 20);

ellipse(20, 5 * -2.7, 35, 20);

quad(-20, eyeLeft * -10, 20, eyeRight * -10, 5, (nostrils + nostrilHeight * .5) * 3, -5, (nostrils + nostrilHeight * .5) * 3);

//Horns

fill(206, 119, 48);

pushMatrix();

rotate(6);

ellipse(-30, -40, 35, 55);

popMatrix();

pushMatrix();

rotate(-6);

ellipse(30, -40, 35, 55);

popMatrix();

fill(155, 84, 14);

ellipse(-20, 0, 50, 30);

ellipse(20, 0, 50, 30);

fill(206, 119, 48);

ellipse(-40, 0, 50, 30);

ellipse(40, 0, 50, 30);

//Mouth Upper Jaw

fill(206, 119, 48);

pushMatrix();

rotate(-5);

ellipse(0, 30, 40, 25);

popMatrix();

pushMatrix();

rotate(5);

ellipse(0, 30, 40, 25);

popMatrix();

pushMatrix();

//rotate(-5);

ellipse(15, 20, 30, 40);

popMatrix();

pushMatrix();

//rotate(5);

ellipse(-15, 20, 30, 40);

popMatrix();

// ellipse(0, jaw * .5, 100, 100);

// ellipse(0, jaw * 2.5, 50, 30);

//Bridge of Nose

fill(155, 84, 14);

quad(-20, eyeLeft * -2, 20, eyeRight * -2, 5, (nostrils + nostrilHeight + (jaw * -2.5)/15) * 3, -5, (nostrils + nostrilHeight + (jaw * -2.5)/15) * 3);

fill(206, 119, 48);

quad(-20 + 27, eyeLeft * -5, 20 - 27, eyeRight * -5, -5, (nostrils + nostrilHeight + (jaw * -2.5)/15) * 3, 5, (nostrils + nostrilHeight + (jaw * -2.5)/15) * 3);

fill(240, 172, 104);

quad(-30 + 27, eyeLeft * -5, 30 - 27, eyeRight * -5, 5, (nostrils + nostrilHeight + (jaw * -2.5)/15) * 3, -5, (nostrils + nostrilHeight + (jaw * -2.5)/15) * 3);

//Eye Bags

//fill(206, 119, 48);

fill(58, 42, 72);

ellipse(-20, 0, 25, 15);

ellipse(20, 0, 25, 15);

//Eye Sclera

fill(245, 229, 175);

ellipse(-20, eyeLeft * -2, 25, 20);

ellipse(20, eyeRight * -2, 25, 20);

//Eye Iris

fill(84, 115, 134);

ellipse(-20, eyeLeft * -2, 15, 15);

ellipse(20, eyeRight * -2, 15, 15);

//Eye Pupil

fill(58, 42, 72);

ellipse(-20, eyeLeft * -2, 5, 5);

ellipse(20, eyeRight * -2, 5, 5);

//Eye Highlight

//fill(255, 253, 234);

//ellipse(-27, eyeLeft * -2.5, 4, 2);

//ellipse(13, eyeRight * -2.5, 4, 2);

//Mouth?

//ellipse(0, 20, mouthWidth* 3, mouthHeight * 3);

//Nostrils

fill(58, 42, 72);

ellipse(-5, nostrils * 3, 3, nostrilHeight);

ellipse(5, nostrils * 3, 3, nostrilHeight);

//Mouth Upper Jaw Tip

fill(206, 119, 48);

ellipse(0, 80 + jaw * -2.25, 20, 30);

fill(240, 172, 104);

ellipse(0, 70 + jaw * -2.25, 10, 15);

//ellipseMode(CENTER);

//Eyebrows

fill(155, 84, 14);

pushMatrix();

ellipse(-20, eyebrowLeft * -2.7, 35, 20);

popMatrix();

pushMatrix();

ellipse(20, eyebrowRight * -2.7, 35, 20);

popMatrix();

fill(206, 119, 48);

pushMatrix();

ellipse(-20, eyebrowLeft * -3, 35, 20);

popMatrix();

pushMatrix();

ellipse(20, eyebrowRight * -3, 35, 20);

popMatrix();

}

}

// OSC CALLBACK FUNCTIONS

public void found(int i) {

println("found: " + i);

found = i;

}

public void poseScale(float s) {

println("scale: " + s);

poseScale = s;

}

public void posePosition(float x, float y) {

//println("pose position\tX: " + x + " Y: " + y );

posePosition.set(x, y, 0);

}

public void poseOrientation(float x, float y, float z) {

//println("pose orientation\tX: " + x + " Y: " + y + " Z: " + z);

poseOrientation.set(x, y, z);

}

public void mouthWidthReceived(float w) {

//println("mouth Width: " + w);

mouthWidth = w;

}

public void mouthHeightReceived(float h) {

//println("mouth height: " + h);

mouthHeight = h;

}

public void eyeLeftReceived(float f) {

//println("eye left: " + f);

eyeLeft = f;

}

public void eyeRightReceived(float f) {

//println("eye right: " + f);

eyeRight = f;

}

public void eyebrowLeftReceived(float f) {

//println("eyebrow left: " + f);

eyebrowLeft = f;

}

public void eyebrowRightReceived(float f) {

//println("eyebrow right: " + f);

eyebrowRight = f;

}

public void jawReceived(float f) {

println("jaw: " + f);

jaw = f;

}

public void nostrilsReceived(float f) {

//println("nostrils: " + f);

nostrils = f;

}

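// oscEvent receives incoming OSC messages; isPlugged() reports whether a message was already forwarded to one of the plugged methods above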

void oscEvent(OscMessage m) {

/* print the address pattern and the typetag of the received OscMessage */

println("#received an osc message");

println("Complete message: "+m);

println(" addrpattern: "+m.addrPattern());

println(" typetag: "+m.typetag());

if (m.arguments().length > 0) println(" arguments: "+m.arguments()[0].toString()); // guard: a message may carry no arguments

if(m.isPlugged() == false) {

println("UNPLUGGED: " + m);

}

}