FaceTracker Banana

import oscP5.*;

OscP5 oscP5;

// banana images
PImage topb;
PImage botb;
PImage midb;
PImage nakbot;

int found;

// pose
float poseScale;
PVector posePosition = new PVector();
PVector poseOrientation = new PVector();

// gesture
float mouthHeight;
float mouthWidth;
float eyeLeft;
float eyeRight;
float eyebrowLeft;
float eyebrowRight;
float jaw;
float nostrils;

float x = 0;
float prevmouth1;
float check;

void setup(){
  size(800, 800);

  topb = loadImage("topbanana.png"); // height: 567, width: 650
  botb = loadImage("bottombanana.png");
  midb = loadImage("middlebanana.png");
  nakbot = loadImage("nakedbanana.png");

  check = 0;
  frameRate(30);

  // Listen for FaceOSC messages on port 8338 and route each address to the method of the same name below.
  oscP5 = new OscP5(this, 8338);
  oscP5.plug(this, "found", "/found");
  oscP5.plug(this, "poseScale", "/pose/scale");
  oscP5.plug(this, "posePosition", "/pose/position");
  oscP5.plug(this, "poseOrientation", "/pose/orientation");
  oscP5.plug(this, "mouthWidthReceived", "/gesture/mouth/width");
  oscP5.plug(this, "mouthHeightReceived", "/gesture/mouth/height");
  oscP5.plug(this, "eyeLeftReceived", "/gesture/eye/left");
  oscP5.plug(this, "eyeRightReceived", "/gesture/eye/right");
  oscP5.plug(this, "eyebrowLeftReceived", "/gesture/eyebrow/left");
  oscP5.plug(this, "eyebrowRightReceived", "/gesture/eyebrow/right");
  oscP5.plug(this, "jawReceived", "/gesture/jaw");
  oscP5.plug(this, "nostrilsReceived", "/gesture/nostrils");
}

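void draw(){
  background(255);

  // if(prevmouth1!=mouthWidth){
  // check= mouthWidth;

  // If the jaw is below a certain value (i.e., the mouth is closed), close the banana.
  // A local copy is used so the value received from FaceOSC is not overwritten here.
  float opening = jaw;
  if (opening < 21.5) {
    opening = 0;
  }

  // }
  // prevmouth1= mouthWidth;

  //translate(posePosition.x, posePosition.y);
  //scale(poseScale);
  //noFill();

  // Draw the peeled banana first, then the three peel pieces on top of it.
  // As the jaw opens, the top peel moves left and the bottom and middle peels move right.
  image(nakbot, opening + 152, nostrils + 155);
  image(topb, opening * -5.5 + 129, nostrils + 32);
  image(botb, opening * 5.5 + 125, nostrils + 145);
  image(midb, opening * 5.5 + 127, nostrils + 45);
}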

public void found(int i) {
  //println("found: " + i);
  found = i;
}

public void poseScale(float s) {
  //println("scale: " + s);
  poseScale = s;
}

public void posePosition(float x, float y) {
  //println("pose position\tX: " + x + " Y: " + y );
  posePosition.set(x, y, 0);
}

public void poseOrientation(float x, float y, float z) {
  //println("pose orientation\tX: " + x + " Y: " + y + " Z: " + z);
  poseOrientation.set(x, y, z);
}

public void mouthWidthReceived(float w) {
  //println("mouth Width: " + w);
  mouthWidth = w;
}

public void mouthHeightReceived(float h) {
  //println("mouth height: " + h);
  mouthHeight = h;
}

public void eyeLeftReceived(float f) {
  //println("eye left: " + f);
  eyeLeft = f;
}

public void eyeRightReceived(float f) {
  //println("eye right: " + f);
  eyeRight = f;
}

public void eyebrowLeftReceived(float f) {
  //println("eyebrow left: " + f);
  eyebrowLeft = f;
}

public void eyebrowRightReceived(float f) {
  //println("eyebrow right: " + f);
  eyebrowRight = f;
}

public void jawReceived(float f) {
  println("jaw: " + f);
  jaw = f;
}

public void nostrilsReceived(float f) {
  //println("nostrils: " + f);
  nostrils = f;
}

// all other OSC messages end up here
void oscEvent(OscMessage m) {
  if (!m.isPlugged()) {
    println("UNPLUGGED: " + m);
  }
}
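To see what the `draw()` arithmetic does, the plain-Java sketch below reproduces the 21.5 jaw threshold and the x-offsets from the four `image()` calls. It is written without Processing so it runs on its own; `BananaLayout` and its methods are hypothetical names, and the class only computes coordinates, it draws nothing.

```java
// Hypothetical helper that mirrors the x-coordinate arithmetic in draw().
// Plain Java, no Processing dependency.
class BananaLayout {
    // Jaw values below this count as a closed mouth (same threshold as draw()).
    static final float CLOSED_THRESHOLD = 21.5f;

    // Jaw value used for layout: 0 when the mouth counts as closed.
    static float opening(float jaw) {
        return jaw < CLOSED_THRESHOLD ? 0f : jaw;
    }

    // x-positions of the four images, using the same constants as draw().
    static float nakedX(float jaw)  { return opening(jaw) + 152f; }
    static float topX(float jaw)    { return opening(jaw) * -5.5f + 129f; }
    static float bottomX(float jaw) { return opening(jaw) * 5.5f + 125f; }
    static float middleX(float jaw) { return opening(jaw) * 5.5f + 127f; }

    public static void main(String[] args) {
        // Print the layout for a closed mouth and two open ones.
        for (float jaw : new float[] {20f, 22f, 24f}) {
            System.out.printf("jaw=%.1f top=%.2f bottom=%.2f middle=%.2f naked=%.2f%n",
                    jaw, topX(jaw), bottomX(jaw), middleX(jaw), nakedX(jaw));
        }
    }
}
```

One consequence shows up in the numbers: at a jaw value of 20 the top peel sits at x = 129, but as soon as the value reaches 21.5 it jumps to x = 10.75, because the offset scales with the whole jaw value rather than with how far it exceeds the threshold. Using `(jaw - 21.5) * 5.5` instead would open the peel gradually; that is a suggested variation, not what the original sketch does.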

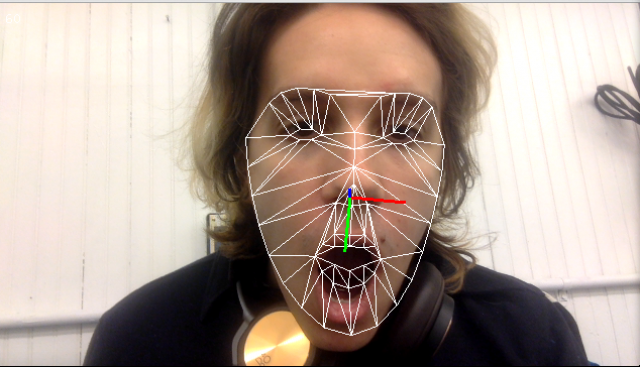

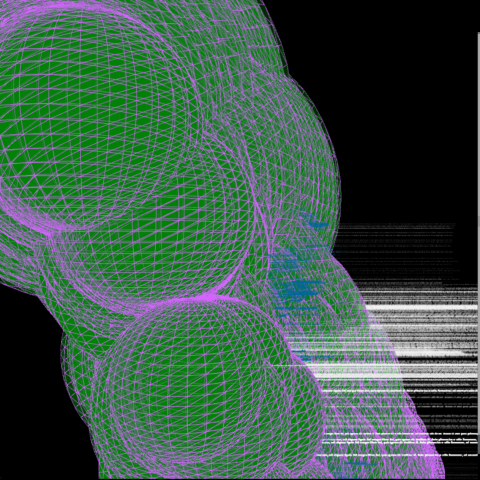

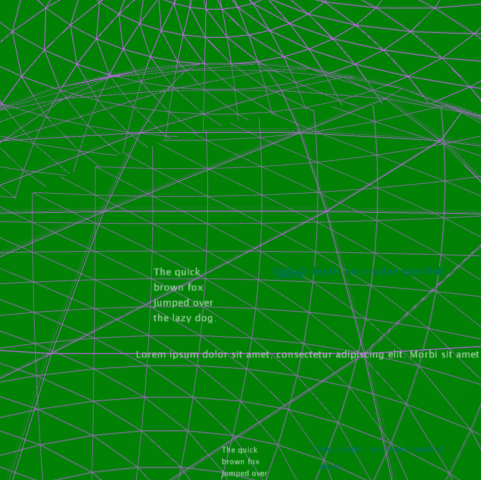

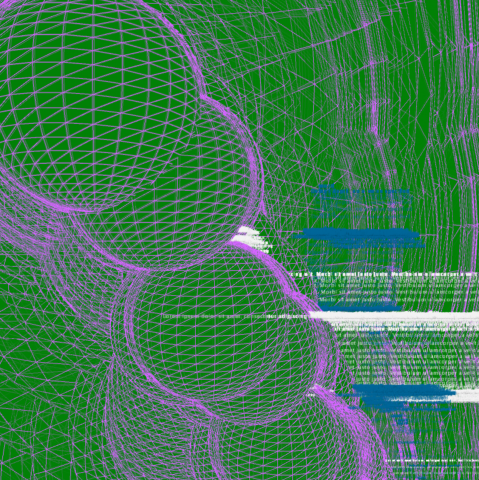

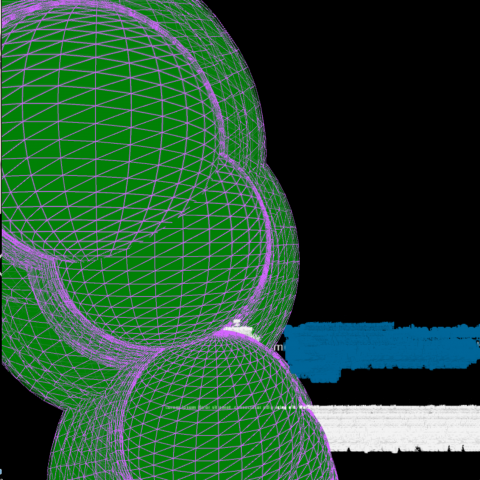

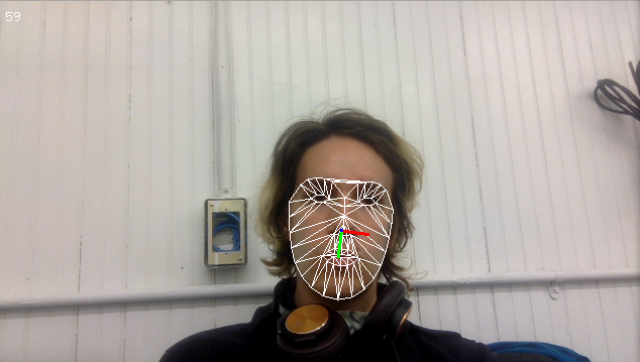

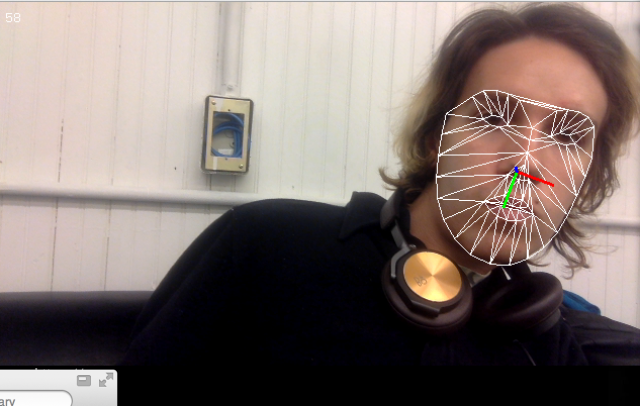

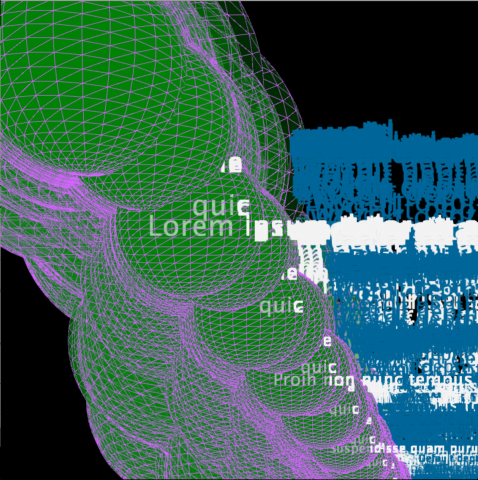

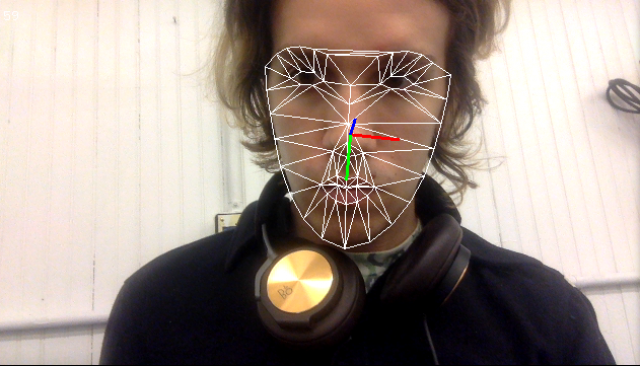

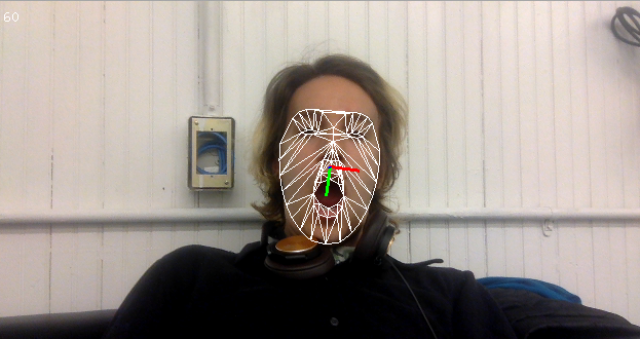

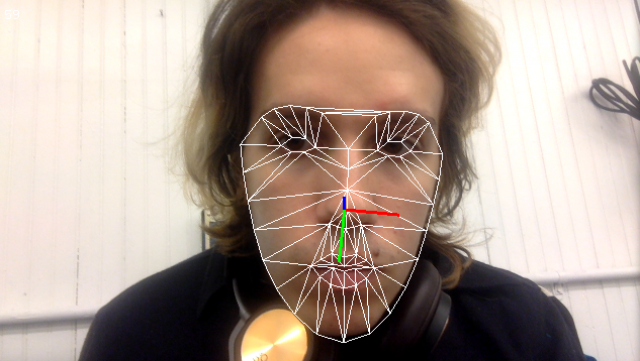

My piece, explained: fruit. I’ve been working with, and eating, more fruit than ever before in my life, and through this experience I’ve come to understand how frustrating it is to peel fruit. My piece is built around that idea. The frustration I feel when peeling fruit, my constant frowning and creased brows, is reflected in the piece: to peel the fruit depicted, the user must frown, a lot. In the future I would like to use the movement of the link and the eyebrows to track how much of the fruit to peel, and the blinking of the eyes to switch to the next fruit.
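The planned controls (blink to switch fruit, eyebrows to set how much to peel) could be sketched roughly as below. This is plain Java and entirely hypothetical: `FruitControls`, its methods, and every threshold are assumptions rather than measured FaceOSC values, and it assumes that frowning lowers the `/gesture/eyebrow` values, which would need checking against real output.

```java
// Hypothetical controls for the planned version; all thresholds are assumptions.
class FruitControls {
    // Assumed: an eye value below this means the eye is closed (calibrate per face).
    static final float EYE_CLOSED = 2.5f;
    // Assumed eyebrow values at rest and fully lowered in a frown (calibrate per face).
    static final float BROW_REST = 8.0f;
    static final float BROW_FROWN = 7.0f;

    private final String[] fruits;
    private int current = 0;
    private boolean eyesWereClosed = false;

    FruitControls(String... fruits) {
        this.fruits = fruits;
    }

    // Call once per frame with the latest eye values. The fruit advances only on the
    // frame the eyes go from open to closed, so one blink switches exactly one fruit.
    void update(float eyeLeft, float eyeRight) {
        boolean closed = eyeLeft < EYE_CLOSED && eyeRight < EYE_CLOSED;
        if (closed && !eyesWereClosed) {
            current = (current + 1) % fruits.length;
        }
        eyesWereClosed = closed;
    }

    String currentFruit() {
        return fruits[current];
    }

    // Linearly maps eyebrow height to a peel fraction, clamped to [0, 1]:
    // 0 with brows at rest, 1 with brows fully lowered.
    static float peelFraction(float eyebrow) {
        float t = (BROW_REST - eyebrow) / (BROW_REST - BROW_FROWN);
        return Math.max(0f, Math.min(1f, t));
    }

    public static void main(String[] args) {
        FruitControls controls = new FruitControls("banana", "orange", "apple");
        controls.update(3f, 3f); // eyes open: still banana
        controls.update(1f, 1f); // blink starts: switch to orange
        controls.update(1f, 1f); // eyes still closed: stay on orange
        System.out.println(controls.currentFruit());
        System.out.println(peelFraction(7.5f));
    }
}
```

In the Processing sketch, one option would be to call `update(eyeLeft, eyeRight)` at the top of `draw()` and scale the peel offsets by `peelFraction(eyebrowLeft)`; that wiring is also hypothetical. Reacting to the open-to-closed transition, rather than to `closed` alone, keeps a blink that lasts several frames from skipping through several fruits.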

*Edited: The video now works. I just had to fix some coding errors and use someone else’s face. FaceOSC likes well-lit rooms, and people, apparently.

*My program isn’t working. For one thing, FaceOSC can’t seem to track my face for more than two seconds. Also, the image I loaded doesn’t change at all, no matter how you move your face. So, with that in mind, here’s a picture of a banana that should have been peeled. I will note that trying to peel a banana in real life is almost as frustrating as trying to program a banana to peel. Almost.