This is a music visualizer that simulates the starry night sky.

A music-loving friend of mine once told me he missed seeing the stars at night after coming to Pittsburgh. This project started as an idea for a present for that friend. I liked the idea of a portable, personal set of stars that could be charmed to life by playing music. The stars react to new notes being played, and the aurora appears depending on the volume of the music and on how long the music has been playing continuously. (This may not be very obvious at the beginning of the video because I wasn’t playing the notes hard enough. Also, pardon my rustiness on piano – I haven’t really played in 2 years.)
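The aurora behavior described above can be sketched as a small state machine. This is only an illustration, not the code from this project: the struct, the function name, and every threshold below are hypothetical, and it assumes the aurora needs both a loud enough moment and a long enough run of uninterrupted music.

```cpp
#include <stdint.h>

// Hypothetical tuning values; the real thresholds are not given in this post.
const uint16_t LOUD_LEVEL = 40;   // volume a reading must reach to count as "loud"
const uint16_t QUIET_LEVEL = 8;   // readings below this count as silence
const uint32_t SUSTAIN_MS = 3000; // how long music must continue before the aurora appears
const uint32_t GAP_MS = 500;      // silence tolerated before the music counts as stopped

struct AuroraState {
  uint32_t musicStartMs; // when the current run of music began
  uint32_t lastSoundMs;  // last time a non-silent reading was seen
  bool playing;          // true while a run of music is in progress
  bool heardLoud;        // true once the current run contained a loud reading
};

// Feed one volume reading with its timestamp (millis() on an Arduino).
// Returns true while the aurora should be lit.
bool updateAurora(AuroraState &s, uint16_t level, uint32_t nowMs) {
  if (level >= QUIET_LEVEL) {
    if (!s.playing) {
      // A new run of music starts here.
      s.playing = true;
      s.musicStartMs = nowMs;
      s.heardLoud = false;
    }
    s.lastSoundMs = nowMs;
    if (level >= LOUD_LEVEL) s.heardLoud = true;
  } else if (s.playing && nowMs - s.lastSoundMs > GAP_MS) {
    // Silence has lasted too long, so the run is over.
    s.playing = false;
  }
  return s.playing && s.heardLoud && (nowMs - s.musicStartMs >= SUSTAIN_MS);
}
```

The short `GAP_MS` tolerance is there so that a brief rest between phrases does not reset the timer.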

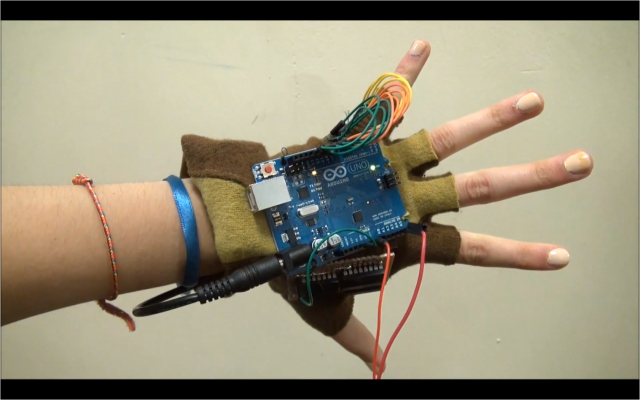

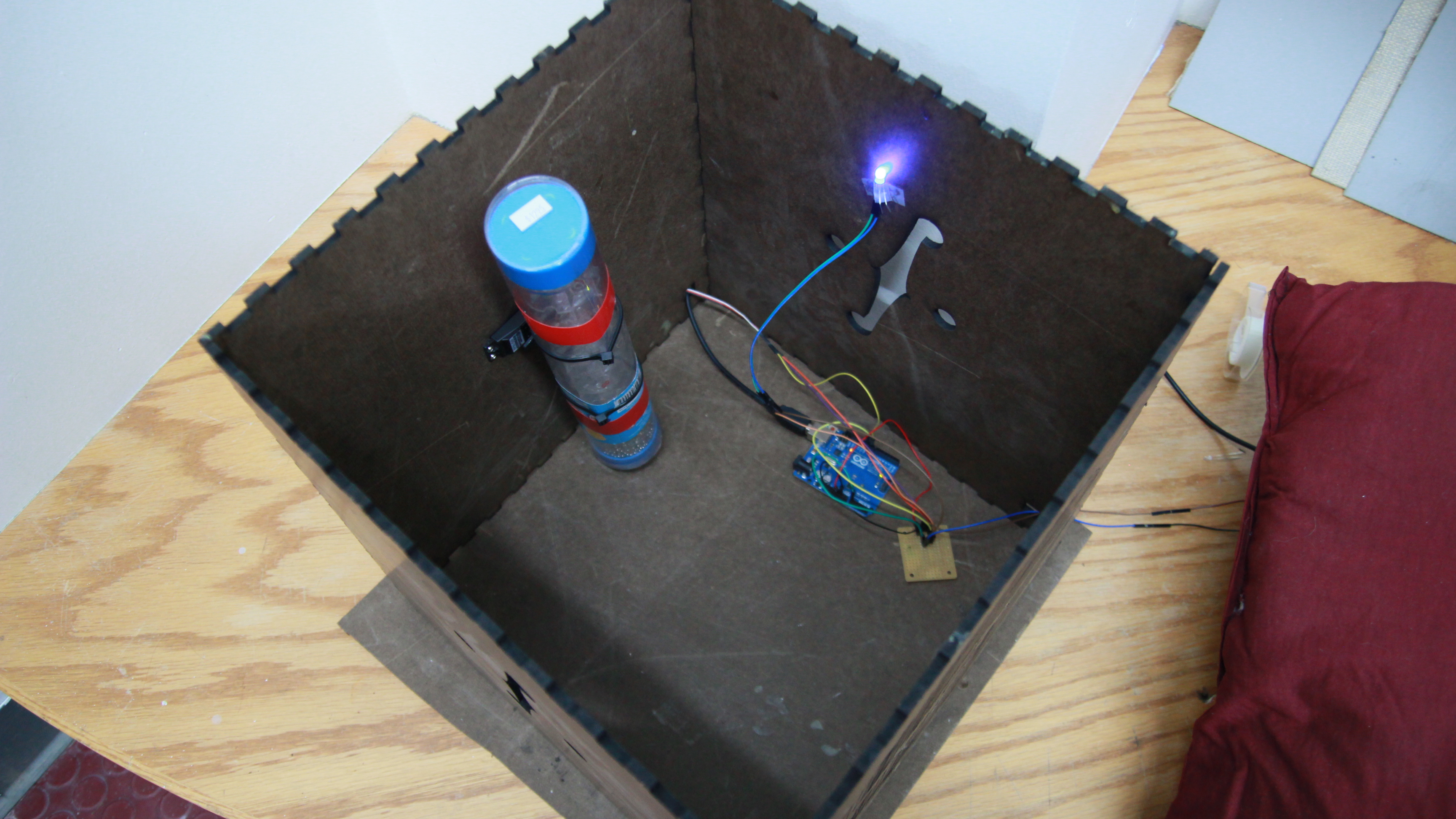

The end product uses an Arduino Mega 2560, with an Electret Mic Amplifier for sound input, and loads of LEDs for display. Frequency analysis utilizes code from Adafruit’s Piccolo (https://github.com/adafruit/piccolo), which uses Elm-Chan’s FFT (Fast Fourier Transform) library.

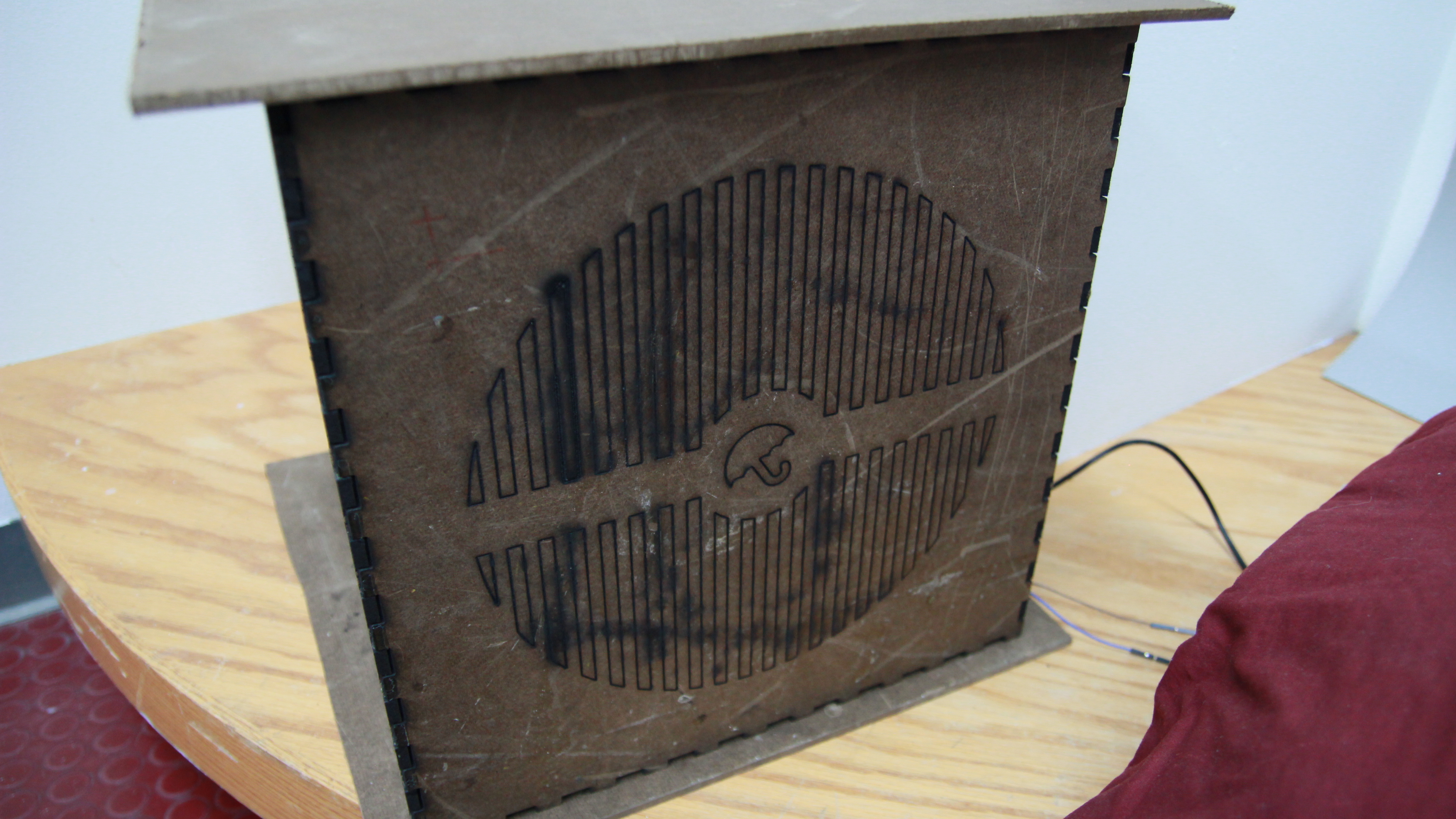

The creation of this project was a long and arduous process for me. My initial idea was to have a box filled with blue origami stars (https://fc04.deviantart.net/fs25/f/2008/072/f/e/Straw_Stars_by_Miraka.jpg), with white LEDs hidden inside white origami stars scattered around in the box. However, I quickly ran out of material for making the blue origami stars, so I replaced them with black cardstock and tissue paper. The finished stars still adhere to my original idea in terms of visuals and functionality. The end product still has the white LEDs hidden inside white origami stars; you just can’t tell clearly because they are now covered by black tissue paper. The white origami stars make the light of the white LEDs spread a little bit, and if you look carefully, the spread is in the shape of 5-pointed stars. I also wanted more white LED stars, but was limited by the number of PWM pins on the board (and later, by space for the wires).
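To make the PWM limit concrete: the Mega 2560 has 15 PWM-capable pins (2 to 13 and 44 to 46), and each dimmable star needs one of its own. Below is a minimal sketch of a flare-and-fade star driver under that constraint. It is not the code from this project; the names and the fade step are hypothetical, and it assumes every PWM pin drives a star, whereas how the pins were actually divided between stars and aurora LEDs is not stated here.

```cpp
#include <stdint.h>

// Assumption for illustration: all 15 PWM pins of the Mega 2560 drive stars.
const uint8_t STAR_COUNT = 15;
const uint8_t STAR_PINS[STAR_COUNT] = {2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 44, 45, 46};

// Hypothetical amount by which each star dims per update.
const uint8_t FADE_STEP = 5;

// Current PWM duty (0 = off, 255 = full brightness) for each star.
uint8_t brightness[STAR_COUNT];

// Flash one star to full brightness, e.g. when a new note is detected.
void flareStar(uint8_t star) {
  if (star < STAR_COUNT) brightness[star] = 255;
}

// Dim every star by one step, stopping at 0.
void fadeStars() {
  for (uint8_t i = 0; i < STAR_COUNT; i++) {
    brightness[i] = (brightness[i] > FADE_STEP)
                        ? (uint8_t)(brightness[i] - FADE_STEP)
                        : 0;
    // On the board, this is where the value would be written out:
    // analogWrite(STAR_PINS[i], brightness[i]);
  }
}
```

Calling `fadeStars()` once per loop pass and `flareStar()` on each detected note gives stars that flash and then dim smoothly, which is only possible on pins that support `analogWrite` dimming.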

I also wanted to actually learn how to use the FFT library to implement more accurate frequency measurement, for picking out very roughly which notes are being played. It turned out that this is actually quite difficult due to harmonics, and the library was hard to understand, partly due to poor documentation, so I ended up working with code from Adafruit for frequency analysis. I did a lot of testing to make it better suited to piano music. After getting the stars to work the way I wanted them to, I reflected on how I could make the piece more interesting and visually appealing. The easy answer was “colors”, so I tried to implement something that looks similar to auroras. The source of the auroras is a number of RGB LEDs. The ideal way to do this would be to use an LED strip (like this one https://www.adafruit.com/products/306), but since this was late into the project, I didn’t have time to get one.
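The harmonics problem mentioned above can be illustrated with a naive note picker. This is a sketch, not the approach used in this project or in Piccolo: the function names are hypothetical, and the capture settings (a 128-sample FFT at roughly 9600 Hz) are assumptions that are not stated in this post.

```cpp
#include <math.h>
#include <stdint.h>

// Assumed capture settings: 128 samples at about 9600 Hz,
// which makes each FFT bin 75 Hz wide.
const float SAMPLE_RATE_HZ = 9600.0f;
const int FFT_SIZE = 128;

// Center frequency in Hz of FFT bin k.
float binFrequency(int k) {
  return k * SAMPLE_RATE_HZ / FFT_SIZE;
}

// Nearest MIDI note number for a frequency
// (A4 = 440 Hz = note 69, 12 notes per octave).
int nearestMidiNote(float hz) {
  return (int)lround(69.0 + 12.0 * log(hz / 440.0) / log(2.0));
}

// Index of the loudest bin, skipping bin 0 (the DC offset).
int strongestBin(const uint8_t *spectrum, int binCount) {
  int best = 1;
  for (int k = 2; k < binCount; k++) {
    if (spectrum[k] > spectrum[best]) best = k;
  }
  return best;
}
```

Take a made-up spectrum such as `{0, 2, 9, 30, 12, 3, 60, 8}`: the fundamental sits in bin 3 (225 Hz, nearest to A3, note 57), but the second harmonic in bin 6 (450 Hz) is louder, so `strongestBin` picks bin 6 and the note comes out an octave too high as A4 (note 69). With these assumed settings the bins are also 75 Hz apart, while neighboring piano keys around A3 are only about 13 Hz apart, so low notes cannot be told apart by bin index alone.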

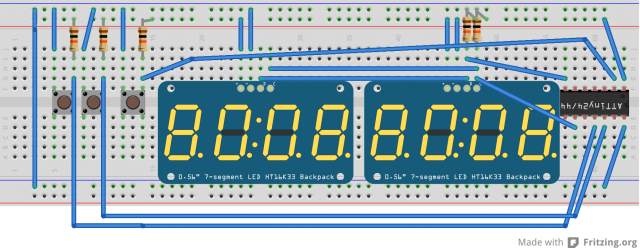

Physically putting this together was also very hard and time-consuming. I had a lot of trouble getting the connections for all the LEDs to work. I had to basically tear my project apart several times because the conductive copper tape wasn’t effective for LEDs, or wires broke, or solder wasn’t strong enough, etc. In the end my breadboard had almost every single slot filled. Then more things fell apart as I was trying to get everything to fit inside a small box. I didn’t realize all those wires would take up so much space.

Weird but useful tidbits I’ve learned about Arduino:

– mismatched variable types won’t necessarily raise an error while compiling, but can cause weird behavior when the program runs

– an error while uploading a program to the Mega board can sometimes be fixed by unplugging a few pins

In the end, I was fairly satisfied with the final product. The stars worked almost as well as I hoped they would. I just wish I had been able to show off more of the craftsmanship that went into this project. If I work up enough energy, I’d replace the RGB LEDs with an RGB strip. It would be difficult though, because I’d literally have to tear apart my project again, both physically and in the code. I enjoy watching it while someone else is playing the piano. Too bad I can’t really watch it while playing at the same time, since I have to watch the keyboard, haha.

[I just realized I accidentally named this the same as that famous van Gogh piece. Ugh. Need better naming skills.]

Code, if you’re interested. It’s messy and long and uncommented:

/* Starry Night -
   a music visualizer that simulates the starry night sky.
   Parts of the code are written by Adafruit Industries. Distributed under the BSD license.
   See https://github.com/adafruit/piccolo for original source.
   Additional code written by Jun Huo.
*/

#include

#include

#include
