I am kicking around an idea of either analyzing or generating cute characters.

http://pinktentacle.com/2010/08/99-cute-trademarked-characters-from-japan/ from http://www3.ipdl.inpit.go.jp/cgi-bin/TF/sft3.cgi

Art from Summer Wars

This is an interesting anti-pattern for my transit visualization. It’s a somewhat arbitrary mapping between sitemap information and a London-Tube-style map.

How transit-oriented is the Portland region? The mapping here is pretty straightforward (transit-friendliness to height) but compelling.

Cool project out of Columbia’s graduate school of architecture. It maps the homes of people in New York prisons on a block-by-block basis. I want my project to have this sort of granularity.

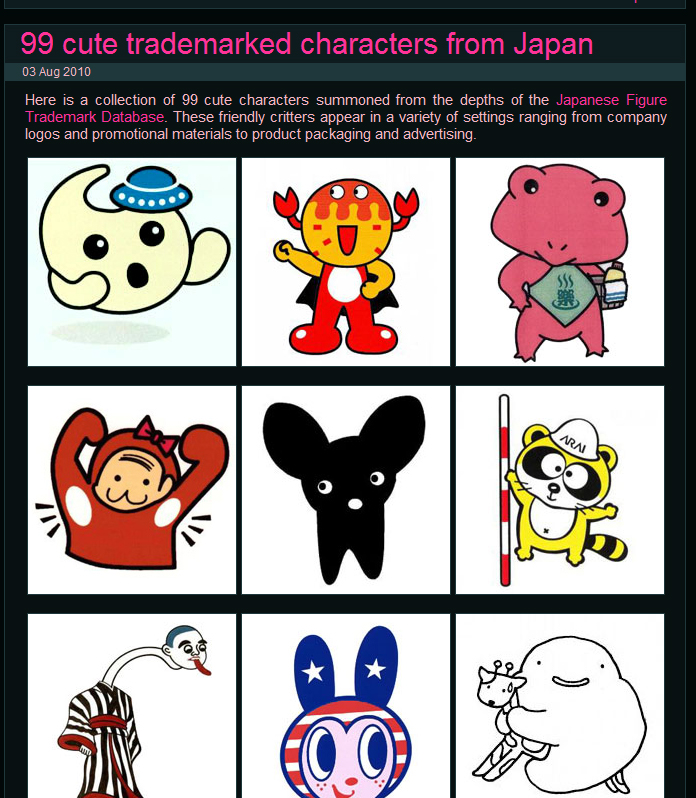

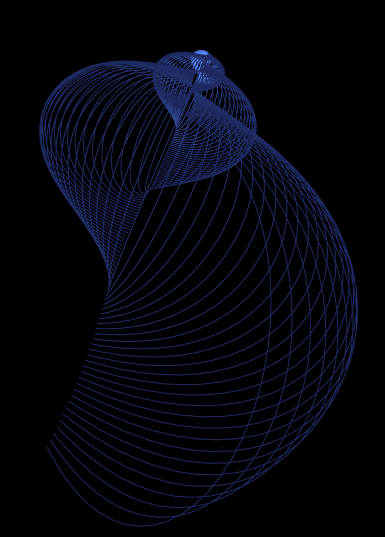

In this project, I explored the mathematical equations that describe seashells. After reading Hans Meinhardt’s excellent book The Algorithmic Beauty of Sea Shells, I discovered that relatively simple equations can be used to describe almost every natural shell.

$r = a e^{b\theta}$

By sweeping a generating curve (usually a semicircle) along this helicospiral, a surface can be generated that describes almost any natural shell.
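As a rough illustration of the idea (not the Cinder code used for this project), here is a minimal Python sketch that places semicircular rings along a helicospiral. The parameter names `pitch` and `tube`, their default values, and the choice to scale the ring radius with the spiral radius are all my own assumptions for the sake of the example.

```python
import math

def helicospiral_point(theta, a=1.0, b=0.15, pitch=2.0):
    """Point on a helicospiral: r = a * e^(b * theta) in the xy-plane,
    with the height dropping in proportion to r (pitch is an assumed parameter)."""
    r = a * math.exp(b * theta)
    return (r * math.cos(theta), r * math.sin(theta), -pitch * r)

def shell_rings(turns=4, steps_per_turn=24, ring_points=12,
                a=1.0, b=0.15, pitch=2.0, tube=0.6):
    """Sweep a semicircle along the helicospiral.

    Returns a list of rings; each ring is a list of (x, y, z) vertices.
    Consecutive rings could be stitched into quads or triangle strips."""
    rings = []
    total_steps = turns * steps_per_turn
    for i in range(total_steps + 1):
        theta = 2.0 * math.pi * i / steps_per_turn
        cx, cy, cz = helicospiral_point(theta, a, b, pitch)
        # The generating curve grows with the spiral so the shell stays self-similar.
        radius = tube * a * math.exp(b * theta)
        ring = []
        for j in range(ring_points):
            phi = math.pi * j / (ring_points - 1)   # 0..pi gives a semicircle
            out = radius * math.cos(phi)            # offset along the radial direction
            up = radius * math.sin(phi)             # offset along the z axis
            ring.append((cx + out * math.cos(theta),
                         cy + out * math.sin(theta),
                         cz + up))
        rings.append(ring)
    return rings
```

Changing `b` controls how quickly the shell flares, and `pitch` controls how tall the spire is, which is one way to wander into the plausible-but-unnatural forms.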

I used Cinder to generate a polygon mesh approximating the curve. This gave me an opportunity to experiment with OpenGL vertex buffer objects and Cinder’s VBOMesh class.

At this point, I assumed getting lighting and texturing working would be relatively simple. However, it turns out that knowing how OpenGL works is quite different from knowing how to make something with it. The final results were quite humbling:

Perhaps the most interesting thing about the model is its ability to generate plausible (though unnatural) forms. Many of the forms created have no analog in nature, but seem like they could.

In future iterations of this project, I plan to conquer proper OpenGL lighting and possibly more advanced techniques such as ambient occlusion and shadow mapping. In addition, I would like to texture the shells with reaction-diffusion patterns, which were the original focus of my project.

I’m still tossing around ideas for my final project, but I’d like to do more experimentation with the Kinect. Specifically, I think it’d be fun to do some high-quality background subtraction and separate the user from the rest of the scene. I’d like to create a hack in which the user’s body is distorted by a fun house mirror, while the background in the scene remains entirely unaffected. Other tricks, such as pixelating the user’s body or blurring it while keeping everything else intact, could also be fun. The basic idea seems manageable, and I think I’d have some time left over to polish it and add a number of features. I’d like to draw on the auto calibration code I wrote for my previous Kinect hack so that it’s easy to walk up and interact with the “circus mirror.”

I’ve been searching for about an hour, and it doesn’t look like anyone has done selective distortion of the RGB camera image off the Kinect. I’m thinking something like this:

Imagine how much fun those Koreans would be having if the entire scene looked normal except for their stretched friend. It’s crazy mirror 2.0.

I think background subtraction (and then subsequent filling) would be important for this sort of hack, and it looks like progress has been made to do this in OpenFrameworks. The video below shows someone cutting themselves out of the Kinect depth image and then hiding everything else in the scene.
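To make the idea concrete, here is a minimal Python sketch of depth-based segmentation followed by compositing. It assumes the depth image and the color image are already aligned pixel for pixel, that depth values are in millimetres, and that the user stands within a known `near`/`far` band; the function names and thresholds are hypothetical, not taken from any Kinect library.

```python
def user_mask(depth, near, far):
    """Mark pixels whose depth lies in [near, far] as belonging to the user.

    depth is a 2D list of depth samples (assumed to be millimetres)."""
    return [[near <= d <= far for d in row] for row in depth]

def composite(original, distorted, mask):
    """Use the distorted pixel where the mask is True, the original elsewhere.

    This keeps the background untouched while only the user is altered."""
    return [
        [dist_px if m else orig_px
         for orig_px, dist_px, m in zip(orig_row, dist_row, mask_row)]
        for orig_row, dist_row, mask_row in zip(original, distorted, mask)
    ]
```

A real version would also need to fill the holes left behind when the stretched body no longer covers its original silhouette, which is where the "subsequent filling" comes in.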

To achieve the distortion of the user’s body, I’m hoping to do some low-level work in OpenGL. I’ve done some research in this area and it looks like using a framebuffer and some bump mapping might be a good approach. This article suggests using the camera image as a texture and then mapping it onto a bump mapped “mirror” plane:

Circus mirror and lens effects. Using a texture surface as the rendering target, render a scene (or a subset thereof) from the point of view of a mirror in your scene. Then use this rendered scene as the mirror’s texture, and use bump mapping to perturb the reflection/refraction according to the values in your bump map. This way the mirror could be bent and warped, like a funhouse mirror, to distort the view of the scene.

At any rate, we’ll see how it goes! I’d love to get some feedback on the idea. It seems like something I could get going pretty quickly, so I’m definitely looking for possible extensions / features that might make it more interesting!

A few things I’m thinking about, mainly about sound.

The last inspiration that I had was the project where the artist tracks the movement of grass blades blown by the wind and produces sound. I’m thinking of creating a purely sound-based project, a soundscape that users can wander into and interact with. The idea is that the user is wading through a field of grass, and as the user pushes the grass blades around, they collide and create a sound. It would be a purely sound-based project with no visuals.

For my final project, I’m currently thinking I want to adapt the dynamic landscape with Kinect I made with Paul Miller for the 2nd project, and probably create some sort of game on top of it.

Recompose is a Kinect project developed by the MIT Media Lab. It uses the depth camera, mounted above a table, to do gesture recognition on the user’s hands in order to control a pin-based surface on the table. I think this is interesting because it’s almost a reversal of the work Paul and I did, which I’d like to expand: modifying something physical rather than something virtual. These types of gestures might also be good to incorporate into a game to give the user more control.

Not quite a project, but something I’ll be looking over is this Guide to Meshes in Cinder. OpenFrameworks has no built-in mesh tools, so if Cinder has something to make the process easier, I may consider porting the code over in order to save myself some trouble.

This project, Dynamic Terrain, is another interesting reversal of our project. This work totally reverses things, modifying the physical through the virtual rather than the virtual through the physical.

These aren’t new, but I’m trying to find more info on Reactables, as one of the directions I could go in would be incorporating special objects into the terrain generator that represent certain features or can make other modifications. A project like this can help guide me in how to think about relating these objects to each other, and what variety and diversity of functions they might have.

Finally, I found this article on Terrain reasoning of AI. I’m thinking of a game where the player must guide or interact with groups of tiny creatures or people by modifying their landscape, so the information and ideas here could be extremely useful.

For my final project, one possible direction is to keep working on the algrhythm project and make a set of physical drum bots. Ideally I’d like to create about 10 drum bots, each with a different drum stick and built-in algorithm. These drums may pile up, or form a chain, circle, tree, or whatever, and then we can see what kind of music we get from them.

Some inspiration:

1. Yellow Drum Machine

2. ABSOLUTE MACHINES

3. muchosucko

I found this really weird video of people walking on a street, but they are colored and background subtracted in.

I have been thinking about doing some “soul” visualizations of the observers in a live installation. Some possible scenarios:

Here is the background subtraction camo example:

My friend has an idea to use the Kinect to direct a live presentation. That gave me an idea of using the Kinect to speak to a fictitious large audience, and try to get them riled up. The user would talk and stand behind a podium. Perhaps like the State of the Union? User walks up to the podium and half of them stand and clap. Say something with cadence and a partisan bloc stands? See this video of the interaction in Kinect Sports:

But, apply it to this:

For my final project, I want to make some sort of tool to create music visually. Since I don’t have much (any) experience in computer music, I thought this project would be a good opportunity for exploration.

Clapping pattern visualization

This is a visualization (I think, as opposed to a program producing the sounds) of the patterns in a clapping song. The visualization is actually a couple of really simple shapes that clearly show how the song progresses. For my project, I would hope that the visual representation is just as easy to understand as this one.

mta.me

mta.me is a website that shows the NYC subway trains running throughout the day, based on their schedules. As they cross paths with other trains, the path is plucked and makes a nice little sound. This is a really clean visualization and it’s nice how the sounds correlate with the busyness of the subway system.

Artikulator

This is a project that allows a user to draw music on an iPad or iPhone. The person basically finger paints trails on the screen and the app plays through it left to right. It seems like, with this iteration, it is kind of hard to get something that sounds really ‘nice’, but I like how easy it is to just pick up and use.

Generative Knitting

So there hasn’t been much precedent for this, since contemporary knitting machines are ungodly expensive, and the older ones that people have at home (generally the Brother models) are so unwieldy that changing stitches is more of a pain to do this way than by hand. But if I can figure out some way to make it work, I think knitting has ridiculous potential for generative/algorithmic garment making, since it is possible to create intense volume/pattern in one seamless piece of fabric simply through a mathematical output of pattern. It would be excellent just to be able to “print” these creations on the spot, and do more than just fair isle.

I sent off a couple emails to hackpgh, but I’ll try to stop by their actual office today or tomorrow and just ask them in person if I can borrow/use their machine.

here’s an example of a pattern generator based off of fractals and other mathy things

how to create knitting patterns that focus purely on texture

Perl script to Cellular Automaton knitting

Here’s a pretty well known knitting machine hack, for printing out images in fair-isle. This is awesome, but I was hoping to be able to play more with volume and texture than color

Computational Crochet

Sonja Bäumel crocheted these crazy gloves based off of bacteria placement on the skin.

User Interactive Particles

I also really enjoyed the work we did for the kinect project, and would be interested in pursuing more complicated user generated forms. These two short films by FIELD design are particularly lovely

Generative Jewelry

I would also be interested in continuing my work with project 4. I guess not really continuing, since I want to abandon flocking entirely and focus on getting the snakes or a different generative system up and running to create meshes with more aesthetically pleasing forms. Aside from snakes, I want to look into Voronoi, like the butterflies on the barbarian blog.

Helmut Smits’ LP Bike is a clever and amusingly low-tech bike-record-player. The video doesn’t show him using it in public, but I do hope he’s exploited the enormous performative potential. Trick bike skills + DJ skills could make for quite a spectacle.

His Automatic Street Musician is so much more successful than a steel Simon & Garfunkel machine I built last year.

His Dead pixel in Google Earth is very punny, as the grass is actually dead. The implication is that 82×82 cm is the actual size of one Google Earth pixel at his latitude, which makes for an idiosyncratic unit of measure. Of course, Google’s imagery is most likely pulled from many different sources and has no consistent orientation or resolution.

His Greenscreen is interesting to me more for its imaginative repurposing of the video technique than for its advertising/visual media message.

I began this project with the idea of creating generative jewelry. A generative system easily wraps up the problem of how to make a visually cohesive “collection” while still leaving a lot of the decisions up to the user. I also love making trinkets and things for myself, so there you go.

My immediate inclination when starting this was snakes. Being one of the few images that I’ve obsessively painted over and over (I think I did my first medusa drawing way back freshman year high school, and yes, finally being over the [terrifying] cusp of actual adulthood, this now qualifies as way back), I’ve grown a sort of fascination with the form. Contrary to the Raiders of the Lost Ark stereotype, snakes can be ridiculously beautiful, and move in the most lithe/graceful fashions. Painting the medusas, I also liked the idea of them being tangled up in and around each other and various parts of the human body, and thought it would be awesome to simulate that, and then freeze them in order to create 3D printable jewelry.

I quickly drafted a Processing sketch to simulate snake movement, playing around with having randomized/user controlled variables that drastically altered the behavior of each “snake”. Okay cool, easy enough.

(I can’t get this to embed for some reason, but you can play around with the app here. It’s actually very entertaining.)

I then ran into this post on the barbarian blog talking about how they went about making an absolutely gorgeous snake simulation in 3D (which I hadn’t quite figured out how to do yet). So I was like no sweat, VBOs, texture mapping, magnetic repulsion?? I’ve totally got three more days for this project, it is going to be AWESOME.

Halfway through day 2, I discovered I had bitten off a bit more than I could chew and decided to fall back on trusty flocking (which I already had running in three dimensions, and was really one of my first fascinations when I started creative coding). I dusted off my code from the Kinect project, and tweaked it a bit to run better in three dimensions, adding in the pretty OpenGL particle trails I figured out how to do in Cinder.

3d flocking with Perlin noise from Alex Wolfe on Vimeo.

Using toxiclibs, I made each particle a volume “paintbrush”, so instead of these nice OpenGL quadstrips, each particle leaves behind a hollow spherical mesh of variable radius (that can be combined in order to form one big mesh). By constraining the form of the flocking simulation, for example setting rules that particles can’t fly out of a certain rectangle, or by adding a repulsive force to the center to form more of a cylinder, I was able to get them to draw out some pretty interesting (albeit scary/unwearable) jewelry.

flocking bangle

Also, flocking KNUCKLE DUSTERS. Even scarier than normal knuckle dusters.

Here’s a raw mesh, without any added cleaning (except for a handy ring size hole, added afterwards in SolidWorks).

I then added a few features that would allow you to clean up the mesh. I figured the spiky deathwish look might not be for everyone. The first was Laplacian smoothing, which rounds out any rough corners in the mesh. You can actually keep smoothing for as long as you like, eventually wearing the form away to nothing.

The second was mesh subdivision (shown along with smoothing here), which actually wasn’t as cool as I hoped, due to the already complicated geometry the particles leave behind.

The original plan was to 3D print these and paint them with nail polish (which actually makes excellent 3D printed model varnish, glossing over the resolution lines, and hardly ever chipping, with unparalleled pigment/color to boot). However, due to their delicate nature, and not being the most…er… aesthetically pleasing, I decided to hold off. It was an excellent foray into dynamic mesh creation though, and I hope I can apply a similar volume building system to a different particle algorithm (with more visually appealing results).
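For anyone curious what Laplacian smoothing does under the hood, here is a minimal Python sketch. It is not the toxiclibs implementation I used; it is the simplest "uniform" variant, where each vertex is pulled toward the average of its neighbors. The blend factor `lam` and the function name are assumptions made for the example.

```python
def laplacian_smooth(vertices, faces, iterations=1, lam=0.5):
    """Uniform Laplacian smoothing on a triangle mesh.

    vertices: list of (x, y, z); faces: list of (i, j, k) vertex indices.
    Each pass moves every vertex a fraction lam toward its neighbors' centroid."""
    # Build vertex adjacency from the triangle list.
    neighbors = [set() for _ in vertices]
    for a, b, c in faces:
        neighbors[a].update((b, c))
        neighbors[b].update((a, c))
        neighbors[c].update((a, b))

    verts = [tuple(v) for v in vertices]
    for _ in range(iterations):
        smoothed = []
        for i, v in enumerate(verts):
            if not neighbors[i]:
                smoothed.append(v)  # isolated vertex: leave it where it is
                continue
            n = len(neighbors[i])
            centroid = tuple(sum(verts[j][k] for j in neighbors[i]) / n
                             for k in range(3))
            smoothed.append(tuple(v[k] + lam * (centroid[k] - v[k])
                                  for k in range(3)))
        verts = smoothed  # update all vertices at once, not in place
    return verts
```

Because every pass pulls vertices inward, a closed mesh shrinks a little each time, which is why smoothing forever eventually wears the form away.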

(some nicer OpenGL vs VRay renderings)

This project involved using a genetic algorithm to determine the optimal solution for a parametrically constructed table. Each table has a group of variables that define its dimensions (ex: leg height, table width, etc.). Each table (or “individual”) has a phenotype and a genotype: the phenotype consists of the observable characteristics of an individual, whereas the genotype is the internally coded, inheritable set of information that manifests itself in the form of the phenotype (genotypes are encoded as a binary string).

Phases of the Genetic Algorithm:

1) The first generation of tables is initialized as a population with randomly generated dimensions

2) Each table is evaluated based on a fitness function (fitness is determined by comparing each individual to an optimal model, ex: maximize table height while minimizing table surface area)

3) Reproduction: Next, the tables enter a crossover phase in which randomly chosen sites along an individual’s genotype are swapped with the corresponding sites of another individual’s genotype. Elite individuals (a small percentage of the population that best satisfy the fitness model) are exempt from this stage. Individuals are also subjected to a 5% mutation rate, which simulates the natural variation that occurs within biological populations.

4) Each successive population is evaluated until all individuals are considered fit (termination)
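The phases above can be sketched in a few lines of Python. This is an illustrative reconstruction, not the project's code: the 8-bit encoding per dimension, the specific fitness formula, the truncation selection of parents, the decision to spare elites from mutation, and the fixed generation count used for termination are all assumptions of mine.

```python
import random

BITS = 8                                   # bits per dimension (assumed)
GENES = ("leg_height", "width", "depth")   # hypothetical table parameters

def decode(genotype):
    """Genotype (binary string) -> phenotype (dimensions in 0..255)."""
    return {name: int(genotype[i * BITS:(i + 1) * BITS], 2)
            for i, name in enumerate(GENES)}

def fitness(genotype):
    """Reward tall tables, penalize large surface area (assumed weighting)."""
    p = decode(genotype)
    return p["leg_height"] - (p["width"] * p["depth"]) / 255.0

def crossover(a, b, rng):
    """Swap randomly chosen sites between two genotypes."""
    a, b = list(a), list(b)
    for i in range(len(a)):
        if rng.random() < 0.5:
            a[i], b[i] = b[i], a[i]
    return "".join(a), "".join(b)

def mutate(genotype, rng, rate=0.05):
    """Flip each bit with the given probability (5% by default)."""
    return "".join(("1" if bit == "0" else "0") if rng.random() < rate else bit
                   for bit in genotype)

def evolve(pop_size=40, generations=60, elite_frac=0.1, seed=0):
    rng = random.Random(seed)
    length = BITS * len(GENES)
    # Phase 1: random initial population.
    pop = ["".join(rng.choice("01") for _ in range(length))
           for _ in range(pop_size)]
    n_elite = max(1, int(pop_size * elite_frac))
    for _ in range(generations):
        # Phase 2: evaluate and rank.
        pop.sort(key=fitness, reverse=True)
        elites = pop[:n_elite]            # carried over unchanged
        parents = pop[:pop_size // 2]     # truncation selection (assumed)
        # Phase 3: crossover plus mutation for everyone else.
        children = []
        while len(children) < pop_size - n_elite:
            a, b = rng.sample(parents, 2)
            for child in crossover(a, b, rng):
                children.append(mutate(child, rng))
        pop = elites + children[:pop_size - n_elite]
    # Phase 4 (simplified): stop after a fixed number of generations.
    return max(pop, key=fitness)
```

Calling `decode(evolve())` gives the dimensions of the fittest table found; with this fitness function it should drift toward a tall table with a tiny top, which is exactly the predictability noted below.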

Areas for improvement:

• Currently, the outcome is fairly predictable in that the criteria for the fitness function are predefined. An improved version of the program might use a physics simulation (ex: gravity) to influence the form of the table. Using simulated forces to influence the form of the table would yield less expected results.

Play

Download the app and get your swarm on!

Mac/Windows mesh freeware

About

Mesh Swarm is a generative tool for creating particle driven .stl files directly out of Processing. The 3d geometry is created by particles on trajectories that have a closed mesh around their trace. Parameters are set up to control particle count, age, death rate, start position, and mesh properties. These rules are able to be changed live with interactive parametric controls. This program is designed to help novice 3d modelers and fabricators gain an understanding of the 3d printing process. Once the desired form is achieved the user can simply hit the ‘S’ key and save a .stl to open in a program of choice.
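For readers who have not looked inside an .stl file, here is a small Python sketch of what writing one involves. Mesh Swarm itself is a Processing app and this is not its export code; it only illustrates the plain-text (ASCII) STL layout, in which every triangle is written as a facet with a normal and three vertices. The function names are hypothetical.

```python
import math

def facet_normal(a, b, c):
    """Unit normal of triangle (a, b, c) via the cross product of two edges."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    nx, ny, nz = (uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx)
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        return (0.0, 0.0, 0.0)   # degenerate triangle
    return (nx / length, ny / length, nz / length)

def to_ascii_stl(triangles, name="mesh"):
    """Serialize a list of triangles, each a tuple of three (x, y, z) points."""
    lines = ["solid {}".format(name)]
    for a, b, c in triangles:
        lines.append("  facet normal {:e} {:e} {:e}".format(*facet_normal(a, b, c)))
        lines.append("    outer loop")
        for v in (a, b, c):
            lines.append("      vertex {:e} {:e} {:e}".format(*v))
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append("endsolid {}".format(name))
    return "\n".join(lines) + "\n"
```

Writing the returned string to a file ending in `.stl` gives something most mesh viewers and slicers will open, as long as the triangles form a closed surface.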

The following video is a demo of the work flow of how to produce your custom mesh…

The beginnings of the project were rooted in the Interactive Parametrics 2011 workshop in Brooklyn, NY, hosted by Studio Mode and Marius Watz. Here I began to investigate point and line particle systems in 3D and later added mesh and .stl functionality. These are a few examples of screen grabs from the point and line 3D process.

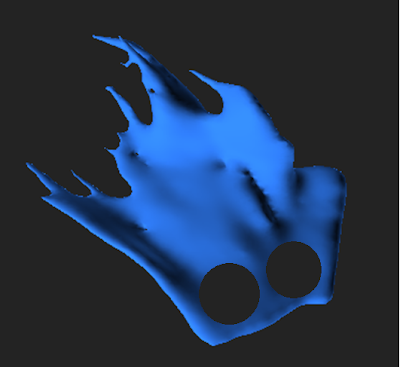

From there, the next step was to begin working with 3D printable meshes. The framework of the point and line code was used to drive the 3D meshes that followed. After the .stl export, I used Maya to smooth and cluster some of the mesh output. The process of mesh smoothing creates interesting opportunities to take clustered geometry and make relational and local mesh operations giving the appearance of liquid/goo. Renderings were done in Vray for Rhino.

I built the twitterviz project to visualize a live Twitter stream. Originally, I was looking at ways to visualize social media, either from Facebook or Twitter. I was poking around the Twitter API, and noticed that they had a stream API that would pipe a live Twitter stream through a POST request. I wanted to work with live data instead of the static data I used for my first project (Facebook collage visualization), so I decided this API would be my best bet.

The stream API allows developers to specify a filter query, after which tweets containing that query will be piped, in real time, to the client. I decided to utilize this to allow users to enter a query and visualize the Twitter results as a live data stream.

I was also looking at Hakim El Hattab’s work at the time. I used his bacterium project as starter code, in particular its rudimentary particle physics engine. I decided to use JavaScript and HTML5 to implement the project because it’s a platform I wanted to gain experience with and also because that makes it easily accessible to everyone using a web browser. I decided to visualize each Twitter filter query as a circle, and when a new tweet containing that query arrives, the circle would “poop” out a circle of the same color onto the screen.

However, I encountered some problems in this process. Since browsers enforce a same-origin policy on JavaScript for security reasons, my client-side JavaScript code could not directly query Twitter’s API using AJAX. To solve this, I used Phirehose, a PHP library that interfaces with Twitter’s stream API. Thus, the application queries Twitter by having client-side JavaScript query a PHP script on my server, which in turn queries Twitter’s stream API, thereby getting around the same-origin policy. Due to time constraints, the application only works for one client at a time, and there are some caveats. Changing the filter query rapidly causes Twitter to block the request (for a few minutes), so entering new filter strings rapid-fire will break the application.

Here are some images:

Default page, no queries.

One query, “food”. The food circle is located in the top right corner, and it looks like it has a halo surrounding it. New tweets containing “food” poop out more green circles, which gravitate to the bottom.

You can access a test version of this application here.