Project 4: Final Days…

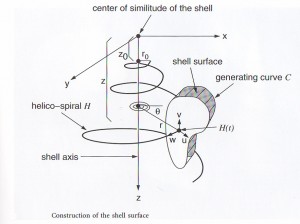

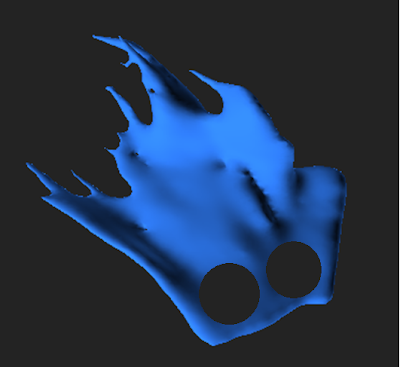

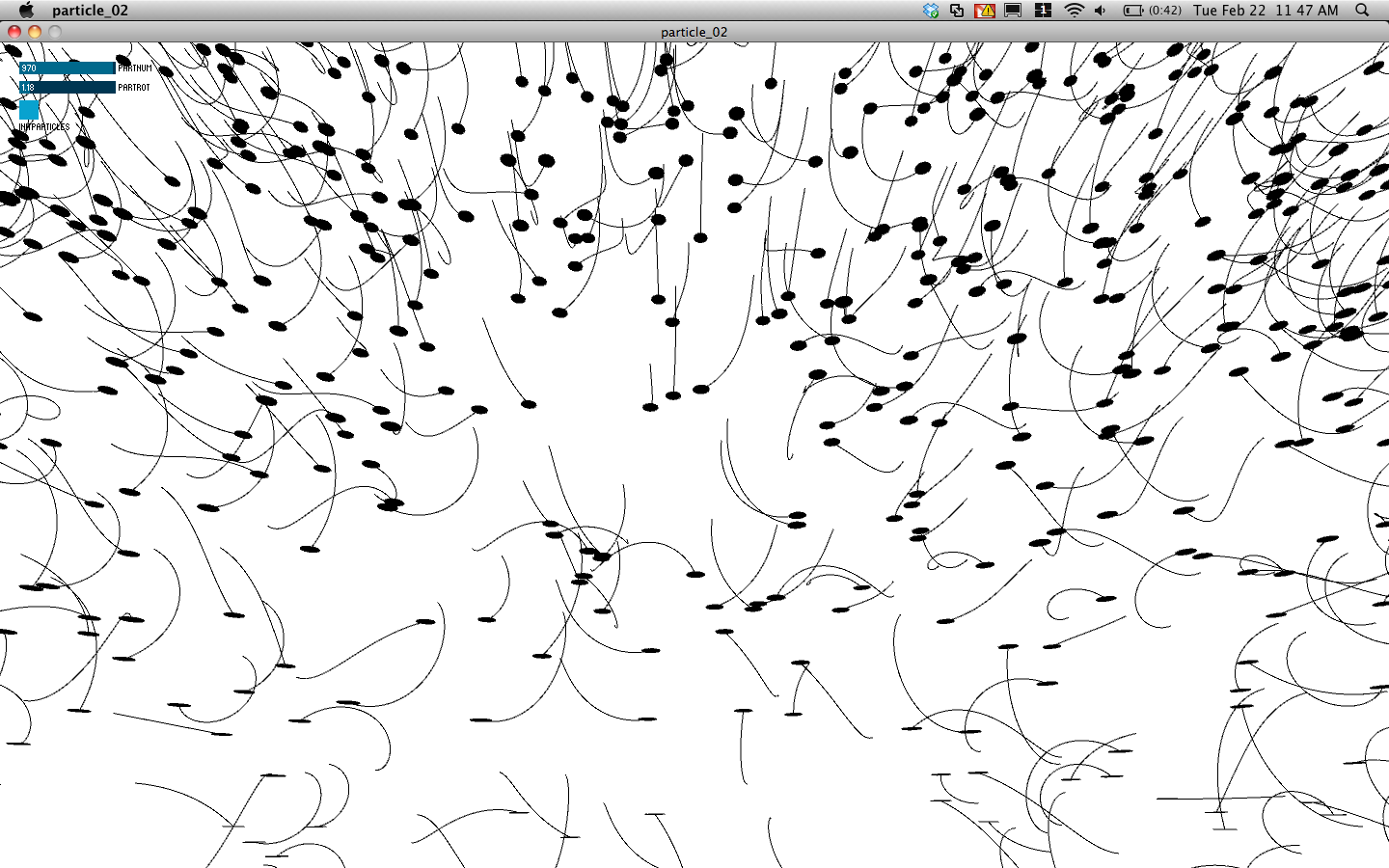

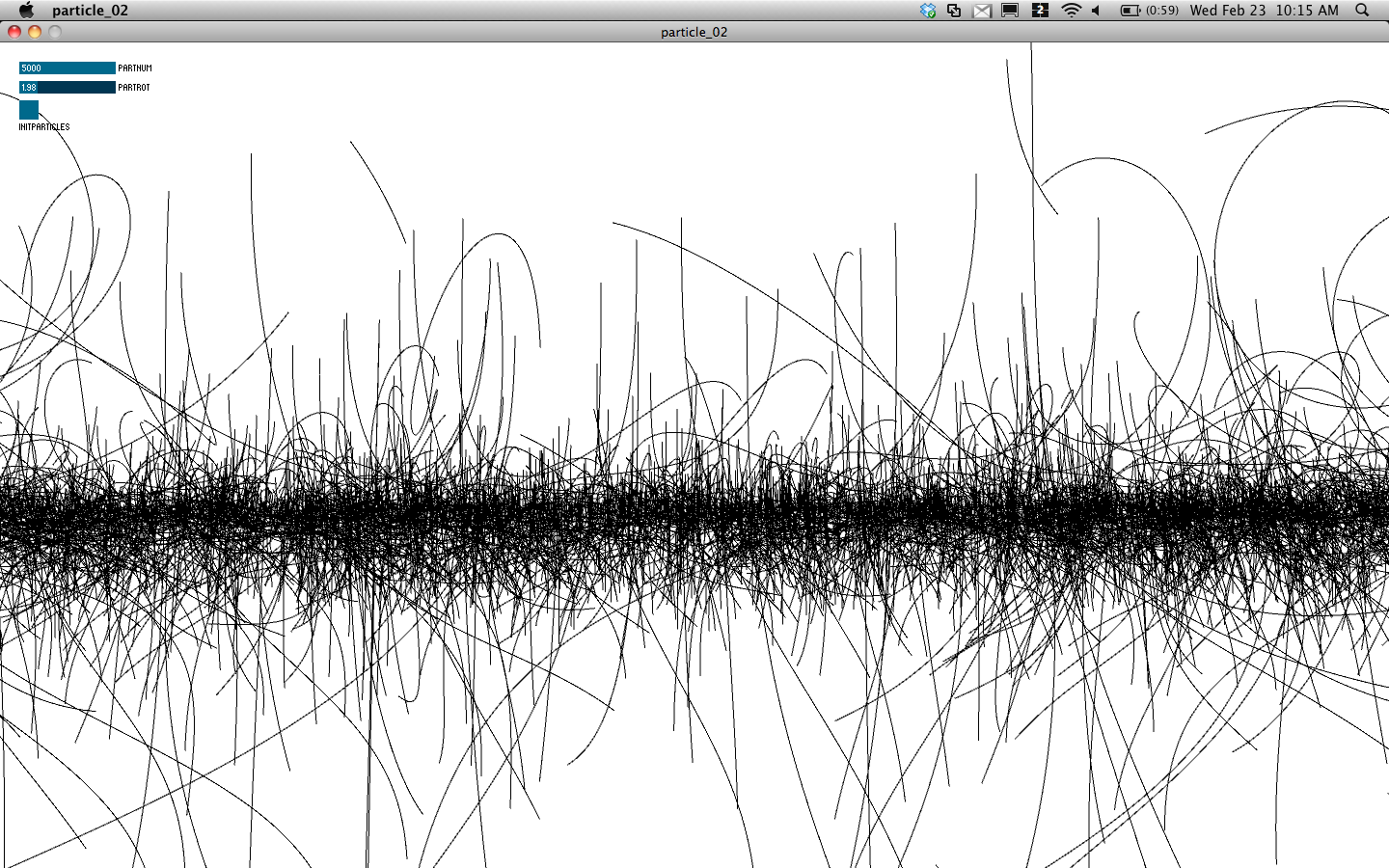

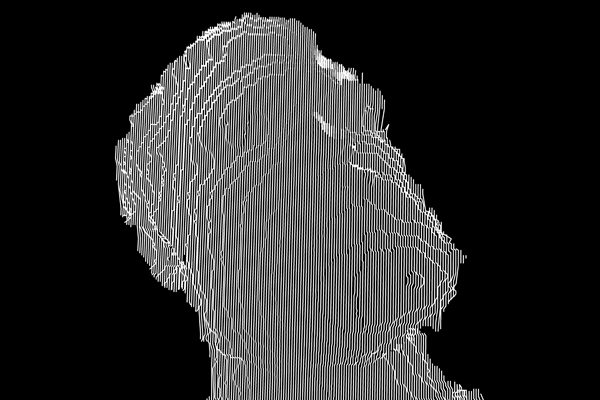

For the last couple of weeks, I’ve been working on a Kinect hack that performs body detection and extracts individuals from the scene, distorts them using GLSL shaders, and pastes them back into the scene using OpenGL multitexturing. The concept is relatively straightforward. Blob detection on the depth image determines which pixels are part of each individual. The color pixels within each body are copied into one texture, and the non-interesting parts of the image are copied into a second, background texture. Since distortions are applied to the bodies in the scene, the holes they leave in the background image need to be filled. To accomplish this, the most distant pixel seen at each point is cached from frame to frame and substituted in when body blobs are cut out.
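The background cache idea can be sketched roughly like this. This is a minimal illustration, not the project's actual code: the `Rgb` and `BackgroundCache` names are made up, and it assumes a depth value of 0 means "no reading" and that all buffers hold one entry per pixel.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical pixel type; the names in this sketch are illustrative only.
struct Rgb { std::uint8_t r, g, b; };

struct BackgroundCache {
    std::vector<std::uint16_t> depth;  // farthest depth seen so far at each pixel
    std::vector<Rgb> color;            // color that was observed at that depth

    explicit BackgroundCache(std::size_t pixelCount)
        : depth(pixelCount, 0), color(pixelCount, Rgb{0, 0, 0}) {}

    // Fold one frame into the cache, keeping the most distant sample per pixel.
    // Assumes a depth of 0 means the sensor returned no reading.
    void update(const std::vector<std::uint16_t>& frameDepth,
                const std::vector<Rgb>& frameColor) {
        for (std::size_t i = 0; i < depth.size(); ++i) {
            if (frameDepth[i] != 0 && frameDepth[i] >= depth[i]) {
                depth[i] = frameDepth[i];
                color[i] = frameColor[i];
            }
        }
    }

    // Build the background image: live color outside bodies,
    // cached color wherever a body was cut out.
    std::vector<Rgb> fill(const std::vector<Rgb>& frameColor,
                          const std::vector<bool>& isBody) const {
        std::vector<Rgb> out(frameColor);
        for (std::size_t i = 0; i < out.size(); ++i) {
            if (isBody[i]) out[i] = color[i];
        }
        return out;
    }
};
```

Calling `update` on every frame and `fill` just before drawing would give a background with the body-shaped holes patched by whatever was last seen behind them.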

It’s proved difficult to pull out the bodies in color. Because the depth camera and the color camera in the Kinect do not align perfectly, using a depth image blob as a mask for the color image does not work. On my Kinect, the mask region was off by more than 15 pixels, so color pixels flagged as belonging to a blob might actually be part of the background.

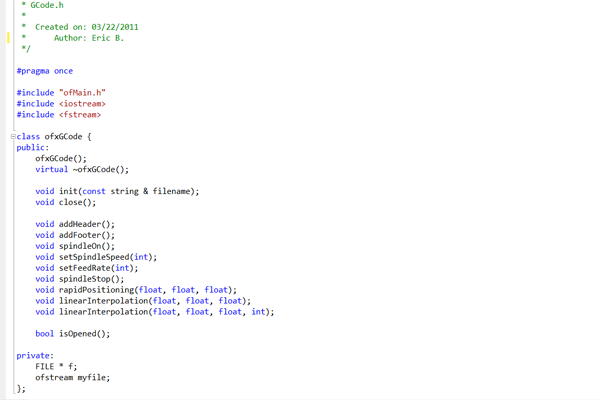

To fix this, Max Hawkins pointed me in the direction of a Cinder project which used OpenNI to correct the perspective of the color image to match the depth image. Somehow, that impressive feat of computer imaging is accomplished with these five lines of code:

    // Align depth and image generators
    printf("Trying to set alt. viewpoint");
    if( g_DepthGenerator.IsCapabilitySupported(XN_CAPABILITY_ALTERNATIVE_VIEW_POINT) )
    {
        printf("Setting alt. viewpoint");
        g_DepthGenerator.GetAlternativeViewPointCap().ResetViewPoint();
        if( g_ImageGenerator ) g_DepthGenerator.GetAlternativeViewPointCap().SetViewPoint( g_ImageGenerator );
    }

I hadn’t used Cinder before, and I decided to migrate the project to Cinder since it seemed to be a much more natural environment for using GLSL shaders. Unfortunately, the Kinect OpenNI drivers in Cinder seemed to be crap compared to the ones in openFrameworks, et al. The console often reported that the “depth buffer size was incorrect” and that the “depth frame is invalid”. Onscreen, the image from the camera flashed, and occasionally frames appeared misaligned or half missing.

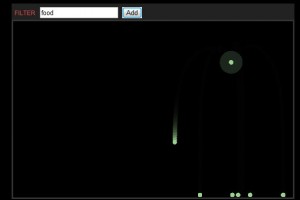

I continued fighting with Cinder until last night, when at 10PM I found this video in an online forum:

This video is intriguing because it shows the real-time detection and unique identification of multiple people with no configuration. AKA it’s hot shit. It turns out the video was made with PrimeSense, the technology used for hand / gesture / person detection on the Xbox.

I downloaded PrimeSense and compiled the samples. Behavior in the above video achieved. The scene analysis code is incredibly fast and highly robust. It kills the blob detection code I wrote performance-wise, and it doesn’t require that people’s legs intersect with the bottom of the frame (the technique I was using assumed the nearest blob intersecting the bottom of the frame was the user).
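For comparison, the bottom-of-frame heuristic mentioned above might look something like the following. This is only a guess at the shape of it: the `Blob` struct and `pickUserBlob` function are hypothetical, and it assumes each blob already carries its lowest row and its nearest depth value.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical blob summary; a real blob detector would track more than this.
struct Blob {
    int maxY;                    // lowest image row the blob reaches
    std::uint16_t nearestDepth;  // smallest depth value inside the blob
};

// Returns the index of the nearest blob that touches the bottom row of the
// frame, or -1 if no blob touches it.
int pickUserBlob(const std::vector<Blob>& blobs, int frameHeight) {
    int best = -1;
    for (std::size_t i = 0; i < blobs.size(); ++i) {
        if (blobs[i].maxY < frameHeight - 1) continue;  // does not reach the bottom
        if (best < 0 || blobs[i].nearestDepth < blobs[best].nearestDepth) {
            best = static_cast<int>(i);
        }
    }
    return best;
}
```

The weakness is visible in the first `if`: anyone whose legs are out of frame, or hidden behind furniture, never qualifies as a candidate.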

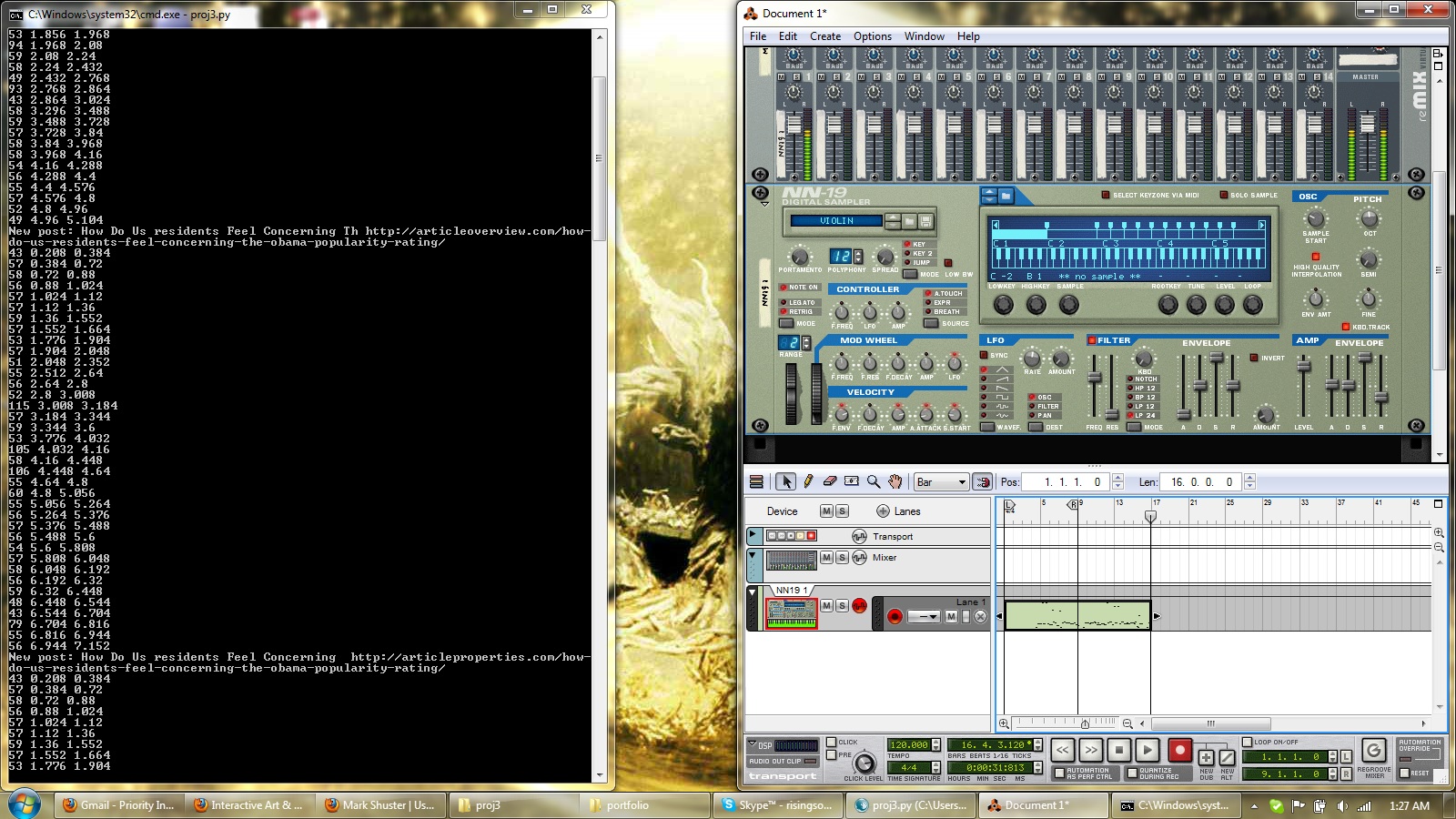

I re-implemented the project on top of the PrimeSense sample in C++. I migrated the depth+color alignment code over from Cinder, built a background cache, and rebuilt the display on top of a GLSL shader. Since I was just using Cinder to wrap OpenGL shaders, I decided it wasn’t worth linking it into the sample code. It’s 8 source files, and it compiles on the command line. It’s ungodly fast. I was in love.

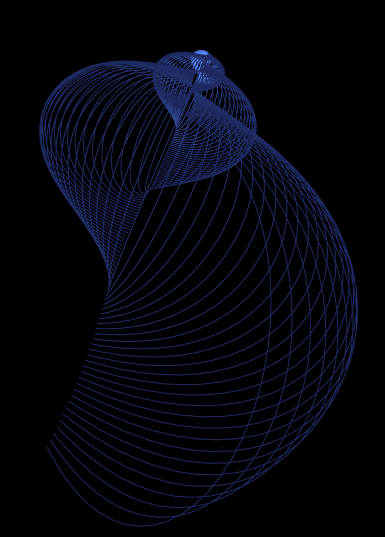

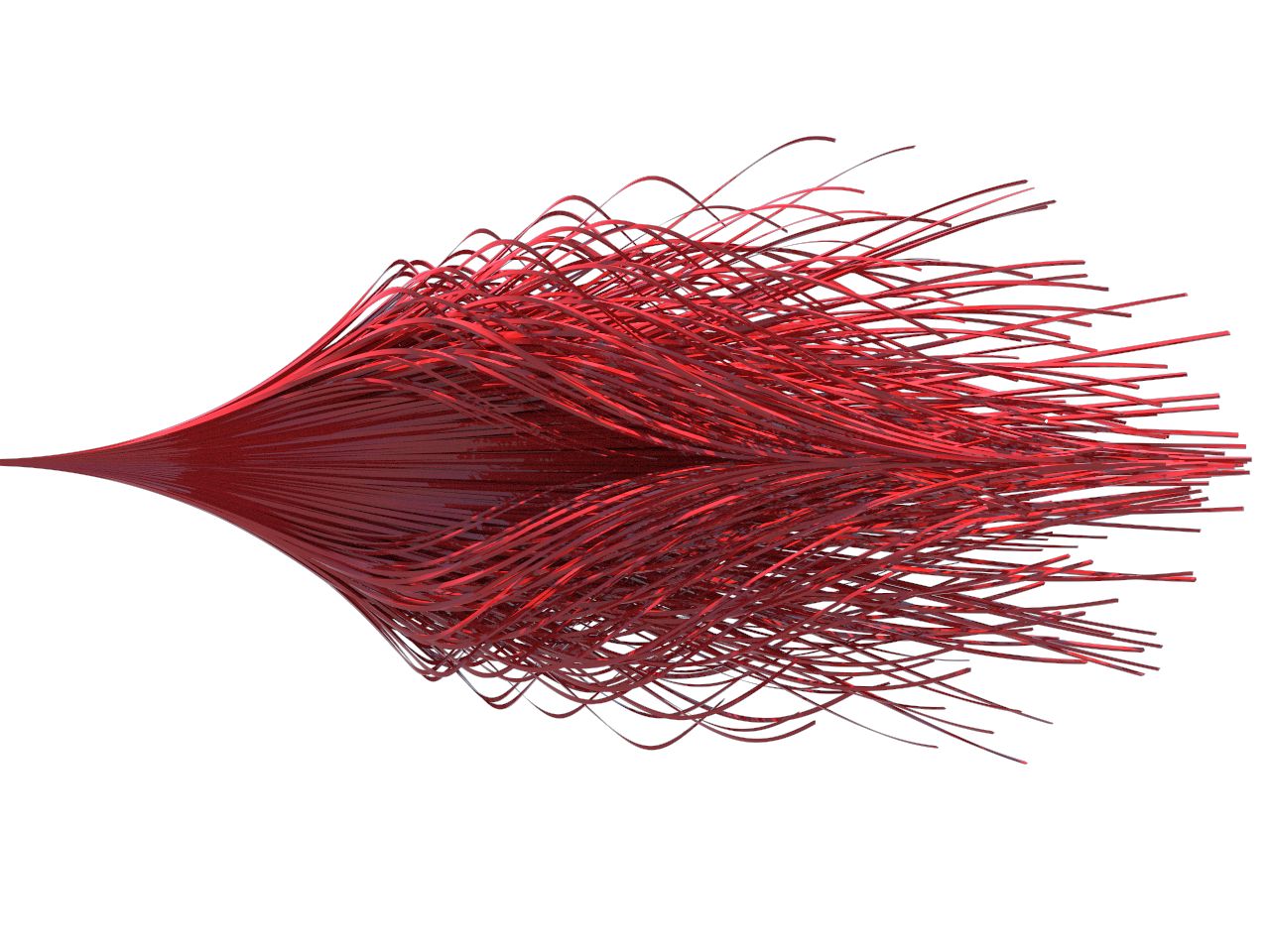

Rather than apply an effect to all the individuals in the scene, I decided it was more interesting to distort just one. Since the PrimeSense library assigns each blob a unique identifier, this was an easy task. The video below shows the progress so far. Unfortunately, it doesn’t show off the frame rate, which is a cool 30 to 40 fps.
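Picking out one person by identifier can be sketched as a simple pass over a per-pixel label buffer. This is an illustrative sketch, not the project's code or the PrimeSense API: `extractUser` is a hypothetical helper, and it assumes one label per pixel (with 0 meaning background) and tightly packed 3-byte RGB color.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: builds an RGBA body texture for a single user.
// labels holds one ID per pixel (assumed 0 = background);
// rgb holds 3 bytes per pixel in the same order.
std::vector<std::uint8_t> extractUser(const std::vector<std::uint16_t>& labels,
                                      const std::vector<std::uint8_t>& rgb,
                                      std::uint16_t targetId) {
    // Start fully transparent so everything outside the user is ignored.
    std::vector<std::uint8_t> rgba(labels.size() * 4, 0);
    for (std::size_t i = 0; i < labels.size(); ++i) {
        if (labels[i] != targetId) continue;
        rgba[i * 4 + 0] = rgb[i * 3 + 0];
        rgba[i * 4 + 1] = rgb[i * 3 + 1];
        rgba[i * 4 + 2] = rgb[i * 3 + 2];
        rgba[i * 4 + 3] = 255;  // opaque only where the target user is
    }
    return rgba;
}
```

The resulting buffer could be uploaded as the body texture, with the shader distorting only the opaque region and the background texture showing through everywhere else.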

My next step is to try to improve the edge of the extracted blob and create more interesting shaders that blur someone in the scene or convert them to “8-bit”. Stay tuned!