Mark Shuster – Generative – TweetSing

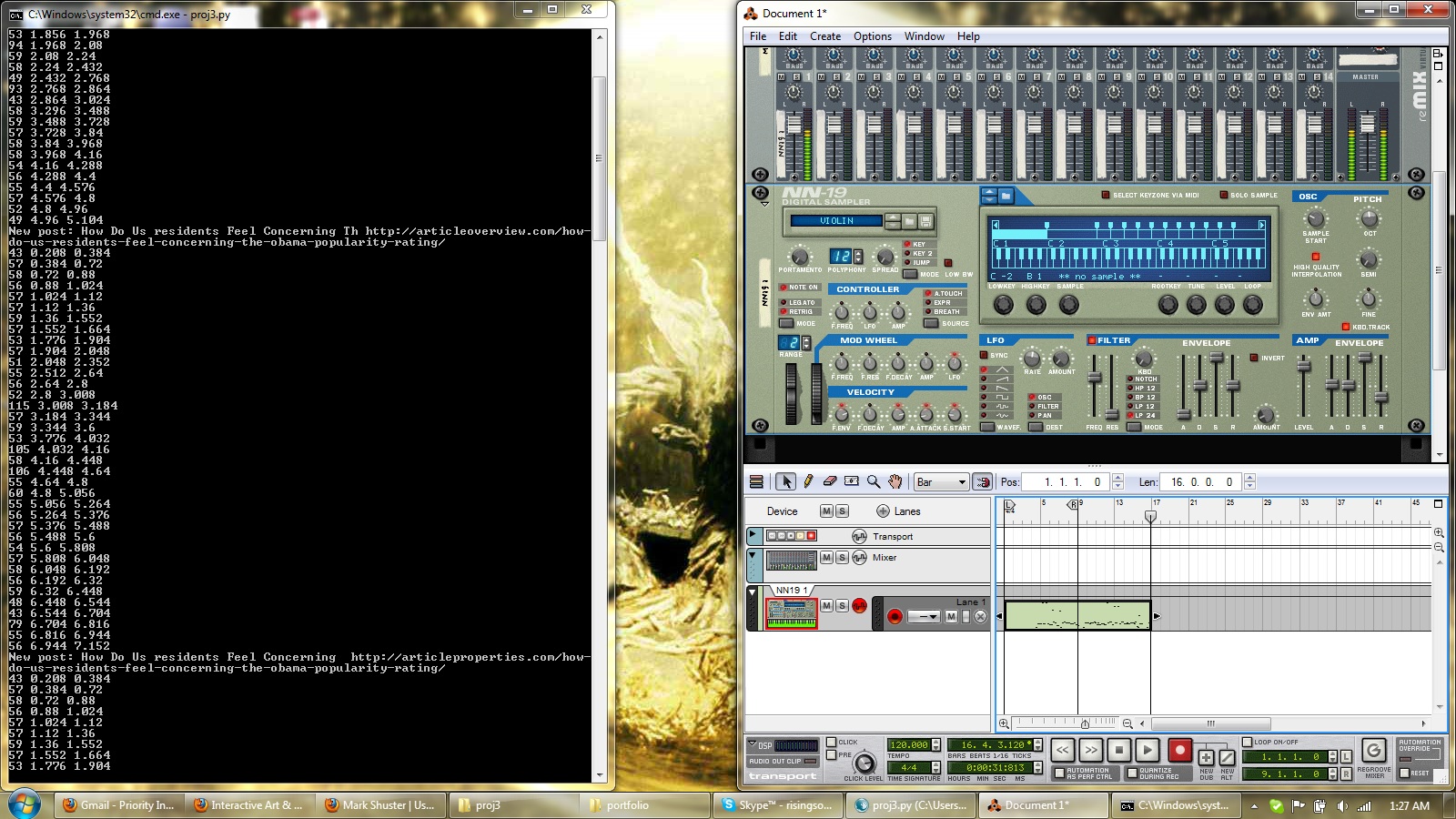

TweetSing is an experiment in generative music composition that attempts to transform tweets into music. The script reads a stream of tweets related to a specific keyword and sends the content to be converted to speech via Google TTS. The speech audio is then analyzed to detect its individual pitch changes. The pitches are converted to a series of MIDI notes that are then played at the same cadence as the original speech, thus singing the tweet (for the demo, the ‘song’ is slowed to half-speed).
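The pitch-to-note step described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes the pitch detector has already produced one frequency estimate in Hz per analysis frame (with 0 meaning silence), and the names `hz_to_midi`, `frames_to_notes`, `hop_seconds`, and `stretch` are hypothetical. The conversion uses the standard equal-temperament mapping in which A4 (440 Hz) is MIDI note 69.

```python
import math


def hz_to_midi(freq_hz):
    """Map a frequency in Hz to the nearest MIDI note number (A4 = 440 Hz = 69)."""
    return int(round(69 + 12 * math.log2(freq_hz / 440.0)))


def frames_to_notes(frames, hop_seconds, stretch=2.0):
    """Collapse per-frame pitch estimates into (midi_note, start, duration) events.

    frames      -- pitch estimates in Hz, one per analysis frame; 0 means silence
    hop_seconds -- time between consecutive frames (assumed constant)
    stretch     -- time-scaling factor; 2.0 plays the result at half speed
    """
    notes = []
    current = None  # (midi_note, index of the frame where it started)
    # A trailing silent frame acts as a sentinel that flushes the last note.
    for i, freq in enumerate(list(frames) + [0.0]):
        note = hz_to_midi(freq) if freq > 0 else None
        # Close the running note when the pitch changes or silence begins.
        if current is not None and note != current[0]:
            midi_note, start = current
            notes.append((midi_note,
                          start * hop_seconds * stretch,
                          (i - start) * hop_seconds * stretch))
            current = None
        # Open a new note when a pitched frame arrives and none is running.
        if note is not None and current is None:
            current = (note, i)
    return notes
```

Because each note keeps the start time and duration of the pitched segment it came from, the resulting sequence follows the cadence of the speech; the `stretch` factor of 2.0 mirrors the half-speed playback used in the demo.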

TweetSing is written in Python and uses the TwitterAPI. The tweets are converted to speech using Google Translate, and the pitch detection and MIDI generation are done using the Aubio library. The final arrangement was then played through Reason’s NN-19 Sampler.

As an example, tweets relating to President Obama were played through a sampled violin. Listen to ‘Obama’ tweets on Violin.

Hi Mark, here are the notes from the crit. Please update your blog post with some documentation, soon :) –GL

————————————

I actually did not know that Google Translate did TextToSpeech. This stuff is pretty slick.

No man… the crowdz wantz slidez : |

It kind of has some nice feeling to it (not so much a beep beep thing which is good).

Putting this behind the tts would be good, almost as if you are scoring the conversation. (agreed) The tones are interesting, but it needs a little more than just the tones.

I like the piano version. It would be cool if you played the music while you displayed the words on the screen, so people can see what’s going on. Um I love your technical abilities. Go MARK !

There’s a lot of information loss here. What if it spoke? https://www.youtube.com/watch?v=muCPjK4nGY4

I wonder if other languages would sound different.

It might be easier to use the CMU pronouncing dictionary: http://www.speech.cs.cmu.edu/cgi-bin/cmudict. You could use that to get the phonemes and use some simple speech synthesis.

We rarely get a chance to hear the musical quality of our own speech. It reminds me of this Radiolab episode: http://www.radiolab.org/2010/aug/09/

Wha no slides? The show goes on.

Wow, very creepy strings… Clint Mansell anyone?

Are the notes organized into any particular key? Could be a really interesting way to have generative improvisational music, like jazz or something

Could you take the spoken word and put it through a generated auto-tune?

I want to hear the original audio, can this be done in (semi) realtime? What are the mappings for data to sound? Sounds too random to tell. The difficulty lies in choosing the right mappings to create a more meaningful sound. Perhaps stretch out the time, build and grow over the course of hours instead of minutes or seconds. Blend in the long term trends with more background instruments with longer attacks and releases mixed with short term sounds/instruments.

Actually sounds not unreasonable.

Very interesting idea and exploration. A little bit of continuity between notes might help, though.

Using the inherent pitch of speech is a great basis for an audio exploration, as is translating the speech into MIDI notes. When I think of algorithmic audio, I think of accompaniment tracks as well.

It’s possible, with some speech synthesis libraries, to set the pitch of the voice. The Apple speech synthesizer does this, using special embedded command codes.

I think it would be good to hear the speech mixed in, to help explain what we’re hearing.

Neat to think of Twitter as different musical instruments. It would be interesting to hear some combination of different instruments, perhaps with some aspect of the tweets determining which instruments are used. Maybe you could visualize this with the original tweets to give it some context. I wonder if there is a way to communicate the tone or mood of the tweet, or the subject matter, with the sound of the output.

It’s interesting, I wonder if it’s possible to get it to sound like the actual speech

I’d be interested to hear the pitch based MIDI data used as vocoder/autotune input for the original sound – might be too cliche?

I agree with Golan’s comment in class, the lack of context is a setback here, but you deserve mad props for the technical acrobatics that connect this project from start to finish.

The musical sound and the conversation it is based on could make a great little web app.

See the Palin Song by pianist Henry Hey, it sets the bar:

https://www.youtube.com/watch?v=9nlwwFZdXck