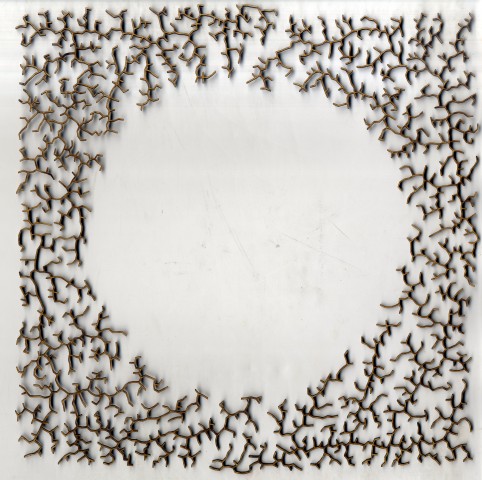

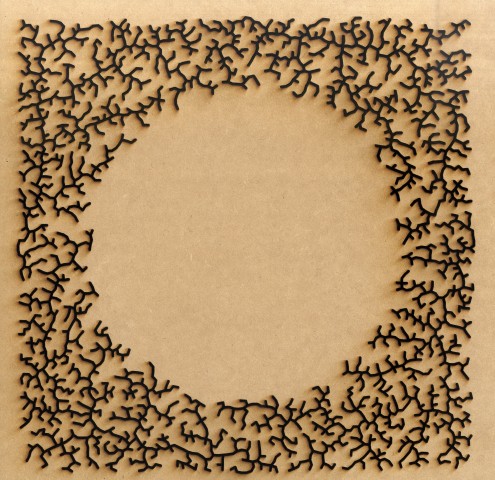

Segregation and Other Intolerant Algorithms [Lasercut Screen]

./wp-content/uploads/sites/2/2013/10/output1.pdf

Drawing loosely from the Nervous System presentation, I began thinking about processes I could exploit to churn out varied yet unified designs. While searching for information about Laplacian growth, I found this pithy sketch by echoechonoisenoise on OpenProcessing, which employs a grid of automata to generate a segregation pattern.

My cells are similarly situated in a grid, wherein three main processes occur. First, a matrix of cells is seeded by a scaled noise field, which is in turn refined and restricted using the modulus operator and a threshold. This design is problematic out of the tube, since the laser cutter wants lines and not filled blobs.

Filled blobs, before the outlines are isolated
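As a rough illustration of the seeding step, here is a minimal sketch in plain Java (so it runs outside Processing). The class name `BandPattern` and the `field` function are hypothetical: `field` is only a smooth stand-in for Processing's `noise()`, not Perlin noise, and the zero-period guard is my own assumption rather than part of the original sketch.

```java
class BandPattern {
    // Mirrors the sketch's test: a cell stays filled only when both x and y
    // fall in the lower half of a band whose period is bandWidth * n.
    static boolean isFilled(int x, int y, double n, int bandWidth) {
        int period = (int) (bandWidth * n);
        if (period < 1) return false; // assumption: avoid a modulo by zero
        int half = period / 2;
        return (x % period) <= half && (y % period) <= half;
    }

    // Hypothetical stand-in for Processing's noise(): a smooth value in [0, 1].
    static double field(double x, double y) {
        return 0.5 + 0.25 * Math.sin(x) + 0.25 * Math.cos(y);
    }

    // Fill a w-by-h grid with 1 (filled) or 0 (empty) using the scaled field.
    static int[][] generate(int w, int h, double noiseScale, int bandWidth) {
        int[][] cells = new int[w][h];
        for (int x = 0; x < w; x++) {
            for (int y = 0; y < h; y++) {
                double n = field(x / noiseScale, y / noiseScale);
                cells[x][y] = isFilled(x, y, n, bandWidth) ? 1 : 0;
            }
        }
        return cells;
    }
}
```

Because the band period changes with the field value at every cell, the bands bend and pinch into blobs instead of forming a regular checker grid.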

So the second step is to use a neighbor-counting technique similar to echoechonoisenoise’s to isolate the border of the blob shapes. (If a cell has three out of eight possible neighbors, I can assume with some confidence that it is a bordering cell.) Third, to convert a set of disparate points to vector lines, I plot lines from each cell to the nearest available living cell.
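The neighbor-counting step can be sketched roughly as follows, again in plain Java. The class name `BorderCells` and the `isolate` helper are hypothetical, and unlike my sketch this version writes its results into a fresh grid so that every count reads the untouched input; treat it as an illustration of the idea under that assumption, not a copy of what my code does.

```java
class BorderCells {
    // Count the live (value 1) cells among the eight neighbors of (x, y).
    static int sumNeighbors(int[][] cells, int x, int y) {
        int sum = 0;
        for (int dy = -1; dy <= 1; dy++) {
            for (int dx = -1; dx <= 1; dx++) {
                if (dx == 0 && dy == 0) continue; // skip the cell itself
                int ix = x + dx, iy = y + dy;
                if (ix < 0 || iy < 0 || ix >= cells.length || iy >= cells[0].length) continue;
                if (cells[ix][iy] == 1) sum++;
            }
        }
        return sum;
    }

    // Turn a cell on only when exactly `target` of its neighbors are live.
    // Assumption: output goes to a new grid, so counts always see the input state.
    static int[][] isolate(int[][] cells, int target) {
        int[][] out = new int[cells.length][cells[0].length];
        for (int x = 0; x < cells.length; x++) {
            for (int y = 0; y < cells[0].length; y++) {
                out[x][y] = (sumNeighbors(cells, x, y) == target) ? 1 : 0;
            }
        }
        return out;
    }
}
```

For a filled 3×3 block with `target` set to 3, the surviving cells are the block's four corners plus the empty cell just outside the middle of each side; interior and straight-edge cells have too many neighbors and are switched off.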

Disclaimer: I try to produce smooth-ish lines in a relatively straightforward fashion, but I admit that there are instances of weirdo trickery in my code:

```java
import processing.pdf.*;

float cells[][];
float noiseScale = 100.0;
float scaleFactor = 1;
int dist = 3;

// Density of pattern
int bandWidth = 1200;

// Noise seed
int seed = 9;

// Indexed by neighbor count (0 to 8): only cells with exactly 3 neighbors survive
int[] rule = {
  0, 0, 0, 1, 0, 0, 0, 0, 0
};

int searchRad = 12;
int cellCount = 0;

void setup() {
  size(900, 900);
  cells = new float[width][height];
  generateWorld();
  noStroke();
  smooth();
  beginRecord(PDF, "output.pdf");
}

void generateWorld() {
  noiseSeed(seed);
  // Use a combination of modulus and noise to generate a pattern
  for (int x = 0; x < cells.length; x++) {
    for (int y = 0; y < cells[x].length; y++) {
      float n = noise(x/noiseScale, y/noiseScale);
      // Band period at this cell; clamped to 1 to avoid a modulo by zero
      int band = max(1, int(bandWidth*n));
      if (x % band > band/2) {
        cells[x][y] = 0;
      } else if (y % band > band/2) {
        cells[x][y] = 0;
      } else {
        cells[x][y] = 1;
      }
    }
  }
}

void draw() {
  background(255);
  drawCells();
  // Draw the world on the first frame with points, connect the points on the second frame
  if (frameCount == 1) updateCells();
  else {
    for (int x = 0; x < cells.length; x++) {
      for (int y = 0; y < cells[x].length; y++) {
        if (cells[x][y] > 0) {
          stroke(0);
          strokeWeight(1);
          // Arbitrary number of link attempts per cell
          for (int i = 0; i < 20; i++) {
            PVector closestPt = findClosest(new PVector(x, y));
            line(x * scaleFactor, y * scaleFactor, closestPt.x*scaleFactor, closestPt.y*scaleFactor);
          }
        }
      }
    }
    endRecord();
    println("okay!");
    noLoop();
  }
}

// Finds closest neighbor that doesn't already have a line drawn to it
PVector findClosest(PVector pos) {
  PVector closest = null;
  float least = -1;
  for (int _y = -searchRad; _y <= searchRad; _y++) {
    for (int _x = -searchRad; _x <= searchRad; _x++) {
      int x = int(_x + pos.x), y = int(_y + pos.y);
      // Skip positions outside the grid
      if (x < 0 || x >= cells.length || y < 0 || y >= cells[x].length) continue;
      float distance = dist(x, y, pos.x, pos.y);
      // Only cells still marked 1 are candidates; cells already linked are marked 2
      if (distance != 0.0 && cells[x][y] == 1 && (least == -1 || distance < least)) {
        least = distance;
        closest = new PVector(x, y);
      }
    }
  }
  // Nothing in range: return pos itself, so the caller draws a zero-length line
  if (closest == null) return pos;
  cells[int(closest.x)][int(closest.y)] = 2;
  return closest;
}

// If the sum of the cell's neighbors complies with the rule, i.e. it has exactly 3 neighbors,
// it is left on, otherwise it is turned off. This effectively removes everything but the
// outlines of the blob patterns.
// Note: the grid is updated in place, so counts for later cells see already-updated neighbors.
void updateCells() {
  for (int x = 0; x < cells.length; x++) {
    for (int y = 0; y < cells[x].length; y++) {
      cells[x][y] = rule[sumNeighbors(x, y)];
      if (cells[x][y] == 1) cellCount++;
    }
  }
}

int sumNeighbors(int startx, int starty) {
  int sum = 0;
  for (int y = -1; y <= 1; y++) {
    for (int x = -1; x <= 1; x++) {
      int ix = startx + x, iy = starty + y;
      if (ix < width && ix >= 0 && iy >= 0 && iy < height) {
        if (cells[ix][iy] == 1) {
          // Don't count the cell itself
          if (x != 0 || y != 0) sum++;
        }
      }
    }
  }
  return sum;
}

void drawCells() {
  loadPixels();
  for (int x = 0; x < cells.length; x++) {
    for (int y = 0; y < cells[x].length; y++) {
      int index = (int(y*scaleFactor) * width) + int(x*scaleFactor);
      if (cells[x][y] == 1) {
        pixels[index] = color(255);
      }
    }
  }
  updatePixels();
}

void mousePressed() {
  saveFrame(str(random(100)) + ".jpg");
}
```
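The third step, linking each border cell to its nearest unclaimed neighbor, can also be tried in isolation. Below is a minimal plain-Java sketch under a few assumptions of my own: the class name `NearestLink` is hypothetical, it compares squared integer distances instead of calling `dist()`, and it returns `null` when nothing is in range rather than handing back the starting point.

```java
class NearestLink {
    // Returns {x, y} of the closest cell with value 1 within searchRad of (px, py)
    // and marks it as claimed (value 2). Returns null when no candidate is found.
    static int[] findClosest(int[][] cells, int px, int py, int searchRad) {
        int bestX = -1, bestY = -1;
        long bestD2 = Long.MAX_VALUE;
        for (int dy = -searchRad; dy <= searchRad; dy++) {
            for (int dx = -searchRad; dx <= searchRad; dx++) {
                if (dx == 0 && dy == 0) continue; // never link a cell to itself
                int x = px + dx, y = py + dy;
                if (x < 0 || y < 0 || x >= cells.length || y >= cells[0].length) continue;
                if (cells[x][y] != 1) continue; // empty or already claimed
                long d2 = (long) dx * dx + (long) dy * dy;
                if (d2 < bestD2) {
                    bestD2 = d2;
                    bestX = x;
                    bestY = y;
                }
            }
        }
        if (bestX < 0) return null;
        cells[bestX][bestY] = 2; // claim it so the next search skips this cell
        return new int[] {bestX, bestY};
    }
}
```

Calling it repeatedly from the same cell walks outward through progressively farther neighbors, which is roughly why the loop of 20 attempts per cell in my sketch ends up fanning lines to several nearby points.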