Miles: Looking Outwards & Project Ideas

inForm – Tangible Media Group at MIT Media Lab

inFORM – Interacting With a Dynamic Shape Display from Tangible Media Group on Vimeo.

inForm is a Dynamic Shape (shape-shifting) Display by the Tangible Media Group at MIT Media Lab. It is a step towards the group’s vision of “Radical Atoms”, or materials that change their physical form to reflect an underlying digital model. The documentation video is fairly comprehensive. It includes demos in which the display manipulates physical objects, visualizes data and responds to events like phone calls. inForm reminds me of the pinpoint impression toy (pin art) that I used to play with as a child.

I think this project has enormous potential to make the abstract tangible. One could use it to visualize trigonometric functions, or to represent data collected in an experiment. It also has architectural implications: if inForm were installed in the floor of a room, the room itself could dynamically shapeshift.
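To make the trigonometry idea concrete, here is a minimal Python sketch that samples a function such as sin(x)·cos(y) on a square grid and rescales the values to pin heights. The 30 × 30 grid and the 0 to 100 height range are assumptions for illustration; this is not inForm’s actual control software or interface.

```python
import math

# Assumed display parameters, for illustration only (not inForm's real API).
GRID_SIZE = 30     # pins per side
MAX_HEIGHT = 100   # arbitrary height units for a fully raised pin


def heights_from_function(f, size=GRID_SIZE, max_height=MAX_HEIGHT,
                          x_range=(-math.pi, math.pi),
                          y_range=(-math.pi, math.pi)):
    """Sample f(x, y) on a size x size grid and rescale to 0..max_height."""
    (x0, x1), (y0, y1) = x_range, y_range
    # samples[j][i] holds f at the i-th x position and j-th y position.
    samples = [
        [f(x0 + (x1 - x0) * i / (size - 1), y0 + (y1 - y0) * j / (size - 1))
         for i in range(size)]
        for j in range(size)
    ]
    lo = min(min(row) for row in samples)
    hi = max(max(row) for row in samples)
    span = hi - lo or 1.0  # a flat function would otherwise divide by zero
    return [[round((v - lo) / span * max_height) for v in row]
            for row in samples]


if __name__ == "__main__":
    grid = heights_from_function(lambda x, y: math.sin(x) * math.cos(y))
    for row in grid[::6]:  # print a coarse preview of the surface
        print(row[::6])
```

Rescaling to the function’s own minimum and maximum means any function uses the display’s full range of travel; a fixed scale would be the better choice for comparing several functions side by side.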

“Face Visualizer”, “Face Instrument” – Daito Manabe

From Daito’s description of the project:

‘I got inspired “we can make fake smile with sending electric stimulation signals from computer to face, but NO ONE can make real smile without humans emotion”. This is words from Mr. Teruoka who is my collaborator to make devices.’

The notion of a “fake smile” is the impetus for my “Say Cheese” proposal below.

It’s interesting to conceive of the face as a means of visualizing emotional data. Daito’s project focuses on the performative aspect of the face, and the uncanny reality that a computer can manipulate a face with surgical precision.

I’m enthralled by the idea that facial expressions can be quantified and deployed on a face. It raises possibilities for cyborg theatre, performance art and retail technology.

Here are two project ideas that I thought of:

Consider a video game in which an alien jellyfish attaches itself to your character’s face, then proceeds to take control of your real-life face.

Empathy Mirror: First, FaceOSC detects the facial expression of a person standing across from you. It then sends that data to a microcontroller attached to a series of electrodes on your face, which contort your face into the other person’s expression.
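The FaceOSC-to-microcontroller step could look something like the Python sketch below. It decodes a raw OSC message using only the standard library and maps one gesture value onto an abstract 0 to 255 level that the microcontroller would translate into stimulation. Several things here are assumptions: that FaceOSC reports mouth width under `/gesture/mouth/width` on UDP port 8338, the calibration numbers, and the helper names, which are all hypothetical. The level is deliberately unitless and says nothing about actual current.

```python
import socket
import struct

# Assumption: FaceOSC publishes mouth width at this OSC address.
MOUTH_WIDTH_ADDRESS = "/gesture/mouth/width"


def _pad(raw):
    """Null-terminate bytes and zero-pad to a multiple of 4 (OSC 1.0 strings)."""
    raw += b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def build_message(address, *floats):
    """Encode an OSC message whose arguments are all float32 (handy for testing)."""
    tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", v) for v in floats)
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def _read_string(data, offset):
    end = data.index(b"\x00", offset)
    # Skip the terminator and padding up to the next 4-byte boundary.
    return data[offset:end].decode("ascii"), (end + 4) & ~3


def parse_message(data):
    """Decode an OSC message with float32 ('f') and int32 ('i') arguments."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in tags[1:]:
        if tag not in "fi":
            raise ValueError(f"unsupported OSC type tag: {tag}")
        (value,) = struct.unpack(">" + tag, data[offset:offset + 4])
        args.append(value)
        offset += 4
    return address, args


def stimulation_level(width, neutral, full, max_level=255):
    """Map a mouth-width reading linearly onto 0..max_level, clamped at both ends.

    `neutral` and `full` are per-person calibration readings (hypothetical).
    """
    if full <= neutral:
        raise ValueError("full must exceed neutral")
    fraction = (width - neutral) / (full - neutral)
    return round(min(max(fraction, 0.0), 1.0) * max_level)


def listen(port=8338, neutral=12.0, full=16.0):
    """Print a level for each mouth-width message (port and calibration assumed)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("127.0.0.1", port))
    while True:
        data, _ = sock.recvfrom(1024)
        try:
            address, args = parse_message(data)
        except (ValueError, struct.error):
            continue  # ignore bundles or malformed packets
        if address == MOUTH_WIDTH_ADDRESS and args:
            # In the real device this value would go to the microcontroller.
            print(stimulation_level(args[0], neutral, full))
```

Clamping the output matters more than the exact mapping: whatever the incoming reading, the level handed to the microcontroller never leaves its calibrated range.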

MOSS, The Dynamic Robot Constructor – Modular Robotics

The MOSS Kickstarter has, at the time of this writing, raised $252,042 – more than $100,000 over the original goal. It still has 20 days to go.

MOSS is the next iteration of Modular Robotics’ previous product, Cubelets. MOSS is a construction kit for building robots from magnetically connectable cubes and other components. One can combine them to build a practically unlimited variety of tiny robots, no coding required. Additionally, because the cubes pass power and data to one another, there is no need to program or charge each one individually.

It’s clear from the success of the Kickstarter campaign that MOSS has the potential to make robotics more accessible than ever before. However, I’m concerned about the viability of a system in which coding isn’t an option. To what extent does this approach preclude complex designs/behaviors?

In any case, I’m excited to see how MOSS develops.

Project idea 1: Say Cheese

For far too long I have suppressed a burning hatred for cultural situations which require smiling. But I have had to “grin” and bear it: the ability to smile on command is a vital skill in America.

Recent immigrants and tourists might not be familiar with American smiling conventions. They might be shocked to find, for instance, that their neutral expression is interpreted as a sign of distress.

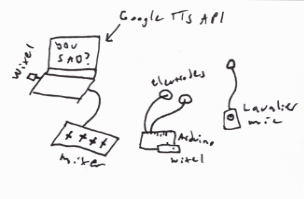

I propose a product called “Say Cheese” with these groups in mind. Say Cheese is a device consisting of a lavalier microphone, two electrodes, a wireless receiver (Pololu Wixel), and an Arduino microcontroller.

Using Google’s speech recognition (speech-to-text) API, it listens for certain key phrases:

• Say cheese

• You okay?

• What’s wrong?

• Are you depressed?

• You should really smile more

• Smile!

Each of these phrases prompts the device to send a current through the two electrodes, which are attached to the user’s face.
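Once the speech API returns a transcript, spotting the phrases above is plain string matching. The Python sketch below assumes the recognizer hands back ordinary text; the transcription call and the signal to the Arduino are not shown, and the normalization scheme is my own simple choice rather than anything the API provides.

```python
import re

# The trigger phrases listed above, in normalized form. Longer phrases come
# first so "you should really smile more" wins over the bare "smile".
TRIGGER_PHRASES = [
    "say cheese",
    "you okay",
    "whats wrong",
    "are you depressed",
    "you should really smile more",
    "smile",
]


def normalize(text):
    """Lowercase, drop apostrophes, and reduce punctuation to single spaces."""
    text = text.lower().replace("\u2019", "").replace("'", "")
    return " ".join(re.findall(r"[a-z0-9]+", text))


def find_trigger(transcript):
    """Return the first trigger phrase found in a transcript, or None.

    Matching is on whole words, so "smiley" does not count as "smile".
    """
    padded = f" {normalize(transcript)} "
    for phrase in TRIGGER_PHRASES:
        if f" {phrase} " in padded:
            return phrase
    return None
```

For example, `find_trigger("What's wrong?")` returns `"whats wrong"`, at which point the device would tell the Arduino to fire the electrodes.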

French neurologist Duchenne de Boulogne (1806 – 1875) experimented with electrically induced facial expressions

Regarding style, I figure that electrodes can’t be much worse than earbuds. Plus, wearing a Say Cheese signals an earnest desire to assimilate to Our Way.

Variation 1: Clerk Control, a means of enforcing customer relations standards in the retail sector. The phrase “thank you, have a nice day” could trigger a wide, toothy grin.

Variation 2: Empathy Mirror. As described in my Looking Outwards above, the Empathy Mirror matches your expression to that of another person (detected with FaceOSC).

Project idea 2: Turn on, tune in

Alarm Gates

Every time I pass through the alarm gates to exit Hunt Library, I hear a shrill, high-pitched squeal, but only when I’m listening to music on earbuds. I was curious about this phenomenon, so I asked about it on a sound design forum. Here is what someone had to say:

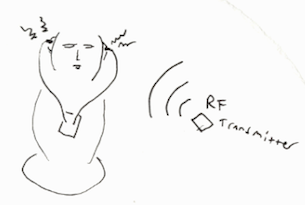

“Basically your earbuds’ cables act as an antenna and pick up the RF signal sent out by the gates to check for tags passing (which when present cause a specific signal to be picked up by the receiver in the gates which in turn triggers the alarm).

To “exploit” this all you need is a radio transmitter and a receiver ;)”

– André Engelhardt, Sound Design on Stack Exchange

I’m fascinated by the prospect of invading someone’s private musical space, even with a modest squeal. I need to do more research to find out exactly how this could be implemented, but ideally the radio transmitter would be small and portable. Walking around with it would be like emitting an aural scent to anyone in range (anyone wearing wired headphones, that is).

Backup idea: Breath Graph

Airflow sensor from Cooking Hacks

Controlled breathing is a crucial skill in many activities, meditation and singing to name two. While it is possible to observe one’s breathing in the moment, it is difficult to notice gradual trends. The Breath Graph is a simple device that produces a history of breathing over the course of an activity: a breath graph.

It uses an airflow sensor to measure airflow rate from the nostrils, and a thermal printer to print a breath graph – a line graph with airflow rate on the y axis and time on the x axis – when the session ends.
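Turning a session’s worth of sensor readings into something a thermal printer can print mostly means averaging many samples down to a fixed number of columns. The Python sketch below does that and renders a simple dot-plot approximation of the line graph as rows of text. The 32-column default is a guess at a small receipt printer’s character width, and reading the sensor and sending rows to the printer are left out, since those depend on the specific hardware.

```python
def downsample(samples, width):
    """Average raw airflow samples into `width` equal-sized time buckets."""
    if not samples:
        return []
    width = min(width, len(samples))  # never more columns than samples
    buckets = []
    for i in range(width):
        start = i * len(samples) // width
        end = (i + 1) * len(samples) // width
        chunk = samples[start:end]
        buckets.append(sum(chunk) / len(chunk))
    return buckets


def render_graph(samples, width=32, height=8):
    """Render airflow (y axis) against time (x axis) as rows of text, top row first."""
    columns = downsample(samples, width)
    if not columns:
        return []
    lo, hi = min(columns), max(columns)
    span = hi - lo or 1.0  # constant airflow would otherwise divide by zero
    # Map each column's average airflow to a row index, 0 = lowest row.
    levels = [round((v - lo) / span * (height - 1)) for v in columns]
    rows = []
    for r in range(height - 1, -1, -1):  # print from the top row down
        rows.append("".join("*" if level == r else " " for level in levels))
    return rows
```

Averaging within each bucket doubles as smoothing: individual breaths blur together, which is what makes the slow trends the Breath Graph is meant to reveal stand out.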