Demonstrations of movement silencing

Thought I would share this for everyone.

http://visionlab.harvard.edu/silencing/

Alex and I were thinking of doing something related to manipulating noise/particles in a 3D space. Some sort of noise (Perlin noise?) would drift randomly through the space, and when the user steps into the scene, the noise would interact with them in some way. The user could also use gestures to control the noise, much as the Jedi manipulate the Force in Star Wars.

It would be interesting to observe two people “duking it out” in our application by throwing and manipulating the particles around them.

A quick idea for interacting with computer vision.

What if life were a comic book? Interactions between people take place through drawn onomatopoeia balloons. Thought bubbles are generated above stationary people, while the microphone knows when to create speech bubbles. Contact generates “Pow,” “bang,” and “boom!” The bubbles and contact effects are drawn at the correct size and in the correct context according to each person’s depth.
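The depth-dependent sizing could be sketched with the pinhole-camera rule that apparent size is inversely proportional to distance. This is a hypothetical Java sketch assuming the Kinect supplies each person’s depth in millimetres; the 2 m reference depth is an assumed value, and drawing farther bubbles first (painter’s algorithm) lets nearer ones overlap them.

```java
import java.util.Arrays;

// Sketch: size comic bubbles by depth and draw them back to front.
// REFERENCE_DEPTH_MM is hypothetical: a person at this depth gets a bubble at scale 1.0.
final class BubbleScale {
    static final double REFERENCE_DEPTH_MM = 2000.0;

    /** Apparent size is inversely proportional to depth under a pinhole camera model. */
    static double scaleForDepth(double depthMm) {
        if (depthMm <= 0) throw new IllegalArgumentException("depth must be positive");
        return REFERENCE_DEPTH_MM / depthMm;
    }

    /** Indices of people sorted farthest-first, so nearer bubbles are drawn on top. */
    static Integer[] drawOrder(double[] depthsMm) {
        Integer[] idx = new Integer[depthsMm.length];
        for (int i = 0; i < idx.length; i++) idx[i] = i;
        Arrays.sort(idx, (a, b) -> Double.compare(depthsMm[b], depthsMm[a]));
        return idx;
    }

    public static void main(String[] args) {
        System.out.println(scaleForDepth(1000)); // 1 m away: twice the reference size
        System.out.println(Arrays.toString(drawOrder(new double[] {1500, 3000, 800})));
    }
}
```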

For my project, I would like to create a site-specific installation in which, when a person walks into a space, one spotlight begins to follow them and another runs away from them. I hope this would encourage the person to play with the two lights, trying to get one to touch the other.

When I spoke to Golan, he suggested I look at Marie Sester’s project Access, which is very similar to what I would like to do. In Access, however, a user on the internet controls the spotlight and also beams audio to the person caught in it, and the piece is more of a commentary on surveillance. In my project, I would instead like to focus on facilitating unexpected interaction: participants do not know that they have entered an altered space until the light starts to follow them. I would also like it to feel game-like.
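The two lights’ behaviour is essentially seek and flee steering. A minimal per-frame Java sketch, assuming a tracker already reports the person’s floor position in centimetres; the speed and floor dimensions are hypothetical values.

```java
// Sketch: one spotlight seeks the tracked person, the other flees; both stay on the floor.
// SPEED, FLOOR_W and FLOOR_H are hypothetical values in centimetres.
final class Spotlights {
    static final double SPEED = 20;                   // max movement per frame
    static final double FLOOR_W = 500, FLOOR_H = 400; // usable floor area

    /** New {x, y} for a light at (x, y), moving toward (follow) or away from the person at (px, py). */
    static double[] step(double x, double y, double px, double py, boolean follow) {
        double dx = px - x, dy = py - y;
        double d = Math.hypot(dx, dy);
        if (d < 1e-9) return new double[] { x, y }; // direction undefined; stay put
        // The follower never overshoots the person; the fleeing light always moves at full speed.
        double s = follow ? Math.min(SPEED, d) : -SPEED;
        return new double[] { clamp(x + dx / d * s, 0, FLOOR_W), clamp(y + dy / d * s, 0, FLOOR_H) };
    }

    static double clamp(double v, double lo, double hi) {
        return Math.max(lo, Math.min(hi, v));
    }

    public static void main(String[] args) {
        double[] chaser = step(0, 0, 100, 0, true);
        double[] shy = step(250, 200, 260, 200, false);
        System.out.println(chaser[0] + "," + chaser[1] + "  " + shy[0] + "," + shy[1]);
    }
}
```

Because the fleeing light is clamped to the floor, a visitor can corner it, which arguably suits the game-like goal.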

Drawing and moving in a world via hand gestures.

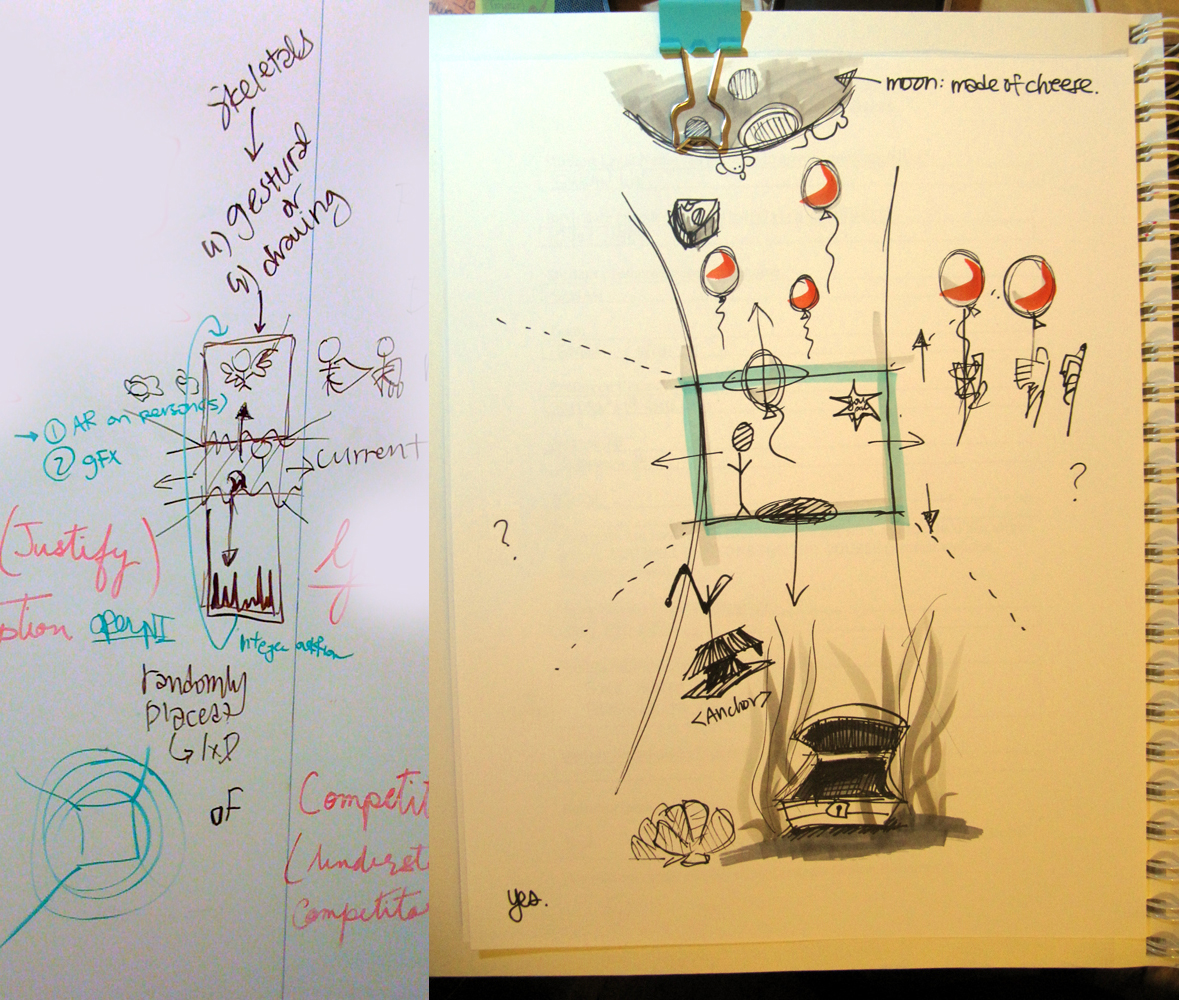

This idea allows users to fly upwards (and ideally, if time permits, downwards, and then in all 360 degrees) using hand gestures on the Kinect. Given the right hand gesture, the user will generate a balloon and float up into the sky. The sky will be drawn and/or generated as the user floats, and scrolling will simulate the flight.

We aren’t 100% sure what the final product will be; it will really depend on the finesse of the gesture detection. Possibilities include a game where you dodge or collect items in the sky, or a fleshed-out virtual world to explore.

We do know that it isn’t impossible to detect fingers:

This idea was inspired by the site of Volll, a European design firm that came up with a hard-to-hate layout and navigation scheme.
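On the finger-detection point, one common approach (not necessarily what the demo mentioned above uses) is to take the convex hull of the hand’s pixels and keep hull vertices that lie far from the palm centre. A Java sketch assuming the hand has already been segmented, e.g. by a depth threshold, and its centre estimated; the distance threshold is a hypothetical parameter.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch: fingertip candidates = convex-hull vertices far from the palm centre.
final class Fingertips {
    /** Cross product of OA x OB; positive means a counter-clockwise turn. */
    static long cross(int[] o, int[] a, int[] b) {
        return (long) (a[0] - o[0]) * (b[1] - o[1]) - (long) (a[1] - o[1]) * (b[0] - o[0]);
    }

    /** Andrew's monotone chain; returns hull vertices counter-clockwise without repeating the first. */
    static List<int[]> convexHull(List<int[]> pts) {
        int[][] p = pts.toArray(new int[0][]);
        Arrays.sort(p, (a, b) -> a[0] != b[0] ? Integer.compare(a[0], b[0]) : Integer.compare(a[1], b[1]));
        int n = p.length;
        if (n < 3) return new ArrayList<>(Arrays.asList(p));
        int[][] h = new int[2 * n][];
        int k = 0;
        for (int i = 0; i < n; i++) {                  // lower hull
            while (k >= 2 && cross(h[k - 2], h[k - 1], p[i]) <= 0) k--;
            h[k++] = p[i];
        }
        for (int i = n - 2, t = k + 1; i >= 0; i--) {  // upper hull
            while (k >= t && cross(h[k - 2], h[k - 1], p[i]) <= 0) k--;
            h[k++] = p[i];
        }
        return new ArrayList<>(Arrays.asList(h).subList(0, k - 1));
    }

    /** Hull vertices farther than minDist (hypothetical threshold) from the palm centre (cx, cy). */
    static List<int[]> candidates(List<int[]> handPixels, double cx, double cy, double minDist) {
        List<int[]> out = new ArrayList<>();
        for (int[] v : convexHull(handPixels))
            if (Math.hypot(v[0] - cx, v[1] - cy) > minDist) out.add(v);
        return out;
    }

    public static void main(String[] args) {
        // A square "palm" plus one extended "finger" pixel at (5, 30).
        List<int[]> hand = List.of(new int[] {0, 0}, new int[] {10, 0}, new int[] {10, 10},
                                   new int[] {0, 10}, new int[] {5, 5}, new int[] {5, 30});
        for (int[] tip : candidates(hand, 5, 5, 15)) System.out.println(tip[0] + "," + tip[1]);
    }
}
```

A real hand would need smoothing and a threshold scaled to the hand’s size; spurious hull vertices at the wrist are a known weakness of this approach.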

—

Excited for this upcoming collaboration with Chong Han.

Tim and I have two ideas. First, we have a small box containing sand, clay, blocks, or some other familiar, plastic medium. A Kinect watches the box from overhead and gets a heightmap of whatever the participant creates. A program then renders a natural-looking landscape from the terrain, possibly including flora and fauna, which is projected near the sandbox.
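The heightmap step could look like this: with the Kinect looking straight down, height above the box floor is the floor’s depth minus each pixel’s depth. A Java sketch assuming depth in millimetres with invalid readings reported as 0; the floor distance and the elevation bands used to pick terrain types are hypothetical values to measure and tune.

```java
import java.util.Arrays;

// Sketch: overhead depth frame (mm) -> terrain heights -> coarse terrain labels.
final class Sandbox {
    static final int TABLE_DEPTH_MM = 1000; // hypothetical Kinect-to-box-floor distance

    /** Height above the box floor; invalid (0) and below-floor readings become 0. */
    static int[] heights(int[] depthMm) {
        int[] h = new int[depthMm.length];
        for (int i = 0; i < depthMm.length; i++) {
            int d = depthMm[i];
            h[i] = (d <= 0) ? 0 : Math.max(0, TABLE_DEPTH_MM - d);
        }
        return h;
    }

    enum Biome { WATER, GRASS, FOREST, ROCK, SNOW }

    /** Hypothetical elevation bands in millimetres. */
    static Biome biome(int heightMm) {
        if (heightMm < 10) return Biome.WATER;
        if (heightMm < 40) return Biome.GRASS;
        if (heightMm < 80) return Biome.FOREST;
        if (heightMm < 120) return Biome.ROCK;
        return Biome.SNOW;
    }

    public static void main(String[] args) {
        int[] h = heights(new int[] {1000, 950, 0, 880});
        System.out.println(Arrays.toString(h));
        for (int v : h) System.out.println(biome(v));
    }
}
```

The renderer would then place flora and fauna per band; smoothing the heights first would hide sensor noise.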

Second, a Kinect watches a crowd of people and a program generates thought balloons above their heads. Thoughts would be selected based on observable qualities of each person, like height, gesture, and proximity to other people. Thoughts could be humorous or invasive.

So it seems really apparent at the moment how little technical kung fu we actually know. We’ve gotten the Kinect up and running in Jitter, but we haven’t the slightest idea how to get it to do anything, other than play with the camera pitch control, which is surprisingly amusing. Scouring forums has proved only that, although there is a lot of information out there on this topic, virtually none of it is explained. It’s as if people got past a certain complexity level and then went back and erased all evidence of the basics. Congrats, Max/MSP community: this shit is Nintendo hard.

With the elephant in the room being our inherent noob-saucery in this field, we need to go forward and think about how we’re going to either learn some chops or deal with what we have. It looks like we’re going to have to learn (be taught?) a very large variety of things damned quickly.

The bitch of all this, at least in the planning department, is that we are working with a tool whose abilities and limits we don’t quite know. The feasibility of ideas can’t be assessed without knowing what boundaries we’re operating within. All this notwithstanding… some basic ideas.

We want to make a puppet. We want to learn OSC so that we can get the Kinect working with Max, which this guy says can work with Animata. We thought about using Animata to create some sort of puppet, but then realized it would basically just be using the software for its intended purpose, and that’s nothing unique. I think the direction we want to go in is to have bodily actions control some sort of non-human puppet. Controlling a humanoid puppet with a human body gives you a 1:1 mapping: your arms control the puppet’s arms, your legs control the puppet’s legs, and so on. If we make a non-humanoid puppet, we force the audience to figure out how to control it instead of automatically assuming a 1:1 mapping between input and output (am I making any sense? This is rather tough to explain).

tl;dr:

TECHNICAL

CONCEPT: A puppet that is not a direct human analog.

…Gonna be a long couple weeks.

Honray and I were talking about simulating a constantly swirling force or wind that the user can manipulate with their movements, perhaps featuring a three-dimensional Perlin noise effect, or a flocking simulation that would swarm around or avoid the user. It would recognize certain movements, such as making a sphere with your hands, and respond accordingly.
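A minimal sketch of the “swirl that avoids the user” idea, written in plain Java (the language Processing builds on). It assumes the user’s position arrives as a 2D point, for example a Kinect blob centroid, and it uses a simple circular swirl in place of 3D Perlin noise or a full flocking model; the constants are hypothetical tuning values.

```java
// Sketch: constant swirl around a centre plus repulsion from the user.
// SWIRL, AVOID_RADIUS and AVOID_STRENGTH are hypothetical tuning values.
final class SwirlField {
    static final double SWIRL = 0.005;      // angular speed factor (per frame)
    static final double AVOID_RADIUS = 100; // pixels within which the user repels particles
    static final double AVOID_STRENGTH = 5; // maximum push (pixels per frame)

    /** Velocity {vx, vy} of a particle at (px, py) for swirl centre (cx, cy) and user (ux, uy). */
    static double[] velocity(double px, double py, double cx, double cy, double ux, double uy) {
        // Swirl: the offset from the centre rotated by 90 degrees gives a tangential velocity.
        double vx = -(py - cy) * SWIRL;
        double vy = (px - cx) * SWIRL;
        // Avoidance: push straight away from the user, fading linearly to 0 at AVOID_RADIUS.
        double dx = px - ux, dy = py - uy;
        double d = Math.hypot(dx, dy);
        if (d > 1e-9 && d < AVOID_RADIUS) {
            double push = AVOID_STRENGTH * (1 - d / AVOID_RADIUS);
            vx += dx / d * push;
            vy += dy / d * push;
        }
        return new double[] { vx, vy };
    }

    public static void main(String[] args) {
        double[] v = velocity(300, 200, 200, 200, 290, 200);
        System.out.println(v[0] + ", " + v[1]);
    }
}
```

Each frame, a particle’s position would be advanced by the returned velocity; a noise term (e.g. from Processing’s `noise()`) could be added to the same velocity to make the drift less regular.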

I was interested in the fact that even though the Kinect is designed to represent three-dimensional space, it technically captures a single surface from one viewpoint (one depth value per pixel), leaving a hollow area behind the face you can see. I was thinking about creating a graphic that emerges from these hollow negative spaces, so that you’d only catch glimpses of it from a straight-on view, but rotating the scene reveals it. Playing with the same concept, I also thought of an app where you could paint on your face or body, like the Invisible Man: only the portions of you touched by your hands would become visible on screen, and you could observe your “hollow” body.
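The hollowness follows from how depth pixels become 3D points: each pixel back-projects through a pinhole camera model to exactly one point on the nearest surface, so nothing behind that surface is ever sampled. A Java sketch with rough, uncalibrated intrinsics assumed for a 640×480 depth image, plus a hypothetical helper for the “invisible man” painting idea that reveals body pixels near the hand.

```java
import java.util.Arrays;

// Sketch: depth pixel -> single 3D point (why the capture is hollow), plus reveal-by-touch.
final class DepthCloud {
    static final double FX = 580, FY = 580, CX = 320, CY = 240; // assumed, not calibrated

    /** Pinhole back-projection of pixel (u, v) with depth z (mm) to camera-space {x, y, z} in mm. */
    static double[] toPoint(int u, int v, double z) {
        return new double[] { (u - CX) * z / FX, (v - CY) * z / FY, z };
    }

    /** Marks body pixels within radius of the hand pixel (handU, handV) as permanently revealed. */
    static void reveal(boolean[] revealed, boolean[] isBody, int w, int h,
                       int handU, int handV, int radius) {
        for (int v = Math.max(0, handV - radius); v <= Math.min(h - 1, handV + radius); v++)
            for (int u = Math.max(0, handU - radius); u <= Math.min(w - 1, handU + radius); u++) {
                int du = u - handU, dv = v - handV;
                int i = v * w + u;
                if (isBody[i] && du * du + dv * dv <= radius * radius) revealed[i] = true;
            }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(toPoint(900, 240, 1160)));
    }
}
```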

For Fall/Winter 2010, Chanel not only hosted one of the most expensive runway shows of the season but also managed to create the most ridiculous garment ever to grace a runway. The Chanel “Wookie Suit” could be interestingly recreated using the Kinect. I was looking at the Esotera Processing example and thinking that you could extend every pixel in the group of pixels that comprise the person to create a simple “Wookifier”, so that you too can try on the world’s ugliest suit from home.
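One reading of “extend every pixel”: each pixel in the user mask sprouts a strand that starts at its depth and reaches toward the camera. This is a hypothetical Java sketch, not the Processing example’s code; it assumes a per-pixel user mask and depth in millimetres, and the strand length and the hash used for per-pixel jitter are illustrative choices.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch "Wookifier": every step-th user pixel gets a strand extruded toward the camera.
final class Wookifier {
    /** Strands as {u, v, zStart, zEnd}; zEnd < zStart because smaller depth is closer to the camera. */
    static List<double[]> strands(boolean[] userMask, int[] depthMm, int w, int step, double baseLen) {
        List<double[]> out = new ArrayList<>();
        for (int i = 0; i < userMask.length; i += step) {
            if (!userMask[i] || depthMm[i] <= 0) continue; // skip background and invalid depth
            // Deterministic per-pixel jitter in [0, 1) so the fur doesn't flicker between frames.
            double jitter = ((i * 0x9E3779B97F4A7C15L) >>> 40) / (double) (1L << 24);
            double len = baseLen * (0.5 + jitter);
            out.add(new double[] { i % w, i / w, depthMm[i], depthMm[i] - len });
        }
        return out;
    }

    public static void main(String[] args) {
        List<double[]> s = strands(new boolean[] {true, false, true, true},
                                   new int[] {1000, 1000, 0, 900}, 2, 1, 100);
        for (double[] t : s) System.out.println(t[0] + "," + t[1] + ": " + t[2] + " -> " + t[3]);
    }
}
```

Each strand would then be drawn as a line between its two 3D points; a larger `step` trades fur density for frame rate.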

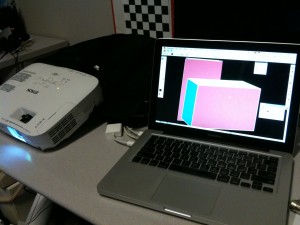

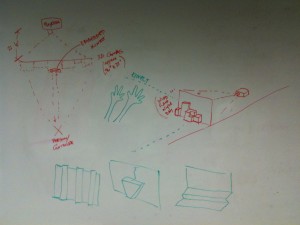

We want to explore projection mapping and the Kinect. We started working on the project by thinking about places where we could project something, the content of the projection and different interaction modalities.

We then moved on to some testing! We brought a projector and some boxes and modeled how they looked in Illustrator. This was a completely ad hoc approach, but it gave us some insight into how things could look…

We ended up being interested in a small-scale projection, where the projector is placed behind some sort of 3D canvas. We thought we could place the Kinect right in front of this canvas so that it captures the viewers. Our current idea is to change the content of the projection to show rainy, windy, sunny, or dark scenarios, using hand gesture recognition for the interaction (e.g. if the user moves their hands down, night falls…).
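The “hands down, night falls” gesture could be detected by watching the hand’s vertical position over a short window of frames. A Java sketch assuming a hand tracker reports the hand’s y coordinate in image space (y grows downward); the window length and drop threshold are hypothetical.

```java
import java.util.ArrayDeque;

// Sketch: report a downward swipe when the hand drops at least MIN_DROP pixels within WINDOW frames.
final class SwipeDownDetector {
    static final int WINDOW = 10;       // hypothetical: about 1/3 s at 30 fps
    static final double MIN_DROP = 150; // hypothetical, in pixels

    private final ArrayDeque<Double> ys = new ArrayDeque<>();

    /** Feed one hand y per frame; returns true on the frame the swipe is recognised. */
    boolean update(double handY) {
        ys.addLast(handY);
        if (ys.size() > WINDOW) ys.removeFirst();
        double highest = Double.MAX_VALUE; // smallest y = highest hand position in the window
        for (double y : ys) highest = Math.min(highest, y);
        if (handY - highest >= MIN_DROP) {
            ys.clear(); // avoid firing again on the next frames of the same swipe
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        SwipeDownDetector d = new SwipeDownDetector();
        for (double y : new double[] {100, 150, 200, 260}) System.out.println(y + " -> " + d.update(y));
    }
}
```

When `update` returns true, the projection would switch to the night scene; similar detectors with other directions could drive the rainy, windy, and sunny scenes.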

The original incarnation of this project was inspired by the Good Morning! Twitter visualization created by Jer Thorp. A demonstration of CMU network traffic flows, it would show causal links for the available snapshot of the network traffic. All internal IP addresses had to be anonymized, making the internal traffic less meaningful. Focusing only on traffic with an endpoint outside of CMU was interesting, but distribution tended towards obeying the law of large numbers, albeit with a probability density function that favored Pittsburgh.

This forced me to consider what made network traffic interesting and valuable, and I settled on collecting my own HTTP traffic in real time using tcpdump. I deliberately left out HTTPS traffic, whose encrypted payloads can’t be read, so that I could analyze the packet contents and extract the host, content type, and content length. Represented appropriately, those three items can provide an excellent picture of personal web traffic.

The visualization has two major components: collection and representation. Collection is performed by a bash script that calls tcpdump and passes the output to sed and awk for parsing; the parsed data is inserted into a MySQL database. Representation is handled by Processing, using its MySQL and JBox2D libraries.
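The post does this extraction with sed and awk; for comparison, the same three fields could be pulled from a captured header block in Java. A sketch assuming the header text has already been reassembled from tcpdump’s output (a header block split across packets is ignored here). Host is a request header, while Content-Type and Content-Length come from the response; header names are case-insensitive in HTTP, so they are lower-cased before matching.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Sketch: pull Host, Content-Type and Content-Length out of an HTTP header block.
final class HttpFields {
    static Map<String, String> parse(String headerBlock) {
        Map<String, String> fields = new HashMap<>();
        for (String line : headerBlock.split("\r?\n")) {
            int colon = line.indexOf(':');
            if (colon <= 0) continue; // request/status line or blank line
            String name = line.substring(0, colon).trim().toLowerCase(Locale.ROOT);
            if (name.equals("host") || name.equals("content-type") || name.equals("content-length"))
                fields.put(name, line.substring(colon + 1).trim());
        }
        return fields;
    }

    public static void main(String[] args) {
        System.out.println(parse("GET / HTTP/1.1\r\nHost: www.cmu.edu\r\n\r\n"));
        System.out.println(parse("HTTP/1.1 200 OK\r\nContent-Type: text/css\r\nContent-Length: 2048\r\n\r\n"));
    }
}
```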

Each bubble is a single burst of inbound traffic, e.g. an HTML, CSS, JavaScript, or image file. The size of a bubble is a function of its content size, to show how much of the tube it takes up relative to other site elements. Visiting a low-bandwidth site multiple times increases the number of its bubbles, so their total area can approach and even exceed the area of bubbles from fewer visits to a bandwidth-intensive site. Bubbles are labeled by host and colored by the top-level domain of the host. In retrospect, a better coloring scheme would have been the content type of the traffic. The closer a bubble is to the center, the more recently its element was fetched; elements decay as they approach the outer edge.

The example above shows site visits to www.cs.cmu.edu, www.facebook.com (and by extension, static.ak.fbcdn.net), www.activitiesboard.org, and finally www.cmu.edu, in that order.
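The bubble geometry described above can be summarized in a small Java sketch. The radius matches the post’s `createCircle` call, sqrt(length / PI), so a bubble’s area in px² equals its byte count. The post lets the physics simulation push older bubbles outward; this sketch instead maps age to distance directly, and `MAX_AGE_MS` and `maxRadius` are hypothetical.

```java
// Sketch: bubble radius from content length, and distance from the centre from age.
final class BubbleLayout {
    static final double MAX_AGE_MS = 60_000; // hypothetical: bubbles reach the edge after one minute

    /** pi * r^2 == contentLength, so area is proportional to bytes transferred. */
    static double radius(int contentLength) {
        return Math.sqrt(contentLength / Math.PI);
    }

    /** 0 at the centre for a just-fetched element, maxRadius once it is MAX_AGE_MS old. */
    static double distanceFromCentre(long ageMs, double maxRadius) {
        return maxRadius * Math.min(1.0, ageMs / MAX_AGE_MS);
    }

    /** Elements that have reached the edge can be removed from the physics world. */
    static boolean expired(long ageMs) {
        return ageMs >= MAX_AGE_MS;
    }

    public static void main(String[] args) {
        System.out.println(radius(1000));
        System.out.println(distanceFromCentre(30_000, 400));
    }
}
```

Because area equals byte count, ten visits to a page whose elements total 10 kB occupy the same total area as one visit to a 100 kB page, which is the effect the bubble paragraph describes.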

Drawing Circles

Create a circle in the middle of the canvas (offset by a little jitter on the x and y axes) with a radius that’s a function of the content length.

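```java
// i, j: small jitter offsets; column 5 = content length, so area is proportional to size.
Body new_body = physics.createCircle(width/2 + i, height/2 + j, sqrt(msql.getInt(5) / PI));
new_body.setUserData(msql.getString(4)); // column 4 = host name, used for the label
```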

Host Label

If the radius of the circle is sufficiently large, label it with the hostname.

```java
// Only label bubbles large enough to fit readable text.
if (radius > 7.0f) {
    textFont(metaBold, 15);
    text((String) body.getUserData(), wpoint.x - radius/2, wpoint.y);
}
```

tcpdump Processing

Feeding tcpdump input into sed

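```bash
#!/bin/bash
# -l line-buffers output so lines reach the loop immediately when piped
tcpdump -l -i wlan0 -An 'tcp port 80' | while read -r line; do
    if [[ `echo "$line" | sed -n '/Host:/p'` ]]; then
        # host name is the 2nd field; strings drops the trailing carriage return
        activehost=`echo "$line" | awk '{print $2}' | strings`
        ...
    fi
done
```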

Seinfelled

According to the creator, “A step by step tutorial on how to make a photorealistic computer generated rendering of a dream I had. In the dream I was drunk or dizzy and I stumbled around in Jerry’s apartment.”

This piece consists of rows of mirrors that respond to a user’s movement when the person moves close to them.

I particularly liked the different moods it creates when you interact with the piece. It almost feels as if an entity is following and watching you as you walk past the mirrors. Yet, when you stand in front of the piece and play with it, the mirrors “play” back to you in response.

It would be even better if the mirrors could move around in addition to changing their orientation. That would heighten the experience beyond what stationary mirrors can offer (imagine the mirrors following you when you walk away from them!).

https://www.youtube.com/user/MediaArtTube#p/c/6C1909B96FB79A2A/21/6rhKIdt4LSE

This piece consists of columns of drifting membranes that stop moving when a person stands in front of them.

I particularly liked this piece as a work of art; the layout and design of the membranes alone make it look like one. In addition, the interaction is very subtle and graceful: the membranes grind slowly to a halt as a user stands in front of them. This interaction closely models how viewers pause to gaze at art in order to appreciate it. When users simply walk past, the membranes don’t slow down, which forces users to pause and gaze at the piece in order to appreciate it.

This project uses wooden pieces to create a mirror that interacts with people as they walk in front of it. It uses 830 wooden pieces, each with its own motor, that rotate vertically and change their apparent color as the user walks in front of the mirror.

I found the project interesting because of its novel use of wood, a non-reflective material, to simulate a mirror. I am also surprised at how responsive the mirror is, despite its use of a mechanical motor for each wood piece. It is also very creative in its use of the variation in brightness when a wood piece is rotated under light.

I think the project would be really cool if lenticular pieces were used instead of wooden blocks. That way, the blocks would actually change color (instead of brightness) when they are rotated.
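The core mapping in a mirror like this can be sketched as: average the camera image over each tile’s cell, then turn that brightness into a tilt angle, since tilting changes how much light a wooden tile catches. This is a hypothetical Java illustration, not the artist’s code; the grid size, the servo range, and which direction reads as “brighter” are all assumptions.

```java
import java.util.Arrays;

// Sketch: grayscale frame -> per-tile mean brightness -> tilt angle for each tile's motor.
final class TileMirror {
    static final double MIN_ANGLE = -30, MAX_ANGLE = 30; // hypothetical servo range in degrees

    /** gray: w*h pixels in 0..255, row-major; returns cols*rows angles, row-major. */
    static double[] angles(int[] gray, int w, int h, int cols, int rows) {
        if (w < cols || h < rows) throw new IllegalArgumentException("image smaller than tile grid");
        double[] out = new double[cols * rows];
        for (int r = 0; r < rows; r++)
            for (int c = 0; c < cols; c++) {
                int x0 = c * w / cols, x1 = (c + 1) * w / cols;
                int y0 = r * h / rows, y1 = (r + 1) * h / rows;
                long sum = 0;
                for (int y = y0; y < y1; y++)
                    for (int x = x0; x < x1; x++) sum += gray[y * w + x];
                double mean = sum / (double) ((x1 - x0) * (y1 - y0));
                // Linear map: black -> MIN_ANGLE, white -> MAX_ANGLE.
                out[r * cols + c] = MIN_ANGLE + (MAX_ANGLE - MIN_ANGLE) * mean / 255.0;
            }
        return out;
    }

    public static void main(String[] args) {
        int[] frame = {0, 0, 255, 255, 0, 0, 255, 255}; // 4x2 image: dark left, bright right
        System.out.println(Arrays.toString(angles(frame, 4, 2, 2, 1)));
    }
}
```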

Laika Font – interactive kinetic type

I love how this piece explores new ways of creating type, even though it is limited in how it translates gestures into font characteristics. It’s a perfect next step in terms of typography. The first faces of movable type were based on the movements of handwritten script. It is only very recently that grotesque, humanist, and geometric fonts have become conventionally acceptable, as their lack of relationship to the human hand was originally found unpleasant. Allowing human, physical interaction to inform the creation of font characteristics in a different way could be quite inventive and beautiful. It could meld strong computation with the physical markmaking of the human body: what if you could dance a typeface, or a yoga position, or your pet ferret?

DaVinci by Razorfish

This game allows people to draw shapes that the program then recognizes and renders as weighted physical objects. Unlike Funky Forest, which provides a highly stylized environment, DaVinci creates shapes that are fairly direct translations of the participants’ gestures. As a paradigm for interaction, it is really lovely and suggestive of uses: creating games where participants have to move through obstacle courses, or drawing elaborate Rube Goldberg machines. This environment allows participants to create highly functional (though perhaps not useful) creations that act, with little overhead.

I learned about this project at the openFrameworks lectures earlier this semester, and responded to it because I love how it creates an immersive experience. The installation allows viewers to create and maintain a forest, where they can grow trees with their bodies, interact with flying animals on the walls, and move water on the ground. I appreciate the use of real, physical objects to interact with the simulated world, as well as physical gestures that nudge people to interact in ways they normally do not. Like my previous post, this project allows the viewer to participate in the creation process. There is a careful balance between constructed rules for the environment and interaction, and an open-ended environment that lets viewers interact as they feel.

1. Philip Worthington – Shadow Monsters, Responsive Environment 2005

This is definitely an impressive project. Creating shadow puppets is a great concept. I sometimes think it would be awesome to be able to control the shape of my shadow, and I am glad this project makes that partially come true. Maybe shadows could be used to create a fighting game?

2. Daniel Rozin – Mirrors Mirror, Motoristic Responsive Sculpture 2008

The idea of using tiny mirrors as pixels is smart, and the reflective property of the mirrors makes this project really fun. Could using a Kinect to track full-body movement make it more interesting? Or could the installation go beyond a 2D surface, like a distorting mirror?

3. Kinect Rubber Sheet