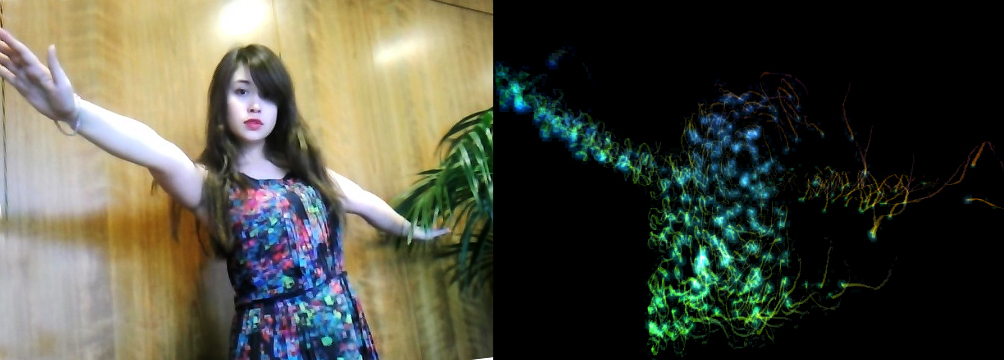

What if you could use hand gestures to control an audio visualization? Instead of relying on audio metrics like frequency and volume, you could base the visualization on the user’s interpretation of perceivable audio qualities. The end result would be a better reflection of the way that people feel about music.

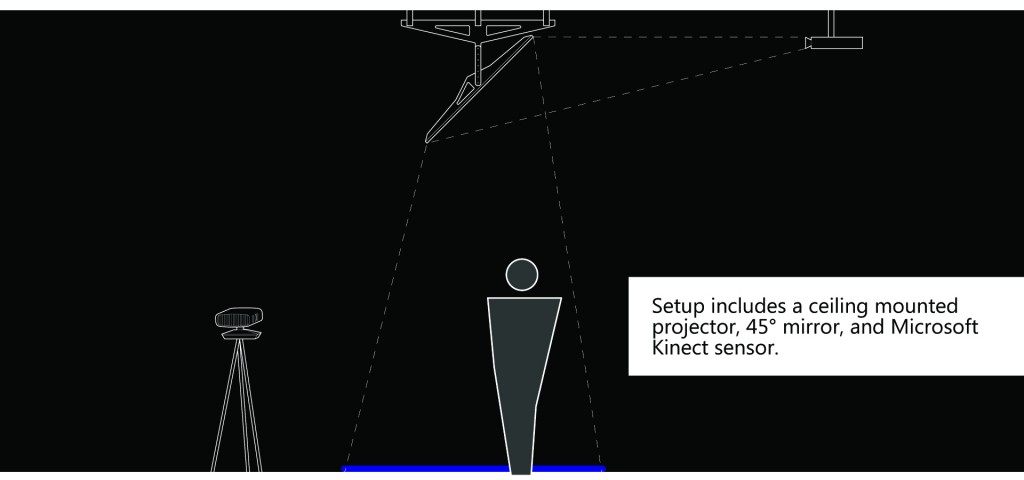

To investigate this, I wrote an OpenFrameworks application that uses depth data from the Kinect to identify hands in a scene. The information about the users’ hands – position, velocity, heading, and size – is used to create an interactive visualization with long-exposure motion trails and particle effects.

There were a number of challenges in this project. I started with Processing, but it was too slow to extract hands and render the point sprite effects I wanted. I switched to OpenFrameworks and started using OpenNI to extract a skeleton from the Kinect depth image. OpenNI worked well and extracted a full skeleton with wrists that could be tracked, but it was difficult to test because the skeletal detection took nearly a minute every time the visualization was tested. It got frustrating pretty quickly, and I decided to do hand detection manually.

Detecting Hands in the Depth Image

I chose a relatively straightforward approach to finding hands in the depth image. I made three significant assumptions that made real-time detection possible:

1. The user’s body intersects the bottom of the frame.

2. The user is the closest thing in the scene.

3. The user’s hands are extended (at least slightly) in front of their body.

Assumption 1 is important because it allows for automatic depth thresholding. By assuming that the user intersects the bottom of the frame, we can scan the bottom row of depth pixels to determine the depth of the user’s body. The hand detection ignores anything farther away than the user.
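The thresholding step can be sketched roughly as follows. This is an illustration rather than the application's code: it assumes a row-major buffer of 16-bit depth values in which 0 means "no reading", and the function names `bodyDepthFromBottomRow` and `discardBehindBody` are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Scan the bottom row and return the nearest valid depth found there.
// Assumption: depth is row-major and 0 marks a pixel with no reading.
// Returns 0 if the bottom row contains no valid pixels.
std::uint16_t bodyDepthFromBottomRow(const std::vector<std::uint16_t>& depth,
                                     int width, int height) {
    std::uint16_t nearest = 0;
    const std::size_t rowStart = static_cast<std::size_t>(height - 1) * width;
    for (int x = 0; x < width; ++x) {
        const std::uint16_t d = depth[rowStart + x];
        if (d != 0 && (nearest == 0 || d < nearest)) nearest = d;
    }
    return nearest;
}

// Clear every pixel that is farther from the camera than the body depth,
// so later stages only see the user and anything in front of them.
void discardBehindBody(std::vector<std::uint16_t>& depth, std::uint16_t bodyDepth) {
    if (bodyDepth == 0) return;  // no body found; leave the frame untouched
    for (std::uint16_t& d : depth) {
        if (d > bodyDepth) d = 0;
    }
}
```

Taking the nearest bottom-row value is one possible choice; a median or average of the row would be less sensitive to a single noisy pixel.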

Assumptions 2 and 3 are important for the next step in the process. The application looks for local minima in the depth image and identifies the points nearest the camera. It then uses a breadth-first search algorithm to repeatedly expand the blob to neighboring points and find the boundaries of hands. Each pixel is scored based on its depth and distance from the source. Pixels that are scored as part of one hand cannot be scored as part of another hand. This prevents near points in the same hand from generating multiple blobs.
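A minimal sketch of the blob-growing idea is shown here. It is not the application's scoring function: the per-pixel score is replaced by two fixed thresholds, and the names `nearestUnclaimed`, `growBlob`, `maxDepthDelta`, and `maxRadius` are hypothetical. The `labels` buffer is what keeps a pixel from being claimed by two blobs.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <queue>
#include <utility>
#include <vector>

// Index of the nearest valid pixel that no blob has claimed yet, or -1.
// Assumption: depth 0 means "no reading"; labels[i] == 0 means unclaimed.
int nearestUnclaimed(const std::vector<std::uint16_t>& depth,
                     const std::vector<int>& labels) {
    int best = -1;
    for (std::size_t i = 0; i < depth.size(); ++i) {
        if (depth[i] == 0 || labels[i] != 0) continue;
        if (best < 0 || depth[i] < depth[best]) best = static_cast<int>(i);
    }
    return best;
}

// Breadth-first expansion from a seed pixel. A neighbor joins the blob when
// its depth is within maxDepthDelta of the seed and it lies within maxRadius
// pixels of the seed in both axes. Returns the number of pixels claimed.
int growBlob(const std::vector<std::uint16_t>& depth, std::vector<int>& labels,
             int width, int height, int seedX, int seedY, int blobId,
             int maxDepthDelta, int maxRadius) {
    const int seedDepth = depth[seedY * width + seedX];
    std::queue<std::pair<int, int> > frontier;
    frontier.push(std::make_pair(seedX, seedY));
    labels[seedY * width + seedX] = blobId;
    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};
    int claimed = 0;
    while (!frontier.empty()) {
        const std::pair<int, int> p = frontier.front();
        frontier.pop();
        ++claimed;
        for (int i = 0; i < 4; ++i) {
            const int nx = p.first + dx[i];
            const int ny = p.second + dy[i];
            if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
            const int idx = ny * width + nx;
            if (labels[idx] != 0 || depth[idx] == 0) continue;  // taken or invalid
            const bool closeInDepth =
                std::abs(static_cast<int>(depth[idx]) - seedDepth) <= maxDepthDelta;
            const bool closeInImage = std::abs(nx - seedX) <= maxRadius &&
                                      std::abs(ny - seedY) <= maxRadius;
            if (closeInDepth && closeInImage) {
                labels[idx] = blobId;
                frontier.push(std::make_pair(nx, ny));
            }
        }
    }
    return claimed;
}
```

Calling `nearestUnclaimed` and then `growBlob` twice, with blob IDs 1 and 2, would yield up to two candidate hand blobs per frame.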

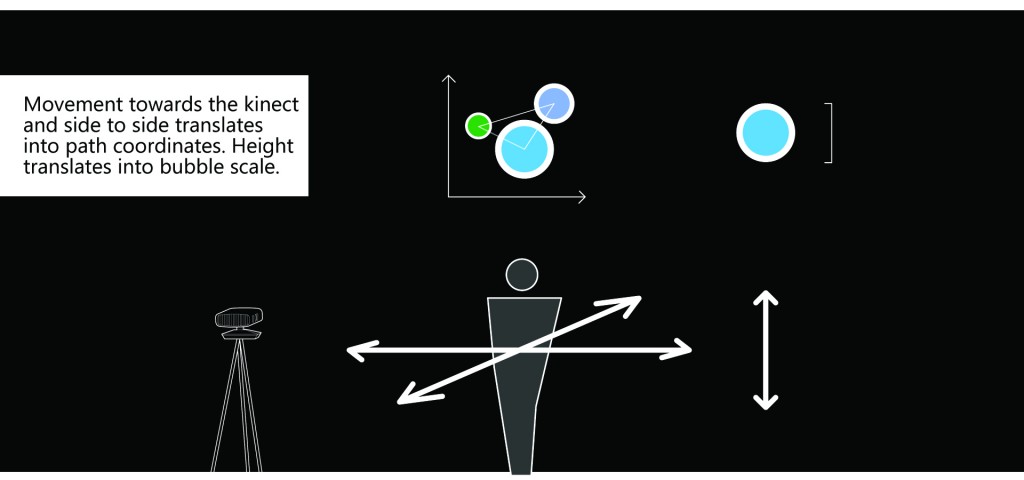

Interpreting Hands

Once pixels in the depth image have been identified as hands, a bounding box is created around each one. The bounding boxes are compared to those found in the previous frame and matched together, so that the user’s two hands are tracked separately.
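One simple way to do this matching is a greedy nearest-center assignment, sketched here. The post does not say which matching rule the application uses, so treat this as an assumption; `Box`, `centerDistSq`, and `matchToPrevious` are hypothetical names.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

// Axis-aligned bounding box: top-left corner plus width and height.
struct Box { float x, y, w, h; };

// Squared distance between the centers of two boxes.
float centerDistSq(const Box& a, const Box& b) {
    const float dx = (a.x + a.w * 0.5f) - (b.x + b.w * 0.5f);
    const float dy = (a.y + a.h * 0.5f) - (b.y + b.h * 0.5f);
    return dx * dx + dy * dy;
}

// For each box in the current frame, return the index of the closest
// not-yet-matched box from the previous frame, or -1 if none is left.
std::vector<int> matchToPrevious(const std::vector<Box>& previous,
                                 const std::vector<Box>& current) {
    std::vector<int> match(current.size(), -1);
    std::vector<bool> taken(previous.size(), false);
    for (std::size_t c = 0; c < current.size(); ++c) {
        float best = std::numeric_limits<float>::max();
        for (std::size_t p = 0; p < previous.size(); ++p) {
            if (taken[p]) continue;
            const float d = centerDistSq(current[c], previous[p]);
            if (d < best) {
                best = d;
                match[c] = static_cast<int>(p);
            }
        }
        if (match[c] >= 0) taken[match[c]] = true;
    }
    return match;
}
```

With only two hands in the scene, a greedy pass like this is usually enough; a box that comes back as -1 can be treated as a newly appeared hand.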

Once each blob has been associated with the left or right hand, the algorithm determines the heading, velocity and acceleration of the hand. This information is averaged over multiple frames to eliminate noise.
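The averaging could look something like the sketch below, which keeps a short window of per-frame displacements and reports their mean as the velocity. The window length and the `HandMotion` class are assumptions made for illustration; acceleration could be smoothed the same way from successive velocities.

```cpp
#include <cmath>
#include <cstddef>
#include <deque>

struct Vec2 { float x, y; };

// Tracks one hand's center and smooths its motion over the last few frames.
class HandMotion {
public:
    explicit HandMotion(std::size_t window) : window_(window) {}

    // Feed the hand center for the current frame.
    void update(Vec2 center) {
        if (hasLast_) {
            history_.push_back({center.x - last_.x, center.y - last_.y});
            if (history_.size() > window_) history_.pop_front();
        }
        last_ = center;
        hasLast_ = true;
    }

    // Mean displacement per frame over the window, in pixels.
    Vec2 velocity() const {
        Vec2 sum{0.f, 0.f};
        if (history_.empty()) return sum;
        for (const Vec2& v : history_) {
            sum.x += v.x;
            sum.y += v.y;
        }
        const float n = static_cast<float>(history_.size());
        return {sum.x / n, sum.y / n};
    }

    // Direction of travel in radians, measured from the +X axis.
    float heading() const {
        const Vec2 v = velocity();
        return std::atan2(v.y, v.x);
    }

private:
    std::size_t window_;
    std::deque<Vec2> history_;
    Vec2 last_{0.f, 0.f};
    bool hasLast_ = false;
};
```

A longer window gives steadier values at the cost of the visualization lagging behind fast gestures.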

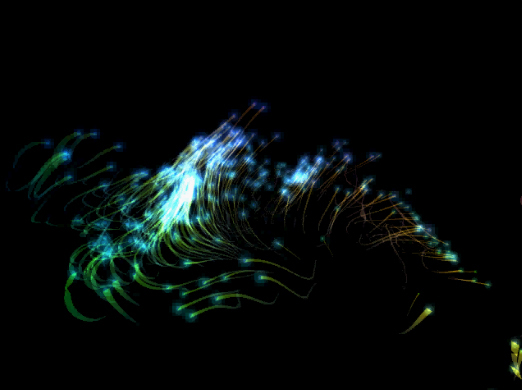

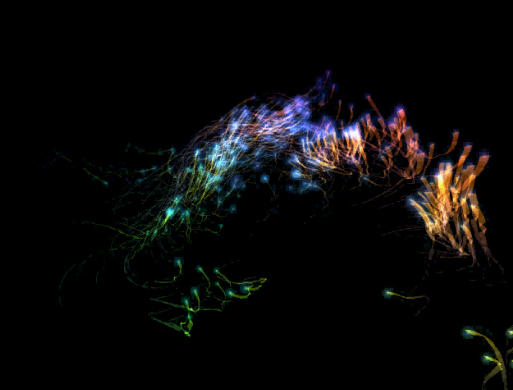

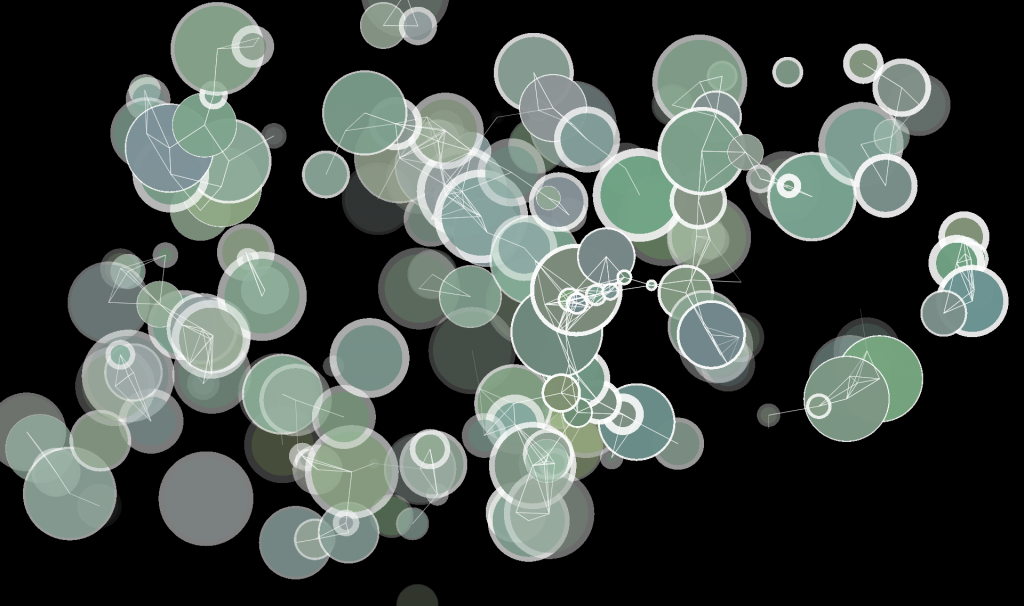

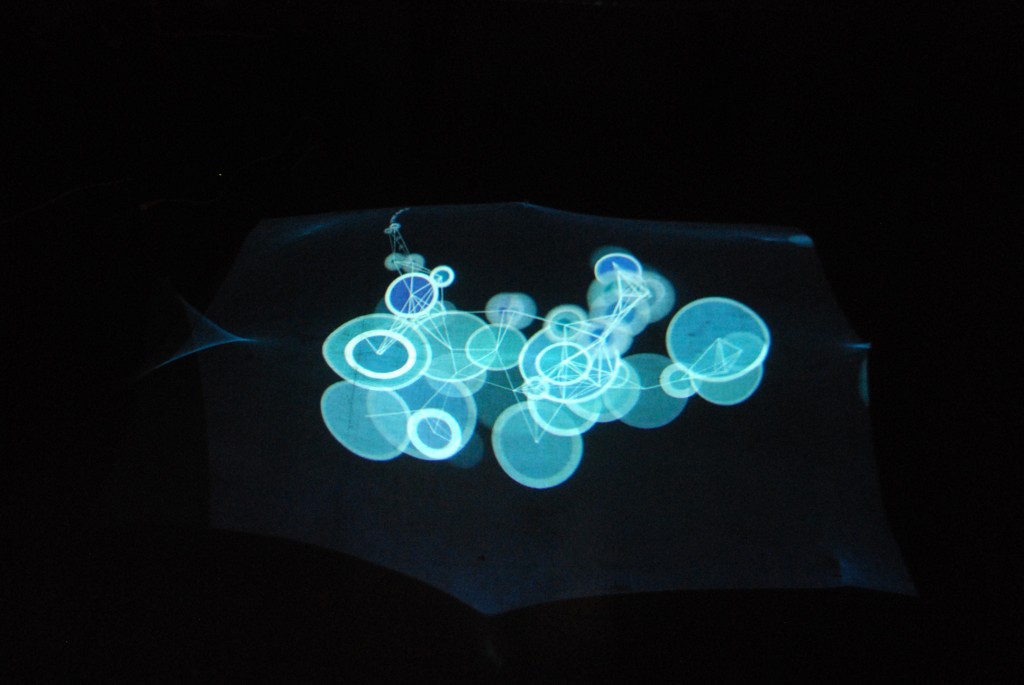

Long-Exposure Motion Trails

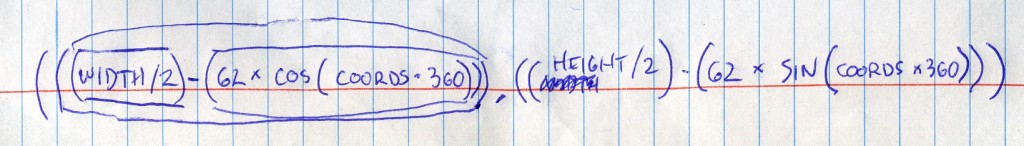

The size and location of each hand are used to extend a motion trail from the user’s hand. The motion trail is stored in an array. Each point in the trail has an X and Y position and a size. To render the motion trail, overlapping, alpha-blended point sprites are drawn along the entire length of the trail. A Catmull-Rom spline is used to interpolate between the points in the trail and create a smooth path. Though it might seem best to append a point to the motion trail every frame, this tends to cause noise. In the version below, a point is added to the trail every three frames. This increases the distance between the points in the trail and allows for more smoothing using Catmull-Rom interpolation.

Hand Centers

One of the early problems with the hand tracking code was that the centers of the blob bounding boxes were used as the input to the motion trails. When the user held up their forearm perpendicular to the camera, the entire length of their arm was recognized as a hand. To better determine where the center of the hand was, I wrote a midpoint finder based on iterative erosion of the blobs. This provided much more accurate hand centers for the motion trails.

Particle Effects

After the long-exposure motion trails were working properly, I decided that more engaging visuals were needed to create a compelling visualization. It seemed like particles would be a good solution because they could augment the feeling of motion created by the user’s gestures. Particles are created when the hand blobs are in motion, and the number created increases with the hand velocity. The particles stream off the motion trail in the direction of motion and curve slightly as they move away from the hand. They fade and disappear after a set number of frames.
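A stripped-down particle system with these behaviors might look like the sketch below. Everything here is an assumption for illustration: the `ParticleSystem` class, the linear relationship between speed and particle count, the fixed per-frame rotation used to bend the paths, and the linear fade are stand-ins for whatever the application actually does.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

struct Particle { float x, y, vx, vy; int age; };

class ParticleSystem {
public:
    // lifetimeFrames: frames before a particle is removed.
    // curlPerFrame: radians the velocity is rotated each frame (the curve).
    // particlesPerSpeed: particles emitted per pixel/frame of hand speed.
    ParticleSystem(int lifetimeFrames, float curlPerFrame, float particlesPerSpeed)
        : lifetime_(lifetimeFrames), curl_(curlPerFrame), perSpeed_(particlesPerSpeed) {}

    // Emit particles at the hand position; faster hands emit more.
    // A stationary hand (zero velocity) emits nothing.
    void emit(float x, float y, float vx, float vy) {
        const float speed = std::sqrt(vx * vx + vy * vy);
        const int count = static_cast<int>(speed * perSpeed_);
        for (int i = 0; i < count; ++i) {
            // Vary the speed so the particles spread out along the heading.
            const float scale = 0.5f + 0.5f * static_cast<float>(i + 1) / count;
            particles_.push_back({x, y, vx * scale, vy * scale, 0});
        }
    }

    // Advance one frame: bend each path slightly, move, age, and cull.
    void update() {
        const float c = std::cos(curl_);
        const float s = std::sin(curl_);
        for (Particle& p : particles_) {
            const float vx = p.vx * c - p.vy * s;
            const float vy = p.vx * s + p.vy * c;
            p.vx = vx;
            p.vy = vy;
            p.x += p.vx;
            p.y += p.vy;
            ++p.age;
        }
        const int lifetime = lifetime_;
        particles_.erase(
            std::remove_if(particles_.begin(), particles_.end(),
                           [lifetime](const Particle& p) { return p.age >= lifetime; }),
            particles_.end());
    }

    // Opacity for rendering: 1 when new, falling linearly toward 0.
    float alpha(const Particle& p) const {
        return 1.f - static_cast<float>(p.age) / lifetime_;
    }

    const std::vector<Particle>& particles() const { return particles_; }

private:
    int lifetime_;
    float curl_;
    float perSpeed_;
    std::vector<Particle> particles_;
};
```

Each frame, `emit` would be called with the smoothed hand position and velocity, followed by `update`, and then every particle would be drawn as a point sprite using `alpha` for its opacity.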

Challenges and Obstacles

This was my first use of the open-source ofxKinect framework and OpenFrameworks. It was also my first attempt at blob detection and blob midpoint finding, so I’m happy those worked out nicely. I investigated Processing and OpenNI but chose not to use them because of performance and debugging time, respectively.

Live Demo

The video below shows the final visualization. It was generated in real time from improvised hand gestures I performed while listening to “Dare You to Move” by the Vitamin String Quartet.