This was a really fun process that I hadn’t experienced before. I found that inserting just a single figure would be simple and nice, but it’s just not my style. I used the two versions of my past project from this semester, combining their features with an ongoing pattern. Cutting out the inputs and outputs in specific parts was really difficult and time-consuming. But overall, this was super fun and I will definitely be using this tool again in future video projects.

Category: Deliverables-08

fr0g.fartz-ImageProcessor

I made a project of me trying to ollie on a skateboard, but put it in outer space! I couldn’t find an option to input my own video; I think you had to pay. It’s fun because I’m actually doing the ollie on grass (much easier, I’ve never attempted it on concrete), but you can’t tell that from the video! It’s crazy how easy this technology is to use. Although it’s less precise than what you could get with Photoshop, it does a pretty great job at detecting which parts you want to stay in the image. I was happy with the result of my project and I will likely use this tool again!

minniebzrg-ImageProcessor

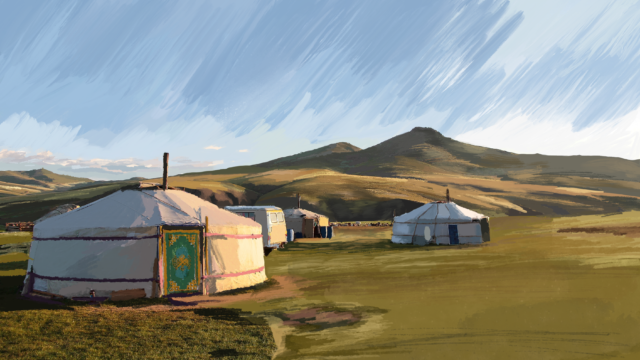

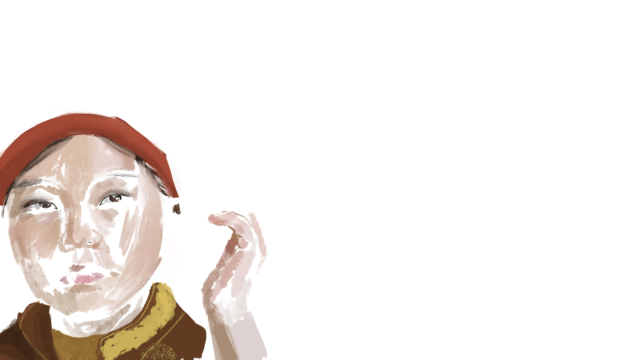

In an alternate life, I would have grown up in Mongolia, spending my summers in the grassland in our traditional summer home (a ger). I would wake up to the beautiful clear blue skies of Mongolia and smell the mixture of livestock and fresh grass in the air. The wind blows through my hair and I thank God for creating me in this land. If I hadn’t come to America, this would be a possible reality.

Process:

First, I recorded several videos. My original idea was to make a short film of me doing “daily tasks” that would be a reality in Mongolia. However, I didn’t anticipate the amount of time it would take me to draw the background and foreground, the quality of the video/my drawing skills, and all the steps it takes to make one EbSynth production. I stuck to one keyframe and one short clip; with some more time (and more keyframes) I could complete my original idea. The final product is very rough because there are sections of the video that couldn’t register the style I drew.

“don’t spend more than 2 hours on this” a h a h a….. I had trouble getting EbSynth to work. I made the keyframe separately in Procreate, which left the keyframe at a different resolution from the rest of the frames. As a result, EbSynth wouldn’t render the output. I figured out how to fix this by redrawing the keyframe using the PNG from Procreate. Luckily I had separated the layers, so this was possible.

anna-08-lookingOutwards

Okay, where was this when I lived and breathed graphic design? The project I’ve chosen to highlight is titled Fontjoy, by Jack Qiao, which generates font pairings. Its aim is to select pairs of fonts that have distinct aesthetics, but share similar enough qualities for them to be functional as a font pair. I don’t quite understand how it all works, but the fact that there’s a neural net out there that has fonts categorized on a scale of most to least similar warms my heart, if for no reason other than that someone has made the thing I’ve very much wished existed every time I go to select fonts for a document.

Use the tool here.

bumble_b – ImageProcessor

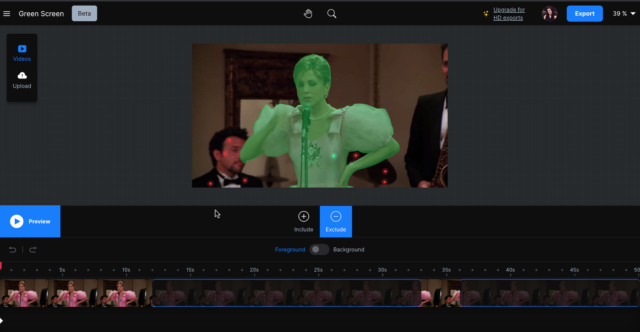

I decided to use RunwayML’s green screen effect to pick out one person in a video and retain their color against a black/white version of that video. I chose a cute scene from Friends (my favorite show ever), and I keyed out Rachel using their green screen feature.

So, I tried to get it perfect, and when I thought it was perfect, I exported it. Unfortunately, once I clicked export, it looked like it got rid of all the work I did? Maybe I don’t know the website well enough, but I couldn’t find any saved file or anything. When I went and rewatched the video, I noticed there were some parts I would’ve really liked to tweak, but I wasn’t willing to go and do everything all over again because I was definitely approaching the 2-hour time cap.

Anyway, I took the now green-screened video into Premiere, Ultra Key’d the green screen out and layered it on top of the same video that I added that black/white effect on,

and this is the result:

bumble_b – SituatedEye

The door to my room doesn’t close, which created a unique problem once our puppy Chloe realized slamming her head into my door would open it and she could enter even though she knows she’s not allowed to.

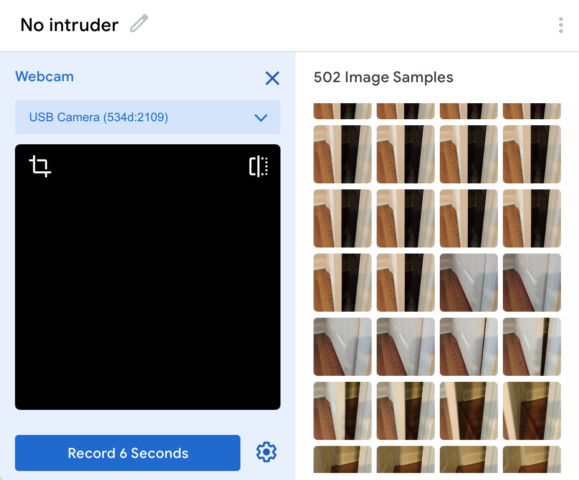

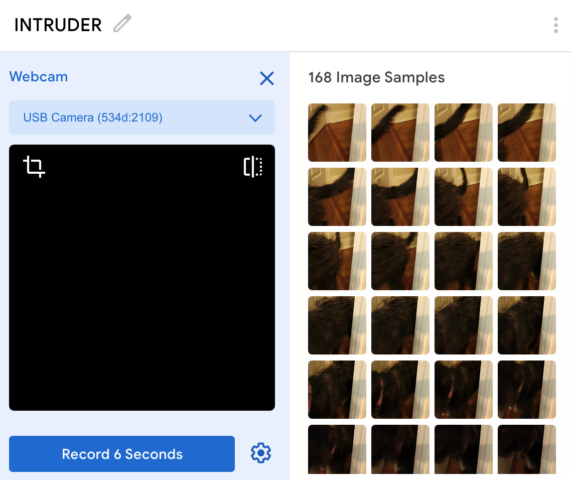

So, I decided my model would warn me every time Chloe came into my room. I taught it that the door being closed, the door being open, and me and my roommates coming in and out were okay (class labeled “No intruder”), and then I taught it that Chloe coming in was not okay (class labeled “INTRUDER”).

Training behind-the-scenes:

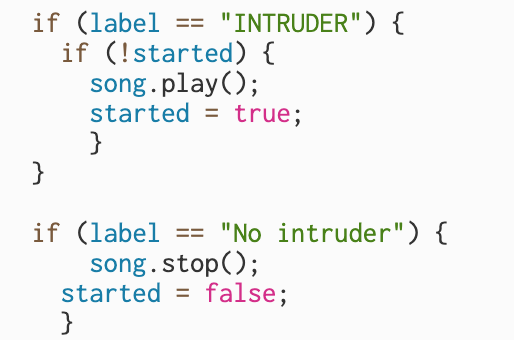

Then, I went into p5.js with my model, and made it so that whenever the video was classified as “INTRUDER” it would play an alarm:

(Disclaimer: I don’t even know if this code makes any sense; I just kept adjusting the lines until it worked because I ran into a lot of weird glitches with other variations.)
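
A minimal sketch of the alarm logic described above, not the original sketch. It assumes the classifier hands back an array of `{ label, confidence }` results, as Teachable Machine models loaded through ml5.js typically do. The names `shouldSoundAlarm` and `makeAlarm` and the 0.8 confidence threshold are hypothetical choices for illustration.

```javascript
// Hypothetical helper: decide whether one classification result means
// "intruder". `results` is ASSUMED to be an array of
// { label, confidence } objects; the 0.8 threshold is an arbitrary choice.
function shouldSoundAlarm(results, threshold = 0.8) {
  if (!Array.isArray(results) || results.length === 0) return false;
  // Pick the highest-confidence entry instead of trusting the array order.
  const top = results.reduce((best, r) =>
    r.confidence > best.confidence ? r : best
  );
  return top.label === "INTRUDER" && top.confidence >= threshold;
}

// Hypothetical wrapper: start the alarm once when an intruder appears and
// stop it once when the intruder leaves, so the sound is not restarted on
// every classified frame.
function makeAlarm(play, stop, threshold = 0.8) {
  let ringing = false;
  return function update(results) {
    const intruder = shouldSoundAlarm(results, threshold);
    if (intruder && !ringing) {
      play();
      ringing = true;
    } else if (!intruder && ringing) {
      stop();
      ringing = false;
    }
    return ringing;
  };
}
```

In a p5.js sketch, `play` and `stop` could wrap a looping sound file, and `update` would be called from the classifier’s callback each time a new result arrives. Tracking the `ringing` state is one way to avoid the sound re-triggering on every frame.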

So, I present to you all: the cutest burglar you have ever seen:

Here is the gif, though sound is obviously an important aspect of my project:

peatmoss-FaceReadings

This is a little late, but what struck me so hard with the pieces we investigated on Facial Recognition is that all of this is here. It’s already in so much of our lives in so many ways. Another thing that struck me was the research behind the paleness factor in how computers identify white faces better (not the actual claim; unfortunately, that is very much known to me). You can see this happening even in Twitter’s auto-cropping system.

peatmoss-TeachableMachine

Screen Recording 2021-04-16 at 11.28.47 AM

^^ Screen recording of my model. For this I decided to train the model to be able to identify each of my wolf figures as wolves, separate from my hands and my face. It worked pretty well, but some grey elements of my background trick it.

shrugbread- ImageProcessor

I decided to focus on the Selfie2Anime GAN photo-manipulation program on Runway ML. I found the results quite hilarious because in most cases it failed entirely to deliver on the promise of realistic anime-stylized images. I found some of my results closer to a Picasso rendition than an anime style. The resulting images mostly just blocked out certain colors and bumped up the saturation. While they’re fun-looking, I can’t quite say that the program recognized the facial features as easily as in the reference images. A version of this filter also exists on Snapchat and works much more consistently, and live, but after using it for a bit I can tell that it’s pulling from a database of hairstyles and face shapes and tracking them onto the face, whereas this uses a GAN to tweak the image until it is considered “anime style.”

fr0g.fartz-DeepNostlagia

I made a funny looking video of myself. I don’t think it looks like me.