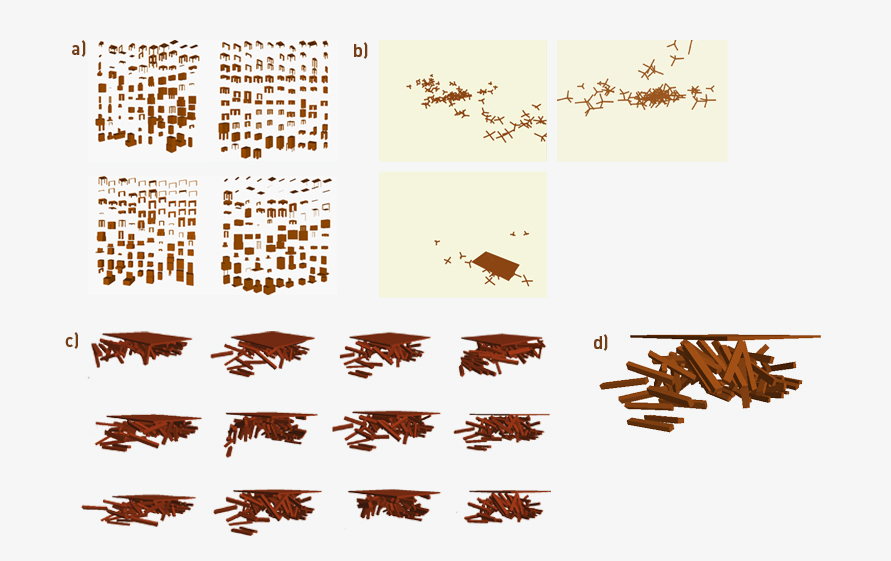

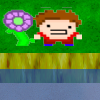

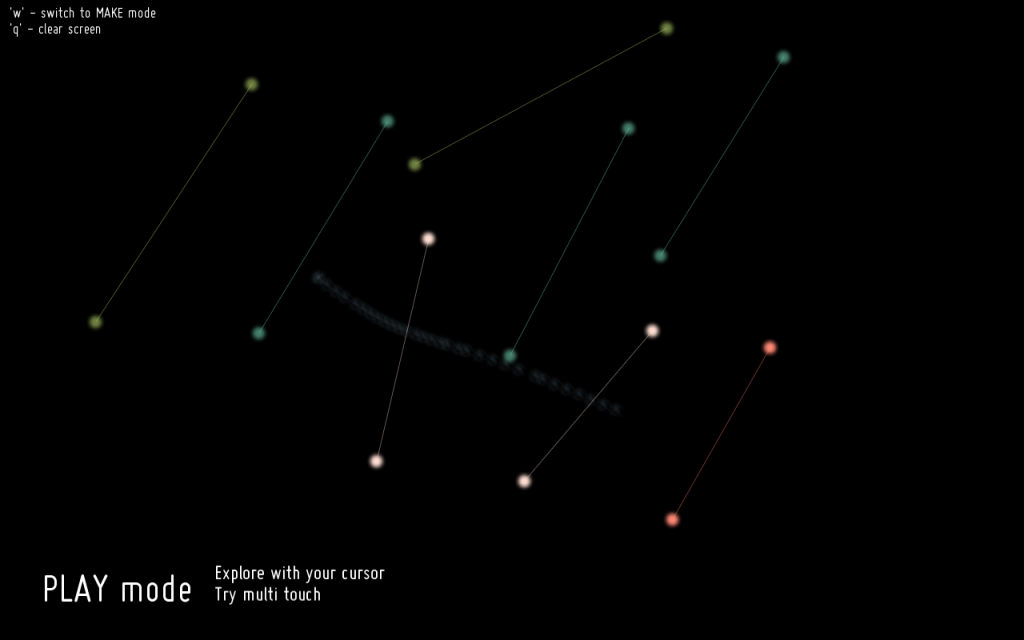

Whatever is built on the table is rendered onscreen as a landscape. Landscapes can be built out of anything. Four heroes explore the world you build. There are many wonderful things in it. But many dangerous things as well. Who knows what will befall them?

Made using openFrameworks with ofxKinect and ofxSpriteSheetLoader. The sprites were loosely based on examples included with ofxSpriteSheetLoader.

Inspiration

This project was developed from an earlier project by Paul Miller and me, Magrathea. More information on the inspiration and process for that project can be found through that link; I won’t reiterate it here. Instead, I’ll talk about my own inspiration for the particular direction in which I took this older work.

I felt Paul and I had been successful with Magrathea, and a lot of the positive feedback we received had to do with how much potential the work seemed to have, especially the potential of using the engine for a game. That particularly piqued my interest. I have a strong interest in game design, and the ability to use such an accessible and non-traditional mechanic/control scheme for a game was very exciting. This is what made me decide to work from Magrathea for my final project.

I took inspiration from a number of other sources:

I’ve always been enchanted by the Pikmin games, made by Shigeru Miyamoto at Nintendo. The feeling of controlling a mass of little characters across a giant world that dwarfs both them and you really appealed to me, and the bond the player forms with the little creatures was something I wanted to evoke.

I also took inspiration from the Lemmings games, in which you have to give tools to a group of steadily marching creatures in order to help them survive through the danger-filled, booby-trapped landscape that they aren’t intelligent enough to navigate on their own.

I wasn’t sure for a lot of this process whether to go down the road of a game, or make a more sandbox-styled project, but I knew I wanted interaction with many characters like in these titles.

Process

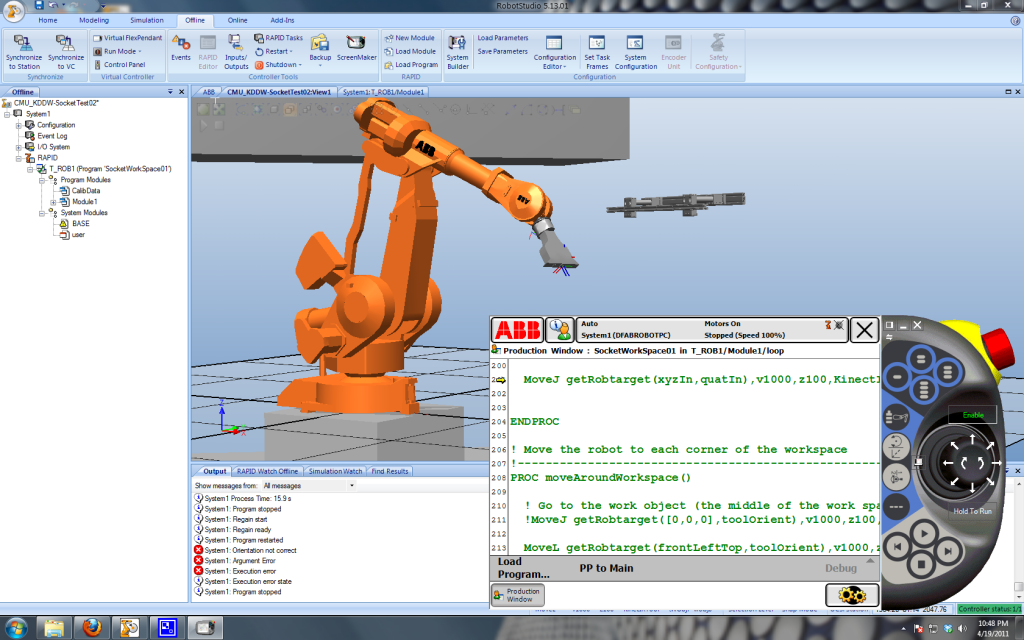

I’ll talk mostly about design issues here, as technical issues are covered in my other blog posts: Hard Part Done and Final Project Update.

The process for finding a game using the Magrathea system wasn’t an easy one for me. I went through a number of ideas, and talked to Paolo Pedercini for advice on developing a mechanical system that worked. The design didn’t really come together until I had to make my “Late Phase” presentation in front of the class, receive their feedback, and see what was working and what wasn’t. The feedback I received at that session suggested that people were connecting with the character even when he was incredibly simple, stupid, and unchanging. I decided that expanding on that, by adding more characters with more moods and deeper behaviors, would make for an engaging and fun end result.

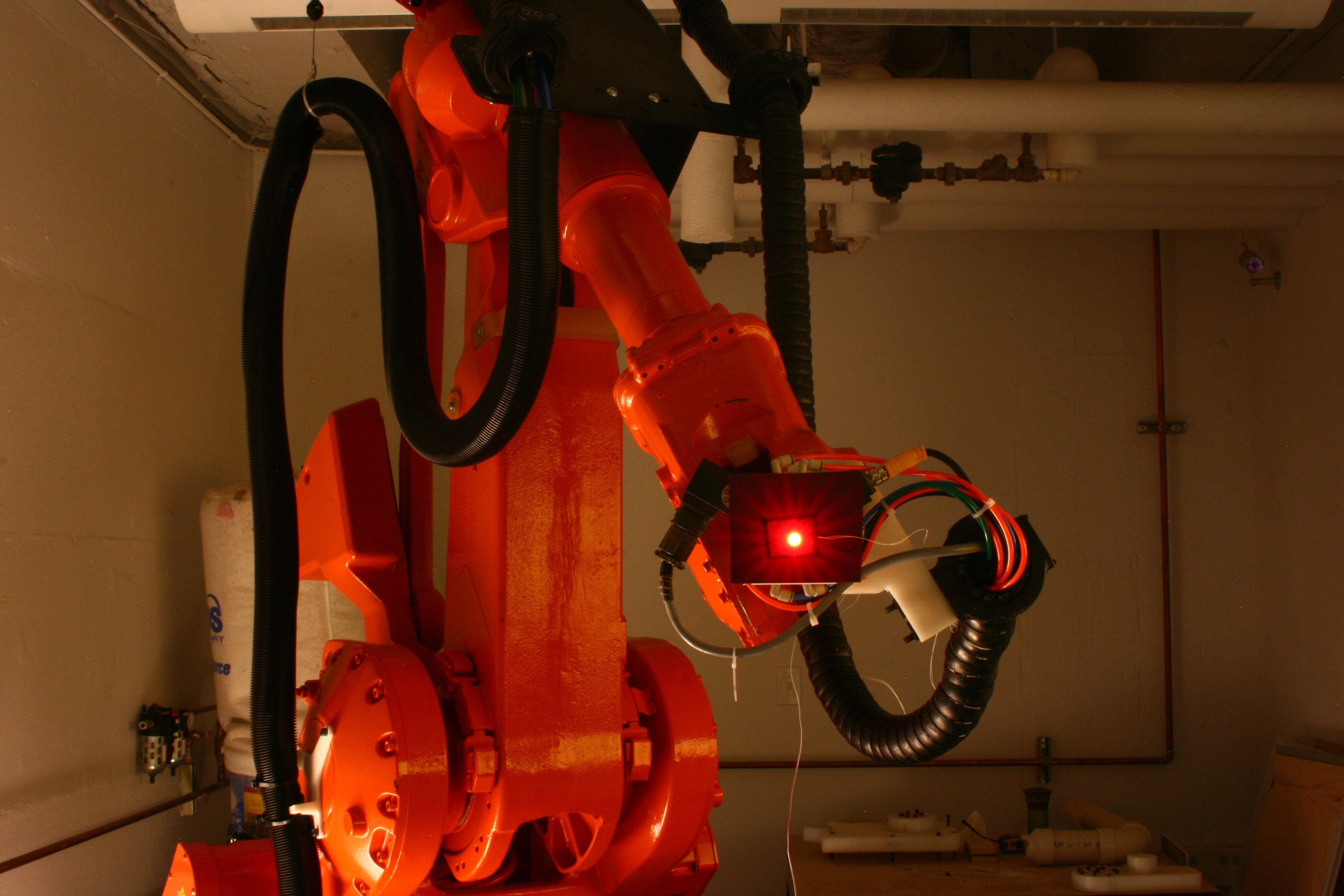

I added the ability for characters to wander instead of just seeking the current flower, which enabled me to have more flowers, more characters, a troll that can wander as well, etc. The characters were also given new animations for running from the troll in fear and for drowning, plus a ghost that rises from the point where they died. This helped to flesh them out and make them feel more alive, which led to users feeling a stronger connection to, and interaction with, the characters.
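To illustrate the difference between seeking and wandering, here is a minimal sketch. It is not the project's actual code: `Vec2`, `Character`, `Mode`, `seekVelocity`, `wanderVelocity`, `update`, and the `maxTurn` value are all hypothetical names and numbers chosen for illustration, and the sketch uses only the C++ standard library rather than openFrameworks types.

```cpp
#include <cmath>
#include <random>

// Hypothetical minimal 2D vector, standing in for whatever vector type the project uses.
struct Vec2 {
    float x;
    float y;
};

Vec2 operator+(Vec2 a, Vec2 b) { return Vec2{a.x + b.x, a.y + b.y}; }
Vec2 operator-(Vec2 a, Vec2 b) { return Vec2{a.x - b.x, a.y - b.y}; }
Vec2 operator*(Vec2 a, float s) { return Vec2{a.x * s, a.y * s}; }

float length(Vec2 v) { return std::sqrt(v.x * v.x + v.y * v.y); }

// Returns v scaled to unit length, or the zero vector if v has no length.
Vec2 normalized(Vec2 v) {
    float len = length(v);
    return len > 0.0f ? v * (1.0f / len) : Vec2{0.0f, 0.0f};
}

enum class Mode { Seek, Wander };

// Hypothetical character state; the real project likely tracks much more
// (mood, animation frame, and so on).
struct Character {
    Vec2 position;
    float heading;  // radians; only used while wandering
    float speed;    // world units per second
    Mode mode;
};

// Seek: head straight for the target (e.g. the current flower) at full speed.
Vec2 seekVelocity(const Character& c, Vec2 target) {
    return normalized(target - c.position) * c.speed;
}

// Wander: nudge the heading by a small random angle, then walk that way.
// Small turns per step give a meandering path rather than jitter.
Vec2 wanderVelocity(Character& c, std::mt19937& rng, float maxTurn) {
    std::uniform_real_distribution<float> turn(-maxTurn, maxTurn);
    c.heading += turn(rng);
    return Vec2{std::cos(c.heading), std::sin(c.heading)} * c.speed;
}

// Advance one character by dt seconds according to its current mode.
void update(Character& c, Vec2 target, float dt, std::mt19937& rng) {
    const float maxTurn = 0.3f;  // assumed value, in radians per step
    Vec2 velocity = (c.mode == Mode::Seek)
                        ? seekVelocity(c, target)
                        : wanderVelocity(c, rng, maxTurn);
    c.position = c.position + velocity * dt;
}
```

Because a wandering character needs no target, the same `update` call could drive several heroes and a troll at once, with each one switching to `Mode::Seek` only when something interesting (a flower, or a hero to chase) is nearby. The sketch ignores terrain, water, and overshooting the target, all of which a real version would need to handle.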

Conclusion

After the exhibition, I gained a lot more perspective on this project. If anything, my belief that the core mechanic of building terrain is intensely interesting has been strengthened – a lot of people were drawn in by this. The characters, though, seemed to be the hook that kept a lot of people engaged, and I definitely think that people developed connections with them. There was a range of approaches – some ignored them, some obsessed over specific ones, some tried to just get rid of the troll whenever he appeared, and some tried to help the troll eat everyone, or to drown everyone.

I noticed that for small children, the Play-Doh was the biggest appeal at first – the actual landscape generation was totally ignored. After a few minutes, though, they began to pay attention to and care about the landscape. I’m not sure the characters ever made much of an impact on them, though they certainly noticed them. More feedback might be necessary in order to really hammer the point home.

I’m happy with how this project turned out overall – a lot of people seemed to really enjoy it during the show. I think there’s a lot more room to explore using the terrain generation system, as well as systems using the Kinect above a table. The most requested / asked-about feature was color recognition – this is something I know is possible, but I didn’t have time to implement it, or to design a reason to implement it. Using multiple colors of Play-Doh perhaps raises this question, however.

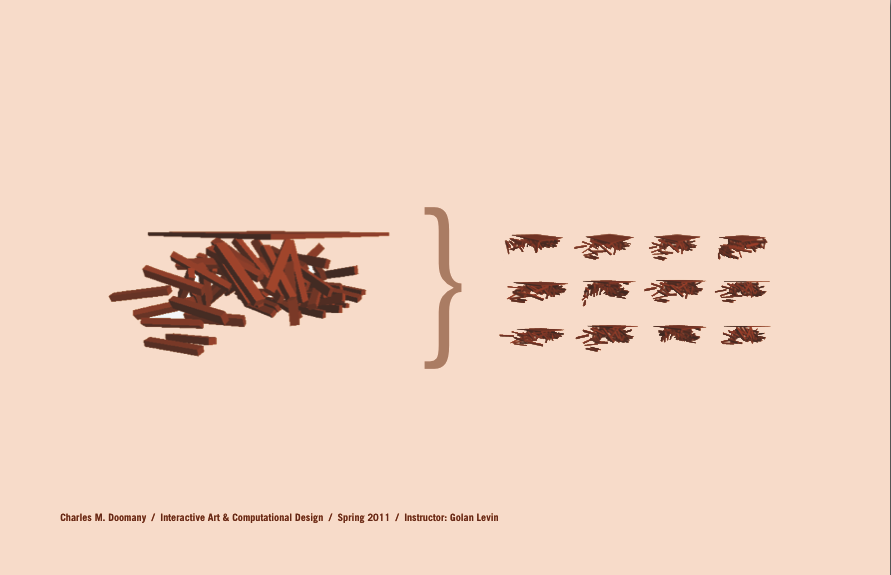

Icon and Summary

Whatever is built on the table is rendered onscreen as a landscape for characters to explore.

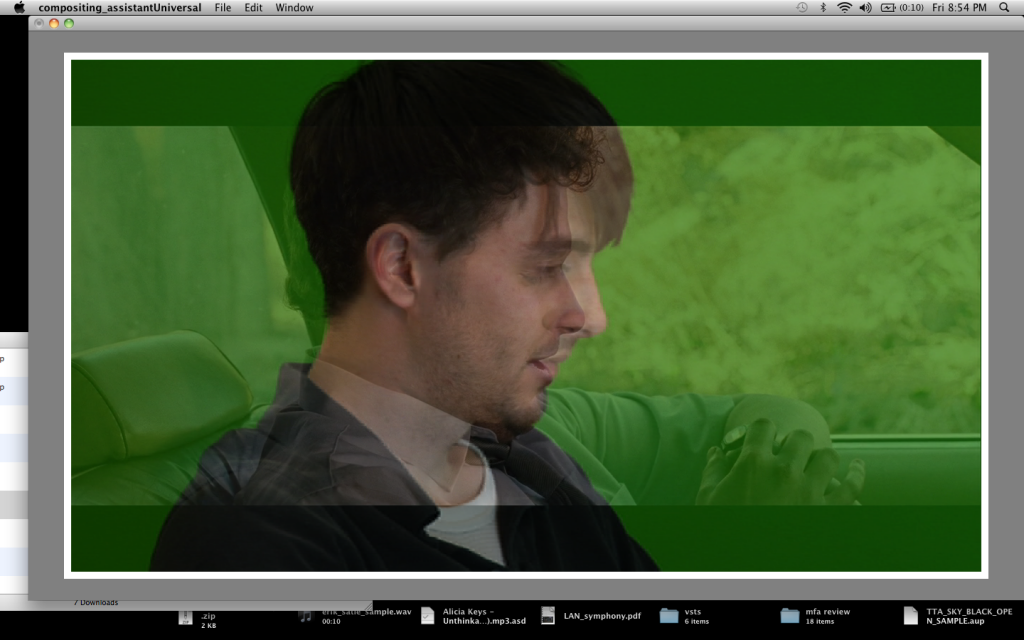

![[Untitled]005](../../wp-content/uploads/Untitled005-300x235.jpg)

![[Untitled]004](../../wp-content/uploads/Untitled004-300x235.jpg)

![[Untitled]003](../../wp-content/uploads/Untitled003-300x235.jpg)
