Matter.js + Webcam Hand Tracking: Real Hand Puppeteering

This project lets users puppeteer a rag doll in the browser with their real hands by gesturing in front of a webcam (https://puppeteers.glitch.me).

^ an animated GIF showing a play session

I came up with the idea because it seems to me that human body tracking and physics engines combine well. Maybe it’s because we have always wanted to touch and feel objects.

I never learned to puppeteer in real life. I thought rag doll physics might simulate it well, so it’s also a chance to try out puppeteering virtually.

Hand tracking has been around for a long time, but I wanted to make a new tracker in JavaScript for the browser so everyone can play without acquiring special hardware or software.

I used matter.js for the physics engine, mainly because I wanted to try something new. In retrospect, maybe box2d.js would have worked better, although matter.js seems to have an easier API than box2d.js’s automatically ported C-style code.

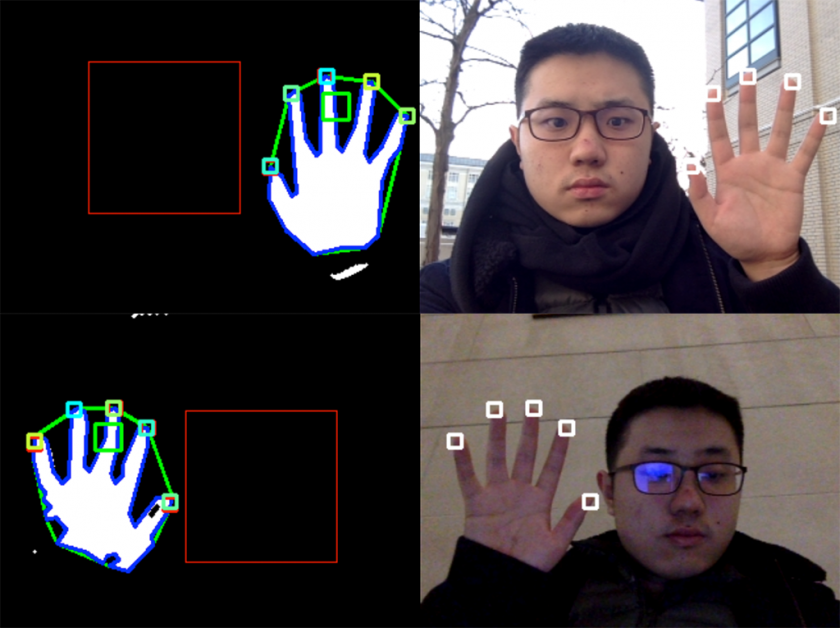

^ screenshot showing debug screen and camera view.

Custom Software for Hand Tracking in the Browser

Demo of finger tracking available on Glitch: https://fingers.glitch.me

^ Screenshot showing detection and tracking of multiple hands and fingers

All the computer vision algorithms are written with OpenCV.js. I used HSV color ranges, convex hulls, and similar techniques to find fingers in the webcam image.
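
To give a rough idea of what the convex hull step computes, here is a minimal plain-JavaScript sketch. It is not the project's code: the project relies on OpenCV.js, while this stand-in uses Andrew's monotone chain algorithm so it runs without any library. The names `cross` and `convexHull` and the sample points are made up for illustration.

```javascript
// Plain-JS illustration of a convex hull (Andrew's monotone chain).
// Assumption: this is a dependency-free stand-in, not the OpenCV.js code used in the project.

// Cross product of vectors OA and OB; > 0 means a counter-clockwise turn (y-up axes).
function cross(o, a, b) {
  return (a.x - o.x) * (b.y - o.y) - (a.y - o.y) * (b.x - o.x);
}

// Returns the hull vertices, counter-clockwise in y-up coordinates
// (which looks clockwise on screen, where y points down).
function convexHull(points) {
  const pts = [...points].sort((p, q) => p.x - q.x || p.y - q.y);
  if (pts.length < 3) return pts;

  const lower = [];
  for (const p of pts) {
    // Drop the last point while it would make a clockwise or straight turn.
    while (
      lower.length >= 2 &&
      cross(lower[lower.length - 2], lower[lower.length - 1], p) <= 0
    ) {
      lower.pop();
    }
    lower.push(p);
  }

  const upper = [];
  for (let i = pts.length - 1; i >= 0; i--) {
    const p = pts[i];
    while (
      upper.length >= 2 &&
      cross(upper[upper.length - 2], upper[upper.length - 1], p) <= 0
    ) {
      upper.pop();
    }
    upper.push(p);
  }

  // The last point of each chain is the first point of the other one.
  lower.pop();
  upper.pop();
  return lower.concat(upper);
}

// Hypothetical contour: four outer corners plus two points inside them.
const contour = [
  { x: 0, y: 0 }, { x: 4, y: 0 }, { x: 4, y: 4 },
  { x: 0, y: 4 }, { x: 2, y: 2 }, { x: 1, y: 3 },
];
const hull = convexHull(contour);
console.log(hull.length); // only the outer corners remain
```

On a hand contour, the outstretched fingertips tend to end up on the hull while the gaps between fingers do not, which is why hulls are a common starting point for finding fingers.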

Assumptions:

- Face and hands of the same person have at least somewhat similar color

- The background is not exactly the same color as skin

- The person is relatively near the camera, and their face is visible

- Person is not totally naked

- The person is not wearing gloves or anything that covers their face
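
One way to read these assumptions is that a visible face can serve as a skin-color reference for finding the hands. The sketch below illustrates that idea in plain JavaScript, under stated assumptions: it derives an HSV range from a few face pixels and tests other pixels against it. The text above does not say the tracker works exactly this way, and the function names, padding values, and sample colors are all hypothetical. The HSV scale follows OpenCV's 8-bit convention (H in 0 to 180, S and V in 0 to 255).

```javascript
// Assumption: a plain-JS illustration of HSV range thresholding, not the project's code.

// Convert 8-bit RGB to HSV on OpenCV's 8-bit scale: H in [0, 180), S and V in [0, 255].
function rgbToHsv(r, g, b) {
  const max = Math.max(r, g, b);
  const min = Math.min(r, g, b);
  const delta = max - min;
  let h = 0; // hue in degrees, [0, 360)
  if (delta > 0) {
    if (max === r) h = 60 * (((g - b) / delta) % 6);
    else if (max === g) h = 60 * ((b - r) / delta + 2);
    else h = 60 * ((r - g) / delta + 4);
    if (h < 0) h += 360;
  }
  const s = max === 0 ? 0 : (delta / max) * 255;
  return { h: h / 2, s, v: max };
}

// Build a padded min/max range from sample pixels (e.g. taken from the face).
// The padding values are made-up defaults. Hue wraparound near red is NOT handled.
function rangeFromSamples(samples, pad = { h: 5, s: 40, v: 60 }) {
  const hsv = samples.map(([r, g, b]) => rgbToHsv(r, g, b));
  const lo = {};
  const hi = {};
  for (const k of ["h", "s", "v"]) {
    const vals = hsv.map((p) => p[k]);
    const cap = k === "h" ? 180 : 255;
    lo[k] = Math.max(0, Math.min(...vals) - pad[k]);
    hi[k] = Math.min(cap, Math.max(...vals) + pad[k]);
  }
  return { lo, hi };
}

// True if the pixel falls inside the range on all three channels.
function inSkinRange([r, g, b], range) {
  const p = rgbToHsv(r, g, b);
  return ["h", "s", "v"].every((k) => p[k] >= range.lo[k] && p[k] <= range.hi[k]);
}

// Hypothetical face samples and test pixels.
const faceSamples = [[220, 170, 140], [200, 150, 120]];
const skinRange = rangeFromSamples(faceSamples);
const handPixelMatches = inSkinRange([210, 160, 130], skinRange); // similar tone to the face
const wallPixelMatches = inSkinRange([40, 80, 200], skinRange);   // blue background
console.log(handPixelMatches, wallPixelMatches);
```

A range built this way only works while the listed assumptions hold: a background close to skin color would pass the same test, and gloves or a covered face would leave nothing to sample or match.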

I’ve worked with OpenCV in C++ and Python but hadn’t used the JavaScript port, so this time I gave it a try. I think the speed is actually OK, and the API is almost the same as in C++.

^ Testing the algorithm against different backgrounds and lighting conditions to make sure it is robust.

Result

I think the result is quite fun, but I’m most bothered by matter.js’s rag doll simulation (which I based on their official demo). Sometimes the rag dolls fly away for no apparent reason. One possibility is that there are parameters I still need to tweak.

Another problem is that hand tracking lowers the FPS a lot. With only physics or only hand tracking running, it’s pretty smooth, but with both running, things start to get slow.

In terms of puppeteering, I was only able to make the puppet jerk its arm or move a leg. There are no complex movements such as walking or punching. But I think it’s still interesting to experiment with.

In the future I could also add other types of interaction to the system, for example shooting things from fingers or grabbing and pushing objects.