iLids from Marisa Lu on Vimeo.

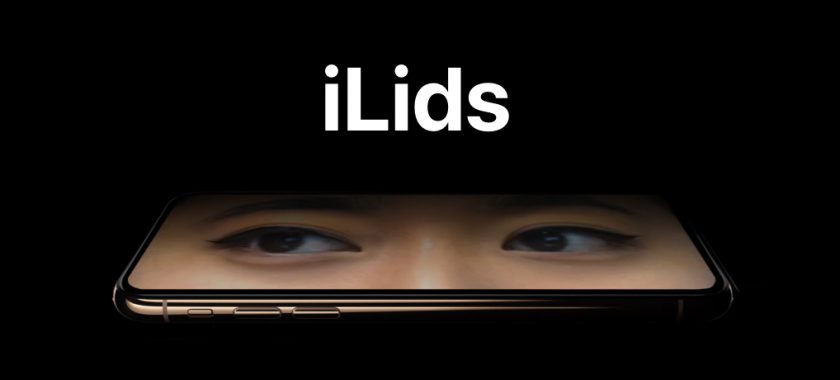

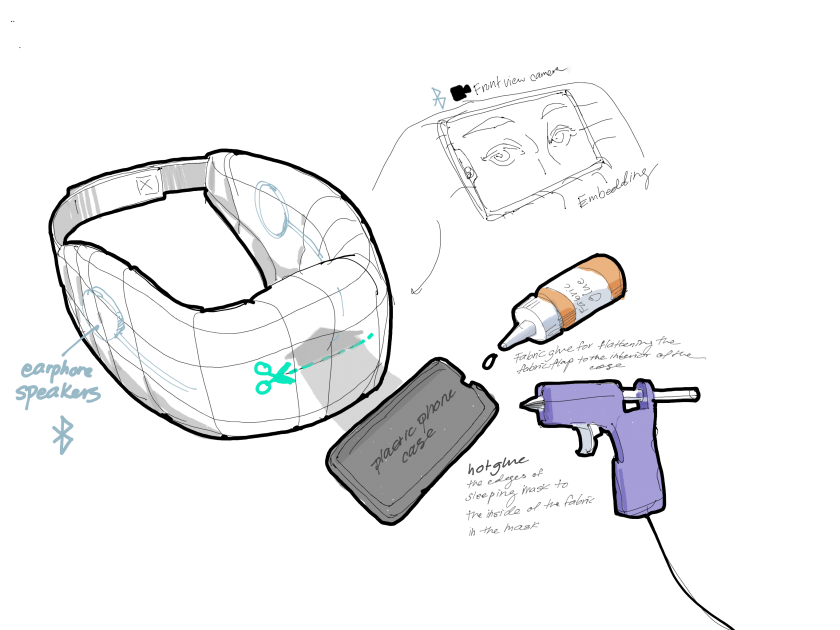

A phone-powered sleeping mask with a set of cyborg eyes and an automatic answering machine to stand in for you while you tumble through REM cycles.

Put it on and conk out as needed. When you wake up, your digital self will give you a rundown of what happened.

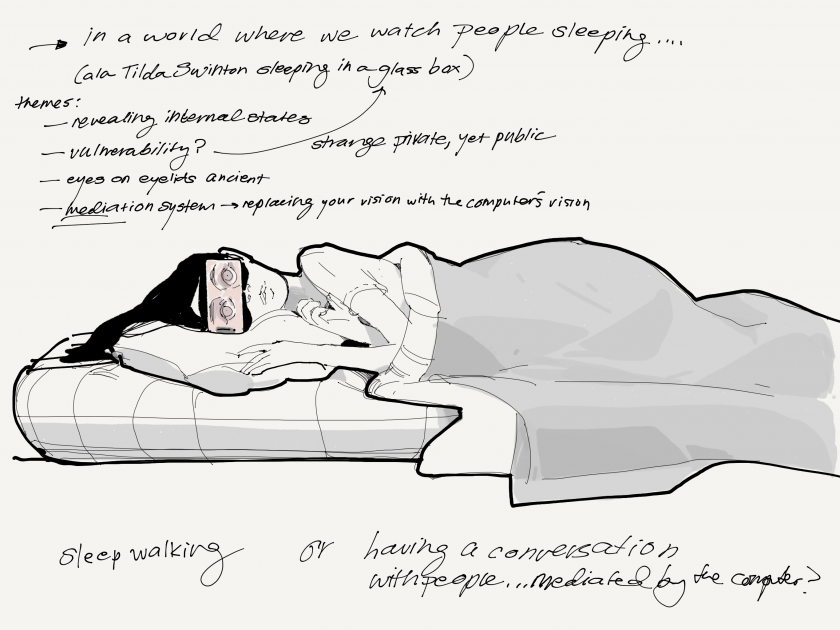

The project was originally inspired by the transparent eyelids pigeons have, which enable them to appear awake while sleeping. This particular quirk has interesting interaction implications in the context of chatbots, artificial voices, computer vision, and the idea of a ‘digital’ self embodied by our personal electronics. We hand off a lot of cognitive load to our devices. So how about turning on a digital self when you’re ready to turn off? The project is a speculative satire that highlights the ever more entwined function and upkeep of self and algorithm. The prototype works as an interactive prop for the concept, which was originally delivered at the show as a live-sleep performance.

- Narrative:

Butterflies, caterpillars, and beetles have all developed patterns to look like big eyes. Pigeons appear constantly vigilant with their transparent eyelids, and in the mangrove forests of India, fishermen wear face masks on the back of their heads to ward off attacks from Bengal tigers stalking from behind. These fun facts fascinated me as a child. In Chinese paintings, the eyes of imperial dragons are left blank, because to fill them in would be to bring the creature to life. In both nature and culture, eyes carry more gravitas than other body parts because they are most visibly affected by and reflective of an internal state. And if we were to go out on a limb and extrapolate further, making and changing eyes could be likened to creating and manipulating self / identity.

Ok, so eyes are clearly a great leverage point. But what did I want to manipulate them for? I knew that there was something viscerally appealing about having and wearing eyes that, with a twist, weren’t reflective of my true internal state (which would be sleeping). There was something just plain exciting about the little act of duplicity.

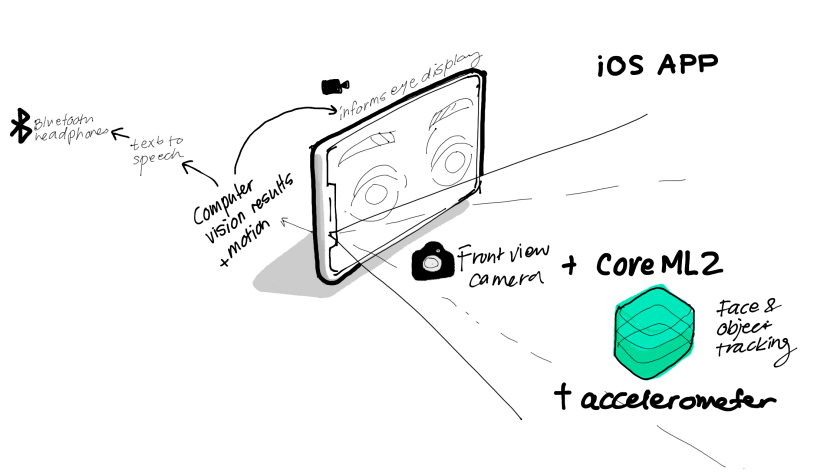

The choice to use a phone is significant beyond its convenient form factor match with the sleeping mask. If anything in the world was supposed to ‘know’ me best, it’d probably be my iPhone. For better or for worse, its content fills my free time, and what’s on my mind is on my phone — and somehow that is an uncomfortable admission to make.

And so, part of what I wanted to achieve with this experience was a degree of speculative discomfort. People should feel somewhat uncomfortable watching someone sleep with their phone eyes on, because it speaks to an underlying idea that one might offload part of (or more of) living their life to an AI.

Of course, however uncomfortable it might be, I do still hope people find a spark of joy in it, because there is something exciting about technology advancing to this implied degree of computer as extension of self.

In more practical terms of assessing my project, I think it hit uncanny and works well enough to get parts of a point across, but I struggle with what tone to use for framing or ‘marketing’ it. I would also love to hear more on how to come up with more considered metrics for evaluating this. On the technical side, the eye gaze tracking could still be finessed. Mapping eye gaze so that the phone always looks like it’s staring straight at any visitors/viewers is an exciting challenge. For now, the system is very much based on how a different artist achieved it in a large-scale installation of his (insert citation), but it could be done more robustly by calculating backwards from depth data the position and angle the eye would need to have. Part of that begins with adjusting the mapping to account for the front-facing camera on the iPhone being off-center from the screen, which is what most people are making ‘eye contact’ with.
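To make the camera-offset idea concrete, here is a minimal geometric sketch. It is written in Python for brevity and is not the code in the app. It assumes the depth data already yields the viewer's face position in camera coordinates (metres, `z` pointing out of the screen), and that the drawn eye's centre sits at a known offset from the camera lens in the screen plane. The function names, the coordinate convention, and the numbers in the usage lines are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Gaze:
    yaw: float    # radians; positive when the viewer is toward +x of the eye
    pitch: float  # radians; positive when the viewer is toward +y of the eye


def gaze_toward_viewer(viewer_cam, eye_offset_from_cam):
    """Angles a drawn eye needs in order to point at the viewer.

    viewer_cam: (x, y, z) of the viewer's face relative to the camera lens,
        in metres, with z > 0 pointing away from the screen (assumed convention).
    eye_offset_from_cam: (dx, dy) of the drawn eye's centre relative to the
        camera lens, measured in the screen plane, in metres.
    """
    vx, vy, vz = viewer_cam
    dx, dy = eye_offset_from_cam
    if vz <= 0:
        raise ValueError("viewer must be in front of the screen (z > 0)")

    # Re-express the viewer's position relative to the eye, not the camera.
    rx, ry = vx - dx, vy - dy

    yaw = math.atan2(rx, vz)
    pitch = math.atan2(ry, math.hypot(rx, vz))
    return Gaze(yaw, pitch)


def pupil_offset(gaze, eyeball_radius):
    """Screen-plane displacement of the pupil for a virtual eyeball.

    Treats the eye as a sphere of the given radius and projects the point
    where the gaze direction meets its surface straight onto the screen.
    """
    x = eyeball_radius * math.cos(gaze.pitch) * math.sin(gaze.yaw)
    y = eyeball_radius * math.sin(gaze.pitch)
    return x, y


# Illustrative numbers only: an eye drawn 7 cm below the camera lens,
# and a viewer standing 50 cm away, directly in front of that eye.
eye_offset = (0.0, -0.07)
viewer = (0.0, -0.07, 0.5)

corrected = gaze_toward_viewer(viewer, eye_offset)
uncorrected = gaze_toward_viewer(viewer, (0.0, 0.0))
```

With the offset applied, the eye looks straight ahead at a viewer who is directly in front of it. Without it, the eye tilts toward where the camera sees the viewer, which is the mismatch described above.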

In my brainstorms, I had imagined many more features to support the theme of the phone taking on the digital self. These included different emotion states as computational filters, microphone input to run NLP on, and automated responses so the phone could converse with people trying to talk to the sleeping user.

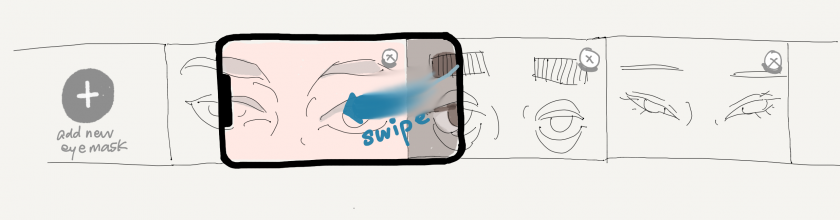

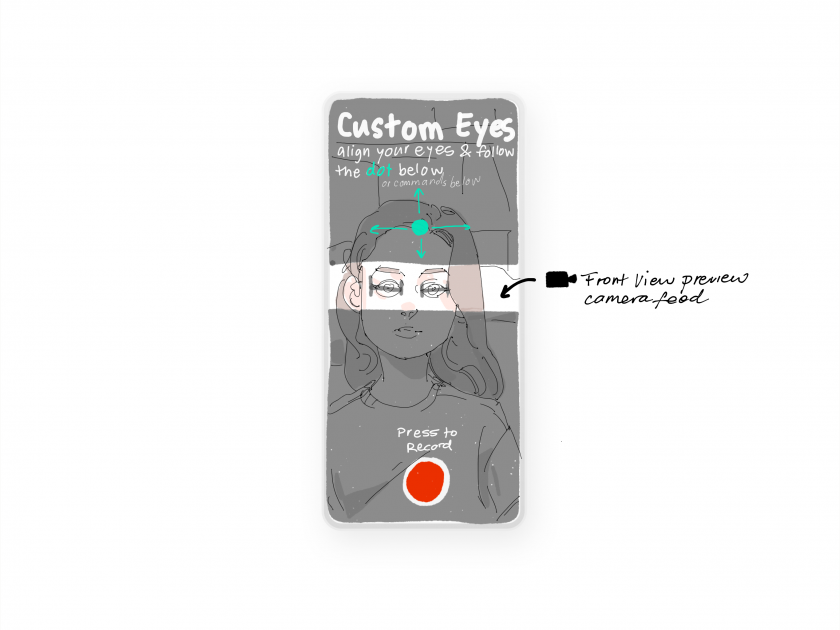

The next iteration of this would be to clean this up to work for users wanting their own custom eyes.

But for now — some other technical demos

Credits:

Thank you Peter Shehan for the concise name! Thank you Connie Ye, Anna Gusman, and Tatyana for joining in on a rich brainstorm session on names and marketing material. Thank you Aman Tiwari, Yujin Ariza and Gray Crawford for that technical/UX brainstorm at 1 in the morning on how I might calibrate a user’s custom eye uploads. I will make sure to polish up the app with those amazing suggestions later on in life.

- Repo Link.

Disclaimer: Code could do with a thorough clean. Only tested as an iOS 12 app built on iPhone X.