Shawn Sims - Looking Outwards - Final

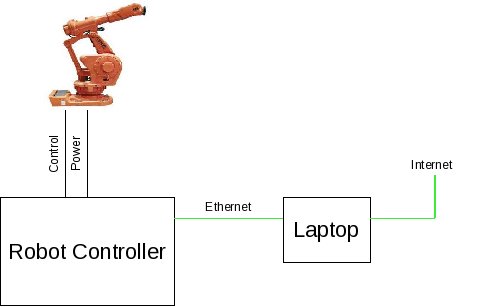

I plan to continue working with the ABB4400 robot in dFab. My final goal is live, interactive control of the machine. This could take the form of interactive fabrication, dancing with the robot, or some kind of camera rig.

Inspiration

A few projects and areas of research have inspired me. Robotic surgery tools are an excellent example of live, interactive control of robots that still preserves the precision and repeatability the machines are designed for. The ultimate goal of my project is to leverage these same properties of the robot through a gestural interface.

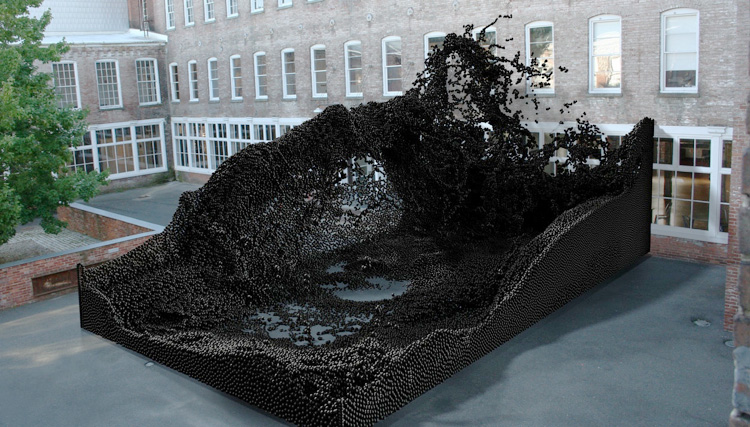

One very interesting design space here is making these robots mobile, so they can perform these tasks in different environments. My vision of the future of architecture is these robots running around building and 3D printing spaces for us, something like this…

Design Goal

The project will explore the relationship between the user's movement and gesture and the fabrication output of the robot. In other words, the robot's interpretation of the input will act on a material that has a distinctive and efficient relationship to the user's gesture. For example, the user bends a flex sensor and the robot bends steel, or the user sketches a 'surface' by moving their hands through the air and the robot mills an interpretation of that surface. Another idea is an additive process, such as gluing parts together based on user input, like this example…
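To make the flex-sensor-to-bending idea a bit more concrete, here is a minimal Python sketch that maps a raw sensor reading onto a target bend angle. The calibration range (200 to 800 raw units), the 90-degree maximum, and the smoothing factor are all hypothetical values chosen for illustration, not measurements from a real sensor. The smoothing step is included because raw flex readings tend to jitter, and the robot should not chase that noise.

```python
def flex_to_angle(raw, raw_min=200, raw_max=800, angle_max=90.0):
    """Map a raw flex-sensor reading to a bend angle in degrees.

    raw_min/raw_max are hypothetical calibration values (sensor straight vs.
    fully bent); readings outside that range are clamped.
    """
    t = (raw - raw_min) / (raw_max - raw_min)
    t = min(max(t, 0.0), 1.0)  # clamp to [0, 1] so the angle never leaves range
    return t * angle_max


class Smoother:
    """Exponential moving average that damps sensor jitter before it reaches the robot."""

    def __init__(self, alpha=0.2):
        self.alpha = alpha  # hypothetical factor: lower = smoother but laggier
        self.value = None

    def update(self, x):
        if self.value is None:
            self.value = x  # the first sample initializes the average
        else:
            self.value = self.alpha * x + (1 - self.alpha) * self.value
        return self.value


if __name__ == "__main__":
    smooth = Smoother(alpha=0.5)
    for raw in [200, 500, 800]:
        print(raw, smooth.update(flex_to_angle(raw)))
```

The smoothing factor trades responsiveness for stability. A lower `alpha` gives steadier bend commands but adds lag on top of whatever delay the robot link already has.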

Technical Hurdles

Open TCP/IP socket communication is proving to be a bit tricky with the ABB RobotStudio software. I believe we can solve this problem, but there are some worries about making sure we don't make the super powerful robot bang into a wall or something, because that would be costly and bad.
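One way to reduce the wall-banging risk is to filter every target on the PC side before it goes over the socket. The minimal Python sketch below does this in three steps:

- It clamps each point into a safe box.
- It caps how far the arm may move per update, as a crude speed limit.
- It then sends the point as a line of text.

Several parts are assumptions for illustration: the box dimensions, the `max_step` value, the `x,y,z` line format, and the helper names. None of these reflect an actual ABB or RobotStudio protocol, and a matching program on the robot side would still have to read and parse these lines.

```python
import math
import socket
from dataclasses import dataclass


@dataclass
class SafeBox:
    """Hypothetical safe workspace in robot base coordinates (mm)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float

    def clamp(self, p):
        """Pull a target point back inside the box so bad input can't aim at a wall."""
        x, y, z = p
        return (
            min(max(x, self.x_min), self.x_max),
            min(max(y, self.y_min), self.y_max),
            min(max(z, self.z_min), self.z_max),
        )


def limit_step(prev, target, max_step):
    """Shorten the move if it exceeds max_step mm: a crude per-update speed limit."""
    d = [t - p for p, t in zip(prev, target)]
    dist = math.sqrt(sum(c * c for c in d))
    if dist <= max_step:  # also covers dist == 0, so no division by zero below
        return tuple(target)
    scale = max_step / dist
    return tuple(p + c * scale for p, c in zip(prev, d))


def encode_target(p):
    """Hypothetical wire format: one 'x,y,z' line per target."""
    return ("%.1f,%.1f,%.1f\n" % tuple(p)).encode("ascii")


def send_target(sock, prev, raw_target, box, max_step=20.0):
    """Clamp, rate-limit, and send one target; returns the point actually sent."""
    safe = limit_step(prev, box.clamp(raw_target), max_step)
    sock.sendall(encode_target(safe))
    return safe
```

In use, the PC would connect with `socket.create_connection((host, port))` to whatever address the robot-side program listens on (host and port are placeholders here) and call `send_target` once per gesture update, feeding each returned point back in as `prev`. A filter like this only guards against bad input from the gesture side. The controller's own configured limits and the e-stop remain the real safety net.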

Question

What interesting interfaces or interactions can you imagine with the robot? Input // output?

There are a few constraints, such as speed, room size, and safety.

Thanks

Here are today’s comments:

This seems like an involved project, part of a longer ongoing one. What part of this do you want to realize for the project in this class? I think you need to scale back a bit and work on a submodule for April. Choose a realizable goal.

Can you make a DIY match-moving rig with the arm using depth data? I would like that for my own personal filmmaking needs: http://en.wikipedia.org/wiki/Match_moving (a robotic camera rig, with either a camera or a Kinect).

That picture is real—it was in the courtyard of a museum in Prague. I have a looking outwards about it. -Max

That is one big ass robot.

There’s a robot at the Carnegie Science Center that might be interesting for inspiration. Here’s a video of it: http://www.vimeo.com/21702710 – Ben

Hell yeah! Big ass robots.

Damn. Brave volunteer. Is that particle thing real? SO FREAKING COOL.

Okay, cool. But what is your actual output going to be? What is the thing? The process will undoubtedly be cool, but you need a concept for the final form/product. Even though just figuring out how to control it is going to be difficult enough, I definitely think you should make something at the end of the day (even if it's something simple/small).

When you get live control of the robot, I think you should have a simple, purely novel demonstration of that interactivity, maybe something like playing catch, with one of the people controlling how the robot arm catches?

What if the robot helps you build something, rather than just building whatever on its own? This might be an interesting twist.

THAT SOUNDS INTENSE.

There will always be a delay of some sort in “real-time” fabrication.

What about clay punching?

What about something that copies an idiosyncratic lump of clay that the user has sculpted?

What about copying+iterating the visitor’s movements? The user scratches the clay, then the robot makes 10 more scratches that are identical.

Building on Ben’s idea, it could be cool to collectively create something with the robot, so each person can contribute and decide where some piece of the final structure should go.

Definitely intense. I like the superpower idea, reminds me of exo-skeleton devices, but frankly I don’t think that’s the most effective way to develop this project.

I’m not sure interactive control can be fast enough, i.e., can the robot keep up with human speed?

Almost all kinect + robot arm demos I’ve seen online have been pretty laggy. Functional, but functional like three seconds late.

omg that robot is so scary it looks like a tarantula oh god

Something about having the robot in my guts makes me uneasy.

Going wild with the robot.

Anything that doesn’t involve a mouse would be good.

Having a robot do surgery on a kids toy would be awesome. Or if it played that board game Operation.

Anything that involves live mice, though, is a whole ‘nother ballgame.

I think direct interaction is better. Drawing => scratching a sheet of metal?