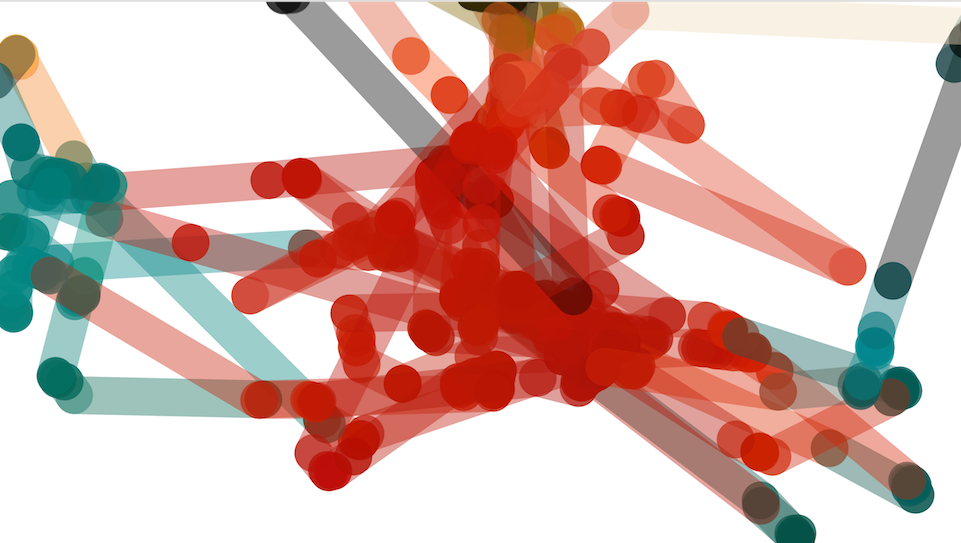

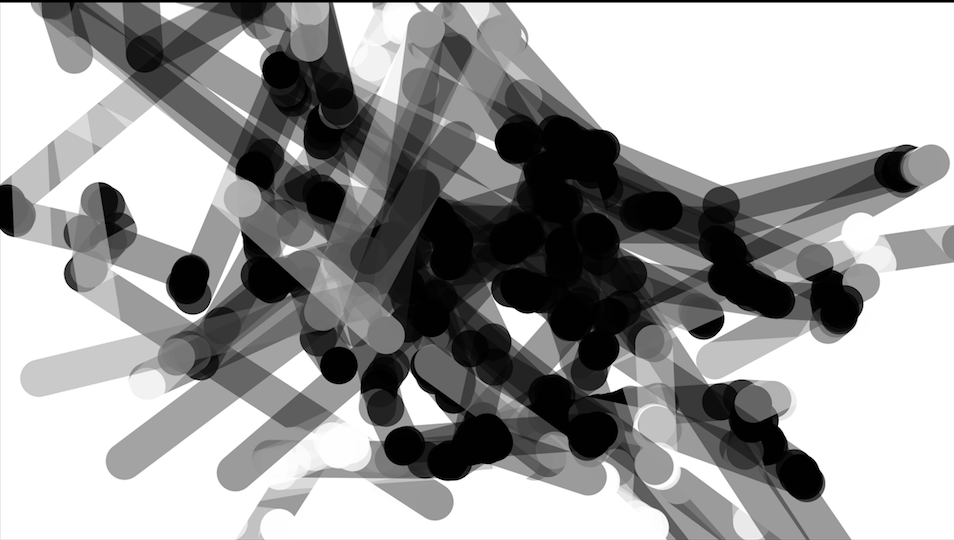

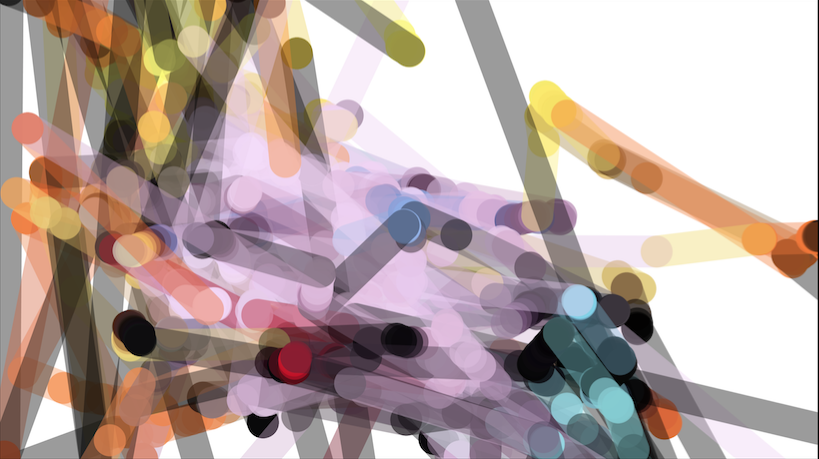

An early version of the program simply draws a semi-transparent mark at each x,y location on screen where the viewer gazes. This was a way to get a sense of how the eye moves, what the drawing looks like naturally, and how much control someone has over their eye drawings (not very much).
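To illustrate why repeated glances build up darker areas, here is a minimal sketch in plain Java rather than Processing. It is not the project's actual code. It assumes a grayscale canvas where 1.0 is blank paper and 0.0 is solid ink, square marks, and a fixed opacity per mark. The class `GazeMarks` and its `mark` and `valueAt` methods are hypothetical names chosen for this example. Each mark is blended with black ink using standard "source-over" compositing, so a spot looked at n times with opacity a ends up at (1 − a)^n.

```java
import java.util.Arrays;

/** Hypothetical sketch: accumulate semi-transparent gaze marks on a grayscale canvas. */
class GazeMarks {
    final int width, height;
    final double[] canvas; // 1.0 = blank paper, 0.0 = solid black ink

    GazeMarks(int width, int height) {
        this.width = width;
        this.height = height;
        this.canvas = new double[width * height];
        Arrays.fill(canvas, 1.0);
    }

    /** Blend a square mark centered on the gaze point, clipped to the canvas. */
    void mark(int gx, int gy, int radius, double alpha) {
        for (int y = gy - radius; y <= gy + radius; y++) {
            for (int x = gx - radius; x <= gx + radius; x++) {
                if (x < 0 || y < 0 || x >= width || y >= height) continue; // off-canvas
                int i = y * width + x;
                // Source-over with black ink (value 0): dst = 0 * alpha + dst * (1 - alpha)
                canvas[i] = canvas[i] * (1.0 - alpha);
            }
        }
    }

    /** Current canvas value at (x, y). */
    double valueAt(int x, int y) {
        return canvas[y * width + x];
    }
}
```

For example, calling `mark(50, 50, 3, 0.2)` twice and then `mark(52, 51, 3, 0.2)` covers pixel (50, 50) three times, leaving it at 0.8³ = 0.512. Frequently revisited spots therefore darken gradually without ever jumping straight to solid black.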

In this video clip, I screen-recorded myself running the program on a video from https://vimeo.com/uahirise (high-resolution photos of the Martian surface). At the end of the video, you can see the resulting eyeball drawing.

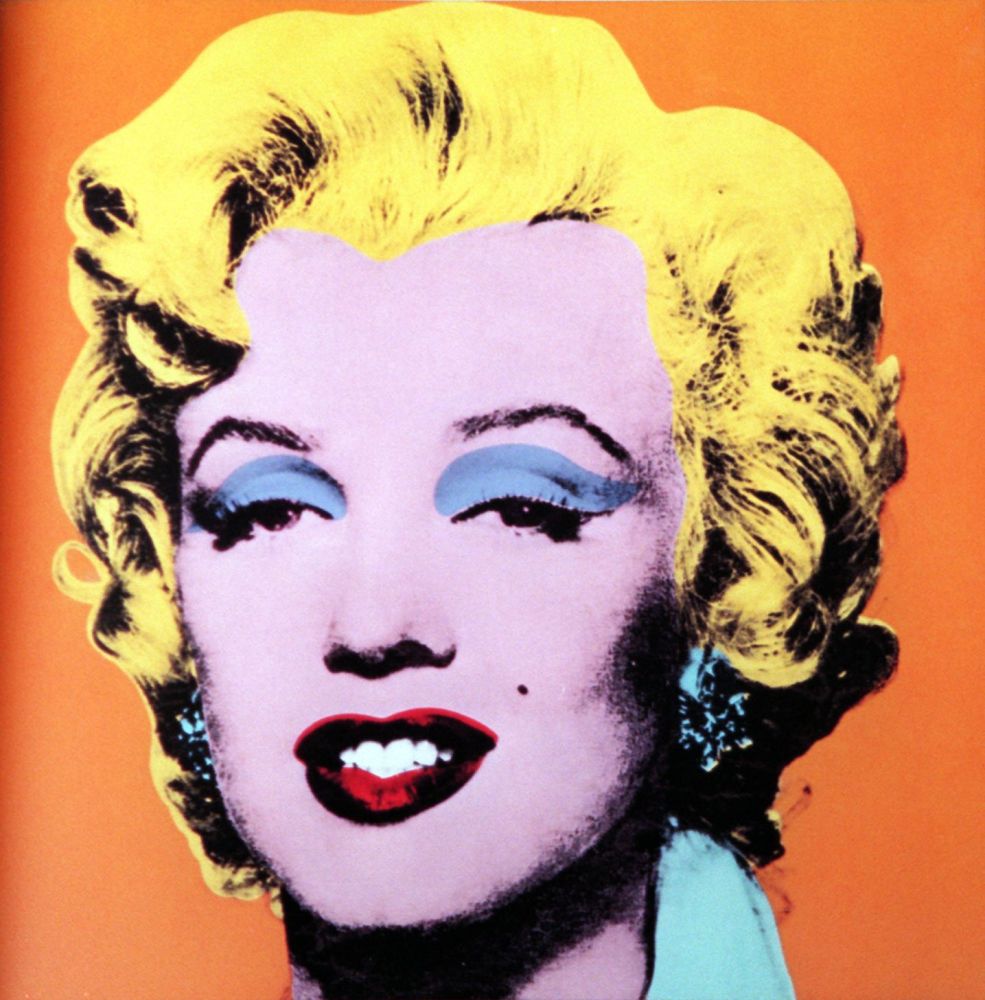

Part of the inspiration for this project came from curiosity about trying a new input device: an eyetracker (see photo below). One way to augment the act of drawing is to change how the drawing is recorded or which body part controls it. Eyetrackers are typically used for research, usability testing, and advertising, to see what holds people's attention the longest, what they look at, and what they don't look at. With that history in mind, I decided to apply eyetracking to other visual artifacts, such as movies, clips, drawings, and paintings. I like the idea of collecting gaze data over time and visualizing it as a single image. If the experience or memory of looking at an image or movie could be condensed into a single frame, it could be a way to reflect on what you found instinctively compelling.

For the implementation, I used Processing to record the gaze data streamed from the Eye Tribe tracker. As a person looks at the frame, the program collects the gaze coordinates along with the pixel color at those coordinates. When the viewing session ends, the program displays the gaze drawing.
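The record-then-render idea can be sketched in plain Java using the standard `java.awt.image.BufferedImage` class. This is an illustration under stated assumptions, not the original Processing sketch. It does not talk to the Eye Tribe at all: it assumes some other code already delivers gaze points in screen pixels, along with the frame currently on screen. The names `GazeRecorder`, `GazeSample`, `record`, and `render`, as well as the dot radius and opacity parameters, are all hypothetical.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical recorder: stores gaze points with the frame color under each one. */
class GazeRecorder {
    /** One gaze sample: screen coordinates plus the frame's packed ARGB color there. */
    record GazeSample(int x, int y, int argb) {}

    private final List<GazeSample> samples = new ArrayList<>();

    /** Call once per tracker update with the frame currently on screen. */
    void record(BufferedImage frame, int gx, int gy) {
        // Skip gaze points outside the frame (e.g. the viewer glanced off-screen).
        if (gx < 0 || gy < 0 || gx >= frame.getWidth() || gy >= frame.getHeight()) return;
        samples.add(new GazeSample(gx, gy, frame.getRGB(gx, gy)));
    }

    List<GazeSample> samples() {
        return samples;
    }

    /** After viewing: paint each sampled color as a semi-transparent dot on a blank canvas. */
    BufferedImage render(int width, int height, int radius, int alpha) {
        BufferedImage out = new BufferedImage(width, height, BufferedImage.TYPE_INT_ARGB);
        Graphics2D g = out.createGraphics();
        for (GazeSample s : samples) {
            Color c = new Color(s.argb(), false); // ignore the frame's alpha, keep its RGB
            g.setColor(new Color(c.getRed(), c.getGreen(), c.getBlue(), alpha));
            g.fillOval(s.x() - radius, s.y() - radius, 2 * radius, 2 * radius);
        }
        g.dispose();
        return out;
    }
}
```

The sketch stores the sampled color rather than re-reading the frame at render time. For a movie, the frame under the eye changes constantly, so the color has to be captured at the moment of looking. `record` requires Java 16 or later.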