For my final project, I continued work begun in a previous project: an attempt to isolate and re-contextualize images of people in Duke University’s collection of images of outdoor advertisements.

The previous iteration, in which I located as many faces as I could and sequenced them by date, was an interesting step, but I wanted to work with the images in a way that didn’t strip away their original context quite so completely.

Conversations with Luca and Golan led me to Microsoft COCO, and from there to this research on conditional random fields as recurrent neural networks (CRF as RNN), a semantic image segmentation method I hoped could isolate entire human figures, not just faces. Although the group published their source code, installing it seemed out of scope for a class deadline. Thankfully, they also host a working online demo, so I cobbled together a script to upload the whole archive to the tool and download the results.

Then, using a Photoshop script, I extracted all of the highlighted regions from the processed images and used them as templates to cut the found people out of the original photos.
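The Photoshop step amounts to using the segmentation output as a mask over the original photo. Here is a minimal Python sketch of that idea, not my actual script. It assumes that both images are the same size and are represented as rows of `(R, G, B)` tuples, and that the demo paints each class in one flat label color. The names `cut_out` and `PERSON_COLOR` are hypothetical, and the color value is an assumption.

```python
from typing import List, Set, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]

# Assumed label color for "person" (the PASCAL VOC palette value);
# check it against the demo's actual output before relying on it.
PERSON_COLOR: RGB = (192, 128, 128)


def cut_out(original: List[List[RGB]],
            segmented: List[List[RGB]],
            keep_colors: Set[RGB]) -> List[List[RGBA]]:
    """Return an RGBA copy of `original` in which only pixels whose
    segmentation label is in `keep_colors` stay visible."""
    same_size = len(original) == len(segmented) and all(
        len(a) == len(b) for a, b in zip(original, segmented))
    if not same_size:
        raise ValueError("original and segmented images must be the same size")
    result = []
    for orig_row, seg_row in zip(original, segmented):
        row = []
        for (r, g, b), label in zip(orig_row, seg_row):
            if label in keep_colors:
                row.append((r, g, b, 255))  # inside the mask: opaque
            else:
                row.append((0, 0, 0, 0))    # outside the mask: transparent
        result.append(row)
    return result
```

For example, `cut_out([[(10, 20, 30), (40, 50, 60)]], [[PERSON_COLOR, (0, 0, 0)]], {PERSON_COLOR})` returns `[[(10, 20, 30, 255), (0, 0, 0, 0)]]`. Exact color matching assumes the segmentation image hasn't been resized or anti-aliased. If it has, a small color tolerance would be needed.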

Here’s what the different stages of the process look like:

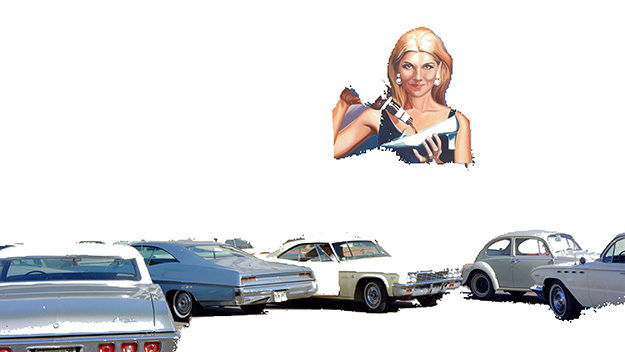

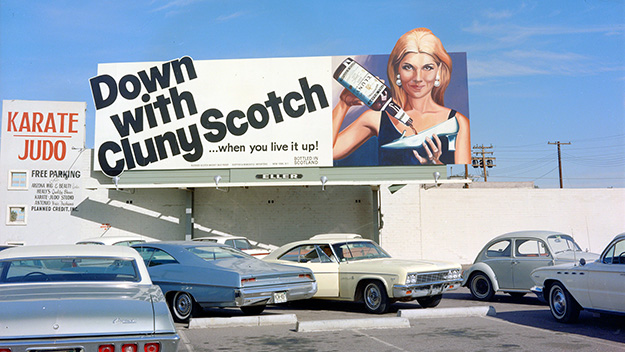

Example of an image from the archive, before processing.

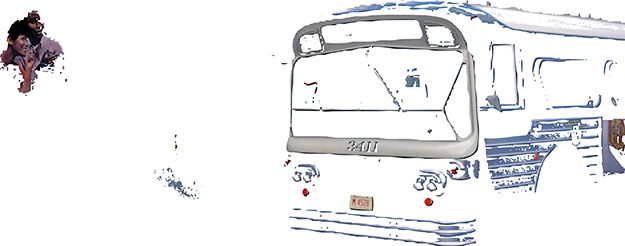

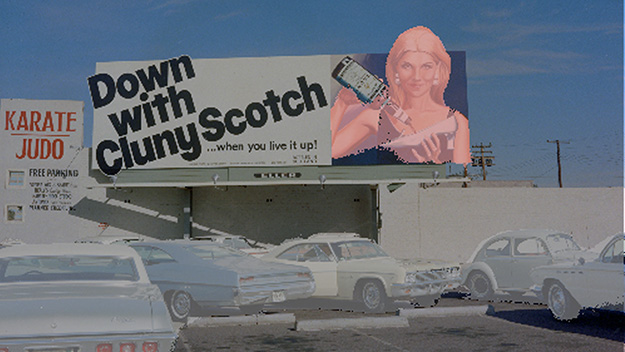

Image returned by the CRF as RNN demo, with people and vehicles highlighted.

Because of a quirk in how I was using Photoshop, both people and cars were cut out (both are categories the semantic image segmentation demo had been trained on). This wasn’t my original intent, but the results have proved fascinating, setting up unexpected relationships between the people on the billboards and the street scenes they overlook.
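A selection that grabs every highlighted region, regardless of color, will pick up cars along with people. Keeping only the people would mean selecting a single label color. The sketch below assumes the demo uses the standard PASCAL VOC color palette, which is worth verifying against real output. In that palette, class 15 is "person" and class 7 is "car". The sketch generates the palette color for a class index, which shows that the two classes are painted differently. `voc_color`, `is_person`, and the constants are illustrative names.

```python
from typing import Tuple

# PASCAL VOC class indices (assumed to match the demo's label set).
CAR = 7
PERSON = 15


def voc_color(label: int) -> Tuple[int, int, int]:
    """Return the PASCAL VOC palette color for a class index.

    The palette spreads the bits of the index across R, G and B,
    filling each channel from its most significant bit downward."""
    r = g = b = 0
    c = label
    for j in range(8):
        r |= ((c >> 0) & 1) << (7 - j)
        g |= ((c >> 1) & 1) << (7 - j)
        b |= ((c >> 2) & 1) << (7 - j)
        c >>= 3
    return (r, g, b)


def is_person(pixel: Tuple[int, int, int]) -> bool:
    """True only for pixels painted with the person color."""
    return pixel == voc_color(PERSON)
```

Here `voc_color(PERSON)` is `(192, 128, 128)` and `voc_color(CAR)` is `(128, 128, 128)`. Testing pixels with `is_person` rather than "anything that isn't background" would leave the cars behind.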

More of these cutout images are available here.

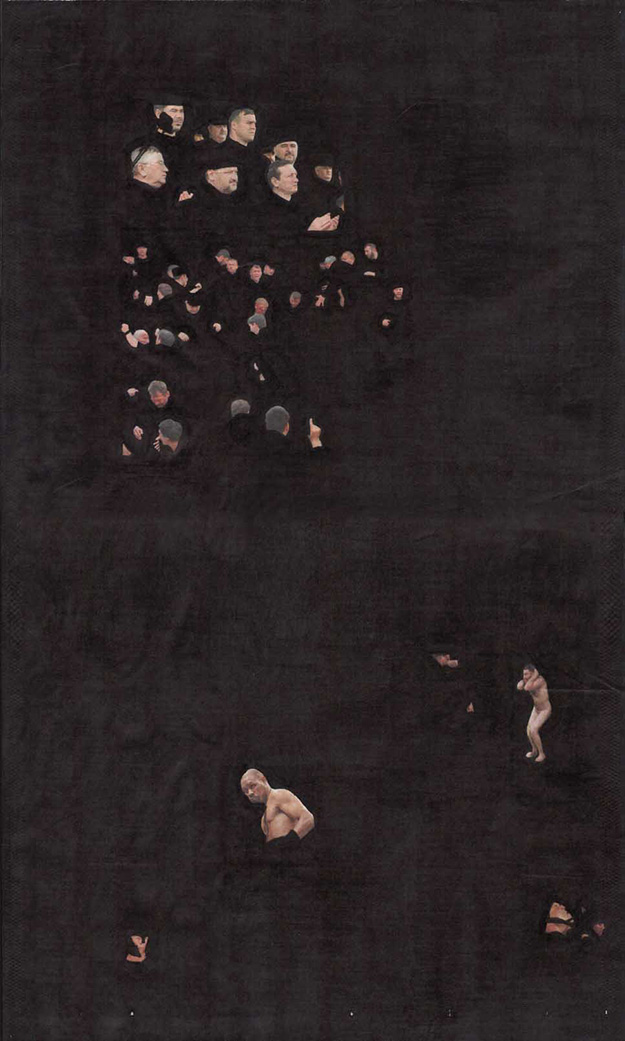

In conversation, Golan and I noted that the cutout images are reminiscent of The Epic, a project by Rutherford Chang in collaboration with Emily Chua in which entire newspaper pages were blacked out except for the faces.

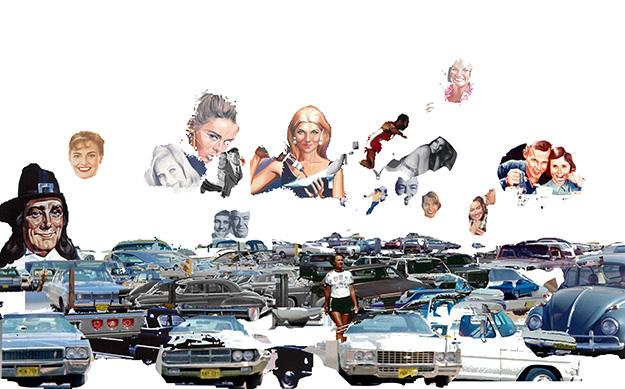

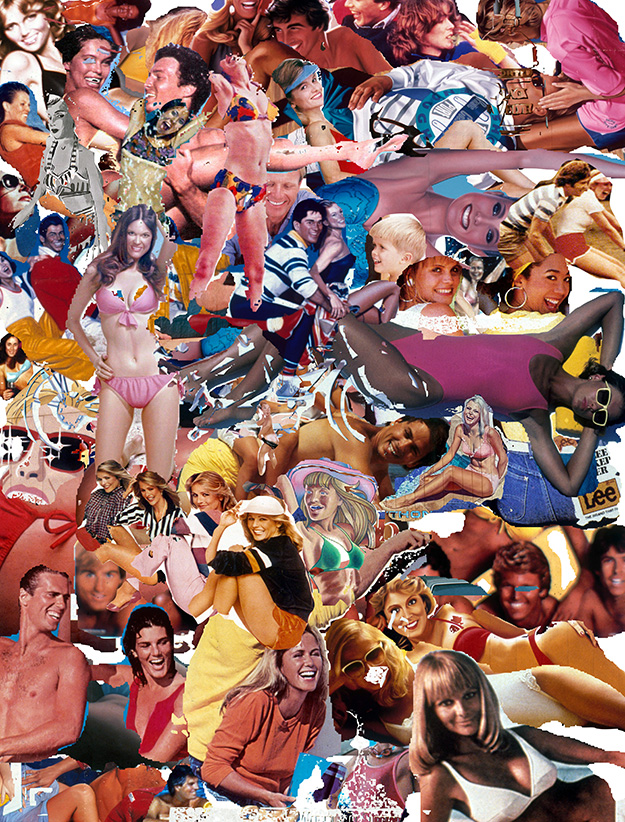

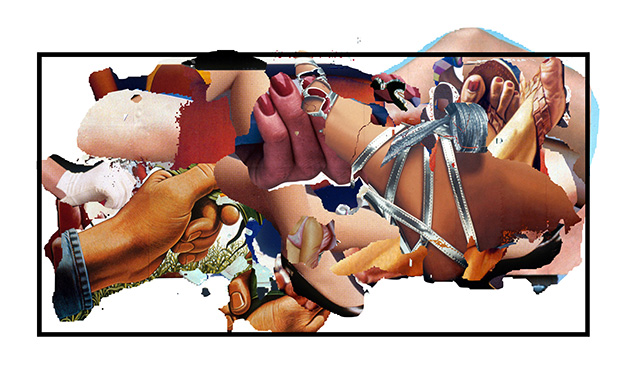

My next impulse was to experiment with combinations of the resulting cutouts. Here are two collages that were created “by feel”:

The general response to the single-image cutouts was that there is something interesting there, waiting to be pushed further. The response to the collages was that too much of my hand shows in them, which distracts from what is inherently interesting in the set. So for this final iteration (for now), I made more collages, but left every figure in the position it occupies in its original advertisement.
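Stacking cutouts while leaving every figure where it stood can be sketched as ordinary alpha compositing (the Porter-Duff "over" operator) on a shared canvas. This is an illustration rather than how the collages were actually made. It hypothetically assumes every cutout has already been scaled to the same canvas size, so a pixel's coordinates are its position in the original advertisement. Layers later in the list cover earlier ones wherever they are opaque. `over` and `stack_in_place` are illustrative names.

```python
from typing import List, Tuple

RGBA = Tuple[int, int, int, int]


def over(src: RGBA, dst: RGBA) -> RGBA:
    """Porter-Duff "over" for straight (non-premultiplied) 8-bit RGBA."""
    sa = src[3] / 255
    da = dst[3] / 255
    out_a = sa + da * (1 - sa)
    if out_a == 0:
        return (0, 0, 0, 0)
    r, g, b = (round((s * sa + d * da * (1 - sa)) / out_a)
               for s, d in zip(src[:3], dst[:3]))
    return (r, g, b, round(out_a * 255))


def stack_in_place(width: int, height: int,
                   layers: List[List[List[RGBA]]]) -> List[List[RGBA]]:
    """Composite same-size cutout layers onto a transparent canvas.

    Pixels are never moved, so each figure keeps its original position;
    layers later in the list are drawn on top of earlier ones."""
    canvas = [[(0, 0, 0, 0)] * width for _ in range(height)]
    for layer in layers:
        if len(layer) != height or any(len(row) != width for row in layer):
            raise ValueError("every layer must match the canvas size")
        for y in range(height):
            for x in range(width):
                canvas[y][x] = over(layer[y][x], canvas[y][x])
    return canvas
```

Because nothing is translated or rotated, the only compositional decision left is the stacking order of the layers. That choice fits the goal of keeping my hand out of the arrangement.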

The script for batch processing via the CRF as RNN demo was written in Python, and the Photoshop script for cutting out the highlighted areas was written in JavaScript. Both are available on my GitHub here.
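The batch script isn't reproduced in this post, and the demo's upload interface isn't documented here. The sketch below therefore abstracts the demo call as a hypothetical `segment` function that takes image bytes and returns result bytes. It shows the general shape of such a driver: walk the archive folder, skip images that already have a result so an interrupted run can resume, and save each result under a name derived from its source file. The `_segmented.png` naming and the `process_archive` function are illustrative assumptions.

```python
from pathlib import Path
from typing import Callable, List


def process_archive(src_dir: Path, out_dir: Path,
                    segment: Callable[[bytes], bytes],
                    pattern: str = "*.jpg") -> List[Path]:
    """Send every image in src_dir through `segment` and save the results.

    Images whose output file already exists are skipped, so an
    interrupted run can be restarted without re-uploading everything.
    Returns the list of output files written during this run."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for src in sorted(src_dir.glob(pattern)):
        dst = out_dir / (src.stem + "_segmented.png")  # hypothetical naming
        if dst.exists():
            continue  # already processed on an earlier run
        dst.write_bytes(segment(src.read_bytes()))
        written.append(dst)
    return written
```

Passing the demo call in as a function keeps the file handling testable offline. A real `segment` would also need to cope with network errors and any rate limits the demo imposes.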