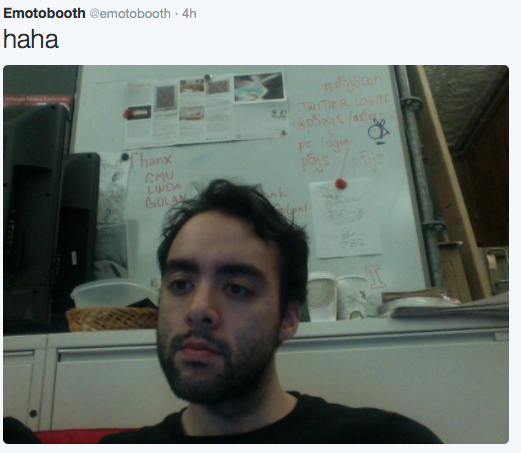

@emotecam monitors users’ keystrokes and takes photos of their faces when they emote in text. It then tweets their real face, captioned with what they typed.
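To make the trigger concrete, here is a minimal sketch of how a keystroke monitor might decide that someone has just emoted. It assumes the trigger is a simple regex match on the end of the recently typed text. The pattern list, the `EmoteDetector` class, and its `on_key` method are hypothetical illustrations, not Emotecam’s actual code. Capturing the webcam photo and posting the tweet are left out.

```python
from __future__ import annotations

import re
from collections import deque

# Hypothetical trigger patterns: eyes, optional nose, mouth (e.g. ":)", ";-P", "=D"), plus "<3".
# Emotecam's real detection rules are not documented; this is only an illustration.
EMOTICON_RE = re.compile(r"(?:[:;=][-']?[)(DPpO|]|<3)$")


class EmoteDetector:
    """Buffers recent keystrokes and reports when the text just typed ends in an emoticon."""

    def __init__(self, max_chars: int = 140) -> None:
        # Keep only the most recent characters; older ones fall off automatically.
        self.buffer: deque[str] = deque(maxlen=max_chars)

    def on_key(self, char: str) -> str | None:
        """Feed one typed character; return the caption text if an emoticon was just completed."""
        if char == "\n":
            # Treat Enter as the end of a message and start a fresh buffer.
            self.buffer.clear()
            return None
        if char == "\b":
            # Backspace removes the last buffered character, if any.
            if self.buffer:
                self.buffer.pop()
            return None
        self.buffer.append(char)
        text = "".join(self.buffer)
        if EMOTICON_RE.search(text):
            # A real bot would take a webcam photo here and tweet it with `text`
            # as the caption; both steps are out of scope for this sketch.
            return text
        return None
```

Feeding it the characters of `"see you soon :D"` one at a time returns `None` for every key except the final `D`, which returns the whole line as the would-be caption. Checking only the end of the buffer means each emoticon fires once, at the moment it is completed, which matches the idea of photographing the face at the instant of emoting.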

Emotecam is a continuation of an earlier project of mine called Emoticam (renamed on Twitter because the name conflicted with an existing account), which was originally inspired by a conversation I had with a friend over gchat about how blank my face stayed regardless of what we were talking about. I’ve considered it unfinished because the original version required a shared Dropbox folder between me and each user, which made it impossible to distribute publicly. It also lacked visibility, since it published results only to a webpage (which can be seen here). I decided to use this opportunity to address both problems.

Since this is based on an existing project, the individual results haven’t been able to surprise me, but I’m looking forward to seeing how different it feels to have more eyes on it in a social context. As for Emotecam’s identity as a bot, I think it falls best under the category of a ‘feed’, in that the automation is focused on transmitting data about users’ off-network activity. For users of the app and their friends, I can see value in following the feed. In my experience with v1, although one expects the results to show a kind of disconnect, there’s also an odd feeling of connection in getting to see these particular snippets of friends’ recent activity. As for people further removed from those appearing in the feed, I’d be a bit surprised to see many of them following @emotecam, but I’m curious to see what about the project does click for strangers.