Adam Milner, an MFA student in the School of Art, manually collected 7,000 images of torsos from Grindr over the course of a few years. I’m currently collaborating with him to analyze the dataset computationally, searching for new forms of meaning.

The torso is the most typical photo used in Grindr profiles. Users avoid face photos in order to preserve anonymity, while explicit photos are immediately removed by the site. This leaves the classic torso, the quintessential symbol of masculinity, as the defining feature that these men put forth as their identity.

We began by running the images through ofxTSNE, an openFrameworks addon that implements t-SNE, a machine learning algorithm for dimensionality reduction. Each image is first encoded as a feature vector using ofxCcv, and t-SNE then reduces these vectors to two dimensions. This process produces clusters of similar images. However, since all of the images are of torsos, there is one primary cluster, within which images are grouped into subclusters based on additional characteristics such as shorts, phones, and hair.

Converting the photos to greyscale before running them through t-SNE also helped the algorithm cluster similarly oriented torsos.
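As a rough illustration of the greyscale step, here is a minimal Python sketch. It assumes the common Rec. 601 luma weights and a hypothetical `to_greyscale` helper operating on nested lists of RGB tuples; the conversion we actually used may have differed.

```python
# Minimal sketch of a greyscale conversion (not the original pipeline code).
# Assumption: Rec. 601 luma weights, a common default for RGB -> grey.

def to_greyscale(pixels):
    """Convert rows of (r, g, b) tuples (0-255) into rows of grey values (0-255)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in pixels
    ]


# A tiny hypothetical 2x2 "image": red, green, blue, white.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
grey = to_greyscale(image)
```

Dropping color leaves only shape and shading for the feature encoder to work with, which is one plausible reason orientation became a stronger clustering signal.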

Using ofxAssignment, the images were then assigned to the nearest (roughly speaking) square on a large grid.
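To give a sense of what "nearest, roughly speaking" means, here is a simplified Python sketch of snapping 2D points to distinct grid cells. It is a greedy approximation written for illustration, not the solver ofxAssignment uses; the function name `assign_to_grid` and the unit-square coordinates are assumptions.

```python
from itertools import product

# Greedy sketch of grid assignment (an illustrative simplification,
# not ofxAssignment's actual solver).

def assign_to_grid(points, cols, rows):
    """Map 2D points in the unit square to distinct grid cells.

    Returns a dict of point index -> cell index (row-major order).
    """
    # Cell centers of a cols x rows grid covering the unit square.
    cells = [
        ((c + 0.5) / cols, (r + 0.5) / rows)
        for r, c in product(range(rows), range(cols))
    ]
    if len(points) > len(cells):
        raise ValueError("more points than grid cells")

    # Every (point, cell) pairing, sorted by squared distance.
    candidates = sorted(
        ((px - cx) ** 2 + (py - cy) ** 2, i, j)
        for i, (px, py) in enumerate(points)
        for j, (cx, cy) in enumerate(cells)
    )

    assignment = {}
    used_cells = set()
    for _, i, j in candidates:
        # Take the closest pairing whose point and cell are both still free.
        if i in assignment or j in used_cells:
            continue
        assignment[i] = j
        used_cells.add(j)
    return assignment


# Two points compete for the same corner cell; one is bumped to a neighbor.
layout = assign_to_grid([(0.1, 0.1), (0.12, 0.1), (0.9, 0.9)], cols=2, rows=2)
```

Because each cell holds only one image, some images end up in a neighboring square rather than their true nearest one, which is why the grid only approximately preserves the t-SNE layout.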

Visualizing the images this way was a good start toward understanding the dataset’s patterns. However, the brute force of this visualization clouds the more poignant aspects of the images.

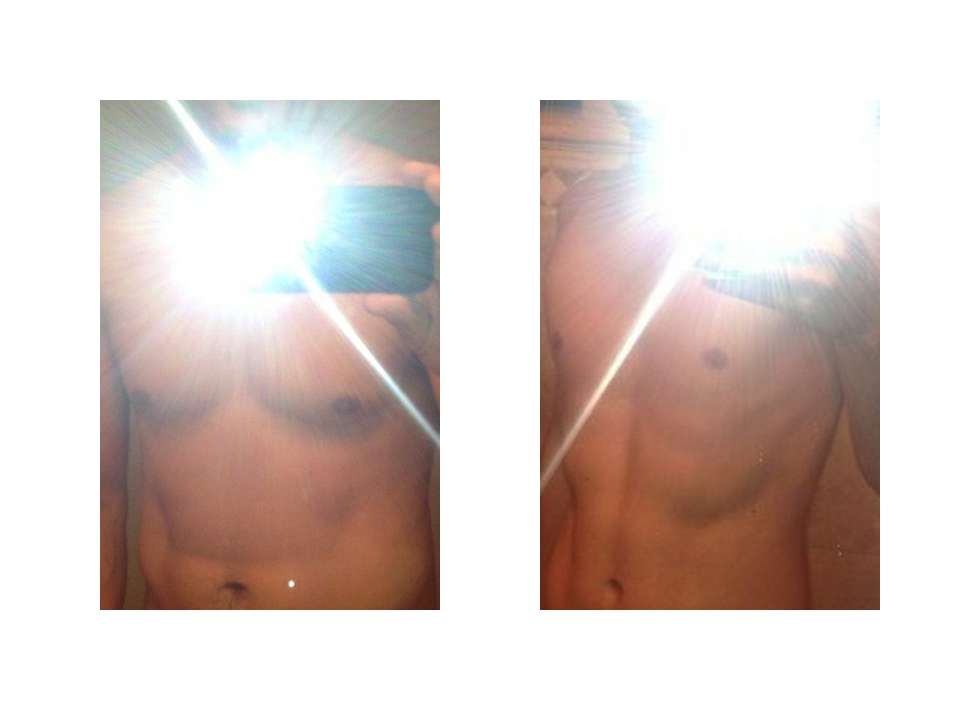

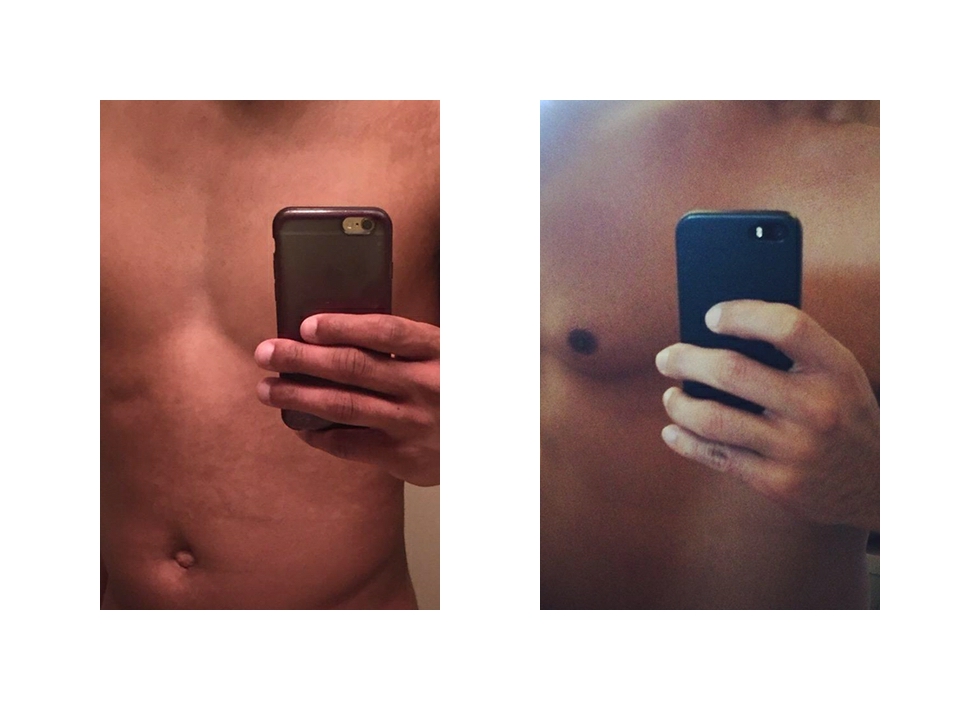

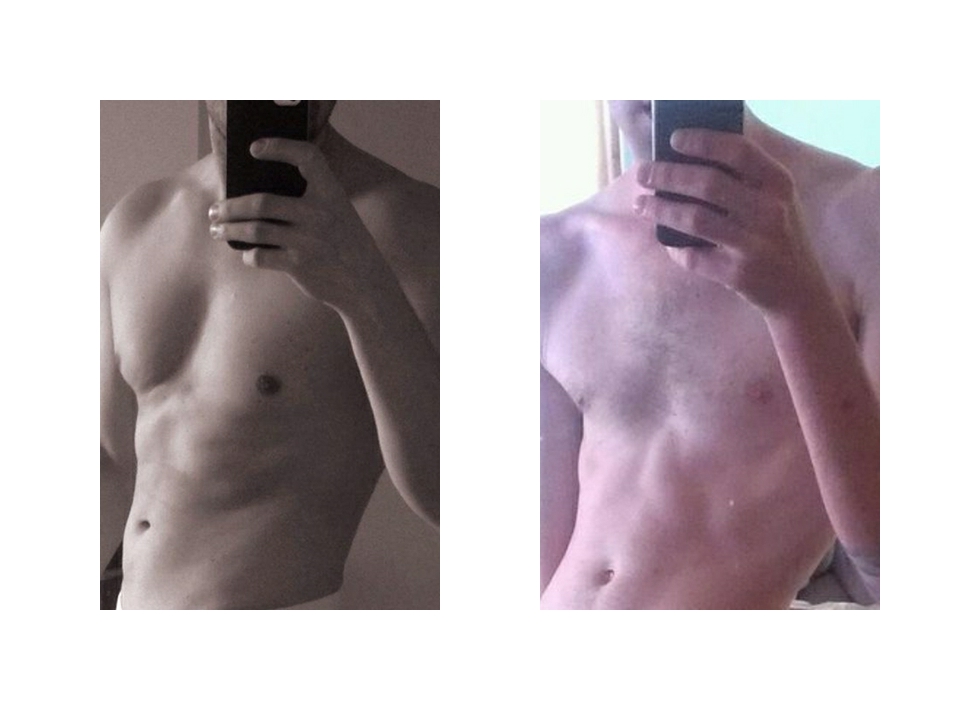

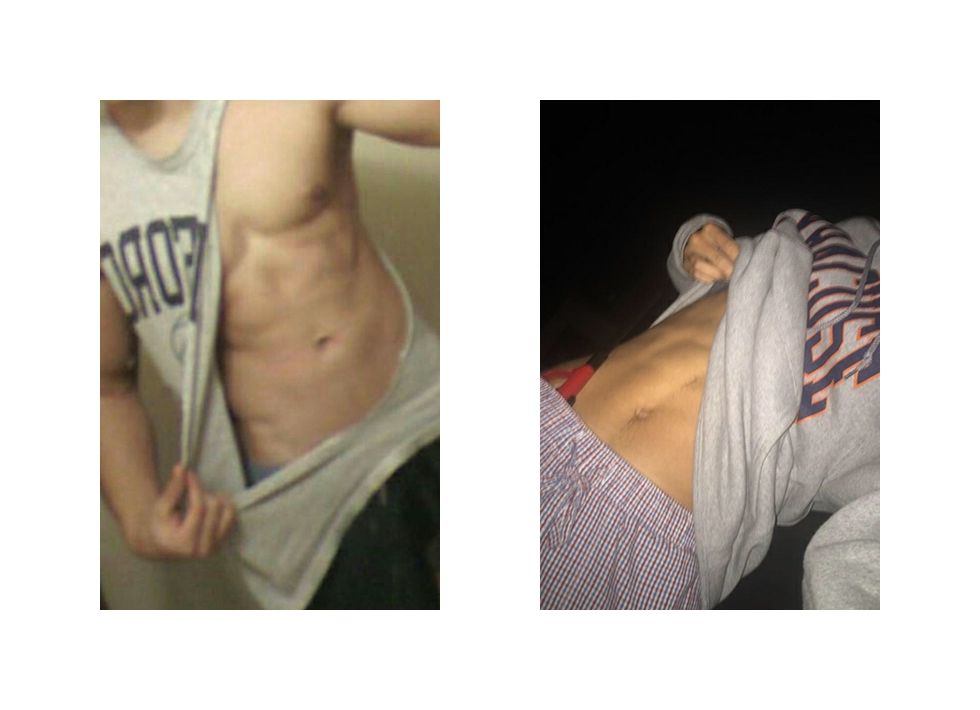

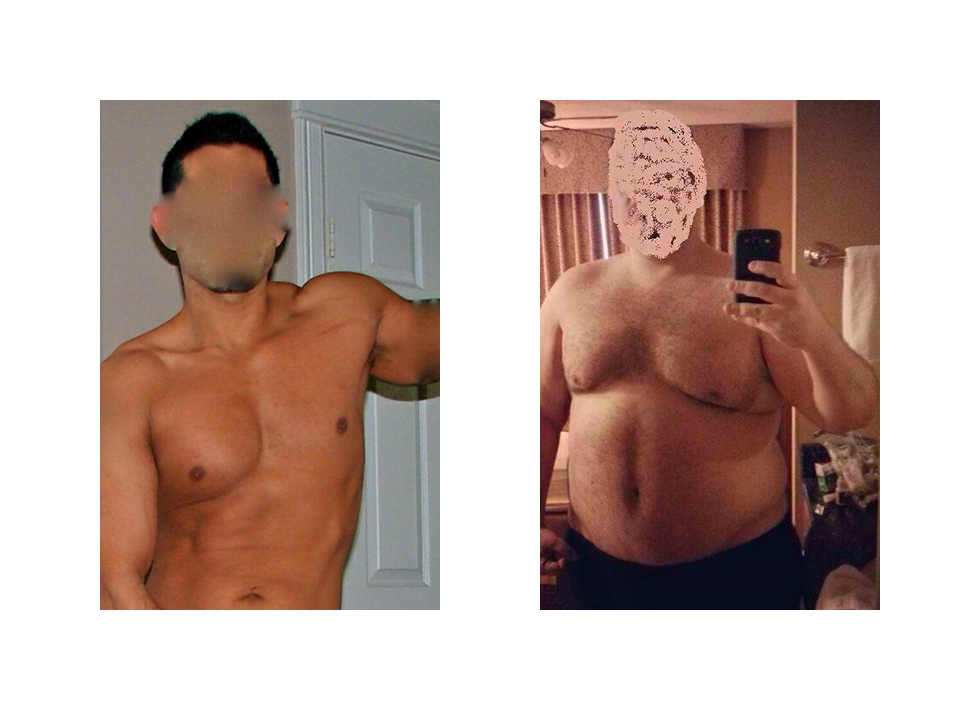

We then compared every image to every other image to measure how close each pair was. We took all pairs of images within 1% of each other in a normalized feature space and produced diptychs from them. Some of the pairings are quite pronounced: they reveal how similarly all kinds of men photograph their bodies. Certain archetypal poses emerge, especially in photos that show the phone taking the picture.
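The pair selection can be sketched in a few lines of Python. This is one possible reading of the threshold, assuming that "normalized" means dividing each Euclidean distance by the largest pairwise distance in the set; the function name `close_pairs` and the toy feature vectors are hypothetical.

```python
from itertools import combinations
from math import dist

# Sketch of all-pairs comparison with a relative distance threshold.
# Assumption: distances are normalized by the largest pairwise distance.

def close_pairs(features, threshold=0.01):
    """Return (i, j) index pairs whose normalized distance is <= threshold."""
    pairs = [
        (dist(features[i], features[j]), i, j)
        for i, j in combinations(range(len(features)), 2)
    ]
    if not pairs:
        return []
    max_distance = max(d for d, _, _ in pairs)
    if max_distance == 0:
        # Every vector is identical, so every pair qualifies.
        return [(i, j) for _, i, j in pairs]
    return [(i, j) for d, i, j in pairs if d / max_distance <= threshold]


# Toy 2D feature vectors; only the first two are nearly identical.
vectors = [(0.0, 0.0), (0.005, 0.0), (1.0, 0.0), (1.0, 1.0)]
diptych_candidates = close_pairs(vectors)
```

Comparing every pair is quadratic in the number of images, so 7,000 images means roughly 24.5 million comparisons, which is still manageable for a one-off analysis.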

Additionally, t-SNE finds some wonderful diptychs in which most, but not all, elements are similar. The “accidental” arrangement of these pairs is striking, but a human still has to select from the thousands of generated diptychs.

Our exploration of the data will continue with a more in-depth analysis of the diptychs and processing of the orientation of the torsos using TensorFlow, a machine learning library.

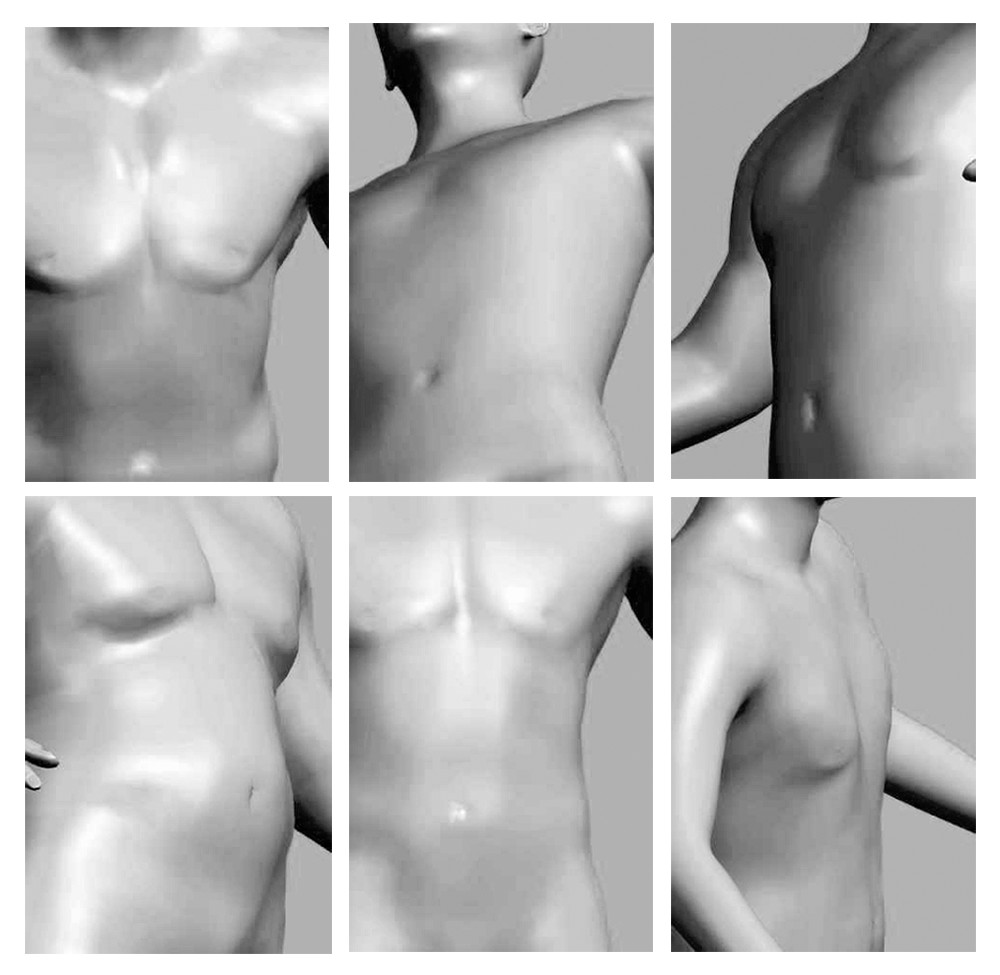

In order to understand which direction each torso is facing, thousands of artificial torsos must be generated with known orientations. Using a javabot made by Luca Damasco, we took 28,000 screenshots of a parametric male model of varying muscularities, weights, and proportions within a free program called MakeHuman.

Using these generated torsos, we can teach a neural net to classify a supplied torso as one of 13 distinct orientations. So far, the model classifies only 36% of the real torsos correctly. We’re continuing to iterate on this, exploring other models such as AlexNet.
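For context on that number, here is a small Python sketch of how such an accuracy figure is computed and how it compares to random guessing. It assumes the 13 orientations are equally likely, which may not hold for the real photos, and the label lists are made-up placeholders.

```python
# Sketch of top-1 accuracy versus a chance baseline.
# Assumption: the 13 orientation classes are balanced.

NUM_ORIENTATIONS = 13


def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


# Random guessing among 13 balanced classes is right about 7.7% of the time.
chance = 1 / NUM_ORIENTATIONS

# Hypothetical orientation labels (0-12) for four torsos.
example_accuracy = accuracy([0, 1, 2, 3], [0, 1, 5, 6])
```

Under that assumption, 36% is several times better than chance, so the model is picking up some real signal from the synthetic torsos even though it is far from reliable.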

Code here for processing torsos.