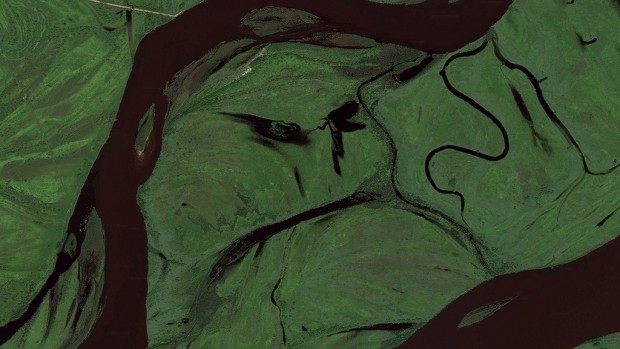

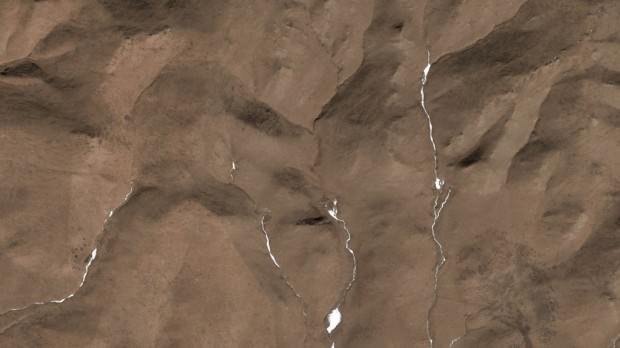

Google Faces is a project by the studio Onformative that aims to identify faces in Google Earth using a face-tracking algorithm implemented in openFrameworks.

What fascinates me about this project is the completely novel use of an existing data set to create incredibly moving portraits, portraits that have existed for many years but have never been seen until now. Revealing faces in an object as obvious and as massive as the Earth reminds me of the childhood stories and cartoons that portrayed ‘Mother Nature’ as alive and anthropomorphic, in much the same way we speak of the man in the moon. This research invites us to give character to our one and only rock, pushing us to see it, however briefly, as being as alive as we consider ourselves, but on a far more profound scale.

I’m curious to know whether the team that made this rotated the Earth to different angles while looking for faces, since the Earth (notwithstanding Eurocentric topologies) has no proper “up” or “down.”

What’s even more profound about this project is that they kept the algorithm running for months, looking for faces at ever smaller scales. Each step of zooming in multiplies the number of views to scan, so search time grows exponentially with zoom depth. How long would the search have to run before it became detailed enough to identify our own faces?
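
To put rough numbers on that growth, here is a small sketch. It assumes the search walks a web-map-style tiling in which every zoom level splits each tile into four, and that each tile takes one second to scan. Both the tiling scheme and the per-tile cost are my own assumptions for illustration, not details I know about how Onformative ran their search, and the function names are hypothetical.

```python
def tiles_at_zoom(zoom: int) -> int:
    """Tiles covering the globe at one zoom level, assuming each tile splits into 4."""
    return 4 ** zoom


def cumulative_search_seconds(max_zoom: int, seconds_per_tile: float = 1.0) -> float:
    """Total scan time for every level from 0 through max_zoom (assumed fixed cost per tile)."""
    total_tiles = sum(tiles_at_zoom(z) for z in range(max_zoom + 1))
    return total_tiles * seconds_per_tile


if __name__ == "__main__":
    seconds_per_day = 60 * 60 * 24
    for zoom in (5, 10, 15, 20):
        days = cumulative_search_seconds(zoom) / seconds_per_day
        print(f"zoom {zoom:2d}: {tiles_at_zoom(zoom):,} tiles, about {days:,.1f} days in total")
```

Under these assumptions every extra zoom level costs about four times as much as the one before it, so the deepest level always dominates the total.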

———————————————————————————————————————————

I would be curious to start exploring the machine learning addons, especially ofxLearn by Gene Kogan. I’m interested in the relationship between human and computer, and in pushing the limits of computational modeling of biological systems, especially the mind.