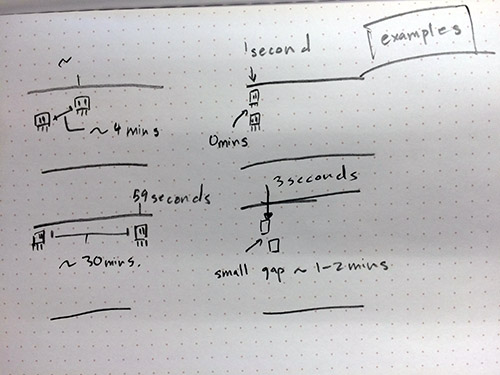

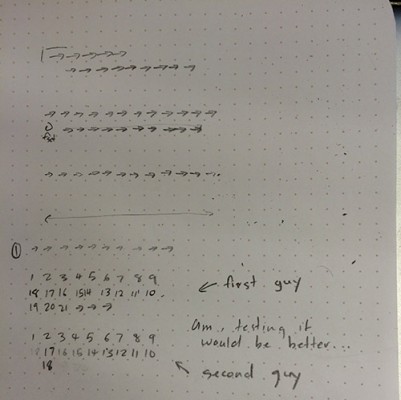

They start in sync walking right (->) and stay in sync walking back (<-), then the bottom guy turns around first.

Hi, this is my poem / timer.

Each row is 30 steps, and takes 30 seconds.

With the return trip included, one cycle takes 1 minute. The second character breaks that pattern: at the 59th step of each cycle, he/she turns around instead of taking the 60th step. This opens a small gap that represents a minute. Each round, the gap between the characters grows by one step as more minutes are represented. The pattern eventually cycles through and returns to its default state. There can be up to 60 gaps between them, meaning that this piece can time up to about 1 hour.
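The counting behind this can be tried out without the watch. Below is a minimal C sketch, assuming the first character turns every 30 steps and the second turns after 30 steps out and 29 steps back. The `Walker` struct and both function names are made up for illustration and are not part of the watchface code.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical helper, not part of the watchface: one walker's step counter. */
typedef struct {
    int count;        /* steps taken on the current leg */
    bool going_right; /* true on the outward leg */
    int out_steps;    /* steps before turning on the outward leg */
    int back_steps;   /* steps before turning on the return leg */
    int round_trips;  /* completed out-and-back cycles */
} Walker;

/* Advance one tick; returns true when a round trip has just finished. */
static bool walker_tick(Walker *w) {
    int limit = w->going_right ? w->out_steps : w->back_steps;
    w->count++;
    if (w->count < limit) {
        return false;
    }
    w->count = 0;
    w->going_right = !w->going_right;
    if (w->going_right) {
        w->round_trips++;
        return true;
    }
    return false;
}

/* Ticks by which the second walker finishes its n-th round trip
 * ahead of the first walker. */
int lead_after_round_trips(int n) {
    assert(n >= 1);
    Walker first = {0, true, 30, 30, 0};  /* 30 out, 30 back */
    Walker second = {0, true, 30, 29, 0}; /* 30 out, 29 back */
    int first_done = -1;
    int second_done = -1;
    for (int tick = 1; first_done < 0 || second_done < 0; tick++) {
        if (walker_tick(&first) && first.round_trips == n) {
            first_done = tick;
        }
        if (walker_tick(&second) && second.round_trips == n) {
            second_done = tick;
        }
    }
    return first_done - second_done;
}
```

For example, `lead_after_round_trips(5)` returns 5: after five rounds the second character is turning five steps ahead of the first. Under the same assumptions, both counters are back in their shared starting state after 59 × 60 = 3540 ticks.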

It’s also a little poem about two friends separating and getting back together :D

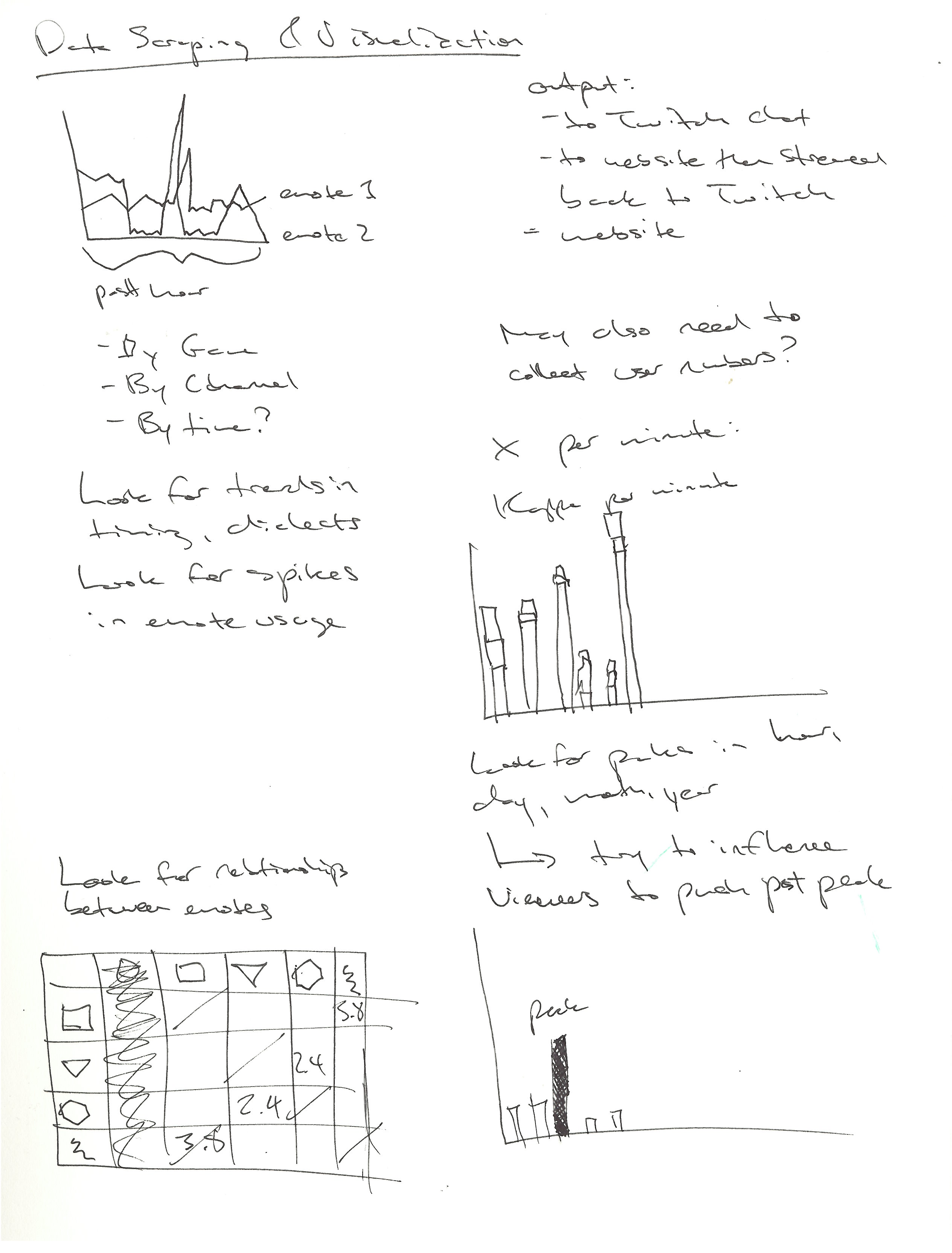

Here is my sketch, and some explanation.

Here is my code!

#include <pebble.h>

#include <stdlib.h>

static Window *s_main_window;

int s_second = 0;

int s_second2 = 0;

bool direction1 = true;

bool direction2 = true;

bool isam = true;

static BitmapLayer *s_bg;

static GBitmap *s_bg_bitmap;

static BitmapLayer *s_sprite_minuite1;

bool s_sprite_minuite1_frame = true;

static BitmapLayer *s_sprite_minuite2;

bool s_sprite_minuite2_frame = true;

static GBitmap *s_sprite1f1_bitmap;

static GBitmap *s_sprite1f2_bitmap;

static GBitmap *s_sprite2f1_bitmap;

static GBitmap *s_sprite2f2_bitmap;

static GBitmap *s_spritef1_bitmap;

static GBitmap *s_spritef2_bitmap;

static GBitmap *s_spritef1L_bitmap;

static GBitmap *s_spritef2L_bitmap;

typedef struct vector2 vector2;

struct vector2

{

float x;

float y;

};

vector2 sprite1Position = {10, 10};

vector2 sprite2Position = {10, 23};

bool animationFrame = false;

static PropertyAnimation *s_property_animation;

static PropertyAnimation *s_property_animation2;

static void destroy_property_animation(PropertyAnimation **prop_animation) {

if (*prop_animation == NULL) {

return;

}

if (animation_is_scheduled((Animation*) *prop_animation)) {

animation_unschedule((Animation*) *prop_animation);

}

property_animation_destroy(*prop_animation);

*prop_animation = NULL;

}

static void trigger_custom_animation() {

destroy_property_animation(&s_property_animation);

//*s_property_animation = NULL;

destroy_property_animation(&s_property_animation2);

//*s_property_animation2 = NULL;

Layer *s_layer = bitmap_layer_get_layer(s_sprite_minuite1);

Layer *s_layer2 = bitmap_layer_get_layer(s_sprite_minuite2);

// Set start and end

GRect from_frame = layer_get_frame(s_layer);

GRect to_frame = GRect(sprite1Position.x, sprite1Position.y, 10, 11);

GRect from_frame2 = layer_get_frame(s_layer2);

GRect to_frame2 = GRect(sprite2Position.x, sprite2Position.y, 10, 11);

// move first guy

if (direction1 == true)

{

if (sprite1Position.x < 130)

{

sprite1Position.x += 4.0;

}

}else

{

if (sprite1Position.x > 10)

{

sprite1Position.x -= 4.0;

}

}

// move second guy

if (direction2 == true)

{

if (sprite2Position.x < 130)

{

sprite2Position.x += 4.0;

}

}else

{

if (sprite2Position.x > 10)

{

sprite2Position.x -= 4.0;

}

}

// Create the animation

s_property_animation = property_animation_create_layer_frame(s_layer, &from_frame, &to_frame);

s_property_animation2 = property_animation_create_layer_frame(s_layer2, &from_frame2, &to_frame2);

// Alternate the first sprite's walk frame

if (s_sprite_minuite1_frame)

{

bitmap_layer_set_bitmap (s_sprite_minuite1, s_sprite1f2_bitmap);

s_sprite_minuite1_frame = !s_sprite_minuite1_frame;

}

else

{

bitmap_layer_set_bitmap (s_sprite_minuite1, s_sprite1f1_bitmap);

s_sprite_minuite1_frame = !s_sprite_minuite1_frame;

}

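// Alternate the second sprite's walk frame, out of phase with the first

// (this reads the s_sprite1f* bitmaps; the s_sprite2f* pointers are never read)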

if (s_sprite_minuite2_frame)

{

bitmap_layer_set_bitmap (s_sprite_minuite2, s_sprite1f1_bitmap);

s_sprite_minuite2_frame = !s_sprite_minuite2_frame;

}

else

{

bitmap_layer_set_bitmap (s_sprite_minuite2, s_sprite1f2_bitmap);

s_sprite_minuite2_frame = !s_sprite_minuite2_frame;

}

// Schedule to occur ASAP with default settings

animation_schedule((Animation*) s_property_animation);

animation_schedule((Animation*) s_property_animation2);

}

static void main_window_load(Window *window)

{

// Sprite 1 textures

s_spritef1_bitmap = gbitmap_create_with_resource(RESOURCE_ID_f1);

s_spritef2_bitmap = gbitmap_create_with_resource(RESOURCE_ID_f2);

s_spritef1L_bitmap = gbitmap_create_with_resource(RESOURCE_ID_f1L);

s_spritef2L_bitmap = gbitmap_create_with_resource(RESOURCE_ID_f2L);

// Sprite 1 Set initial

s_sprite1f1_bitmap = s_spritef1_bitmap;

s_sprite1f2_bitmap = s_spritef2_bitmap;

// Sprite 2

s_sprite2f1_bitmap = s_spritef1L_bitmap;

s_sprite2f2_bitmap = s_spritef2L_bitmap;

s_bg_bitmap = gbitmap_create_with_resource(RESOURCE_ID_bg);

s_bg = bitmap_layer_create(GRect(10, 10, 120, 120));

bitmap_layer_set_bitmap(s_bg, s_bg_bitmap);

layer_add_child(window_get_root_layer(window), bitmap_layer_get_layer(s_bg));

// Sprite Minuite 1

s_sprite_minuite1 = bitmap_layer_create(GRect(0, 0, 10, 11));

bitmap_layer_set_bitmap(s_sprite_minuite1, s_spritef1_bitmap);

layer_add_child(window_get_root_layer(window), bitmap_layer_get_layer(s_sprite_minuite1));

// Sprite Minuite 2

s_sprite_minuite2 = bitmap_layer_create(GRect(0, 0, 10, 11));

bitmap_layer_set_bitmap(s_sprite_minuite2, s_spritef1_bitmap);

layer_add_child(window_get_root_layer(window), bitmap_layer_get_layer(s_sprite_minuite2));

}

static void main_window_unload(Window *window) {

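// Stop any running animations before their layers go away

destroy_property_animation(&s_property_animation);

destroy_property_animation(&s_property_animation2);

// Destroy the layers first, then the bitmaps they display

bitmap_layer_destroy(s_sprite_minuite1);

bitmap_layer_destroy(s_sprite_minuite2);

bitmap_layer_destroy(s_bg);

gbitmap_destroy(s_spritef1_bitmap);

gbitmap_destroy(s_spritef2_bitmap);

gbitmap_destroy(s_spritef1L_bitmap);

gbitmap_destroy(s_spritef2L_bitmap);

gbitmap_destroy(s_bg_bitmap);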

}

static void tick_handler(struct tm *tick_time, TimeUnits units_changed)

{

trigger_custom_animation();

s_second = s_second + 1;

// First sprite takes 30 steps out and 30 steps back

if (s_second >= 30)

{

if (direction1)

{

s_sprite1f1_bitmap = s_spritef1L_bitmap;

s_sprite1f2_bitmap = s_spritef2L_bitmap;

}

else

{

s_sprite1f1_bitmap = s_spritef1_bitmap;

s_sprite1f2_bitmap = s_spritef2_bitmap;

}

direction1 = !direction1;

s_second = 0;

}

// Second sprite takes 30 steps out but only 29 steps back

s_second2 = s_second2 + 1;

if (s_second2 >= 29)

{

if (direction2)

{

if (s_second2 >= 30)

{

s_sprite2f1_bitmap = s_spritef1_bitmap;

s_sprite2f2_bitmap = s_spritef2_bitmap;

direction2 = !direction2;

s_second2 = 0;

}

}

else

{

s_sprite2f1_bitmap = s_spritef1L_bitmap;

s_sprite2f2_bitmap = s_spritef2L_bitmap;

direction2 = !direction2;

s_second2 = 0;

}

}

}

static void init() {

// Create main Window element and assign to pointer

s_main_window = window_create();

// Set handlers to manage the elements inside the Window

window_set_window_handlers(s_main_window, (WindowHandlers) {

.load = main_window_load,

.unload = main_window_unload

});

// Show the Window on the watch, with animated=true

window_stack_push(s_main_window, true);

// Register with TickTimerService

tick_timer_service_subscribe(SECOND_UNIT, tick_handler);

// Animate both sprites from (0, 0) to their starting positions

trigger_custom_animation();

}

static void deinit() {

// Stop the tick callbacks, then destroy Window

tick_timer_service_unsubscribe();

window_destroy(s_main_window);

}

int main(void) {

init();

app_event_loop();

deinit();

}
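The movement branches in `trigger_custom_animation` can also be pulled out into a small function that runs on a desktop, which makes the step arithmetic easy to check. This is only a sketch: the names `next_x`, `walk`, and the three constants are hypothetical, and the values 10, 130, and 4 are copied from the code above.

```c
#include <stdbool.h>

/* Hypothetical constants mirroring the literals used in the watchface. */
enum { TRACK_LEFT = 10, TRACK_RIGHT = 130, STEP_PX = 4 };

/* Next x position for one sprite: move one step in the current
 * direction, but stay put once the end of the track is reached. */
int next_x(int x, bool moving_right) {
    if (moving_right) {
        return (x < TRACK_RIGHT) ? x + STEP_PX : x;
    }
    return (x > TRACK_LEFT) ? x - STEP_PX : x;
}

/* Apply `steps` moves in one direction and return the final x. */
int walk(int x, bool moving_right, int steps) {
    for (int i = 0; i < steps; i++) {
        x = next_x(x, moving_right);
    }
    return x;
}
```

Thirty steps of 4 pixels cover the 120 pixel row, so `walk(10, true, 30)` ends at 130, while 29 steps back from there, `walk(130, false, 29)`, stops at 14, one step short of the left edge.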