I found a neat pop-up book entitled ABC3D, created by Marion Bataille.

This project is a pop-up book with a lenticular cover that conveys each letter of the alphabet through a dynamic, interactive reading experience. The letters are built as pop-up shapes, and the reader has to move the pages in many different ways to view each one properly.

When I started exploring digital art (broadly construed), I encountered many fascinating structures that I had never seen in the real world. I saw fields of perfect sinusoids, utilitarian user interfaces, and other peculiar visual forms that many people had independently discovered and visualized. I believed these visual artifacts to be products of the virtual world, completely separate from the physical world that the average person eats, sleeps, and breathes in.

As time has passed, I have noticed that many visual effects that seem unique to the digital world can also be encountered naturally in the real world. For instance, one day I was sitting at the dinner table at my parents' house, looked out a screened window, and noticed that the screen actually pixelated my view of the outside world! I currently believe that the visual effects of digital culture should also appear in designed culture, i.e. buildings, chairs, sidewalks, and everything else that humans have made. It makes sense that there might be a kind of equivalence between the two, because both fields of work were created by humans.

This project tickles my fancy because it demonstrates the geometric decomposition of letterforms, interactivity, movement, and experimental structure, qualities I had previously only seen in electronic art and typography. The project also uses connective pieces, reflectivity, and translucence in ways that appeal to the pattern and form lover in me.

As for a critique, I will say that the color scheme could have been more varied, and it seemed as if some of the letters repeated themselves. I think the author nailed the creative task of finding relationships between consecutive letters and did quite well at working within the constraint of alphabetical order. The history of pop-up books is well documented, since it is a widely known medium. The designer's website is http://www.marionbataille.com/, and she has made several artistic books based on forms.

Second Project

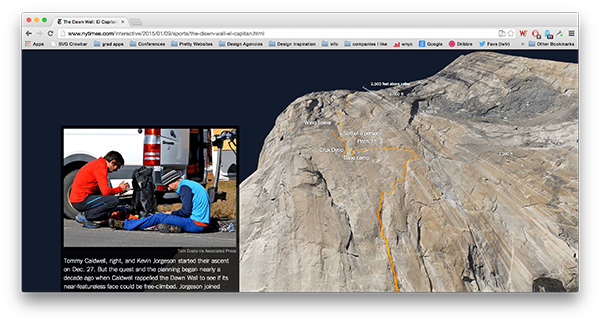

http://www.csismn.com/SYN-Phon

This project, based on the art and composition of Candaş Şişman, presents a long graphical display that conveys its subject matter over time. As the imagery scrolls, performers produce tones to match it. I like this project because it tells a story through structured imagery while building a relationship between musical sound and image. The documentation makes it a bit unclear how improvised the performance was, and I feel the music could have been a bit more consonant. It would be interesting to make the reverse project, in which musicians perform and a computer generates structured platforms that represent the performance. A 2D platform game could then be built on top of that output as a unique procedural generation technique. One potential influence is the practice of capturing local sounds as a representation of culture: I have seen several pieces, including one at the Frame Gallery, that used locally captured sounds to make the artwork more intimate for its intended audience.