Fragmented Memory

In this project, the pattern for a tapestry is designed from a translation of a computer’s physical memory. Groups of 6 bits in memory are translated into RGB color values for the corresponding position in the cloth. This project is inspiring to me because it takes a physical object (a physical memory chip) and uses the data encoded in it to create a new, completely different physical object. The data serves as a map or bridge between two different real-world objects, which I think is a cool concept. Additionally, the resulting pattern in the tapestries has an old 8-bit game feel to it, which is an aesthetic I like. I think this project could be better if it included a side-by-side comparison showing which parts of memory correspond to which parts of the cloth. For someone familiar with the workings of computers, it could be interesting to see exactly how particular computer commands correlate to different patterns in the cloth, and this would provide more real-world context for the patterns that emerge. It appears that the creator, Phillip Stearns, makes a lot of “glitch textiles” artworks and is interested in exploring the computer glitch aesthetic in many of his works.
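To get a feel for how 6 bits can become a color, here is a small Python sketch. It is only my guess at one possible mapping, not the artist's actual method: I am assuming each 6-bit group is split into 2 bits each for red, green, and blue, that bits are read most significant first, and that each 2-bit value (0 to 3) is scaled up to the usual 0 to 255 range. The function names are made up for this illustration.

```python
from typing import Iterator, List, Tuple

RGB = Tuple[int, int, int]


def bytes_to_bits(data: bytes) -> Iterator[int]:
    """Yield the bits of each byte, most significant bit first (an assumption)."""
    for byte in data:
        for shift in range(7, -1, -1):
            yield (byte >> shift) & 1


def bits_to_pixels(data: bytes) -> List[RGB]:
    """Turn raw bytes into RGB pixels, one pixel per group of 6 bits.

    Assumed layout (hypothetical): 2 bits red, 2 bits green, 2 bits blue.
    Leftover bits that do not fill a whole 6-bit group are dropped.
    """
    bits = list(bytes_to_bits(data))
    usable = len(bits) - len(bits) % 6
    pixels: List[RGB] = []
    for i in range(0, usable, 6):
        red = bits[i] * 2 + bits[i + 1]
        green = bits[i + 2] * 2 + bits[i + 3]
        blue = bits[i + 4] * 2 + bits[i + 5]
        # Each channel is 0..3; multiplying by 85 spreads it over 0..255.
        pixels.append((red * 85, green * 85, blue * 85))
    return pixels


# Three bytes give 24 bits, which is exactly four 6-bit pixels.
sample = bytes([0b11000011, 0b11110000, 0b00111111])
print(bits_to_pixels(sample))
# [(255, 0, 0), (255, 255, 255), (0, 0, 0), (255, 255, 255)]
```

With only 2 bits per channel there are just 64 possible colors, which might be part of why the tapestries have that limited-palette, retro look.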

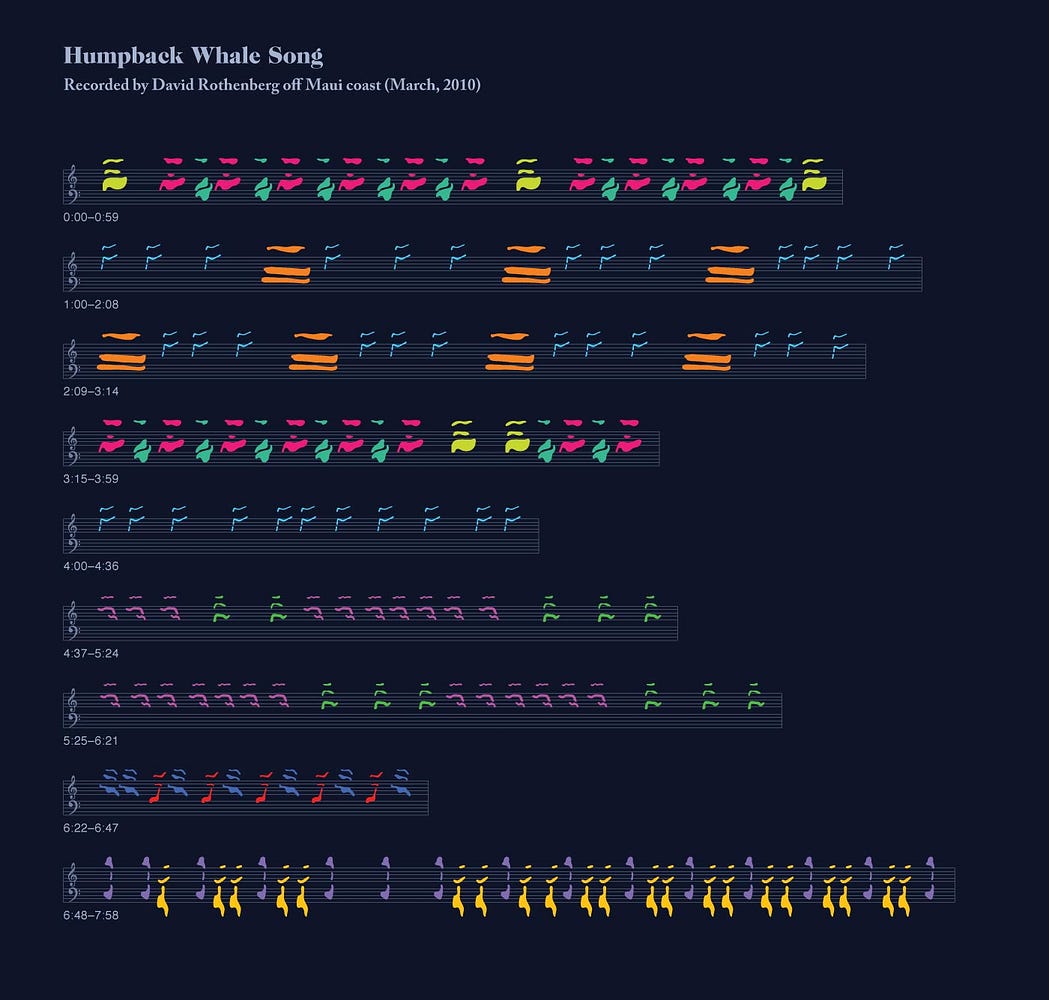

Whale Sound Visualization

This project discusses how humpback whales create organized songs using the sounds they make. The whales repeat these song patterns over and over, so the songs are not just a random collection of noises. The various whale noises used in the songs were given stylized visualizations based on what the sound waves of each noise look like. These stylized notes were then arranged on a music sheet. What I like about this visualization is that the whale notes look very interesting and pleasing to the eye. Additionally, I find it very interesting to explore alternate ways of displaying music notes for noises beyond our current understanding of music, and to think about how to visually represent the language of animals. After all, the alphabets that humans use are just visualizations of the sounds that humans make. So the attempt to codify animal sounds in the same way that human sounds are codified is really intriguing to me. I think the project could be improved if the songs were presented in a way that gave them more context. For example, it would be cool to have a map interface where the user could see where in the world each of the whale songs was recorded. It would also be cool to compare different whale songs side by side and see whether there are any overarching trends in the songs depending on the geographic region that the whale composer inhabits.