BACKGROUND

Anyone who has worked in a large, bureaucratic organization can relate to the absurdity of Dilbert’s high-tech workplace experiences, which is why Dilbert is perhaps the most photocopied, pinned-up, and e-mailed comic strip in the world. Created by Scott Adams, Dilbert has been published for over 26 years and now appears in 2,000 newspapers worldwide.

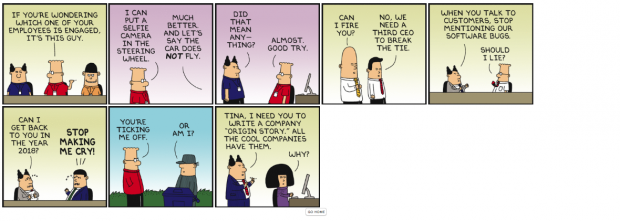

For my final project, I’ve been working on creating the ability to create new mashups of different panels from the Dilbert comic strip based on the conversational content of each panel.

All of Dilbert’s individual comic strips can be viewed on Dilbert.com, and, for the most part, each strip’s dialogue can be viewed in the metadata of the strip’s web page. The problem is that the transcribed dialogue on the strip’s web page isn’t separated by their individual panels.

PROJECT DETAILS

I developed some Python code to help get around this. First, I used Beautiful Soup, a python package for parsing HTML documents, to scrape and download all of the comic strips, nearly 10,000 of them, and the strip’s transcribed dialogue. I then used the Python Image Library to take each strip and crop them into individual panels.

To get the dialogue content for each individual panel, I initially used PyTesseract, an optical character recognition tool for Python, to decipher the panel’s text content. This proved to be problematic since the text used in Dilbert is styled in a hand-written typeface, leading to recognition errors. Dilbert doesn’t use enclosed speech bubbles, so there are many cases where the speech for two different characters are spaced so closely together that the OCR tool erroneously recognizes the dialogue as a single block of text. To get around this, I used OpenFrameworks and OpenCV with a lot of help from Golan and his clever ideas to perform a series of image manipulations to isolate the character’s lines in each panel. By forming bounding boxes around each character’s dialogue, I used OCR and compared the recognized text to the transcribed dialogue, which served as the ground truth. This allowed me to assign each panel its associated dialogue.

This groundwork allowed me to create new mashups using individual panels. By using the Natural Language Toolkit and CMU’s pronouncing dictionary, for example, I created a web page that allows users to create strips that all rhyme with each other. I also used the CMU’s pronouncing dictionary to create strips that are haikus (strips consisting of 5-syllable-, 7-syllable-, 5-syllable-panels)

This was a challenging but incredibly fun project to work on. I learned how to program in Python and learned to use data scraping methods and tools like computer vision and natural language processing.

IMPROVEMENTS

The image manipulation operations helped a lot to recognize the dialogue, but there are still many strips that have missing or incorrectly-ordered dialogue or words. Future work can enlist workers from Mechanical Turk to read each individual panel and improve the accuracy of the transcribed dialogue that served as the ground truth for my OCR operations.

For counting syllables, there are many words in Dilbert’s dialogue (proper nouns, some corporate jargon) that are not in the CMU pronunciation dictionary. These words threw off the syllable count for generating haikus. I would like to add code to handle approximate pronunciation for these cases.

There’s much more that can be done with the individual panels and the resulting associated text. Future work can include word clouds of common phrases or words, or infographics about which characters speak to whom.

I registered a domain name to transfer my work, including 2 GB of individual comic strip panels, online, but setup issues with the server didn’t allow me to deploy this project online by today’s date. I’m still working to debug it so I can have it viewable online within the next week.

Code is available at: https://github.com/ronkim/capstone

All individual comic strips and separated panels are available in a single 1.6 GB file at https://www.dropbox.com/s/gkc65hver6dqgf0/images.zip?dl=0

UPDATE: The Dilbert mashup page is viewable at dilbertmashups.com