Overview

This project is an open-source, affordable hardware platform for advanced cinematic camera movement. Motion-control rigs of this kind are usually available only to well-funded production companies; this device aims to give anyone with a laptop the same range of dynamic camera moves.

What’s Out There

A large number of commercial products and DIY tutorials cover this subject. However, there is a wide gap in capability between DIY/open-source builds and commercial ones. Moreover, commercial products do not offer real-time subject tracking until the budgetary stratosphere is reached. Affordable real-time tracking therefore remains unexplored as a way to capture high-quality, dynamic footage.

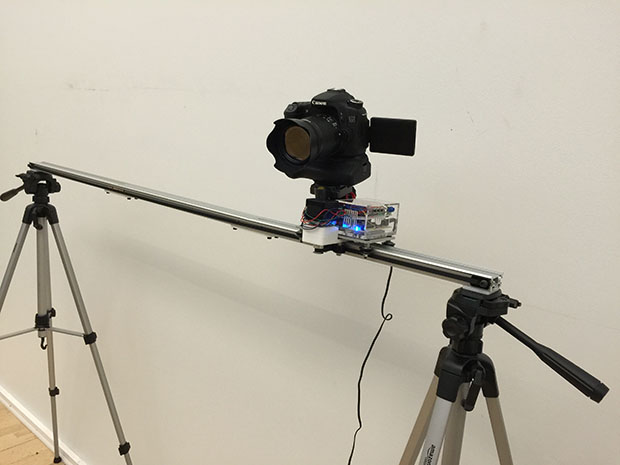

Hardware

+What is needed?

-Stepper Motors (precise, reliable control // smoothness)

-Aluminium rail (light // strong)

-Acrylic parts (easy to fabricate // inexpensive)

-Arduino + Stepper Motor Drivers

-Kinect
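
Stepper motors give smooth motion only if they are not started and stopped abruptly; driving one at full speed from rest can stall it or jerk the carriage. A common remedy is a trapezoidal velocity profile: accelerate at a constant rate, cruise, then decelerate. The minimal sketch below computes the delay before each step under that profile. It is written in Python for readability (on the rig the same arithmetic would run on the Arduino or be precomputed on the laptop), and the function name and example numbers are hypothetical, not taken from the project.

```python
import math


def step_intervals(total_steps, max_speed, accel):
    """Delay in seconds before each step of a trapezoidal (accelerate, cruise,
    decelerate) move, so the motor never jumps straight to full speed.

    total_steps: steps in the move (>= 1)
    max_speed:   cruise speed, steps per second
    accel:       acceleration and deceleration, steps per second squared
    """
    def arrival_time(n):
        # Time to cover n steps from rest at constant acceleration: n = a * t^2 / 2
        return math.sqrt(2 * n / accel)

    # Steps needed to reach max_speed from rest: v^2 = 2 * a * n
    full_ramp = math.ceil(max_speed ** 2 / (2 * accel))
    # A short move cannot reach max_speed, so it ramps up over half and down over half.
    ramp = min(full_ramp, total_steps // 2)

    # Never step faster than max_speed allows.
    min_interval = 1.0 / max_speed
    up = [max(arrival_time(n) - arrival_time(n - 1), min_interval)
          for n in range(1, ramp + 1)]

    if ramp == full_ramp:
        hold = min_interval      # cruise at max_speed
    elif up:
        hold = up[-1]            # odd middle step of a short move: keep peak speed
    else:
        hold = arrival_time(1)   # single-step move
    cruise = [hold] * (total_steps - 2 * ramp)

    # Deceleration mirrors the acceleration ramp.
    return up + cruise + up[::-1]
```

For example, `step_intervals(1000, 800, 1600)` spends 200 steps ramping up to 800 steps/s, cruises for 600 steps, and ramps down over the last 200. A trapezoidal profile is the simplest choice; S-curve profiles limit jerk further at the cost of more arithmetic.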

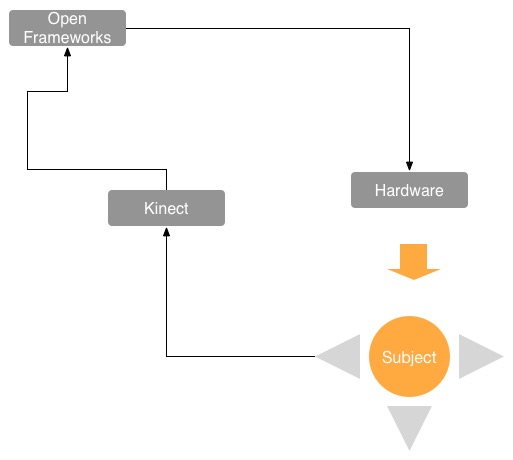

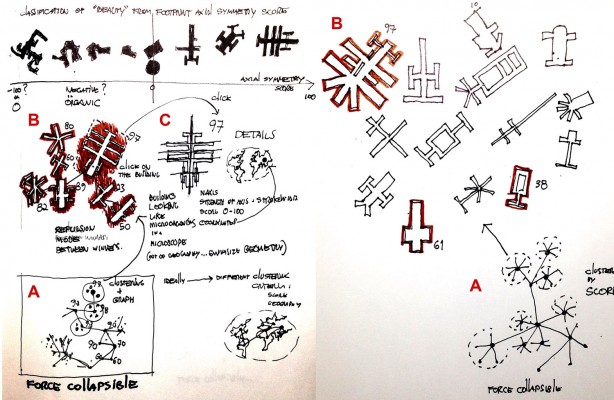

Code Schematic
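
Assuming the Kinect is processed on the laptop (it connects to a computer over USB, and its depth stream is far beyond what an Arduino can handle) while the Arduino only drives the steppers, the software splits into two programs joined by a serial link. One hypothetical way to pass motor targets between them is a single ASCII line per update; the `P`/`T`/`S` (pan, tilt, slide) format and both function names below are illustrative assumptions, not an existing protocol.

```python
def encode_command(pan_steps, tilt_steps, slide_steps):
    """Pack relative step targets into one ASCII line, e.g. b'P120 T-40 S0\\n'.

    Hypothetical format: pan (P), tilt (T) and slide rail (S) step counts.
    """
    return f"P{pan_steps} T{tilt_steps} S{slide_steps}\n".encode("ascii")


def decode_command(line):
    """Inverse of encode_command; the Arduino firmware would parse the same fields."""
    # split() also strips the trailing newline.
    fields = line.decode("ascii").split()
    # Map each field's letter to its signed integer value.
    values = {field[0]: int(field[1:]) for field in fields}
    return values["P"], values["T"], values["S"]
```

On the laptop, the encoded bytes could be written with pyserial (`serial.Serial(port, 115200).write(...)`, where the port name and baud rate are placeholders), and the Arduino sketch would read up to the newline and parse the same fields. A text format is slower than a packed binary one but is easy to inspect in the Arduino serial monitor while debugging.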

What’s Next

+Develop Pan-Tilt Mechanism

+Devise Tracking Algorithms

+Machine Connectors for a Sturdy Result

+Define Choreography
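
The tracking algorithms are still to be devised. As one possible starting point, a simple proportional controller measures how far the subject sits from the centre of the Kinect image, converts that offset to an angle, and moves the pan-tilt head by a fraction of it each frame, with a small dead band so the camera does not twitch. This is a minimal sketch, not the project's method; every constant (Kinect v1 image size and nominal field of view, 1.8° steppers with 16x microstepping and no gearing, gain, dead band) is an assumption.

```python
# Assumed hardware values (not from the project): Kinect v1 image size and
# nominal field of view, 1.8-degree steppers with 16x microstepping, direct drive.
FRAME_W, FRAME_H = 640, 480
HFOV_DEG, VFOV_DEG = 57.0, 43.0
STEPS_PER_DEGREE = 200 * 16 / 360


def tracking_correction(x_px, y_px, gain=0.5, deadband_deg=1.0):
    """Pan/tilt step corrections that move the camera toward a tracked subject.

    (x_px, y_px): subject position in the Kinect image, e.g. a skeleton joint.
    Positive pan = turn right, positive tilt = tilt up.
    """
    # Angular offset from the image centre (linear approximation; the exact
    # pixel-to-angle mapping uses atan).
    pan_err = (x_px - FRAME_W / 2) / FRAME_W * HFOV_DEG
    tilt_err = (FRAME_H / 2 - y_px) / FRAME_H * VFOV_DEG  # image y grows downward

    def to_steps(err_deg):
        # Ignore small offsets so sensor jitter does not make the camera twitch.
        if abs(err_deg) < deadband_deg:
            return 0
        # Proportional control: correct only a fraction (gain) of the error per frame.
        return round(gain * err_deg * STEPS_PER_DEGREE)

    return to_steps(pan_err), to_steps(tilt_err)
```

Called once per Kinect frame (30 fps for the v1 sensor), a gain below 1 spreads each correction over several frames, which smooths the move and helps avoid overshoot. The returned values could then be sent to the Arduino as relative step targets.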

Schedule

March 31st – Completed Pan-Tilt Mechanism [Design and Build]

-Strategy for Choreography

April 14th – Kinect Development Completed

April 28th – Completed, Refined Prototype Ready for Critique