UPDATED Project Idea: A posable crab figure to control a virtual crab simulator.

So, instead of making a universal humanoid action figure controller for first-person and third-person video games, I’m going to make a posable crab figure that controls only one game (which I will create). This is going to be a 4-legged toy with 12 degrees of freedom (3 per leg) that connects to your computer and communicates in real time with a Unity application through an Arduino running StandardFirmata. The idea of the game is that you control a crab and make it move by physically moving the real crab figure’s legs.
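To make the Arduino-to-Unity link concrete, here is a minimal Python sketch of the decoding step on the computer side. It relies on the Firmata analog message format (a command byte `0xE0 | pin` followed by a 7-bit LSB and a 7-bit MSB) and groups the readings into a 4 × 3 table, one row per leg. The pin assignment (leg *i* on analog pins 3*i* to 3*i*+2) and the function names are hypothetical, and a real Unity build would do this in C# or through an existing Firmata client library. Note that an Arduino Uno has only 6 analog inputs, so reading 12 potentiometers this way would need a board with at least 12, such as the Mega 2560 (16 analog inputs).

```python
from typing import Dict, List, Optional

LEGS = 4
JOINTS_PER_LEG = 3
ANALOG_MESSAGE = 0xE0  # Firmata analog I/O message: 0xE0 | pin, for pins 0-15


def decode_analog_messages(stream: bytes) -> Dict[int, int]:
    """Return the latest value reported for each analog pin in `stream`."""
    values: Dict[int, int] = {}
    i = 0
    while i + 2 < len(stream):
        command = stream[i]
        if (command & 0xF0) == ANALOG_MESSAGE:
            pin = command & 0x0F
            lsb, msb = stream[i + 1], stream[i + 2]
            # Two 7-bit data bytes, least significant first.
            values[pin] = (lsb & 0x7F) | ((msb & 0x7F) << 7)
            i += 3
        else:
            # Not the start of an analog message (e.g. a digital message or
            # its data bytes): skip one byte and keep scanning.
            i += 1
    return values


def to_leg_table(values: Dict[int, int]) -> List[List[Optional[int]]]:
    """Group pin readings into rows of [joint0, joint1, joint2], one row per leg.

    Hypothetical wiring: leg i uses analog pins 3*i, 3*i+1 and 3*i+2.
    Joints with no reading yet are None.
    """
    return [
        [values.get(leg * JOINTS_PER_LEG + joint) for joint in range(JOINTS_PER_LEG)]
        for leg in range(LEGS)
    ]


# Pin 4 reports 512 (LSB 0x00, MSB 0x04); a digital message on port 0
# is skipped; pin 0 reports 1023 (LSB 0x7F, MSB 0x07).
stream = bytes([0xE4, 0x00, 0x04, 0x90, 0x01, 0x00, 0xE0, 0x7F, 0x07])
table = to_leg_table(decode_analog_messages(stream))
print(table)  # [[1023, None, None], [None, 512, None], [None, None, None], [None, None, None]]
```

Because every Firmata data byte has its high bit cleared, skipping unknown bytes one at a time cannot mistake a data byte for the start of an analog message.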

---------- (Previous Information Below) ----------

Capstone Project Idea: An action figure that can be used to control first-person shooter video games.

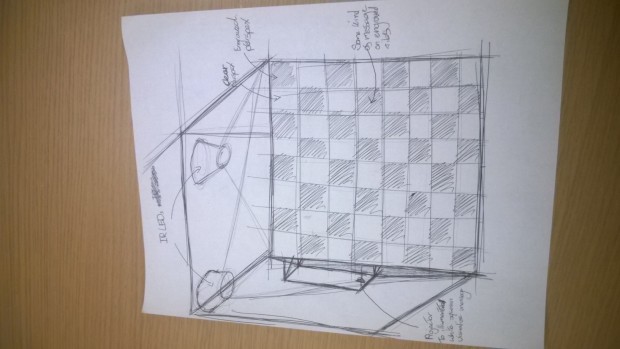

Here is a concept sketch:

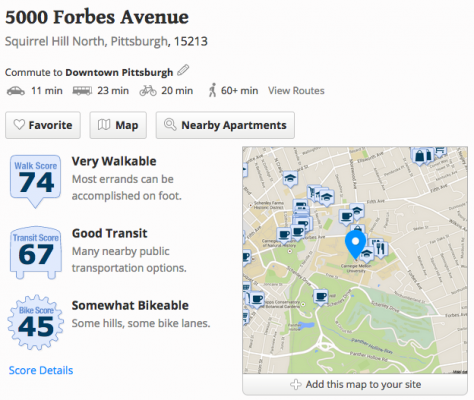

The basic idea for this project is to develop a novel video game controller in the shape of an action figure. Key motions can be mapped using standard 3-pin potentiometers, and additional motions can be mapped using the joystick and buttons placed throughout the figure. The key difference between this project and the projects described below is that mine is designed to work as a video game controller rather than as an electronic mannequin for animation. This means the focus will be on durability and easy access to the inputs. While this will certainly be more difficult to use than a traditional game controller, the action figure shape is hypothesized to bring a completely different kind of immersion to video games: the player is not just pushing arbitrary buttons to control a game character, but is instead directly controlling the character by posing it. The construction plan is to solder the major components and mount them on 3D-printed parts, with everything running on an Arduino that interfaces with Processing to control a video game in real time.
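A 3-pin potentiometer wired as a voltage divider gives a wiper voltage roughly proportional to shaft position, which the Arduino's 10-bit ADC reports as a value from 0 to 1023. The sketch below, a hypothetical Python illustration rather than the project's code, shows two steps a controller like this would likely need: mapping each raw reading to a joint angle using two calibration poses, and smoothing jitter with an exponential moving average. The calibration values, class names, and the `alpha` parameter are assumptions to be tuned on the real figure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class JointCalibration:
    """Hypothetical per-joint calibration taken by posing the figure at two known angles."""
    raw_min: int       # ADC reading (0-1023) with the joint at angle_min
    raw_max: int       # ADC reading at angle_max (may be < raw_min if wired in reverse)
    angle_min: float   # degrees
    angle_max: float   # degrees

    def to_angle(self, raw: int) -> float:
        # Linear interpolation between the two calibration points, clamped so
        # that readings past either end stop cannot overshoot.
        # Requires raw_min != raw_max.
        t = (raw - self.raw_min) / (self.raw_max - self.raw_min)
        t = min(1.0, max(0.0, t))
        return self.angle_min + t * (self.angle_max - self.angle_min)


class Smoother:
    """Exponential moving average to damp potentiometer jitter."""

    def __init__(self, alpha: float = 0.3) -> None:
        self.alpha = alpha  # 0 < alpha <= 1: higher reacts faster but smooths less
        self.value: Optional[float] = None

    def update(self, sample: float) -> float:
        if self.value is None:
            self.value = sample  # the first sample initializes the filter
        else:
            self.value += self.alpha * (sample - self.value)
        return self.value


elbow = JointCalibration(raw_min=200, raw_max=800, angle_min=0.0, angle_max=90.0)
print(elbow.to_angle(500))   # 45.0
print(elbow.to_angle(1023))  # 90.0 (clamped)

smooth = Smoother(alpha=0.5)
print([smooth.update(x) for x in (0.0, 10.0, 10.0)])  # [0.0, 5.0, 7.5]
```

A higher `alpha` makes the figure feel more responsive at the cost of more visible noise, which is the main trade-off to tune for a game controller.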

This is very similar to a couple of other projects I’ve found:

Tangible Augmented Reality Action Figure by Alcyone, 2013

This is basically a posable figure with fiducial markers at its joints. A camera feeds images to a computer, which displays a virtual figure with the same joint orientations as the action figure in real time. Additional interfaces, such as a virtual reality device, allow the user to interact with the digital 3D figure like an actual pet. A big advantage this project has over other augmented reality applications is that here you can actually touch the figure, and the digital representation can respond accordingly.
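As a simplified illustration of how marker positions can become joint orientations, the Python sketch below computes the bend angle at a joint from the image positions of three markers (for example, shoulder, elbow, and wrist). This is an assumption-laden 2D version with a hypothetical function name, not Alcyone's method: a real AR system would estimate each marker's full 3D pose from the camera image.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def joint_angle_deg(a: Point, b: Point, c: Point) -> float:
    """Angle at marker b, in degrees (0-180), between segments b->a and b->c.

    A fully straight limb reads 180; a right-angle bend reads 90.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2(|cross|, dot) stays accurate near 0 and 180 degrees,
    # where acos(dot / (|v1| * |v2|)) loses precision.
    return math.degrees(math.atan2(abs(cross), dot))


# Hypothetical marker centers in pixels: shoulder, elbow, wrist.
print(round(joint_angle_deg((100, 50), (100, 150), (200, 150)), 1))  # 90.0
```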

QUMA by SoftEther, 2011

I mentioned this in my first Looking Outwards post. Instead of using fiducial markers to track joint poses, this project uses standard potentiometers. With more than 20 degrees of freedom, this is an electronic mannequin that interfaces with a computer via USB and can be used to pose and animate 3D humanoid characters.

Modular Input Puppet by ETH Zurich, 2014

I also mentioned this in my first Looking Outwards post. This is very similar to the above project, except that it is modular, which means the figure can be reassembled to fit any skeleton, not only humanoids. This is great because the modular system can be used to animate elephants, spiders, and even dinosaurs (like in Jurassic Park).
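One way to represent a reconfigurable skeleton is as a tree of segments, where each module knows only its length, its joint reading, and its children. The Python sketch below (hypothetical names, planar joints only for brevity, and not ETH Zurich's implementation) computes each segment's tip position by forward kinematics. Because it walks whatever tree it is given, the same code handles a humanoid, a spider, or a crab.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Segment:
    """One hypothetical module: a rigid link with a rotary joint at its base."""
    name: str               # must be unique within the skeleton
    length: float
    angle_deg: float = 0.0  # joint reading, relative to the parent segment's direction
    children: List["Segment"] = field(default_factory=list)


def forward_kinematics(
    seg: Segment,
    origin: Tuple[float, float] = (0.0, 0.0),
    parent_heading: float = 0.0,
    out: Optional[Dict[str, Tuple[float, float]]] = None,
) -> Dict[str, Tuple[float, float]]:
    """Return {segment name: (x, y) of its tip}, walking the tree depth-first."""
    if out is None:
        out = {}
    heading = parent_heading + math.radians(seg.angle_deg)
    tip = (origin[0] + seg.length * math.cos(heading),
           origin[1] + seg.length * math.sin(heading))
    out[seg.name] = tip
    for child in seg.children:
        # Each child starts at its parent's tip and inherits its heading.
        forward_kinematics(child, tip, heading, out)
    return out


# A body with two legs attached at its tip, bent 90 degrees each way.
body = Segment("body", 2.0, children=[
    Segment("left_leg", 1.0, 90.0),
    Segment("right_leg", 1.0, -90.0),
])
tips = forward_kinematics(body)
# tips["left_leg"] is approximately (2.0, 1.0); tips["right_leg"] approximately (2.0, -1.0)
```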