Life ; History

http://openprocessing.org/sketch/7508

Another cellular automaton visualization, but this one is pretty cool. Each generation of cells falls and is rendered progressively darker each frame until it disappears into the black background. The cells sit in a 2D grid, each one a small colored tile, and each generation's tiles sit directly below those of the generation that follows it. The result is a 3D form: where there is a high degree of change, the structure appears branched. This implementation uses Conway's standard rules: a live cell with fewer than 2 or more than 3 live neighbors dies, and a dead cell with exactly 3 live neighbors comes alive. In addition, the cells are colored according to their neighbors, and they are initially colored in random patches. This gives a sense of which cells “took over”, or had dynamic behavior that let them move about and influence other cells. Pretty interesting. Someone should 3D-print it or fabricate it somehow, with those colors.
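To make the mechanism concrete, here is a minimal sketch (not the sketch's actual Processing code) of one Life step with colored cells, plus a fading history stack. It assumes a toroidal grid where each cell holds a color label or `None` when dead, and it assumes that "colored according to their neighbors" means a newborn cell takes the most common color among its three parents. The names `step`, `run`, and `layer_brightness` are hypothetical.

```python
from collections import Counter

# Assumption: grid is a list of rows; each cell is a color label or None (dead).
# Edges wrap around (toroidal grid).

def neighbors(grid, r, c):
    """Yield the 8 surrounding cells, wrapping at the edges."""
    rows, cols = len(grid), len(grid[0])
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue
            yield grid[(r + dr) % rows][(c + dc) % cols]

def step(grid):
    """One generation of Conway's rules (birth on 3, survival on 2 or 3)."""
    rows, cols = len(grid), len(grid[0])
    new = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            live = [n for n in neighbors(grid, r, c) if n is not None]
            alive = grid[r][c] is not None
            if alive and len(live) in (2, 3):
                new[r][c] = grid[r][c]  # survivors keep their color
            elif not alive and len(live) == 3:
                # hypothetical coloring rule: majority color of the parents
                new[r][c] = Counter(live).most_common(1)[0][0]
    return new

def run(grid, generations, max_depth):
    """Keep the last `max_depth` generations; older layers 'fall away'."""
    history = [grid]
    for _ in range(generations):
        history.append(step(history[-1]))
        history = history[-max_depth:]
    return history

def layer_brightness(age, max_depth):
    """Newest layer (age 0) is full brightness; older layers darken linearly."""
    return 1.0 - age / max_depth
```

Drawing each layer in `history` one unit lower than the next, scaled by `layer_brightness`, would give the stacked, darkening 3D form described above.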

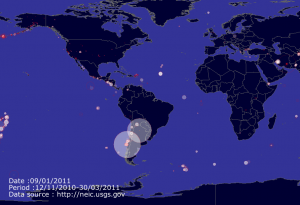

This project makes me think about how cellular automata could be a macroscopic model for population dynamics. Each color could represent a tribe, or a tribe of tribes, that takes over other regions, grows, and dies. Maybe this could even be a model for civilizations.

In fact, cellular automata have been used this way in biology. Here is one example:

http://www.exa.unicen.edu.ar/ecosistemas/Wetland/publicaciones/papers/29_ISRSE_RM.pdf

We Met Heads On

This project takes a mesh and deforms it using sound as input. The vertices of the mesh are twisted according to the strength of the sound wave. The objects were taken from 3D scans made available on Thingiverse. I like the idea of visualizing sound by using it as a parameter for distorting a mesh. It seems that many computational tools, and the art made with them, allow for a kind of simulated synesthesia. Synesthesia, the blending of different senses, seems like a data-mapping phenomenon: input from one sense, like sound, is mapped to another, like vision. While the ways the human brain does this are undoubtedly insanely complex, I imagine that the mathematical and computational methods for mapping one set of data onto another are related to synesthesia.
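As an illustration of sound-as-parameter, here is a minimal sketch of one way a twist deformation could be driven by loudness. It is an assumption, not the project's actual method: each vertex is rotated about the vertical (y) axis by an angle proportional to its height and to the current amplitude, and loudness is estimated as the RMS of an audio buffer. The names `twist`, `rms`, and the `strength` parameter are hypothetical.

```python
import math

def twist(vertices, amplitude, strength=math.pi):
    """Rotate each (x, y, z) vertex about the y-axis by an angle that grows
    with its height y and with the sound amplitude (assumed 0..1)."""
    twisted = []
    for x, y, z in vertices:
        angle = strength * amplitude * y
        cos_a, sin_a = math.cos(angle), math.sin(angle)
        # standard rotation about the y-axis; y is left unchanged
        twisted.append((x * cos_a + z * sin_a, y, -x * sin_a + z * cos_a))
    return twisted

def rms(samples):
    """Root-mean-square of an audio buffer, a common loudness estimate."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))
```

Per frame, one would compute `rms` over the latest audio buffer and pass it to `twist`; silence leaves the mesh untouched, and louder passages wind it more tightly. Because it is a pure rotation, each vertex keeps its distance from the axis.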

It would be pretty interesting if this project could be recreated in physical space in real time. Maybe if each vertex of the mesh were a physical node, each edge a small gas spring, and motors or solenoids pulled on the mesh, one could get a rough approximation of what these people have done using Processing. Obviously it would be much slower than the animation, but it could be pretty interesting, and it has the potential to be interactive.

Pixel Knitting

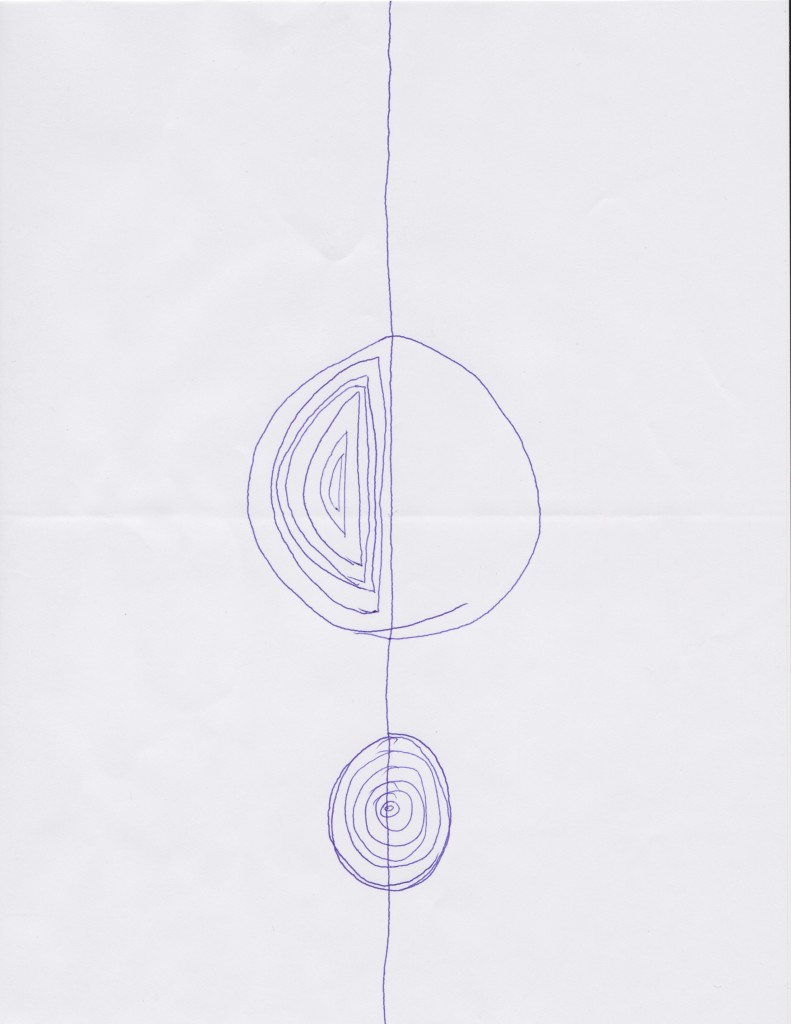

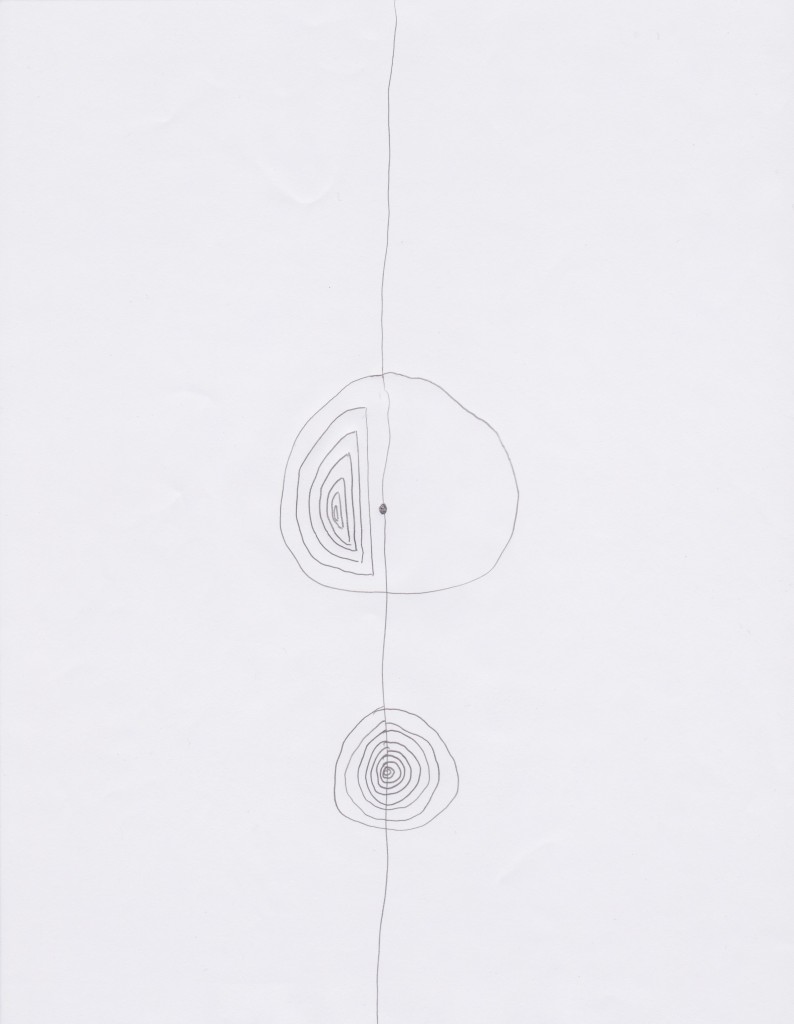

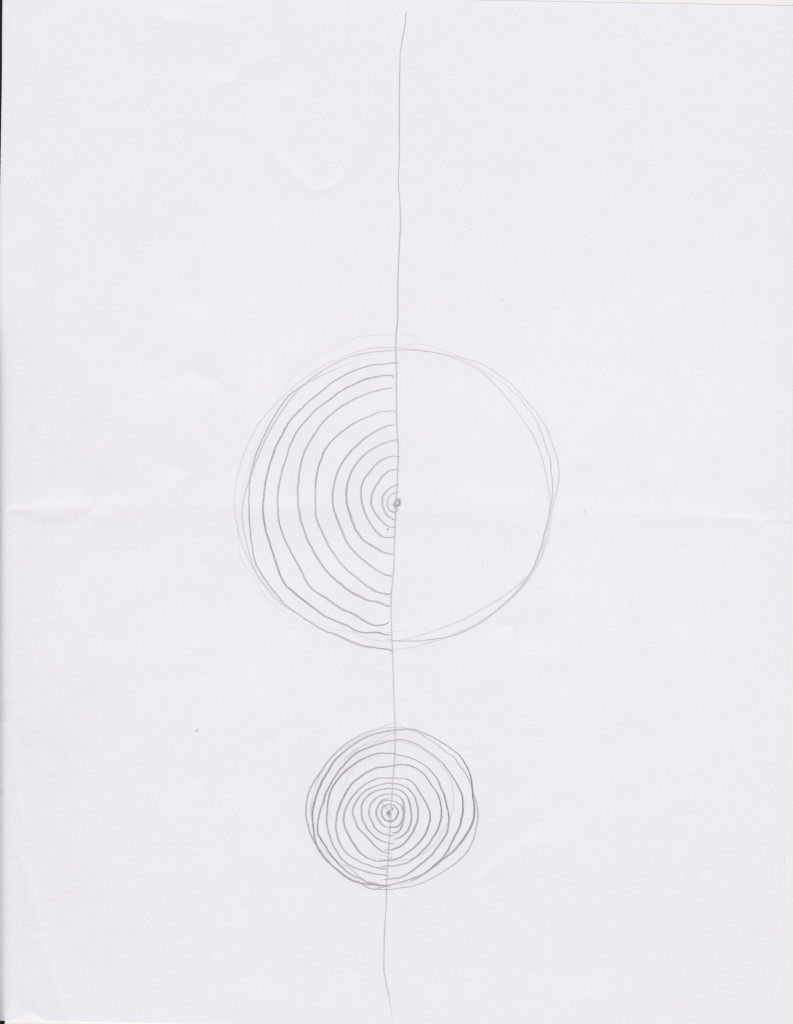

This project, by Pierre Commenge, takes a digital image and draws lines and circles whose properties are determined by the color, brightness, and saturation of the image's pixels. The code is beautifully simple, yet the output is bizarre and complex. Although clearly generated from photographs, the images take on a cartoonish or surreal quality.
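The general idea of mapping pixel properties to drawing primitives can be sketched as below. The specific mapping is a hypothetical choice for illustration, not Pierre Commenge's actual code: the image is sampled every `spacing` pixels, brightness (HSV value) sets a circle's radius, hue sets a line's direction, and saturation sets its length. The function name `knit` and its parameters are assumptions.

```python
import colorsys
import math

def knit(pixels, spacing=10, max_radius=6.0, max_length=12.0):
    """Turn a 2D grid of (r, g, b) pixels (0-255) into drawing primitives.
    Hypothetical mapping: value -> circle radius, hue -> line angle,
    saturation -> line length."""
    shapes = []
    for y in range(0, len(pixels), spacing):
        for x in range(0, len(pixels[0]), spacing):
            r, g, b = pixels[y][x]
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            # brightness controls how large the circle is
            shapes.append(("circle", x, y, v * max_radius, (r, g, b)))
            # hue picks the direction, saturation the length of the stroke
            angle = h * 2 * math.pi
            length = s * max_length
            x2 = x + length * math.cos(angle)
            y2 = y + length * math.sin(angle)
            shapes.append(("line", x, y, x2, y2, (r, g, b)))
    return shapes
```

The returned tuples could then be handed to any 2D drawing API; in Processing, `ellipse()` and `line()` are the natural fit. Gray pixels produce zero-length strokes and black pixels produce zero-size circles, so the texture concentrates in the bright, saturated regions of the photo.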

I would like to see a set of images where the output from one image is fed back into the algorithm. Perhaps a single run would make very minor tweaks, but many iterations of feedback would produce wild results. Or maybe that would just produce mush (it probably depends a lot on the details of the algorithm).

An even more ambitious extension of this idea would be to take it into 3D. The input would be a 3D scan, and the algorithm would add points, spheres, cubes, rods, etc. according to the point cloud or mesh. Local curvature, relative position in space, or surface roughness could serve as parameters for generating new geometry. If the scan were one of those fancy ones that captures texture as well as shape, then the same parameters used in this project could apply: color, brightness, saturation.