Designing Expressive Motion for a Shrubbery… which just happens to be a lot like designing expressive motion for a non-anthropomorphic social robot AND an exploration of theory of mind, which is when you imagine yourself in another agent’s place to figure out their intention/objective!

Turns out we’ll think of a rock as animate if it moves with intention. Literally. Check out my related work section below! And references! This is serious stuff!

I’m a researcher! I threw a Cyborg Cabaret! With Dan Wilcox! This project was one of the eight acts in the variety show and also ended up being a prototype for an experiment I want to run this summer!

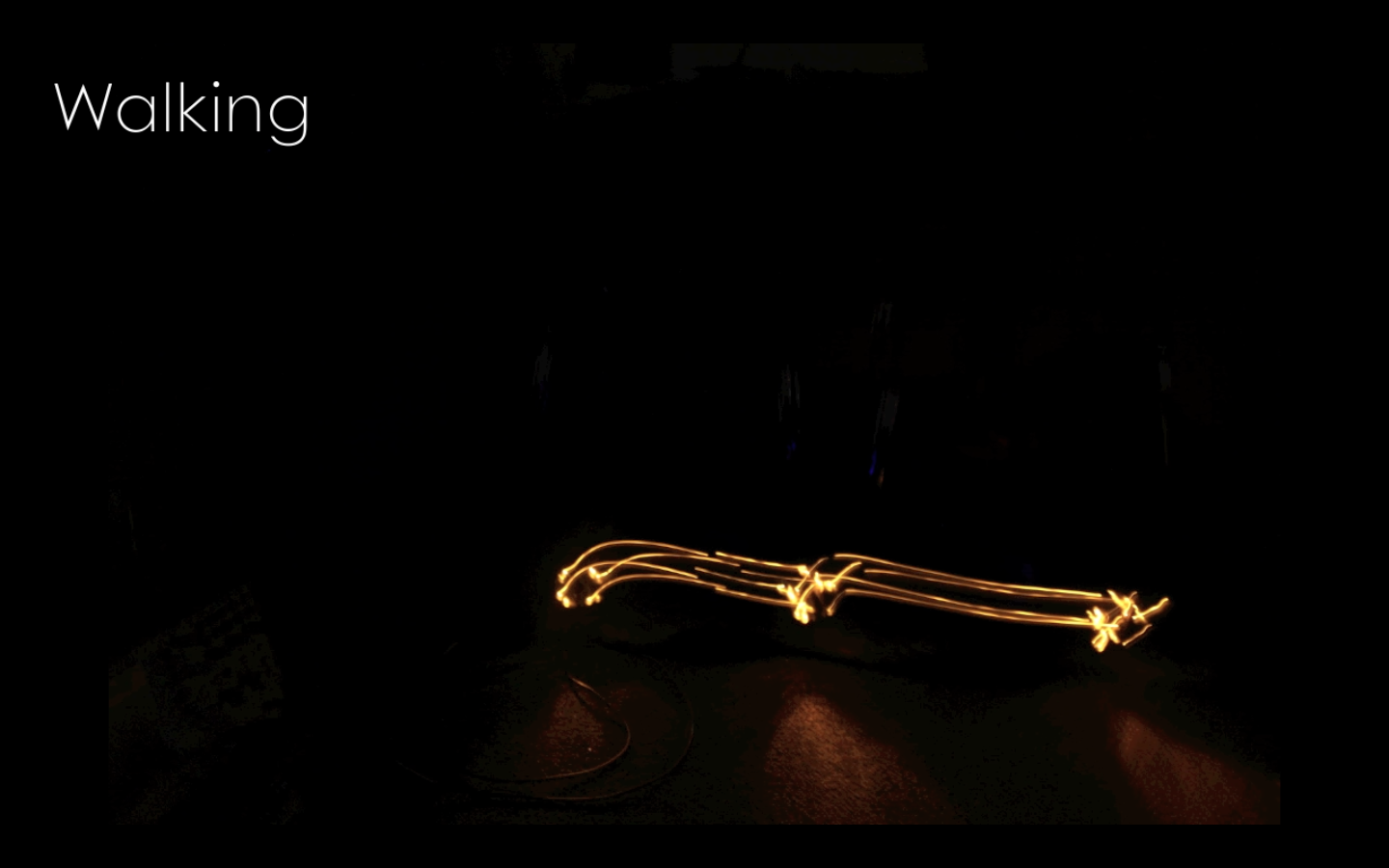

[vimeo=https://vimeo.com/42049774]

Here’s my fancy-pants description:

What is the line between character and object? When can movement imbue a non-anthropomorphic machine with intent and emotion? We begin with the simplest non-anthropomorphic forms, such as a dot moving across the page, or a shrubbery in a park setting with no limbs or facial features for expression. Our investigation consists of three short scenes originally featured at the April 27, 2012 Cyborg Cabaret, where we simulated initial motion sets with human actors in a piece called ‘Simplest Sub-Elements.’

[vimeo=https://vimeo.com/41955205]

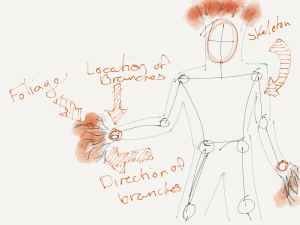

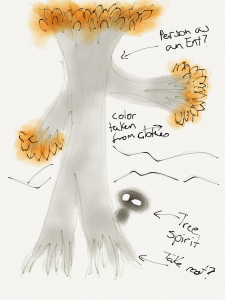

Setting: A shrubbery sits next to a park bench. The birds are singing. Plot summary: In Scene I, a dog approaches the shrubbery, tries to pee on it, and the shrubbery runs away. In Scene II, an old man sits on a park bench and the shrubbery surreptitiously sneaks a glance at him. The old man can’t quite fathom what the corner of his eye is telling him, but eventually catches the bush in motion, then, in sequence, moves slowly away, gets aggressive, and flees. In Scene III, a quadrotor/bumblebee enters the scene, explores the park, notices the shrubbery, engages the initially shy shrubbery, invites her to explore the park, and teaches her to dance, ending in them falling in love.

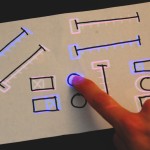

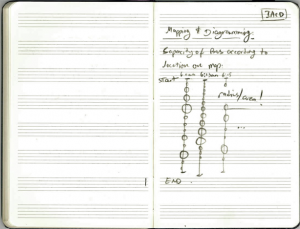

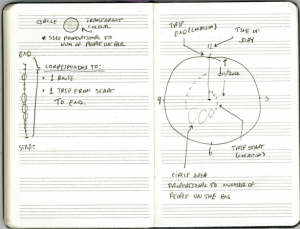

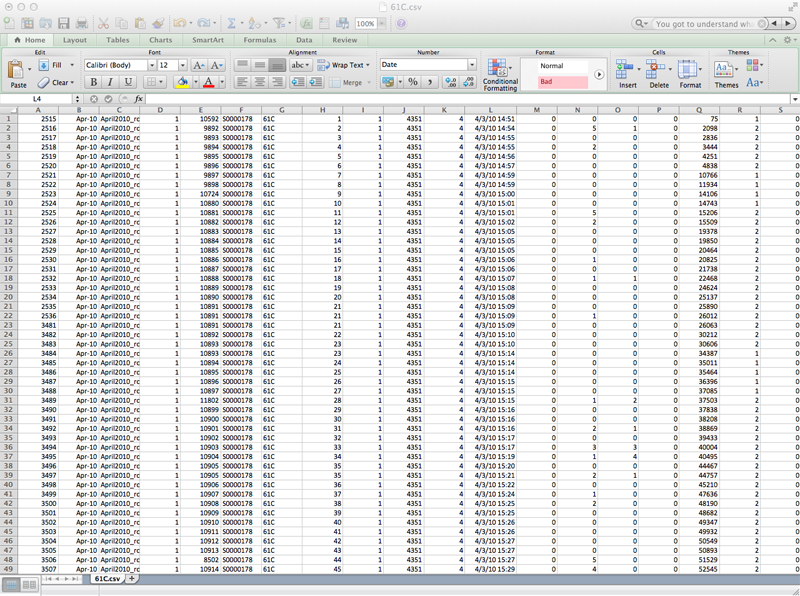

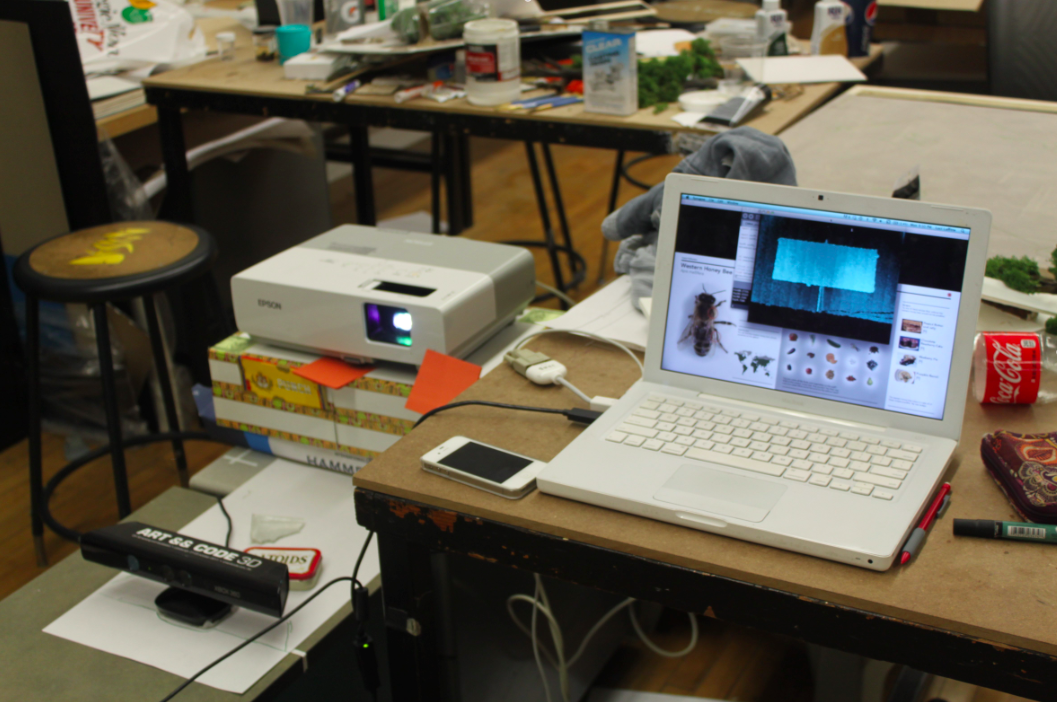

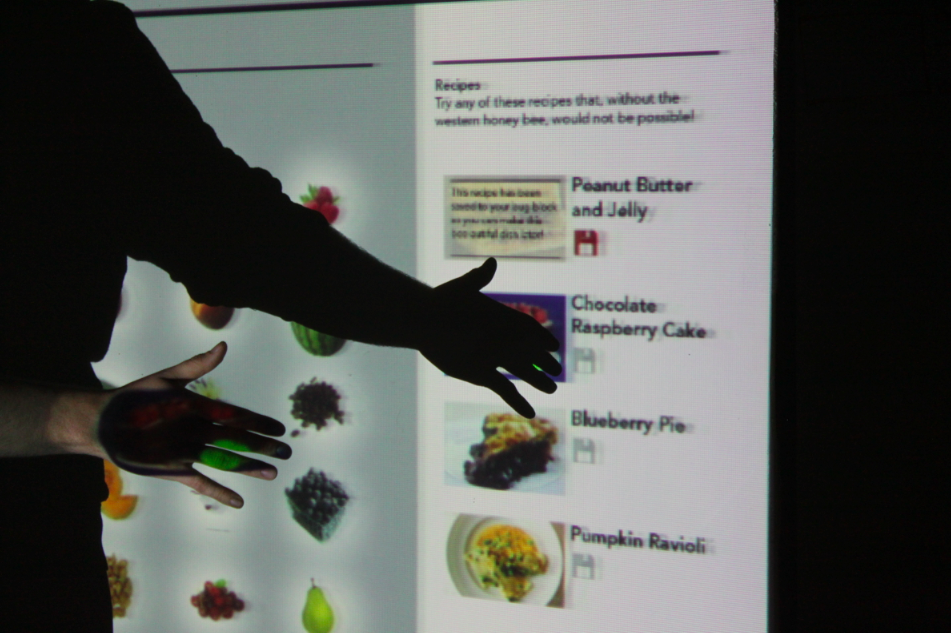

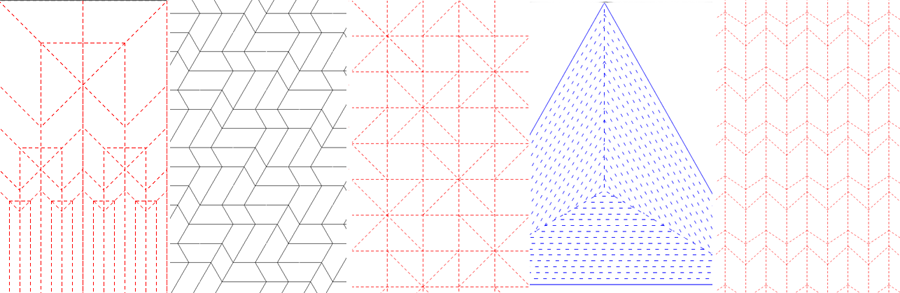

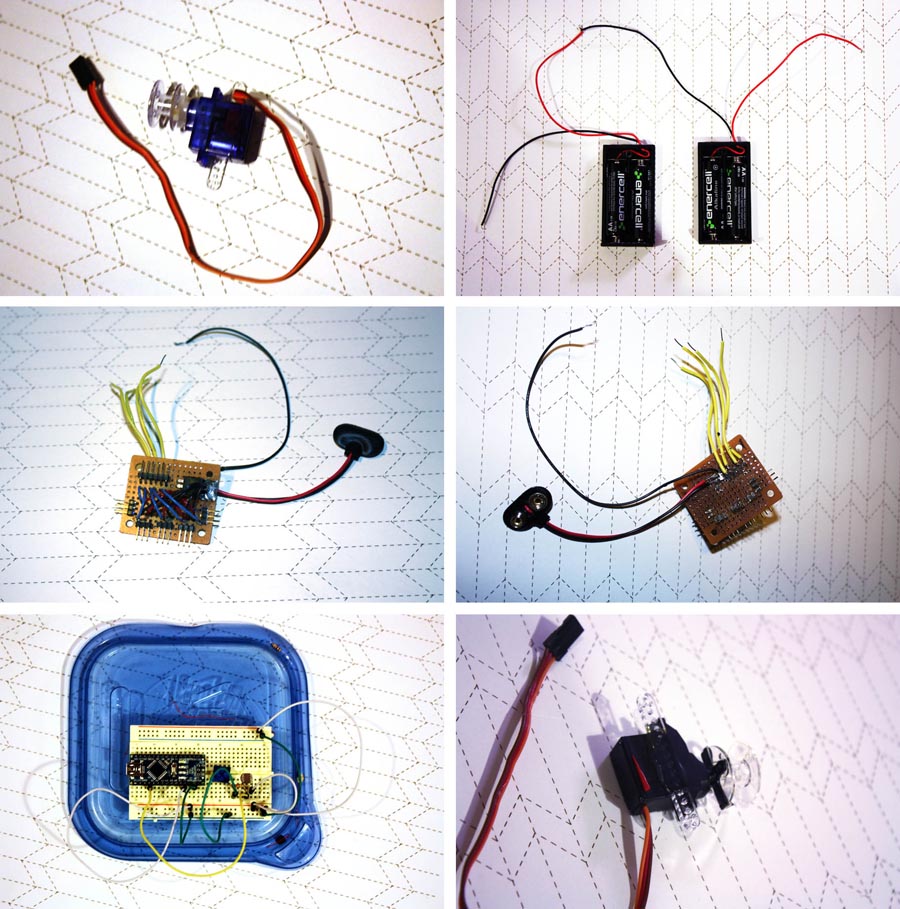

As continuing work, I want to invite you back to participate in the recreation of this scene with robotic actors. In this next case, you can help us break down the desired characteristics of expressive motion, regardless of your programming background, by describing what you think the Shrubbery should do in three dramatic scenes using three different interfaces. These include 1) written and verbal descriptions of what you think the natural behaviors might be, 2) applets on a computer screen in which you can drag an onscreen shrubbery’s actions and 3) a sympathetic interface in which you move a mini-robot across a reactables surface with detection capabilities.

If you would like to participate, send your name, email and location to heatherbot AT cmu DOT edu with the subject line ‘SHRUBBERY’! We’ll begin running subjects at the end of May.

We hope your contributions will help us identify an early set of features and parameters to include in an expressive motion software system, as well as evaluate the most effective interface for translating our collective procedural knowledge of expressive motion into code. The outputs of this system will generate behaviors for a robotic shrubbery that might just be the next big Hollywood heartthrob.

INSPIRATION

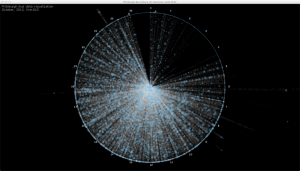

Heider and Simmel did a great experiment in 1944 (paper here) where they showed that people think moving dots are characters. This is the animation they showed their study subjects without introduction:

[youtube=https://www.youtube.com/watch?v=76p64j3H1Ng]

All of the subjects who watched the sequence made up a narrative to describe the motivations and emotional states of the triangles and circle moving through the frames, with the exception of those who had lesions in their brain. This is good news for social robots; even a dot can be expressive, so Roombas have it made! The section below outlines in further detail why this is so. The experiments we wish to run will explore this phenomenon in more detail and seek to identify the traits that a machine could use repeatably and appropriately.

RELATED WORK // THE NEUROSCIENCE

Objects can use motion to influence our interactions with them. In [6] (see bottom of post for references), Ju and Takayama found that a one-axis automatic door’s opening trajectory affected subjects’ sense of that door’s approachability. The speed and acceleration with which the door opened could reliably make people attribute emotion and intent to the door, e.g., that it was reluctant to let them enter, that it was welcoming or urging entrance, or that it judged them and decided not to let them in.

This idea that we attribute character to simply moving components is longstanding [7]. One of the foundational studies, the video of which was discussed above, is Heider’s ‘Experimental Study of Apparent Behavior’ from 1944 ([4][5]), which reveals how simple shapes can provoke detailed storytelling and attribution of character. The two triangles and a circle move in and around a rectangle with an opening and closing ‘door.’ Their relational motion and the timeline evoked in almost all participants a clear storyline despite the lack of introduction, speech, or anthropomorphic characteristics outside of movement.

These early findings encourage the idea that simple robots can evoke a similar sense of agency from humans in their environment. Since then, researchers have advanced our understanding of why and when we attribute mental state to simple representations that move. For example, various studies identify the importance of goal-directed motion and theory of mind for attribution of agency in animations of simple geometric shapes.

The study in [1] contrasted these attributions of mental states between children with different levels of autism and adults. Inappropriate attribution of mental state for the shape was used to reveal impairment in social understanding. What this says is that it is part of normal development for humans to attribute mental state and agency to any object or shape that has the appearance of intentional motion.

Using neuroimaging, [2] similarly contrasts attribution of mental state with “action descriptions” for simple shapes. Again, brain activation patterns support the conclusion that perception of biological motion is tightly tied into theory of mind attributions.

Finally, [3] shows that mirror neurons, so named because they seem to be internal motor representations of observed movements of other humans, will respond to both a moving hand and a non-biological object, suggesting that we assimilate non-biological movements along the same pathway we would use for natural motions. Related work on robotics and mirror neurons includes [10].

Engel sums up the phenomenon with the following: “The ability to recognize and correctly interpret movements of other living beings is a fundamental prerequisite for survival and successful interactions in a social environment. Therefore, it is plausible to assume that a special mechanism exists dedicated to the processing of biological movements, especially movements of members of one’s own species…” later concluding, “Since biological movement patterns are the first and probably also the most frequently encountered moving stimuli in life, it is not too surprising that movements of non-biological objects are spontaneously analyzed with respect to biological categories that are represented within the neural network of the [Mirror Neuron System]” [3].

RESEARCH MOTIVATION

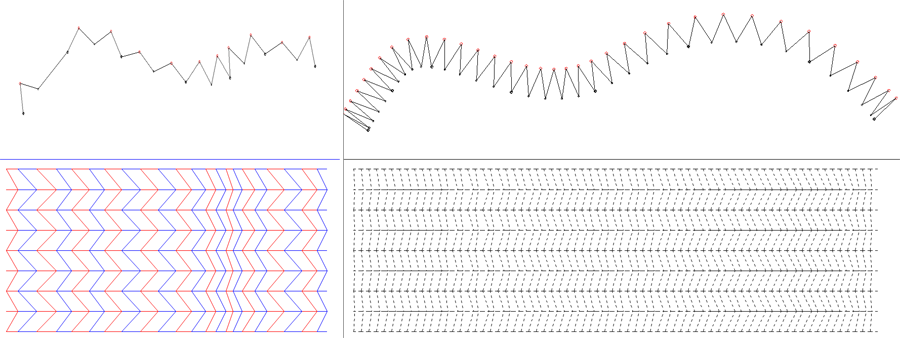

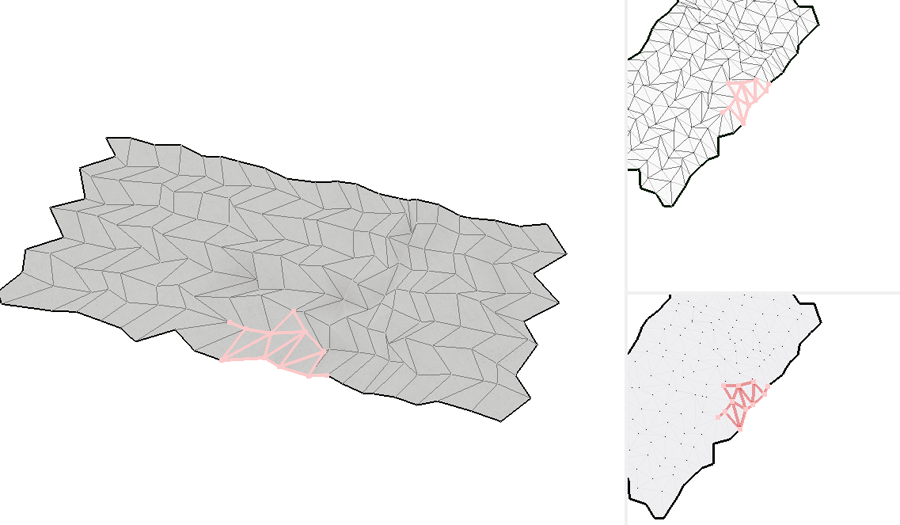

Interdisciplinary collaborations between robotics and the arts can sometimes be stilted by a lack of common terms or methodology. While previous investigations have looked to written descriptions of physical theater (e.g. Laban motion) to glean knowledge of expressive motion, this work evaluates the design of interfaces that enable performance and physical theater specialists to communicate their procedural experience of designing character-consistent motion without software training. In order to generate expressive motion for robots, we must first understand the impactful parameters of motion design. Our early evaluation asks subjects to help design the motion of a robot without limbs that traverses the floor with orientation over the course of three interaction scenarios. By (1) analyzing word descriptions, (2) tracking on-screen applets where they ‘draw’ motion, and (3) creating a miniaturized scene with a mockup of the physical robot that they move directly, we hope to lower the cognitive load of translating principles of expressive motion to robots. These initial results will help us parameterize our motion controller and provide inspiration for generative expressive and communicatory robot motion algorithms.
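To make ‘parameterize’ a little more concrete, here is a minimal sketch of how a path someone draws in an applet could be boiled down to a few candidate motion parameters. This is not our actual system: the function name `motion_features`, the `(t, x, y)` sample format, and the particular features (speed, turning) are all assumptions for illustration.

```python
import math


def motion_features(samples):
    """Summarize a drawn path as a few candidate motion parameters.

    `samples` is a hypothetical format: a list of (t, x, y) tuples with
    time in seconds (strictly increasing) and position in any length unit.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")

    speeds = []
    headings = []
    path_length = 0.0
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dist = math.hypot(x1 - x0, y1 - y0)
        path_length += dist
        speeds.append(dist / (t1 - t0))
        if dist > 0:
            # Direction of travel for this segment, in radians.
            headings.append(math.atan2(y1 - y0, x1 - x0))

    # Sum of absolute heading changes, each wrapped into [-pi, pi).
    total_turn = 0.0
    for h0, h1 in zip(headings, headings[1:]):
        delta = (h1 - h0 + math.pi) % (2 * math.pi) - math.pi
        total_turn += abs(delta)

    duration = samples[-1][0] - samples[0][0]
    return {
        "duration": duration,
        "path_length": path_length,
        "mean_speed": path_length / duration,
        "peak_speed": max(speeds),
        "total_turn": total_turn,
    }
```

The hope would be that a sneaky glance and a panicked flee end up with visibly different numbers, e.g. a much higher `peak_speed` for the flee. Whether these are the right features is exactly the kind of thing the study is meant to find out.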

ADDITIONAL IMAGES

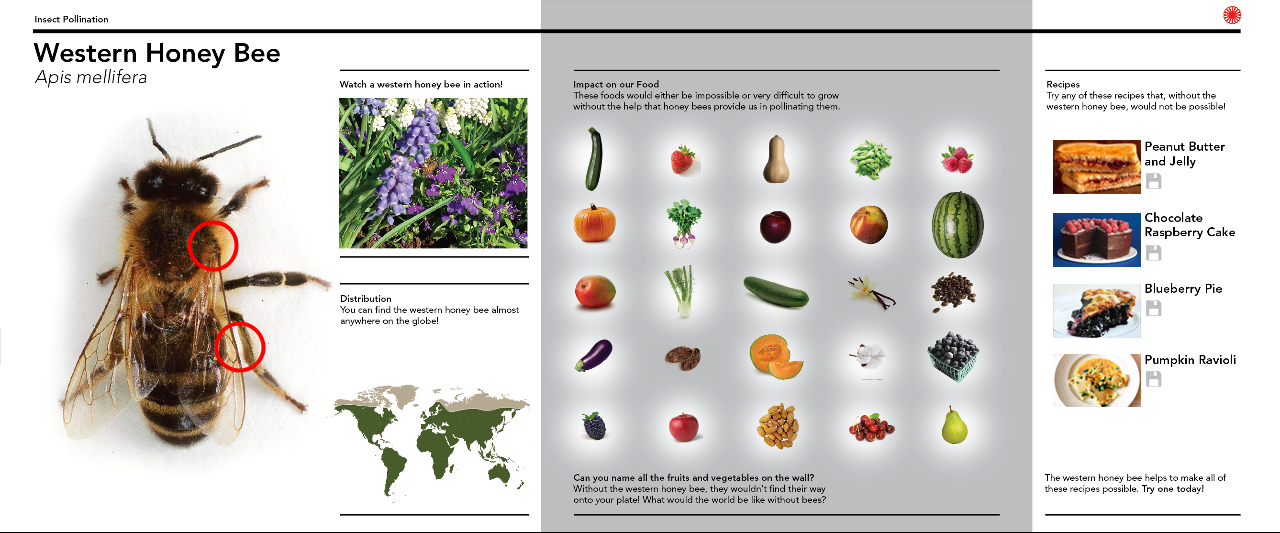

Surprised Old Man gives the shrubbery a hard look:

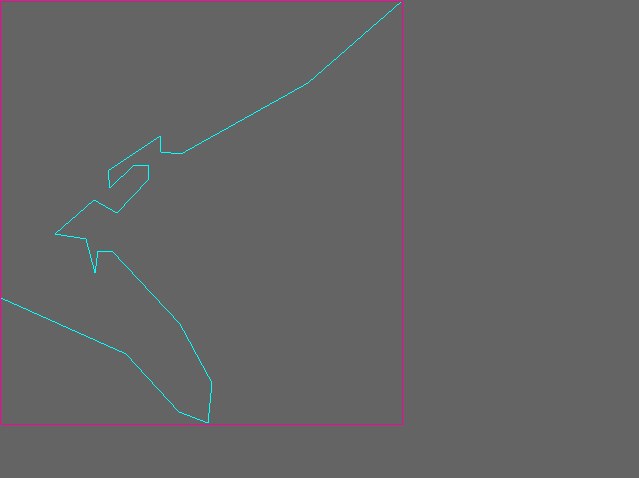

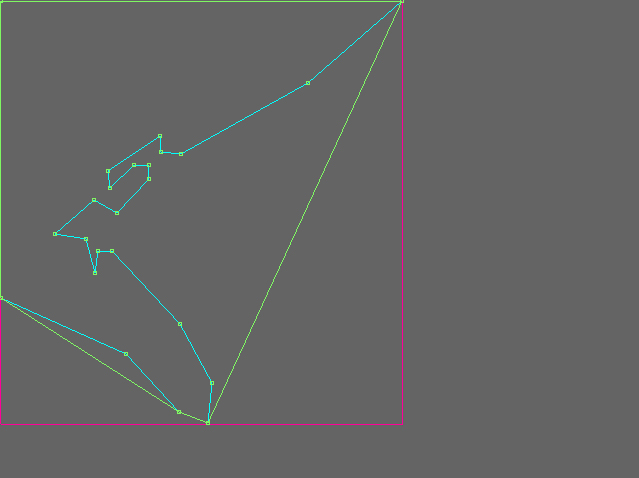

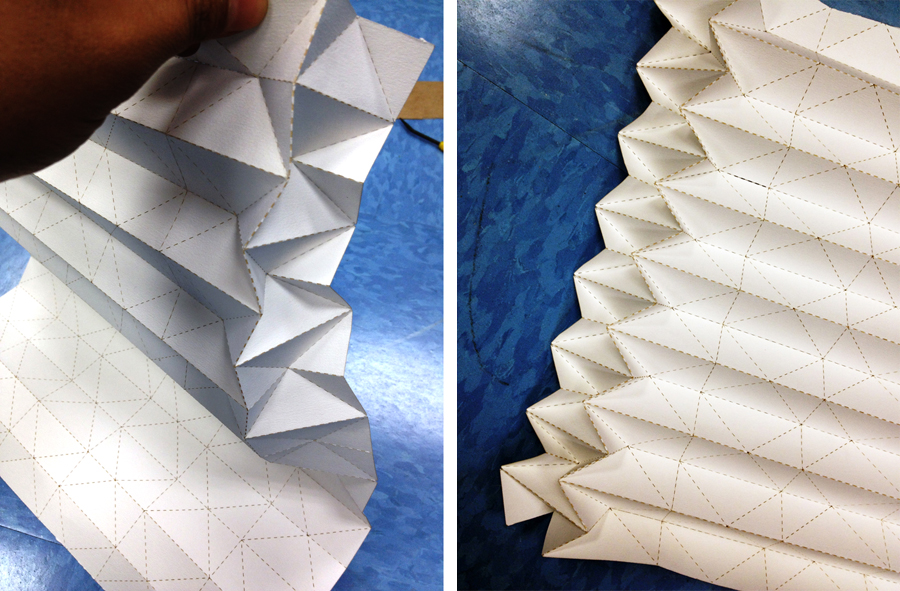

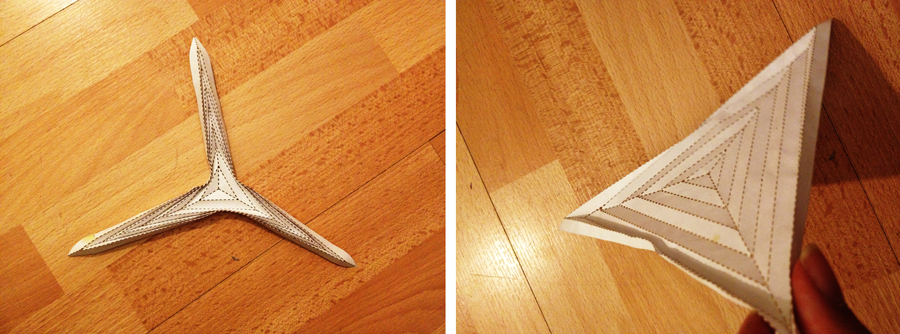

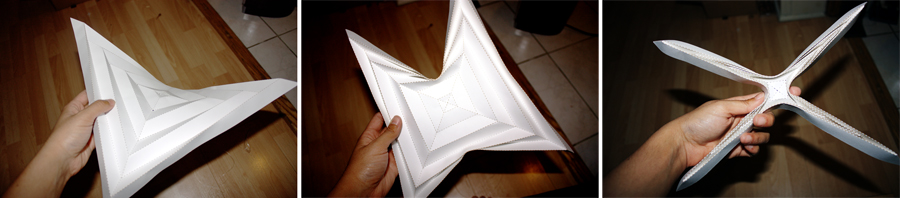

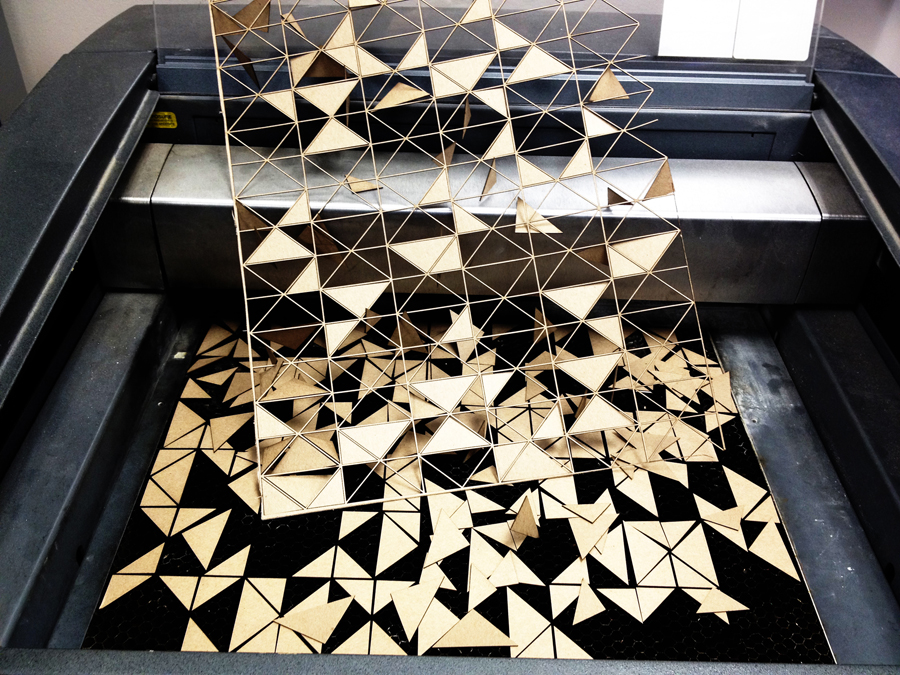

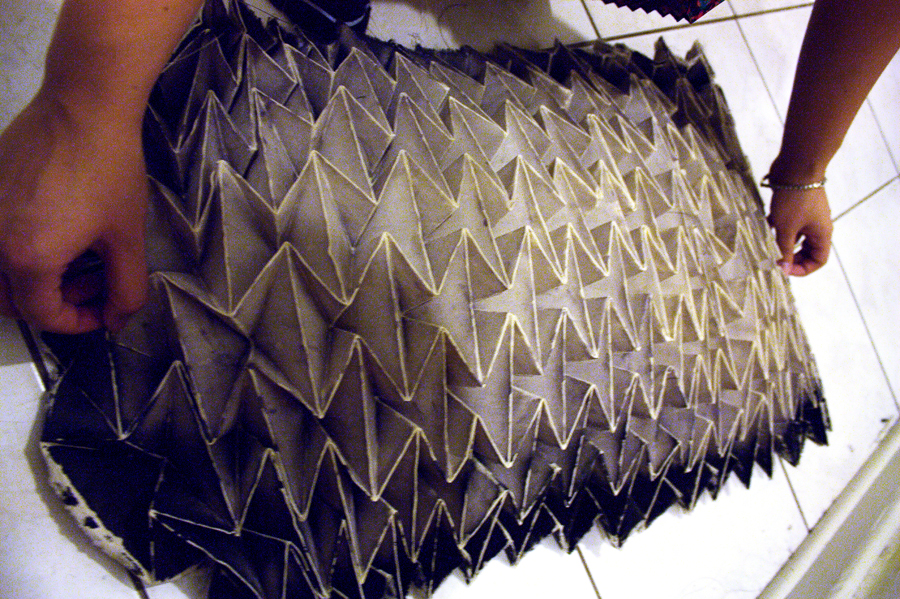

Sample scene miniatures displayed at the exhibition:

REFERENCES

[1] Abell, F., Happé, F., Frith, U. Do triangles play tricks? Attribution of mental states to animated shapes in normal and abnormal development. Cognitive Development, Volume 15, Issue 1, January–March (2000), pp 1–16.

[2] Castelli, F., et al. Movement and Mind: A Functional Imaging Study of Perception and Interpretation of Complex Intentional Movement Patterns. NeuroImage 12 (2000), pp 314-325.

[3] Engel, A., et al. How moving objects become animated: The human mirror neuron system assimilates non-biological movement patterns. Social Neuroscience, 3:3-4 (2008), 368-387.

[4] Heider, F., Simmel, M. An Experimental Study of Apparent Behavior. The American Journal of Psychology, Vol. 57, No. 2. (1944), pp. 243-259.

[5] Heider, F. Social perception and phenomenal causality. Psychological Review, Vol 51(6), Nov, 1944. pp. 358-374.

[6] Ju, W., Takayama, L. Approachability: How people interpret automatic door movement as gesture. International Journal of Design 3, 77–86 (2009).

[7] Kelley, H. The processes of causal attribution. American Psychologist, Vol 28(2), Feb, 1973. pp. 107-128