Technology for Two: My Digital Romance

//Synopsis

From the exhibition flyer: Long distance relationships can be approached with new tools in the age of personal computation. Can interaction design take the love letter closer to its often under-stated yet implicit conclusion? Can emotional connections truly be made over a social network for two? This project explores new possibilities in “reaching out and touching someone”.

[youtube https://www.youtube.com/watch?v=h_aKZyIaqKg]

[youtube https://www.youtube.com/watch?v=tIT1-bOx5Lw]

//Why

The project began as a one-liner, born of a simple and perhaps common frustration. In v1.0 (see below) the goal was to translate my typical day, typing at a keyboard as I am now, into private time spent with my wife. The result was a robot penis that became erect only when I was typing at my keyboard.

Her reaction: “It’s totally idiotic and there’s no way I’d ever use it.”

So we set out to talk about why she felt that way and, if we were to redesign it in any way, how would it be different? The interesting points to come out of the conversation:

- What makes sex better than masturbation is the emotional connection that comes from one participant’s ability to respond to the other’s cues. It’s a dialogue.

- Other desirable design features are common to existing devices.

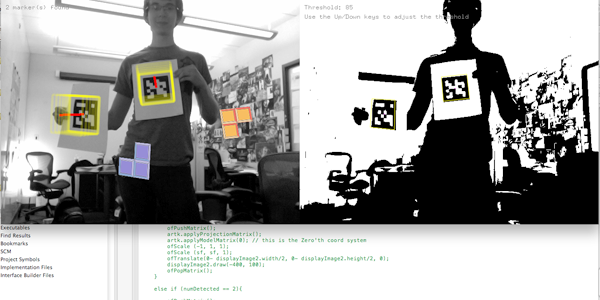

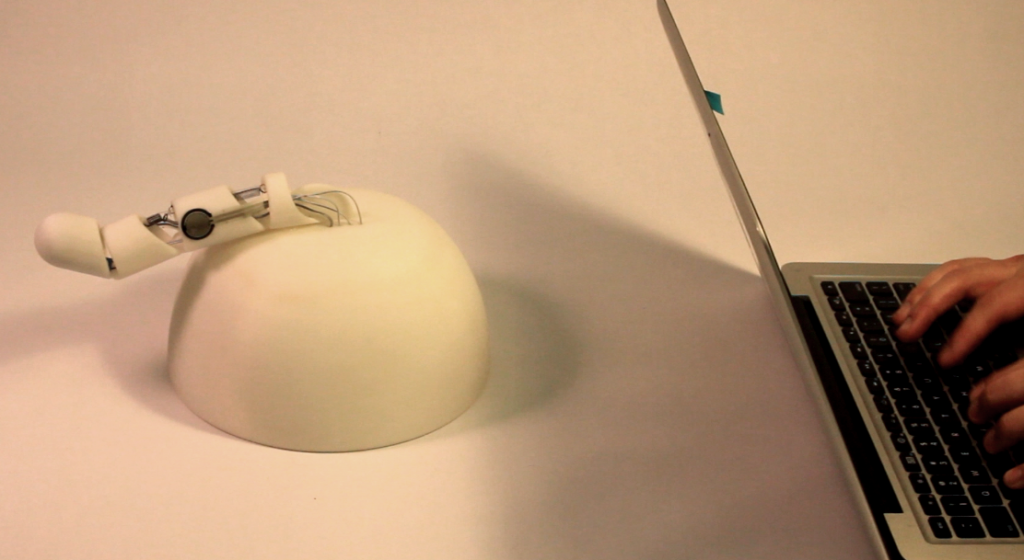

V2 was redesigned so nothing functions without the ‘permission’ of the other. The physical device is connected to a remote computer over WiFi. The computer runs an application that operates the physical device. Until the device is touched, the application doesn’t have any control. Once it is activated, keystrokes accumulate to erect it. Once erect, the device measures how quickly or aggressively it’s being used and updates the application graphic, which changes from a cooler to a hotter red. If the color indicates aggressive use, the controller may choose to increase the intensity with a series of vibrations.

While this physically approximates the interactive dance that is sex, the question remains: ‘Does it make an emotional connection?’ Because the existing version is really only a working prototype that cannot be fully implemented, that question is still unanswered.

//BUT WHY? and…THE RESULTS OF THE EXHIBITION…

What no one wanted to ask was, “Why don’t you just go home and take care of your wife?” No one did. The subversive side of the project, which is really my main motivation, is offering viewers every opportunity to say, ‘I’m an idiot. Work less. Get your priorities straight.’

I believe we’ve traded meaningful relationships for technological approximations. While I would genuinely like to give my wife more physical affection, I don’t actually want to do it with some technological intermediary. No more than I want “friends” on Facebook. But many people do want to stay connected in this way. The interesting question is, ‘Are we ready for technology to replace even our most meaningful relationships?’ Is it because the tech revolution has exposed some compulsion to work, or some fear of intimacy? If not, why did not one of the 30+ people I spoke to in the exhibition question it? Continuing to explore this question will guide future work.

//Interaction

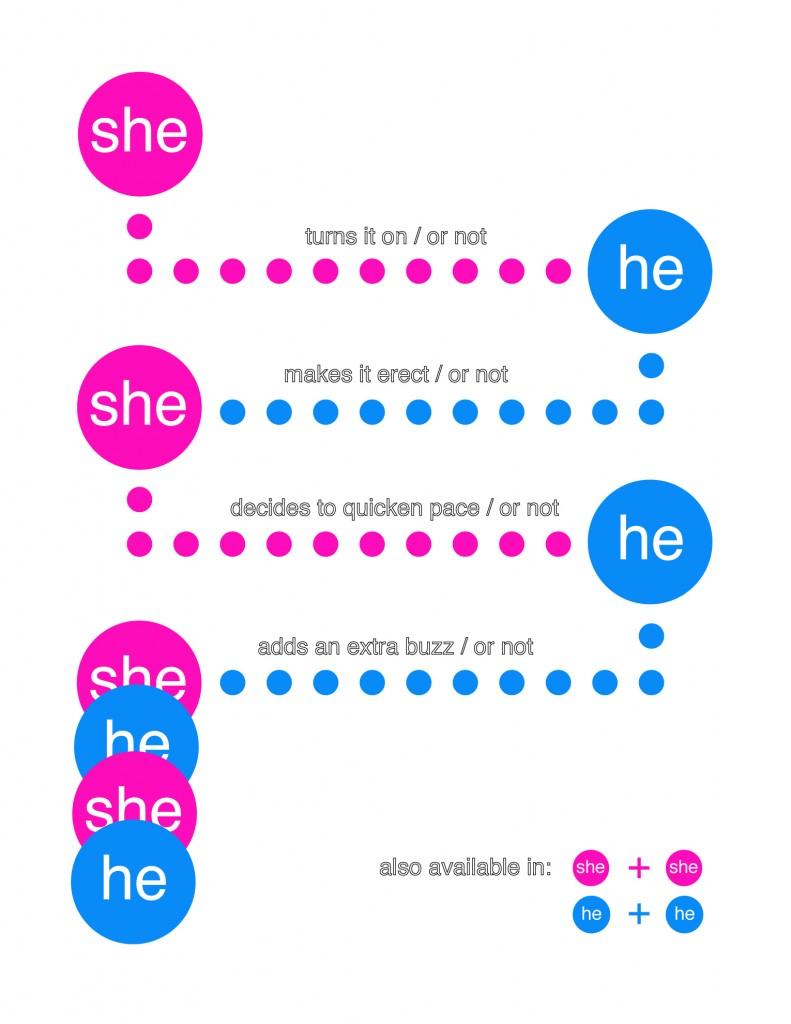

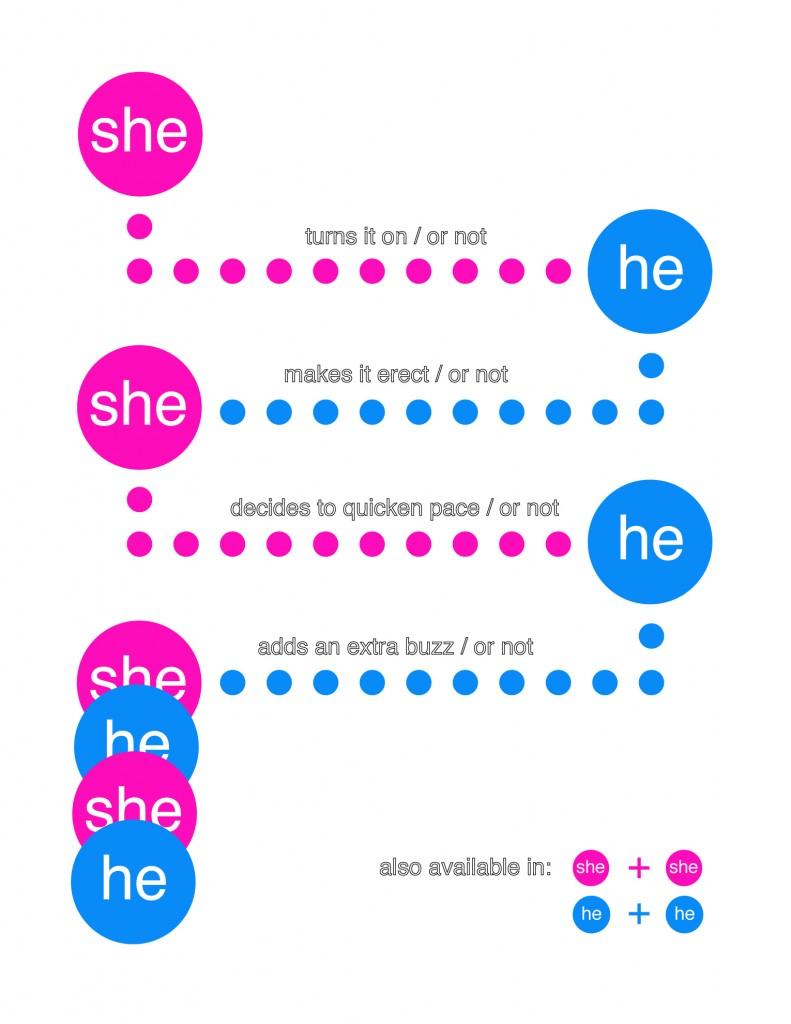

Inspired by some great conversations with my wife, “Hand Jive” by B.J. Fogg, and the first-class erudition of Kyle Machulis, the flow of interaction was redesigned as diagrammed here. It is important to note the “/or not”, following my wife’s sarcastic criticism, “Oh. So you turn it on and I’m supposed to be ready to use it.”

Reciprocity, like Sex

It is turned on by being touched. This unlocks the control application on the computer. If not used on the device side, it eventually shuts back down.
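The touch-to-unlock rule with its eventual shutdown can be sketched as a small timer. This is an illustration rather than the project's actual code: the class name `TouchLock` and the 30-second timeout are assumptions, since the writeup only says the device "eventually" shuts back down.

```python
class TouchLock:
    """Grants the controller access only while the device side stays active."""

    def __init__(self, idle_timeout_s=30.0):  # timeout value is an assumption
        self.idle_timeout_s = idle_timeout_s
        self.last_touch = None  # time of the most recent touch, or None

    def touch(self, now):
        """Record a touch reported by the device at time `now` (seconds)."""
        self.last_touch = now

    def unlocked(self, now):
        """True if the device was touched within the idle timeout."""
        if self.last_touch is None:
            return False  # never touched: the application has no control
        return (now - self.last_touch) <= self.idle_timeout_s
```

Every later step would check `unlocked(now)` first, so nothing on the controller side does anything without a recent touch on the device side.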

Twin Force Resistant Sisters

The application ‘gets aroused’ to notify the controller. At this point, keystroke logging controls the motor that erects the device.
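One way to turn keystrokes into motor movement is to count a fixed number of stepper steps per key press until a travel limit is reached. The sketch below assumes exactly that; `KeystrokeDrive`, `steps_per_key`, and `max_steps` are hypothetical names and values, not figures from the prototype.

```python
class KeystrokeDrive:
    """Accumulates keystrokes into stepper-motor steps, up to a travel limit."""

    def __init__(self, steps_per_key=4, max_steps=400):  # assumed values
        self.steps_per_key = steps_per_key
        self.max_steps = max_steps
        self.position = 0  # steps wound so far

    def key_pressed(self, unlocked):
        """Return how many steps to send to the motor for this keystroke."""
        if not unlocked:
            return 0  # device has not been touched: keystrokes are ignored
        step = min(self.steps_per_key, self.max_steps - self.position)
        self.position += step
        return step

    @property
    def erect(self):
        """True once the full travel has been wound."""
        return self.position >= self.max_steps
```

Clamping at `max_steps` keeps further typing from over-winding the line once the device is fully raised.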

Turned On

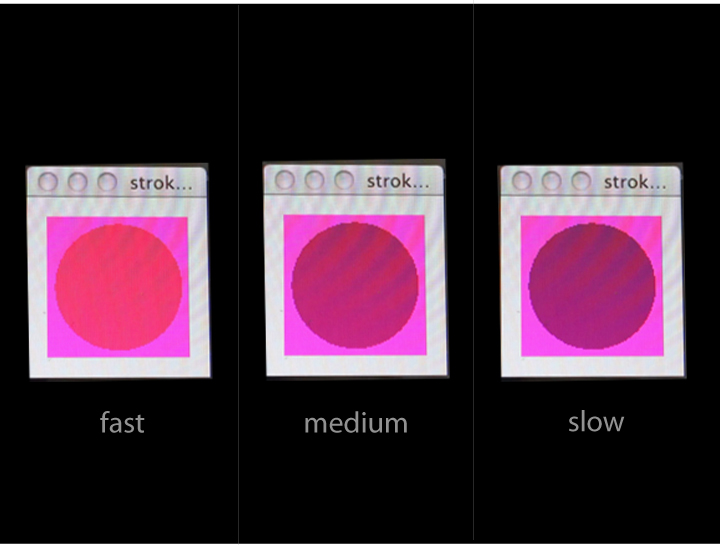

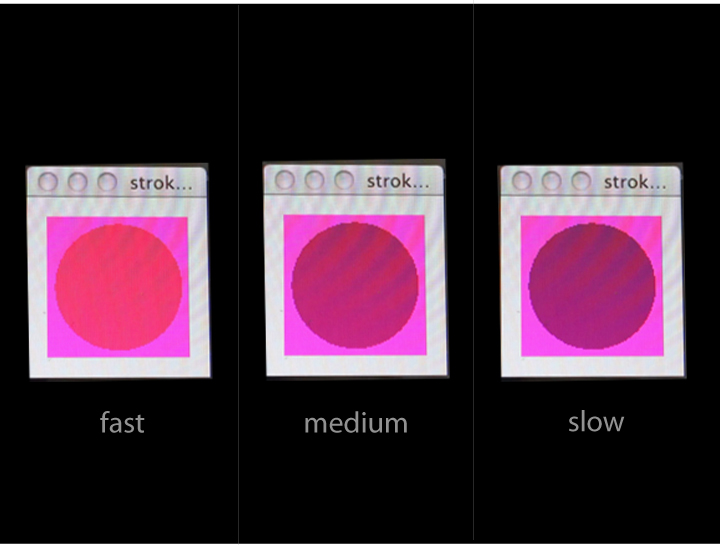

The application monitors how quickly the device is being stroked by measuring the input time between two offset sensors.
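With two sensors a known distance apart, the pace follows from the time between their two readings. A minimal sketch, assuming timestamps in seconds and a hypothetical 40 mm spacing between the sensors (the real spacing is not given here):

```python
def stroke_speed(t_first, t_second, sensor_spacing_mm=40.0):
    """Speed in mm/s from the times at which two offset sensors were pressed.

    sensor_spacing_mm is an assumed value, not a measurement of the prototype.
    """
    dt = abs(t_second - t_first)
    if dt == 0:
        return None  # simultaneous readings carry no speed information
    return sensor_spacing_mm / dt
```

For example, readings half a second apart with the assumed spacing give `stroke_speed(1.0, 1.5)` = 80 mm/s.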

Faster, Pussycat?

The faster the stroke, the hotter the red circle gets. (Thank you Dan Wilcox for pointing out that it looks like a condom wrapper. That changed my life.)
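The cooler-to-hotter red can be produced by interpolating between two colors according to pace. This is only a sketch: the two RGB endpoints and the `slow` and `fast` limits are assumptions chosen for illustration.

```python
def pace_to_red(speed, slow=20.0, fast=200.0,
                cool=(105, 40, 60), hot=(255, 0, 0)):
    """Map a stroke speed to an RGB color between a cool and a hot red.

    All default values are assumed; speeds outside [slow, fast] are clamped.
    """
    t = (speed - slow) / (fast - slow)
    t = max(0.0, min(1.0, t))  # clamp to the 0..1 range
    return tuple(round(c + (h - c) * t) for c, h in zip(cool, hot))
```

A linear blend is the simplest choice; an eased curve would make the circle react more strongly near the top of the range.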

Gooey

Two “pager motors” can then be applied individually or in tandem by pressing the keys “B”, “U”, “Z”. This feature could be programmed to turn on after reading a certain pace, or unlocked only after reaching a threshold pace.
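The key handling, including the optional threshold, can be sketched as a lookup table. Which key drives which motor is not stated above, so the mapping here ("B" for the first motor, "U" for the second, "Z" for both) is an assumption, as is the function name.

```python
# Assumed mapping: key -> (motor 1 on, motor 2 on)
KEY_TO_MOTORS = {
    "B": (True, False),
    "U": (False, True),
    "Z": (True, True),
}


def vibration_command(key, pace, threshold=None):
    """Return which of the two pager motors to switch on for a key press.

    If a threshold is given, the motors stay off until pace reaches it.
    """
    motors = KEY_TO_MOTORS.get(key.upper())
    if motors is None:
        return (False, False)  # any other key does nothing
    if threshold is not None and pace < threshold:
        return (False, False)  # feature still locked below the threshold pace
    return motors
```

Leaving `threshold` as `None` gives the always-available behavior; passing a value gives the "unlocked only after reaching a threshold pace" variant.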

Industry Standard

//Process

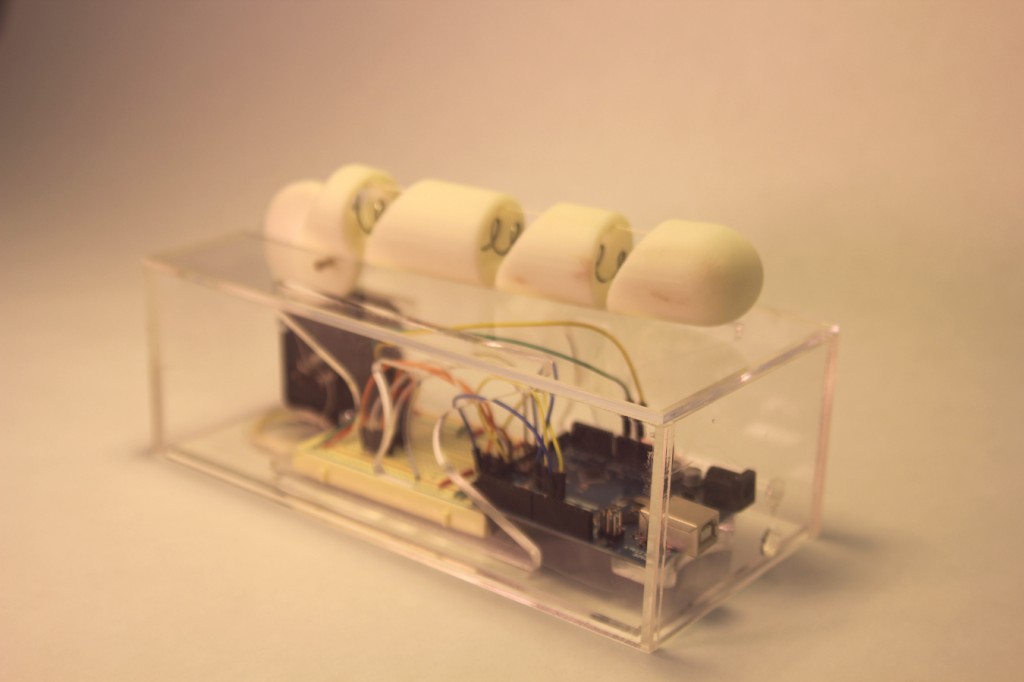

This was V1, a.k.a. “My Dick in a Box”.

v1.0

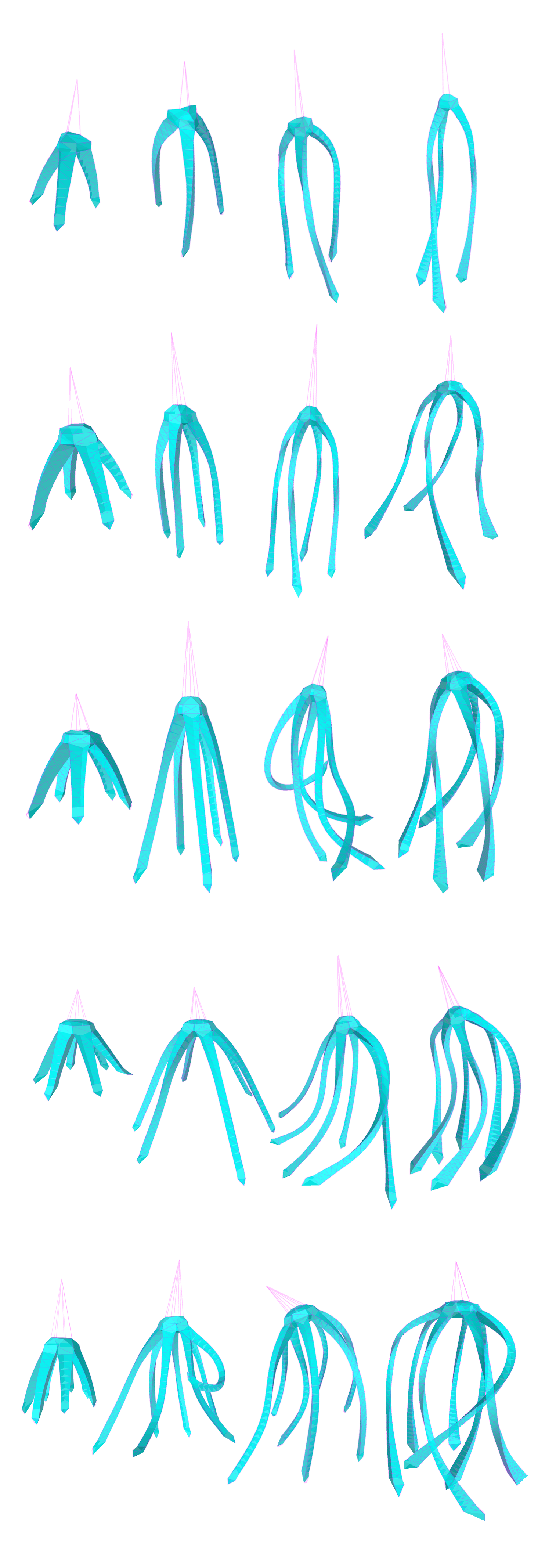

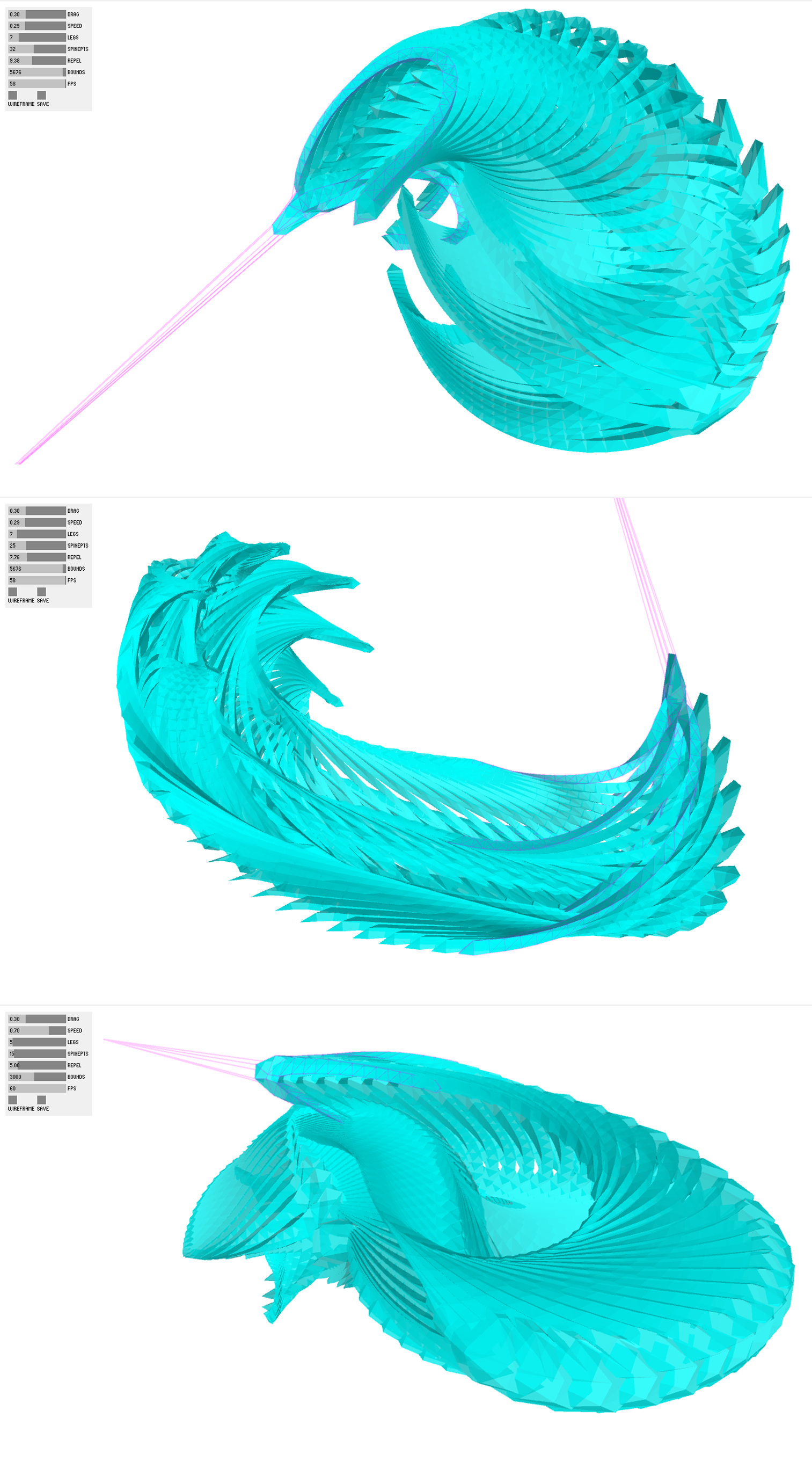

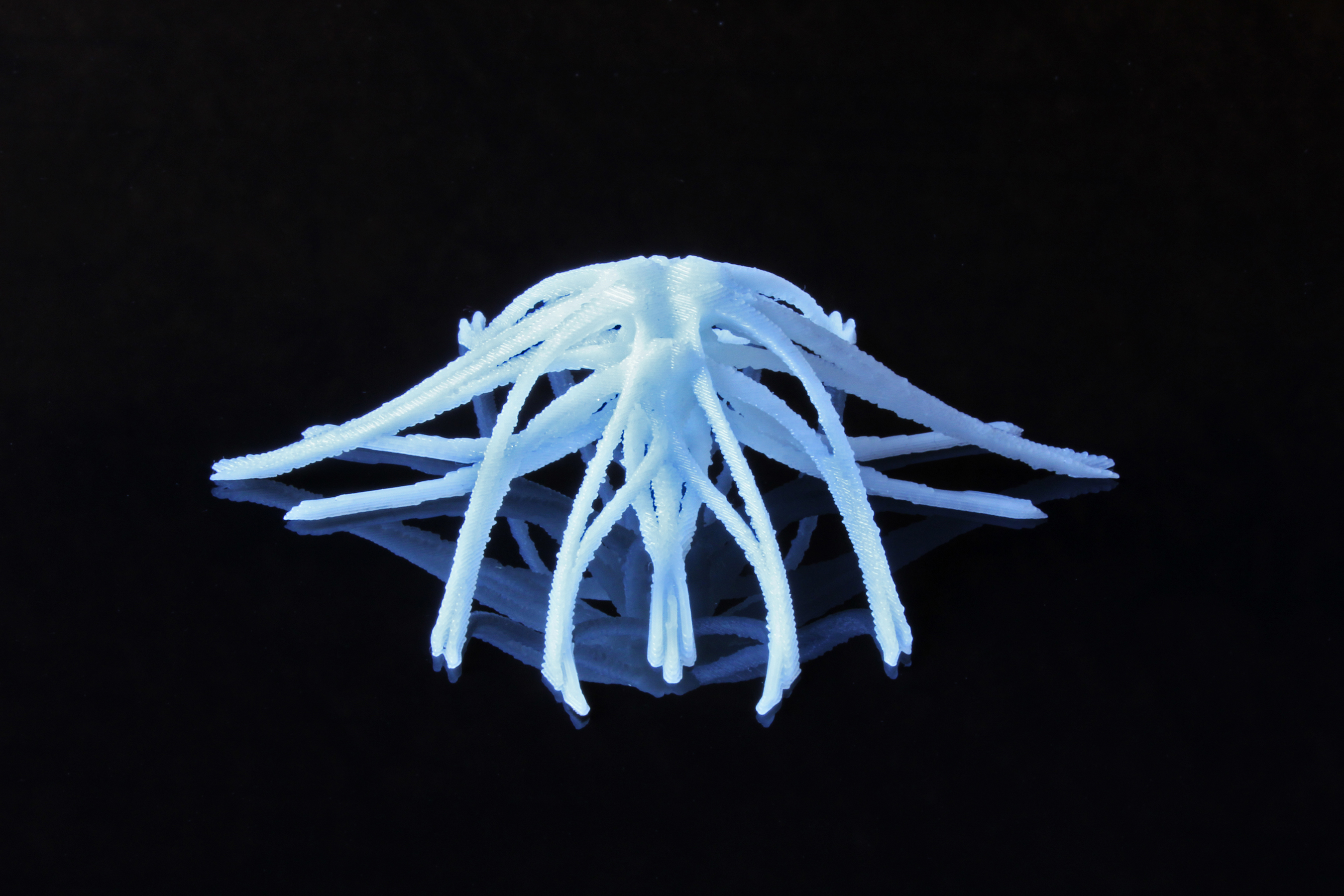

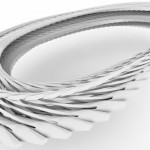

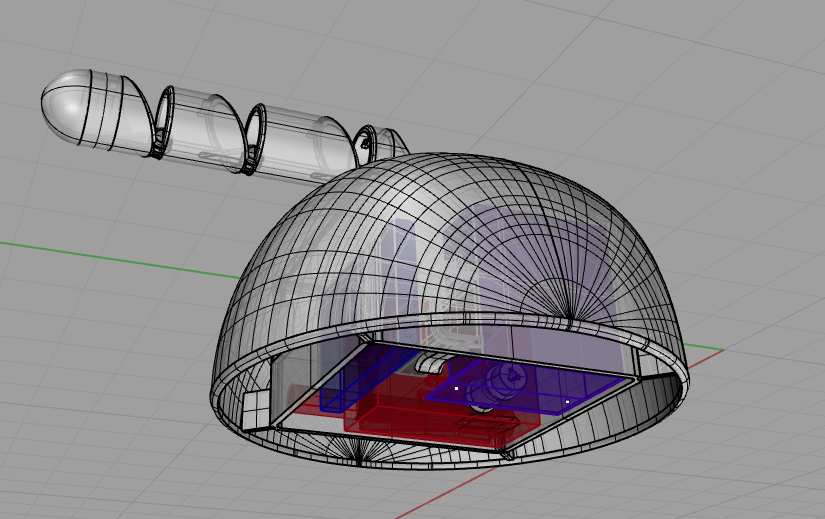

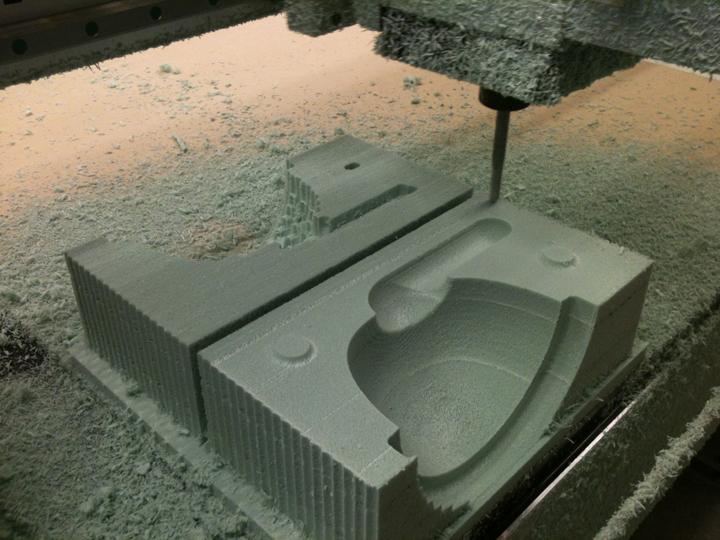

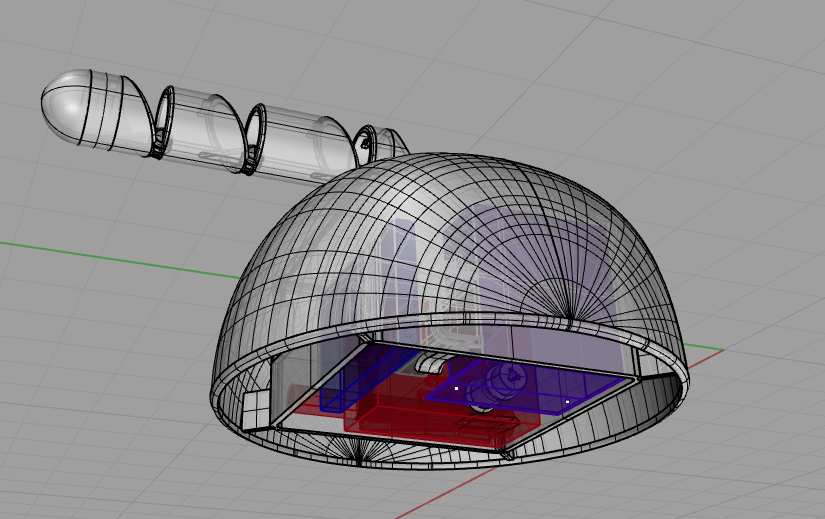

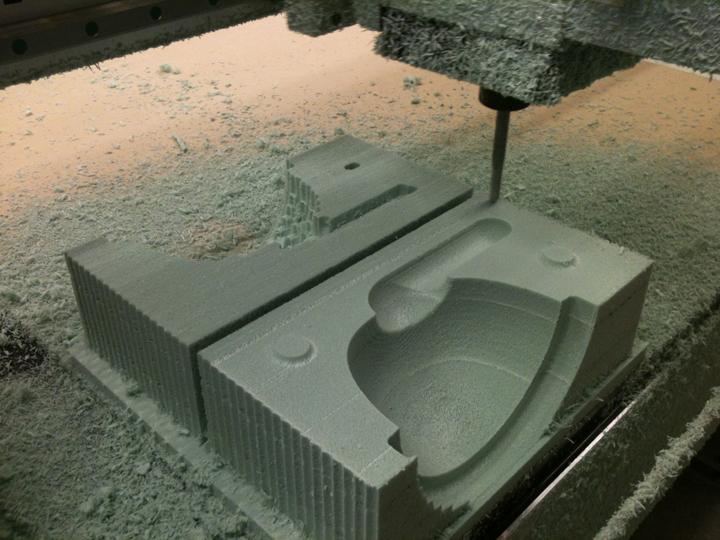

The redesign began in CAD. The enclosure was redesigned with soft edges and a broad, stable base.

CAD

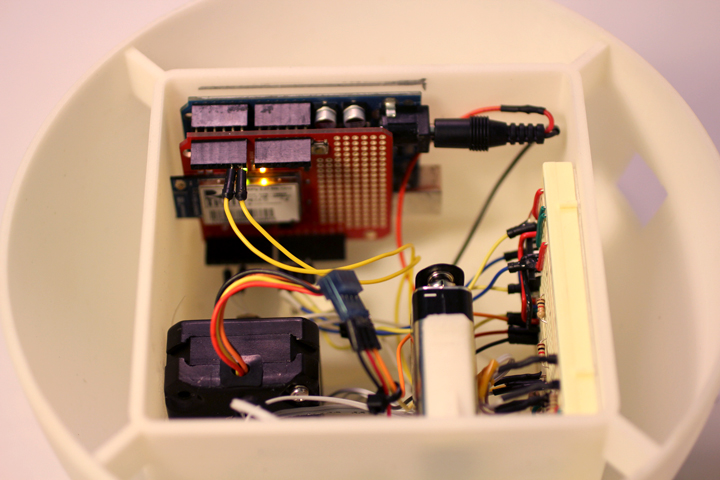

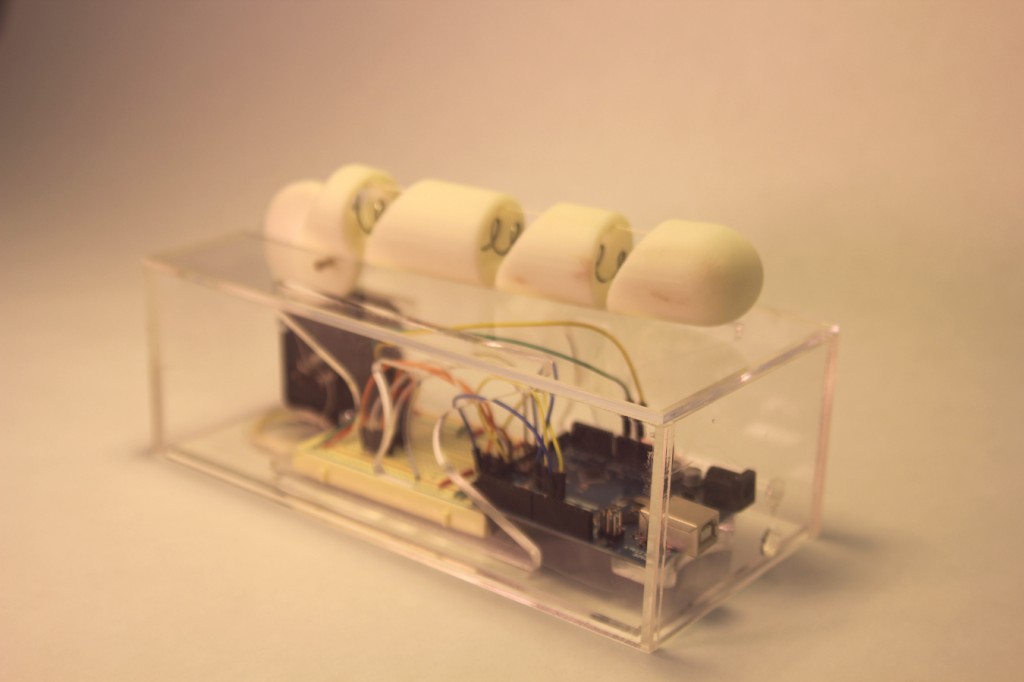

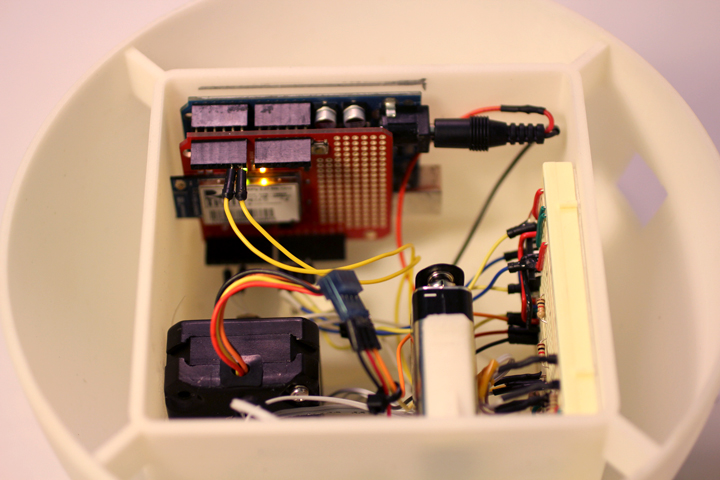

Much of the size and shape was predicated on the known electronic components, including an Arduino with WiFly Shield, a stepper motor, a circuit breadboard, and a 9V battery holder. A window was left open to insert a USB cable for reprogramming the Arduino in place.

Hidden Motivations

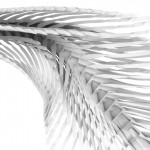

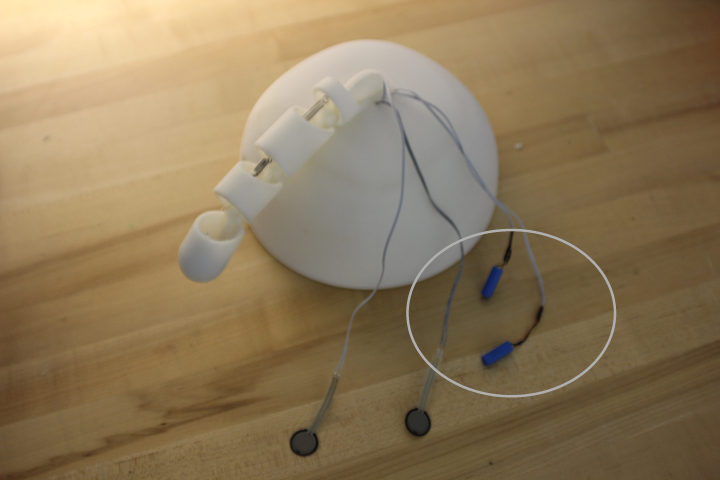

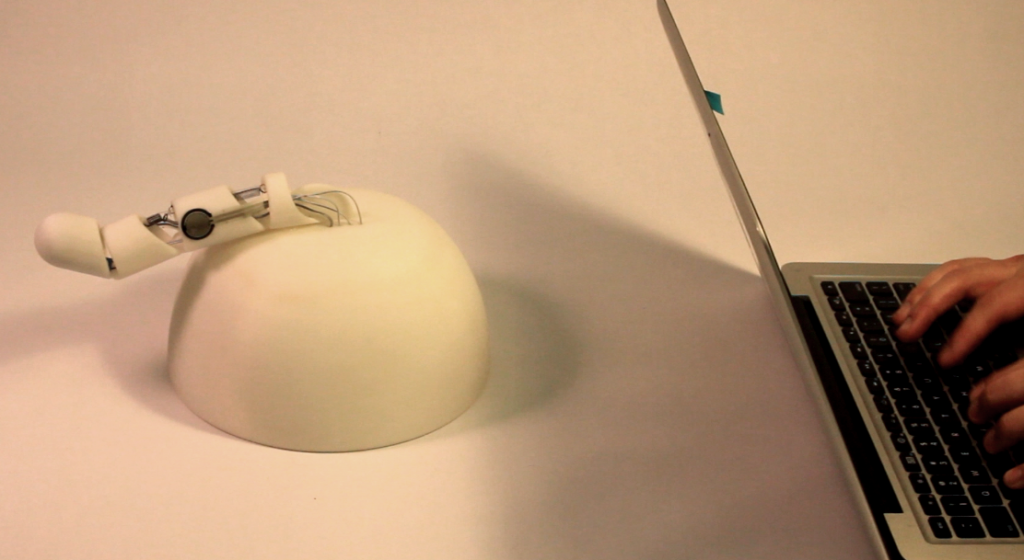

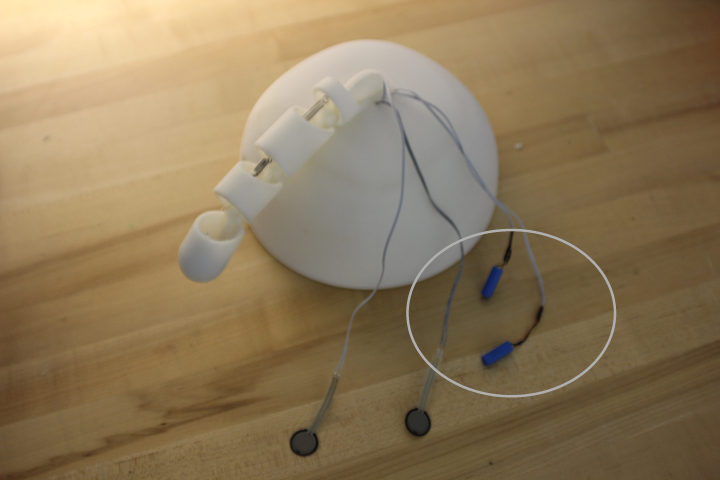

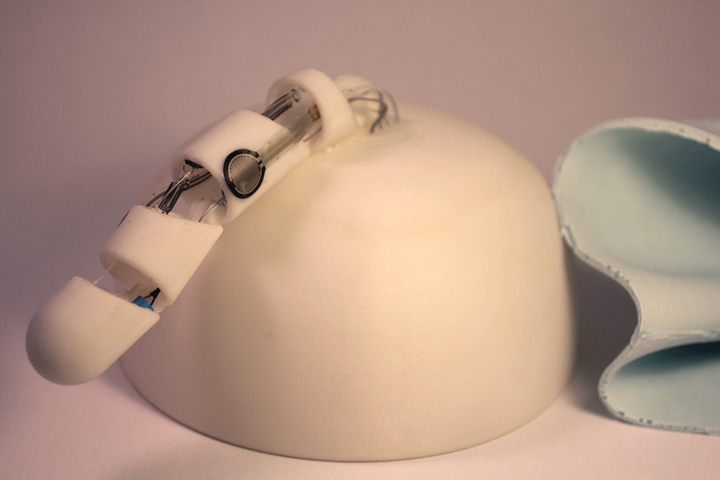

The articulated mechanical phallus operates by winding a monofilament around the shaft of the motor. The wiring for the force sensors and vibrators is run internally from underneath.
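Because the line winds directly onto the motor shaft, the number of steps needed to pull in a given length follows from the shaft circumference. A rough sketch, assuming a 5 mm shaft and a 200-step motor (neither figure comes from the build) and ignoring the extra diameter added as the line stacks up:

```python
import math


def steps_to_wind(length_mm, shaft_diameter_mm=5.0, steps_per_rev=200):
    """Stepper steps needed to wind length_mm of line onto the bare shaft.

    Shaft diameter and steps per revolution are assumed values.
    """
    circumference = math.pi * shaft_diameter_mm  # line taken up per revolution
    return math.ceil(length_mm / circumference * steps_per_rev)
```

In practice the effective diameter grows with each wrap, so the real travel per step increases slightly as the line winds on.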

Robot wiener, Redefined

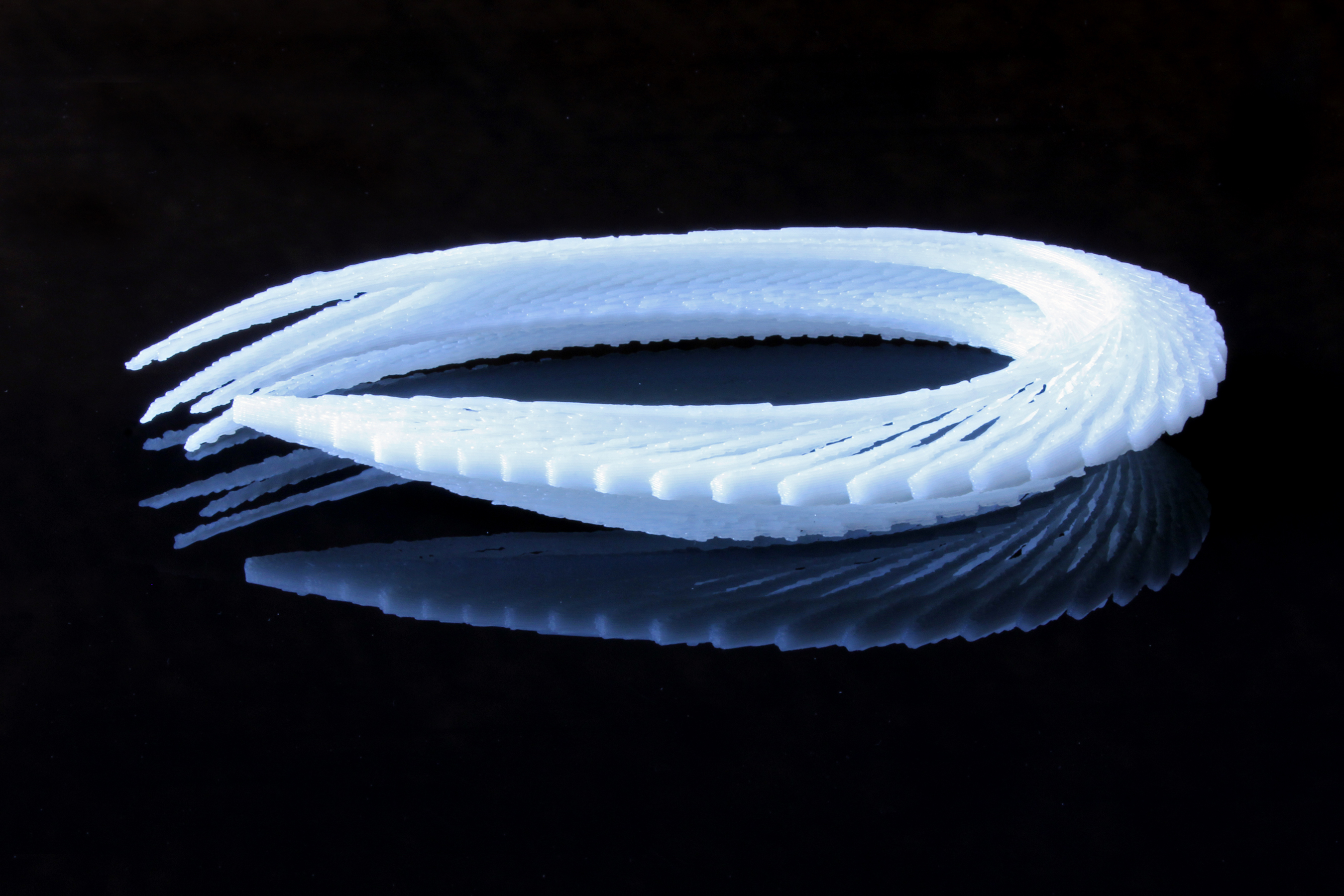

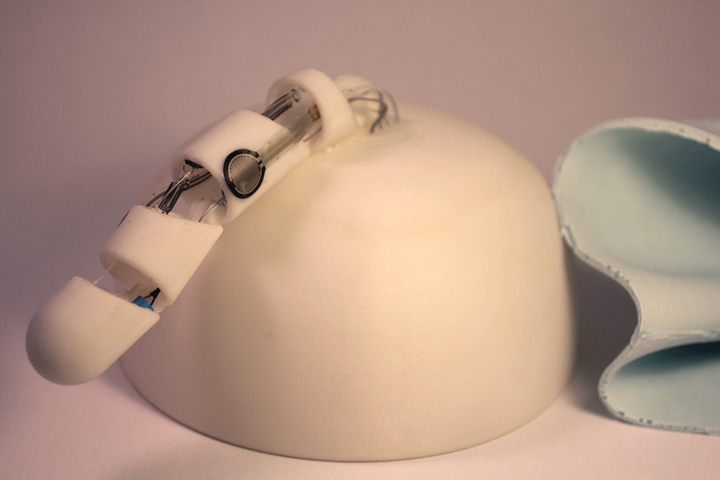

A silicone rubber cover was cast from a three-piece mold.

Careful Cut

This process was not ideal and will ultimately be redone with tighter parting lines and with a vacuum to reduce trapped air bubbles. It is durable and dishwasher-safe.

Put a Hat On It.

While the wireless protocol was a poorly documented pain that works most of the time, it was a necessary learning experience and one I’m glad to have struggled through. It warrants a top-notch “Instructable” to save others from my follies.

Look, No Wires!

Special thanks to:

- My wife

- Golan

- Kyle

- Dan

- Blase

- Mads

- Derren

- Luke