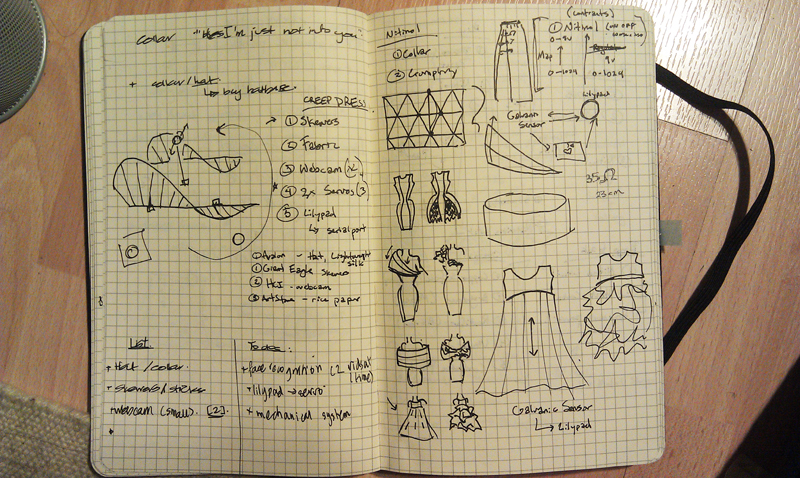

Concept

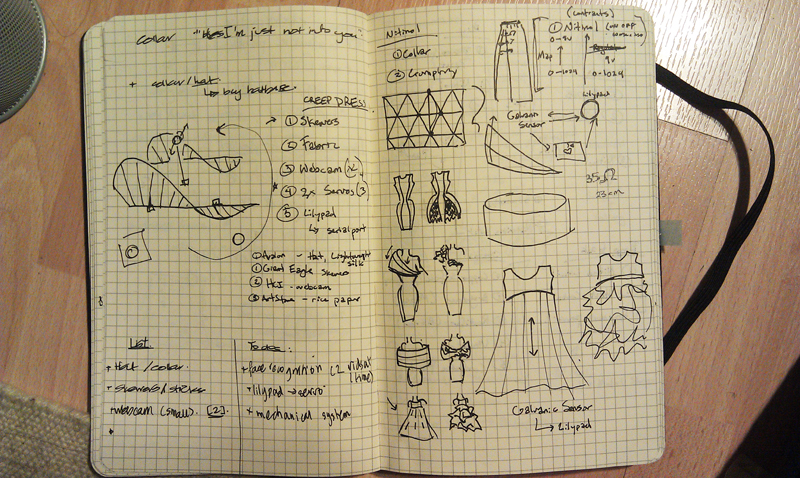

For our final project, Mahvish and I are developing a dress that shields the wearer from unwanted attention. If verbal communication fails to convey your disinterest, it can now have a physical manifestation, saving you from resorting to slightly harsher words, flight, or a long night of painful grimaces. The dress achieves this mainly through a large kinetic collar linked to a webcam, which can be hidden in a simple and ergonomically efficient topknot. A hand placed subtly on the hip tells the camera to take a picture of the perpetrator. Using a face recognition algorithm, the camera, which is mounted on a servo, tracks the newly stored face for as long as it remains in the field of view. The part of the collar that corresponds to the direction the camera is facing is raised to shield the wearer’s face, sparing the wearer both eye contact and yet another incredibly awkward social situation.

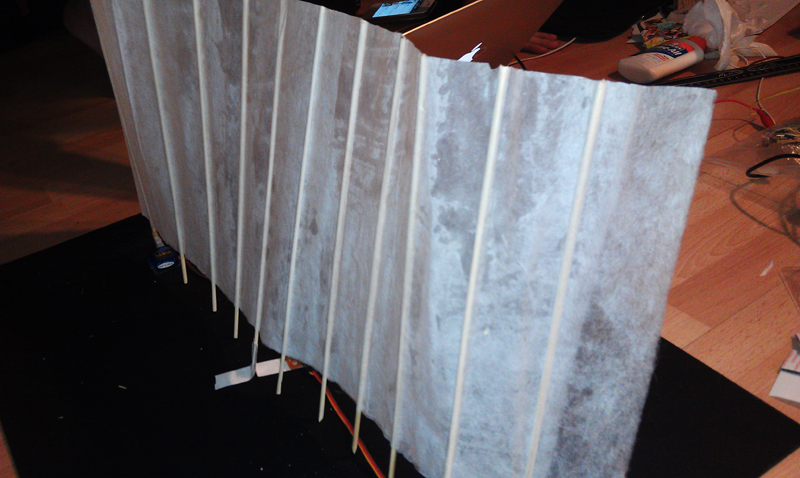

Mechanical/Electronic Systems

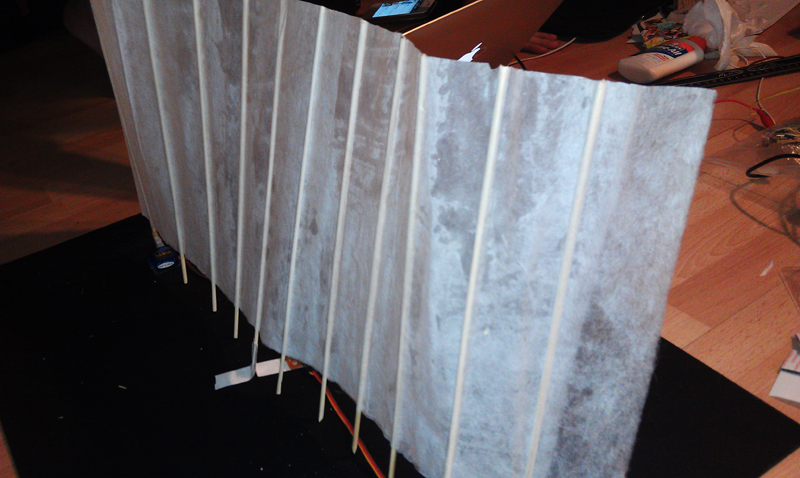

The first thing we attempted was a prototype of the collar design. We were inspired by the wing movement of Theo Jansen’s Strandbeests and wanted to experiment with both materials and the range of motion we could achieve. This initial form is made of bamboo and laminated rice paper; for the final design we want to use a much more delicate spine material.

[youtube=https://www.youtube.com/watch?v=Kaw7lA5TfYM&feature=youtu.be-A]

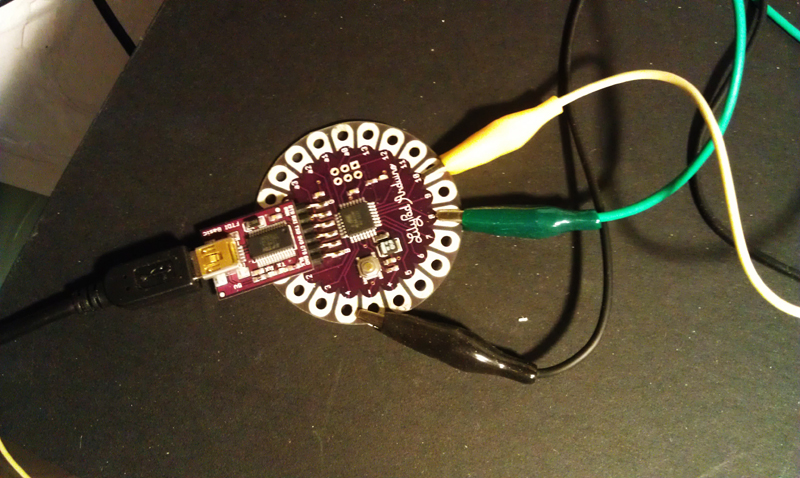

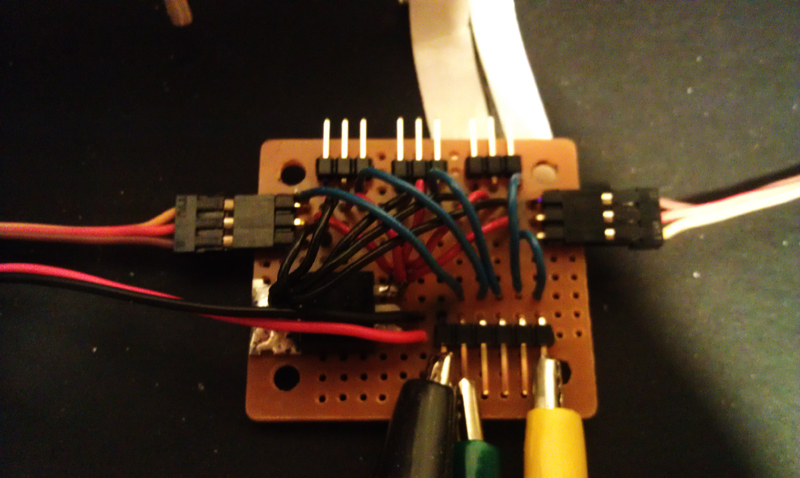

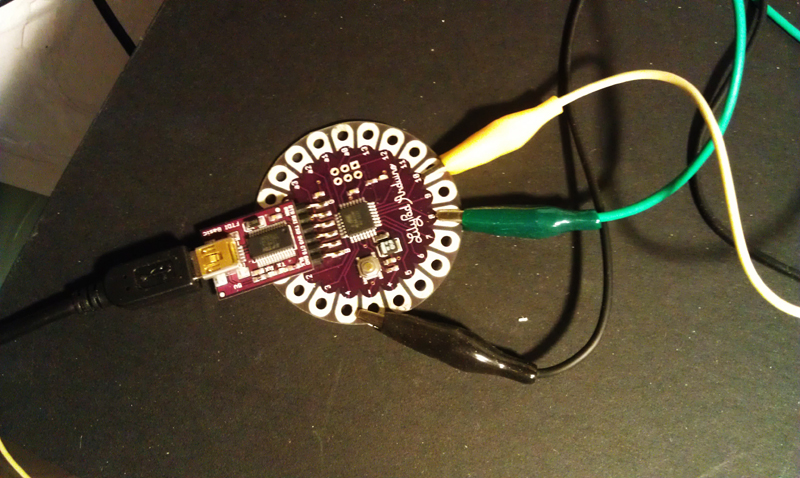

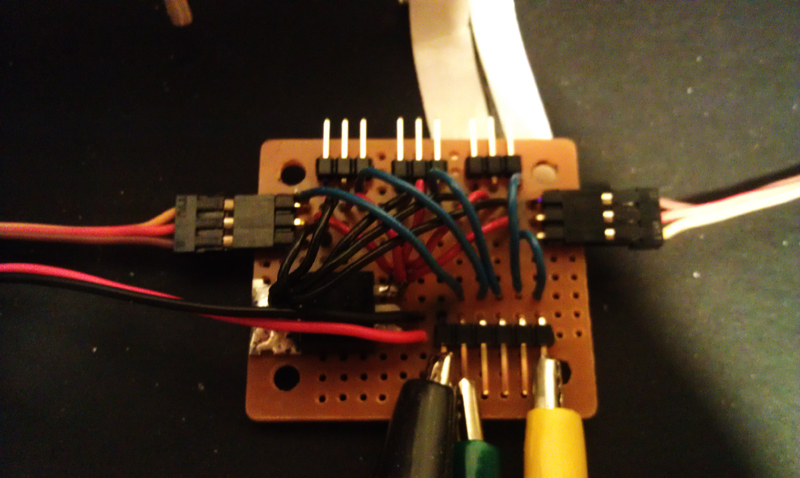

The collar is currently moved by servos that oscillate in different directions. However, powering multiple servos directly from the LilyPad does not work well at all, so we built (with much help from Colin Haas) a controller with an external power source to drive the four or five servos that will manipulate the collar, as well as the one hidden in the model’s hair.
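As a rough sketch of how the camera direction could pick which part of the collar to raise, the snippet below maps a pan angle to one collar segment. Everything in it is an assumption for illustration: the function names, the five segments, the 0 to 180 degree pan range, and the raised/lowered servo angles are hypothetical and not taken from our actual controller.

```python
from typing import List


def segment_for_angle(pan_deg: float, n_segments: int = 5,
                      pan_min: float = 0.0, pan_max: float = 180.0) -> int:
    """Return the index of the collar segment facing the camera's pan angle.

    Assumes the segments split the pan range into equal slices
    (hypothetical layout).
    """
    clamped = max(pan_min, min(pan_max, pan_deg))
    frac = (clamped - pan_min) / (pan_max - pan_min)
    # frac == 1.0 would index one past the end, so cap at the last segment.
    return min(n_segments - 1, int(frac * n_segments))


def collar_targets(pan_deg: float, n_segments: int = 5,
                   raised: int = 90, lowered: int = 0) -> List[int]:
    """Target angle for each collar servo: raise only the facing segment."""
    active = segment_for_angle(pan_deg, n_segments)
    return [raised if i == active else lowered for i in range(n_segments)]
```

With these assumed values, a camera pointing straight ahead (90 degrees) would raise only the middle segment. The resulting list would then be sent to the servo controller, which is not shown here.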

The facial recognition code does require a laptop to run, so rather than trying to hide a large, flat, inflexible object in the dress, we’re going to construct a bag to go with it and run the wire up the shoulder strap. If you are the kind of lady who would wear a dress like this, it is very likely you’d like to have your laptop with you anyway. The rest of the wires will be hidden in piping within the seams, with the LilyPad exposed at the small of the back.

Facial Recognition + Tracking

For the facial recognition portion we’re currently using OpenCV + openFrameworks. When the picture is taken, the centermost face in the frame is chosen as the target, and the dress will do its best to track and avoid it until the soft “shutter” button on the dress is pressed again.
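The target selection and tracking described above can be sketched in a few lines. This is a minimal illustration in Python rather than our openFrameworks code, and it assumes a face detector has already returned bounding boxes as `(x, y, width, height)` tuples. The function names, the proportional `gain`, the 0 to 180 degree servo range, and the sign of the correction (which depends on how the servo is mounted) are all hypothetical.

```python
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def centermost_face(faces: List[Box], frame_w: int, frame_h: int) -> Optional[Box]:
    """Pick the detected face whose center is closest to the frame center."""
    if not faces:
        return None
    cx, cy = frame_w / 2, frame_h / 2

    def dist_sq(box: Box) -> float:
        x, y, w, h = box
        return (x + w / 2 - cx) ** 2 + (y + h / 2 - cy) ** 2

    return min(faces, key=dist_sq)


def pan_step(face: Box, frame_w: int, angle: float, gain: float = 0.05,
             lo: float = 0.0, hi: float = 180.0) -> float:
    """Nudge the camera servo so the tracked face drifts toward frame center.

    Simple proportional correction: the further the face is from the
    center column, the larger the step. The result is clamped to the
    assumed servo range.
    """
    x, _, w, _ = face
    error = (x + w / 2) - frame_w / 2  # horizontal offset in pixels
    return max(lo, min(hi, angle + gain * error))


# Example: three detections in a 640x480 frame; the middle one is the target.
faces = [(0, 0, 50, 50), (300, 220, 40, 40), (600, 400, 30, 30)]
target = centermost_face(faces, 640, 480)
```

Choosing the centermost face is a cheap stand-in for "the person the wearer is looking at" when the shutter is pressed. Following that same face from frame to frame would still need a matching step, which this sketch leaves out.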

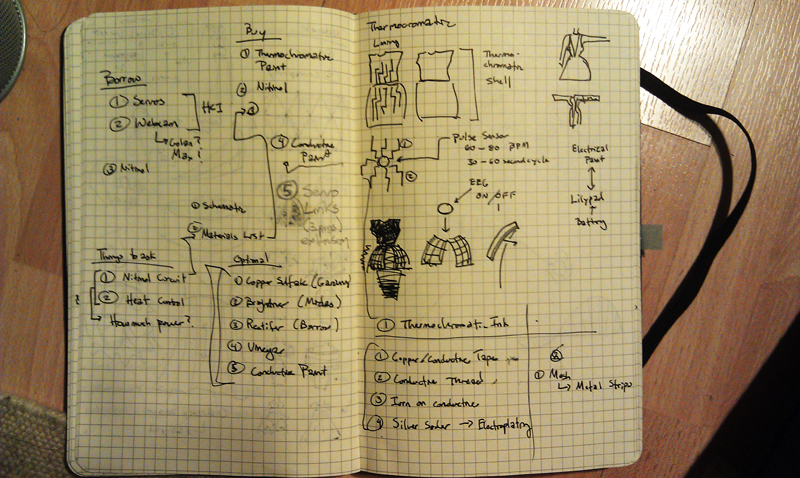

Other Concepts/Ideas

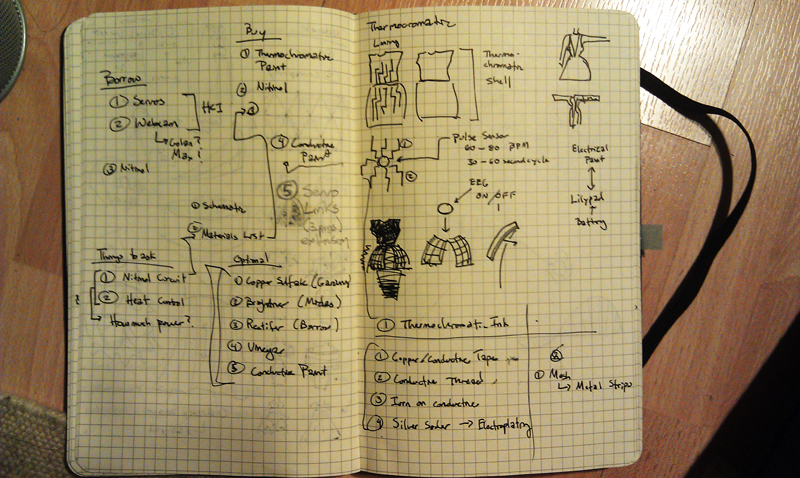

Depending on how quickly we can get this dress off the ground, other designs we’d love to try to pull together include a deforming dress that incorporates origami tessellations and nitinol, and a thermochromic dress with a constantly shifting surface.