Kaushal Agrawal | Final Project | Snap

OVERVIEW

A picture is worth a thousand words. Today, pictures have become a popular means of capturing and sharing memories. With high-end cameras on mobile devices, sharing pictures has become even faster and more convenient. However, clicking a picture still requires pulling a device out of your pocket.

Snap is built on the idea of allowing people to click pictures on the go. It uses a camera mounted on a pair of glasses to click pictures and store them on your hard drive. A person can click a picture simply by making an ‘L’-shaped gesture. This is similar to making a frame with two hands, except that Snap uses one hand and fills in the missing corner of the frame. The project uses skin segmentation to separate the hand from the background and hand gesture recognition to identify the click gesture. Once the gesture is recognized, Snap captures the region of interest contained within the half-drawn frame.

VIDEO

[youtube https://www.youtube.com/watch?v=rJ8hy1Xfs6s&feature=youtu.be; width=600; height=auto]

MOTIVATION

The project idea was inspired by the “Sixth Sense” video. That project uses a camera and projector assembly that hangs around a person's neck and is used to take pictures and project images onto a surface. The person wears colored markers on their fingertips. Whenever the person wants to take a photo, they form a virtual camera frame with their hands, which triggers the camera to capture the scene contained in that frame.

[youtube https://www.youtube.com/watch?v=YrtANPtnhyg; width=600; height=auto]

IMPLEMENTATION

Snap is an openFrameworks project and uses OpenCV for its image processing.

Original Image

The video stream from the camera is polled 5 times per second, and each captured frame serves as the original image. The video resolution is set to 640 × 480 pixels to test and demonstrate the concept.
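The five-polls-per-second rate can be sketched as a small time-based throttle. This is a minimal illustration, not the project's code: `FramePoller` and `shouldProcess` are hypothetical names, and the sketch assumes the caller supplies a millisecond timestamp whenever a new camera frame arrives.

```cpp
#include <cstdint>

// Hypothetical helper (not from the Snap source): decides whether enough time has
// passed since the last processed frame. Five polls per second means one frame
// every 1000 / 5 = 200 ms; frames arriving in between are skipped.
class FramePoller {
public:
    explicit FramePoller(int pollsPerSecond)
        : intervalMs_(static_cast<std::uint64_t>(1000 / pollsPerSecond)) {}

    // Returns true, and remembers nowMs, if the frame arriving at nowMs should be analysed.
    bool shouldProcess(std::uint64_t nowMs) {
        if (hasPolled_ && nowMs - lastMs_ < intervalMs_) {
            return false;  // too soon since the last processed frame
        }
        lastMs_ = nowMs;
        hasPolled_ = true;
        return true;
    }

    std::uint64_t intervalMs() const { return intervalMs_; }

private:
    std::uint64_t intervalMs_;
    std::uint64_t lastMs_ = 0;
    bool hasPolled_ = false;
};
```

In an openFrameworks `update()` loop, the timestamp would typically come from `ofGetElapsedTimeMillis()`, and only frames for which `shouldProcess` returns `true` would be passed on to the segmentation steps.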

The image is then converted from the RGB color space to the YCrCb color space. The Y component describes brightness (luma), while Cr and Cb describe the red and blue color differences rather than a color itself. Unlike RGB, YCrCb separates luma from chroma, so skin color can be thresholded mainly on the chroma channels, which makes the segmentation less sensitive to changes in lighting.
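As a worked example of the conversion, the function below applies the BT.601 formulas that OpenCV documents for its RGB to YCrCb conversion of 8-bit images: Y = 0.299R + 0.587G + 0.114B, Cr = 0.713(R − Y) + 128, Cb = 0.564(B − Y) + 128. It is a per-pixel sketch for illustration only; in the project the conversion would more likely be a single OpenCV call such as `cv::cvtColor` with `cv::COLOR_RGB2YCrCb`. The names `YCrCb`, `toByte` and `rgbToYCrCb` are hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// One pixel in Y, Cr, Cb order (the channel order OpenCV uses for YCrCb).
struct YCrCb {
    std::uint8_t y, cr, cb;
};

// Round and clamp a value into the 0..255 range of an 8-bit channel.
std::uint8_t toByte(double v) {
    return static_cast<std::uint8_t>(std::max(0L, std::min(255L, std::lround(v))));
}

// Convert one 8-bit RGB pixel to YCrCb with BT.601 coefficients
// (delta = 128 centres the two color-difference channels for 8-bit data).
YCrCb rgbToYCrCb(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    const double y = 0.299 * r + 0.587 * g + 0.114 * b;  // luma (brightness)
    const double cr = (r - y) * 0.713 + 128.0;           // red-difference chroma
    const double cb = (b - y) * 0.564 + 128.0;           // blue-difference chroma
    return {toByte(y), toByte(cr), toByte(cb)};
}
```

For example, pure white (255, 255, 255) maps to (Y, Cr, Cb) = (255, 128, 128): both color differences are zero, so all of the information sits in the luma channel.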

The YCrCb image is then thresholded between minimum and maximum values of Y, Cr and Cb to separate skin-colored pixels from the non-skin background.
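A per-pixel version of this threshold is sketched below. The project's actual bounds are not given, so the Cr range of 133 to 173 and Cb range of 77 to 127 in `SkinRange` are assumed values often used as a starting point for skin detection, with the full Y range accepted; real bounds would need tuning for the camera and lighting. `SkinRange` and `skinMask` are hypothetical names; OpenCV's `cv::inRange` performs the same per-channel comparison on a whole image.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed bounds (not the project's values): Y unrestricted, Cr/Cb in a common skin range.
struct SkinRange {
    std::uint8_t yMin = 0, yMax = 255;
    std::uint8_t crMin = 133, crMax = 173;
    std::uint8_t cbMin = 77, cbMax = 127;
};

// Builds a binary mask (255 = skin, 0 = background) from a packed Y,Cr,Cb buffer.
std::vector<std::uint8_t> skinMask(const std::vector<std::uint8_t>& ycrcb,
                                   const SkinRange& r = SkinRange{}) {
    std::vector<std::uint8_t> mask(ycrcb.size() / 3, 0);
    for (std::size_t i = 0; i < mask.size(); ++i) {
        const std::uint8_t y = ycrcb[3 * i];
        const std::uint8_t cr = ycrcb[3 * i + 1];
        const std::uint8_t cb = ycrcb[3 * i + 2];
        // A pixel counts as skin only if every channel lies inside its [min, max] range.
        const bool inside = y >= r.yMin && y <= r.yMax &&
                            cr >= r.crMin && cr <= r.crMax &&
                            cb >= r.cbMin && cb <= r.cbMax;
        mask[i] = inside ? 255 : 0;
    }
    return mask;
}
```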

The resulting skin-segmented image is cleaned further using the morphological operations erosion and dilation. Erosion removes small, isolated specks of noise, and dilation grows the remaining regions back and fills small gaps in the hand region.
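The sketch below shows what erosion and dilation do to a binary skin mask, using a 3×3 square structuring element and applying erosion before dilation (a morphological opening). The kernel size and the order are assumptions, since they are not stated above; `Mask`, `morph3x3` and `open3x3` are hypothetical names, and in OpenCV the same operations are `cv::erode` and `cv::dilate`.

```cpp
#include <cstdint>
#include <vector>

// Binary mask stored row-major; 255 = foreground (skin), 0 = background.
struct Mask {
    int width, height;
    std::vector<std::uint8_t> px;
    std::uint8_t at(int x, int y) const { return px[y * width + x]; }
};

// Apply a 3x3 square structuring element. Erosion keeps a pixel only if every
// neighbour is foreground; dilation sets it if any neighbour is foreground.
// Neighbours outside the image are ignored.
Mask morph3x3(const Mask& in, bool erode) {
    Mask out{in.width, in.height, std::vector<std::uint8_t>(in.px.size(), 0)};
    for (int y = 0; y < in.height; ++y) {
        for (int x = 0; x < in.width; ++x) {
            bool all = true, any = false;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int nx = x + dx, ny = y + dy;
                    if (nx < 0 || ny < 0 || nx >= in.width || ny >= in.height) continue;
                    const bool on = in.at(nx, ny) != 0;
                    all = all && on;
                    any = any || on;
                }
            }
            out.px[y * in.width + x] = (erode ? all : any) ? 255 : 0;
        }
    }
    return out;
}

Mask erode(const Mask& m) { return morph3x3(m, true); }
Mask dilate(const Mask& m) { return morph3x3(m, false); }

// Opening: erosion removes isolated specks, dilation regrows the surviving blobs.
Mask open3x3(const Mask& m) { return dilate(erode(m)); }
```

On a mask containing a 3×3 block and a single stray pixel, the opening removes the stray pixel while the block comes back at its original size.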

OpenCV is then used to find the contours in the cleaned image.
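OpenCV's `cv::findContours` traces the borders of connected foreground regions. As a simpler stand-in, the sketch below labels 8-connected regions with a breadth-first flood fill and keeps the largest one, which corresponds to picking the largest skin region before its outline is analysed. This is a swapped-in technique for illustration, not the border-following algorithm OpenCV uses, and `largestBlob` is a hypothetical name.

```cpp
#include <cstdint>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical stand-in for contour extraction: returns the (x, y) pixels of the
// largest 8-connected foreground region in a row-major binary mask
// (non-zero = skin). Returns an empty vector if the mask has no foreground.
std::vector<std::pair<int, int>> largestBlob(const std::vector<std::uint8_t>& mask,
                                             int width, int height) {
    std::vector<bool> seen(mask.size(), false);
    std::vector<std::pair<int, int>> best;
    for (int sy = 0; sy < height; ++sy) {
        for (int sx = 0; sx < width; ++sx) {
            const int start = sy * width + sx;
            if (mask[start] == 0 || seen[start]) continue;

            // Breadth-first flood fill collects every pixel connected to (sx, sy).
            std::vector<std::pair<int, int>> blob;
            std::queue<std::pair<int, int>> frontier;
            frontier.push({sx, sy});
            seen[start] = true;
            while (!frontier.empty()) {
                const std::pair<int, int> p = frontier.front();
                frontier.pop();
                blob.push_back(p);
                for (int dy = -1; dy <= 1; ++dy) {
                    for (int dx = -1; dx <= 1; ++dx) {
                        const int nx = p.first + dx, ny = p.second + dy;
                        if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                        const int idx = ny * width + nx;
                        if (mask[idx] != 0 && !seen[idx]) {
                            seen[idx] = true;
                            frontier.push({nx, ny});
                        }
                    }
                }
            }
            // Keep the region with the most pixels found so far.
            if (blob.size() > best.size()) best = std::move(blob);
        }
    }
    return best;
}
```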

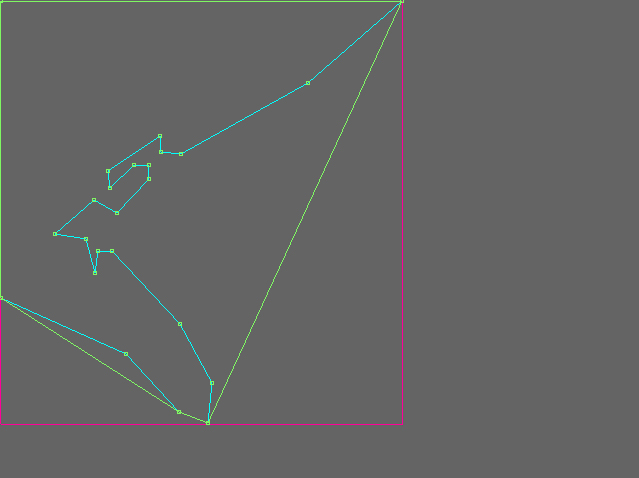

The largest contour is selected and its convex hull is computed. The contour and convex hull are then used to find the convexity defects, which are the local minima along the hand's outline, such as the valley between the thumb and the index finger. The defects are searched for the one with the largest perimeter between its start and end points, and the angle formed at that defect must lie between 80 and 110 degrees.
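The defect filter can be sketched once the defects are available. OpenCV's `cv::convexityDefects` reports each defect as indices of a start point, an end point and the point farthest from the hull (plus a depth); the hypothetical `Defect` struct below stores those three points directly. The sketch measures the angle at the farthest point between the rays to the start and end points, keeps defects between 80 and 110 degrees, and interprets the "perimeter" as the sum of the two sides of the L, |start − farthest| + |farthest − end|. That interpretation and the names `defectAngleDeg` and `findClickDefect` are assumptions.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };

// One convexity defect: two hull points and the contour point farthest from the hull between them.
struct Defect { Pt start, end, farthest; };

double dist(Pt a, Pt b) { return std::hypot(a.x - b.x, a.y - b.y); }

// Angle in degrees at `farthest` between the rays farthest->start and farthest->end.
double defectAngleDeg(const Defect& d) {
    const double ax = d.start.x - d.farthest.x, ay = d.start.y - d.farthest.y;
    const double bx = d.end.x - d.farthest.x, by = d.end.y - d.farthest.y;
    const double la = std::hypot(ax, ay), lb = std::hypot(bx, by);
    if (la == 0.0 || lb == 0.0) return 0.0;  // degenerate defect: no meaningful angle
    const double c = (ax * bx + ay * by) / (la * lb);
    return std::acos(std::max(-1.0, std::min(1.0, c))) * 180.0 / 3.14159265358979323846;
}

// Returns the index of the defect with the largest perimeter whose angle lies in
// [minDeg, maxDeg], or -1 if no defect qualifies as the 'L' gesture.
int findClickDefect(const std::vector<Defect>& defects,
                    double minDeg = 80.0, double maxDeg = 110.0) {
    int best = -1;
    double bestPerimeter = 0.0;
    for (std::size_t i = 0; i < defects.size(); ++i) {
        const Defect& d = defects[i];
        const double angle = defectAngleDeg(d);
        if (angle < minDeg || angle > maxDeg) continue;  // not an L-shaped corner
        const double perimeter = dist(d.start, d.farthest) + dist(d.farthest, d.end);
        if (best < 0 || perimeter > bestPerimeter) {
            best = static_cast<int>(i);
            bestPerimeter = perimeter;
        }
    }
    return best;
}
```

A wide 45-degree defect is rejected even if its sides are long, so a partially open hand does not trigger a capture.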

Once a defect meets these criteria, a frame is constructed from it. The image is then rotated so that the frame can be cropped easily. The three yellow circles mark the vertices of the resulting image.
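The frame construction can be illustrated with a little vector arithmetic. Assuming the three vertices are the two fingertip points at the ends of the L and the defect's farthest point at its corner, the missing fourth corner of the parallelogram is a + b − corner, and rotating by the negative of one edge's angle makes that edge horizontal. The helper names are hypothetical and the sketch uses a standard x/y frame; with OpenCV, the image itself would be rotated with `cv::getRotationMatrix2D` and `cv::warpAffine`, with the sign adjusted for the image's y-down axis.

```cpp
#include <cmath>

struct Pt { double x, y; };

constexpr double kPi = 3.14159265358979323846;

// Fourth vertex of the parallelogram spanned by corner->a and corner->b,
// i.e. the point the one-handed gesture leaves out.
Pt missingCorner(Pt a, Pt corner, Pt b) {
    return {a.x + b.x - corner.x, a.y + b.y - corner.y};
}

// Angle in degrees of the edge corner->b relative to the x-axis.
double frameRotationDeg(Pt corner, Pt b) {
    return std::atan2(b.y - corner.y, b.x - corner.x) * 180.0 / kPi;
}

// Rotates p about `center` by `deg` degrees using the standard rotation formula.
Pt rotateAbout(Pt p, Pt center, double deg) {
    const double r = deg * kPi / 180.0;
    const double dx = p.x - center.x, dy = p.y - center.y;
    return {center.x + dx * std::cos(r) - dy * std::sin(r),
            center.y + dx * std::sin(r) + dy * std::cos(r)};
}
```

For instance, an edge from (0, 0) to (30, 30) has an angle of 45 degrees; rotating its end point by −45 degrees about the corner puts it on the x-axis at (30√2, 0).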

The original image is then cropped to produce the final image that is stored on the computer.
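After rotation, the crop reduces to an axis-aligned rectangle. The sketch below computes the rectangle enclosing the four frame corners and clamps it to the 640 × 480 frame so the crop never reads outside the image. `cropRect` and `Rect` are hypothetical names; in OpenCV the result would correspond to a `cv::Rect`, and `image(roi).clone()` would copy the region before it is saved, for example with `cv::imwrite`.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

struct Pt { double x, y; };
struct Rect { int x, y, width, height; };

// Axis-aligned rectangle enclosing the four frame corners, clamped to an
// imgW x imgH image. Width or height is 0 if the frame lies entirely outside.
Rect cropRect(const std::array<Pt, 4>& corners, int imgW, int imgH) {
    double minX = corners[0].x, maxX = corners[0].x;
    double minY = corners[0].y, maxY = corners[0].y;
    for (const Pt& p : corners) {
        minX = std::min(minX, p.x);
        maxX = std::max(maxX, p.x);
        minY = std::min(minY, p.y);
        maxY = std::max(maxY, p.y);
    }
    // Round outwards to whole pixels, then clamp to the image bounds.
    const int x0 = std::max(0, static_cast<int>(std::floor(minX)));
    const int y0 = std::max(0, static_cast<int>(std::floor(minY)));
    const int x1 = std::min(imgW, static_cast<int>(std::ceil(maxX)));
    const int y1 = std::min(imgH, static_cast<int>(std::ceil(maxY)));
    return {x0, y0, std::max(0, x1 - x0), std::max(0, y1 - y0)};
}
```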