The objective of this project is to generate form through a simulation of Braitenberg vehicles.

A Braitenberg vehicle is a concept developed by the neuroanatomist Valentino Braitenberg in his book “Vehicles: Experiments in Synthetic Psychology” (full reference: Braitenberg, Valentino. Vehicles: Experiments in Synthetic Psychology. MIT Press, Cambridge, MA. 1984). Braitenberg’s objective was to illustrate that intelligent behavior can emerge from simple sensorimotor interaction between an agent and its environment, without any representation of the environment or any kind of inference.

What excites me about this concept is how simple behaviors on the micro-level can result in the emergence of more complex behaviors on the macro-level.

—————————————————————————————————————————————

>> inspiration and precedent work

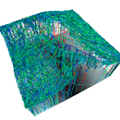

Below are some precedent generative artworks that use the concept of Braitenberg vehicles:

Reas, Tissue Software, 2002

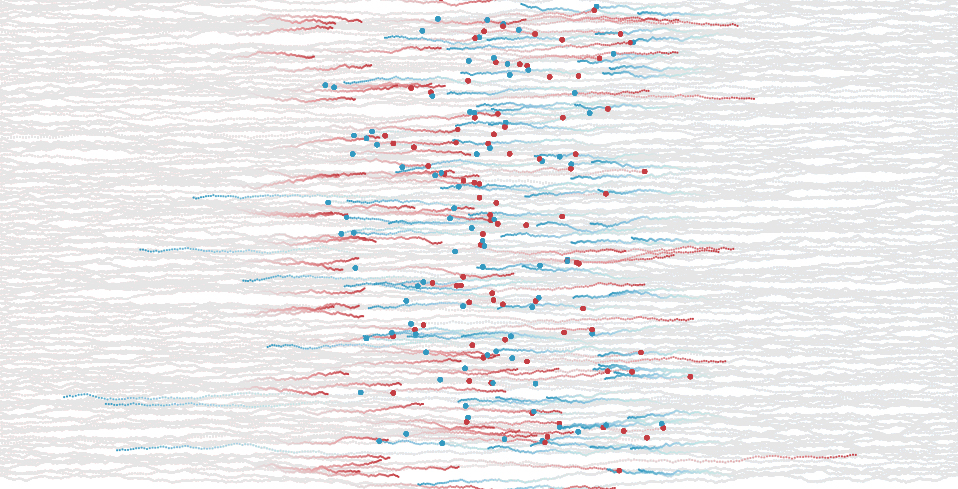

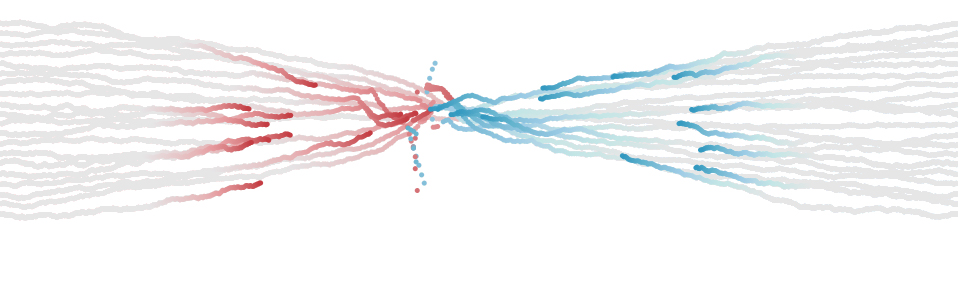

In Vehicles, Braitenberg defines a series of 13 conceptual constructions by gradually building more complex behavior with the addition of more machinery. In the Tissue software, Reas uses machines analogous to Braitenberg’s Vehicle 4. Each machine has two software sensors to detect stimuli in the environment and two software actuators to move; the relationships between the sensors and actuators determine the specific behavior for each machine.

Each line represents the path of each machine’s movement as it responds to stimuli in its environment. People interact with the software by positioning the stimuli on the screen. Through exploring different positions of the stimuli, an understanding of the total system emerges from the subtle relations between the simple input and the resulting fluid visual output.

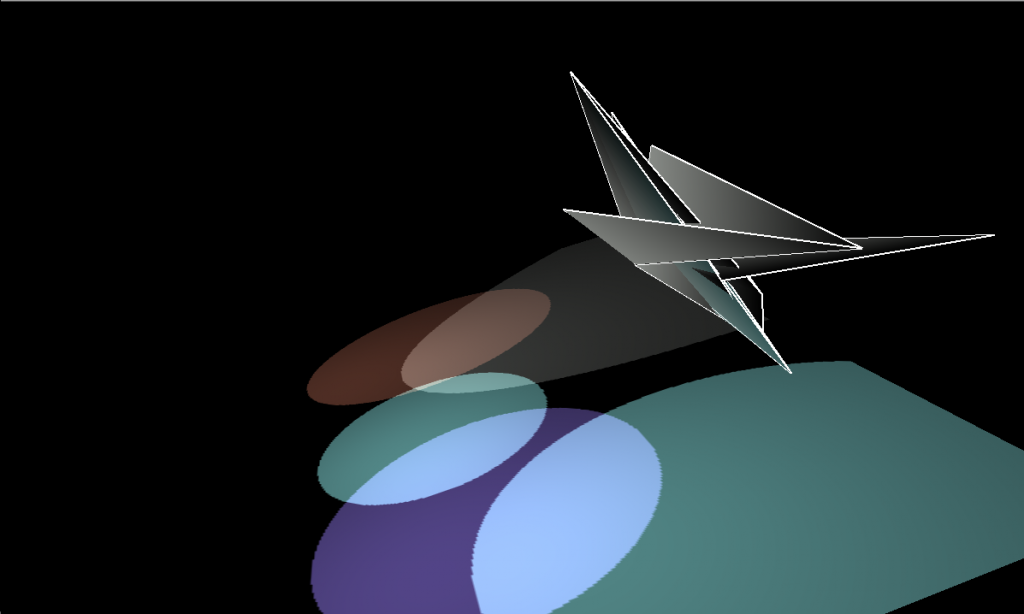

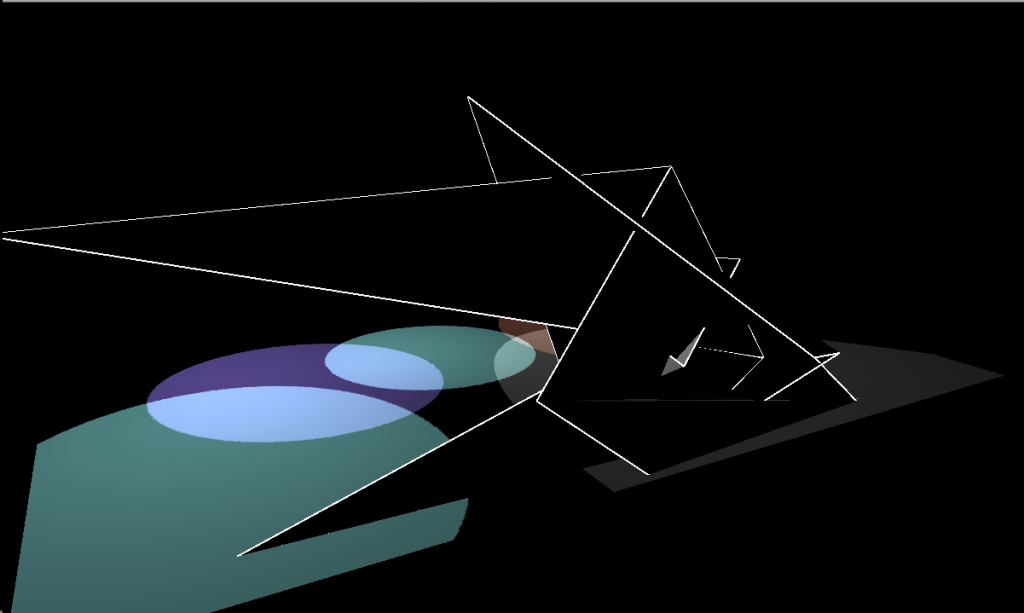

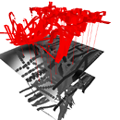

Yanni Loukissas, Shadow constructors, 2004

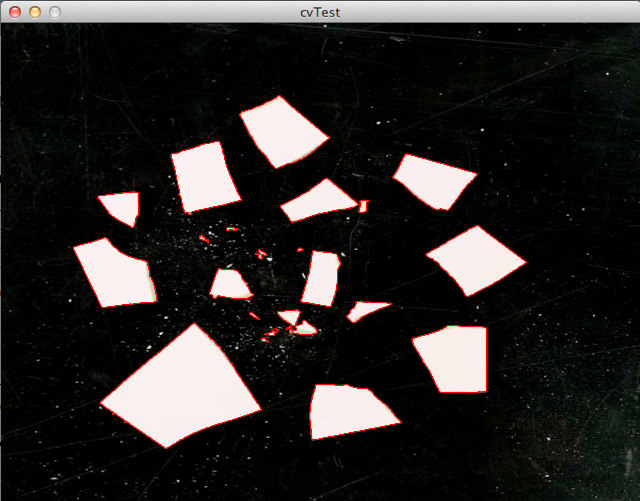

In this project, Braitenberg vehicles move over a 2d imagemap collecting information about light and dark spots (brightness levels). This information is used to construct forms in 3d, either trails or surfaces.

What I find interesting about this project is that information from the 3d form is projected back onto the source imagemap. For example, the constructed surfaces cast shadows on the imagemap. This results in a feedback loop which alters the behavior of the vehicles.

—————————————————————————————————————————————

>> the background story

In the field of dance performance, there have been attempts to explicitly bring movement together with geometry. Two examples are described below:

“Might not the dancers be real puppets, moved by strings, or better still, self-propelled by means of a precise mechanism, almost free of human intervention, at most directed by remote control?”

Oskar Schlemmer

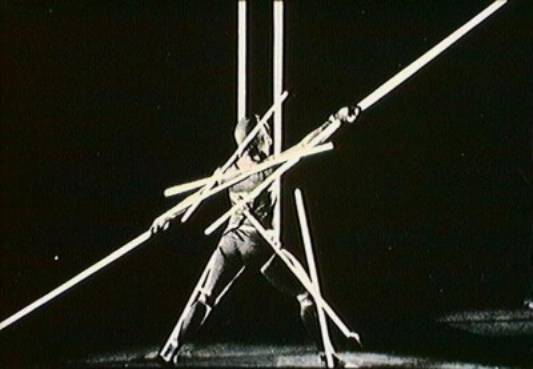

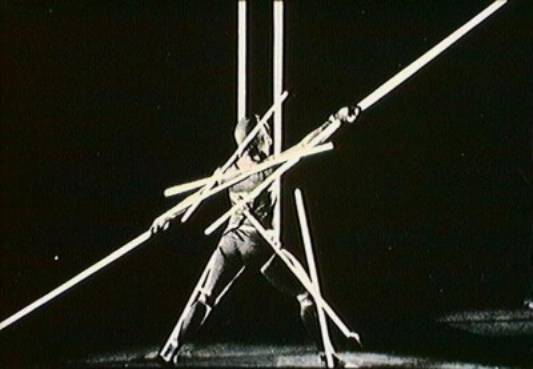

At the Bauhaus, Schlemmer organized dance performances in which the dancer was regarded as an agent in a spatial configuration; the interaction of the dancer with the spatial container triggered the performance, which then proceeded in an evolutionary mode. “Dance in space” and “Figure in space with Plane Geometry and Spatial Delineations” were performances intended to transform the body into a “mechanised object” operating within a geometrically divided space that pre-existed the performance. Hence, movement is precisely determined by the information coming from the environment.

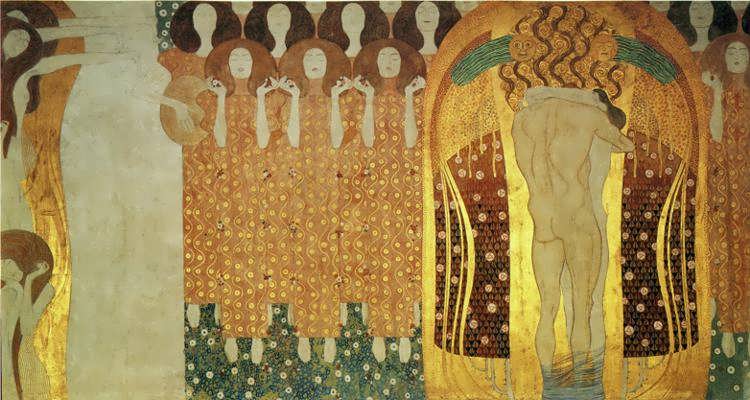

Slat Dance, Oskar Schlemmer, Bauhaus, 1926

William Forsythe imagines virtual lines and shapes in space that can be bent, tossed, or distorted. By moving from a point to a line to a plane to a volume, geometric space can be visualized as composed of points that are vastly interconnected. These points are all contained within the dancer’s body; an infinite number of movements and positions are produced by a series of “foldings” and “unfoldings”. Dancers can perceive relationships between any of the points on the curves and any other parts of their bodies. What turns this into a performance is the dancer making these imagined relationships visible through movement.

Improvisation Technologies – Dance Geometry, OpenEnded Group, 1999

>> the computational tool

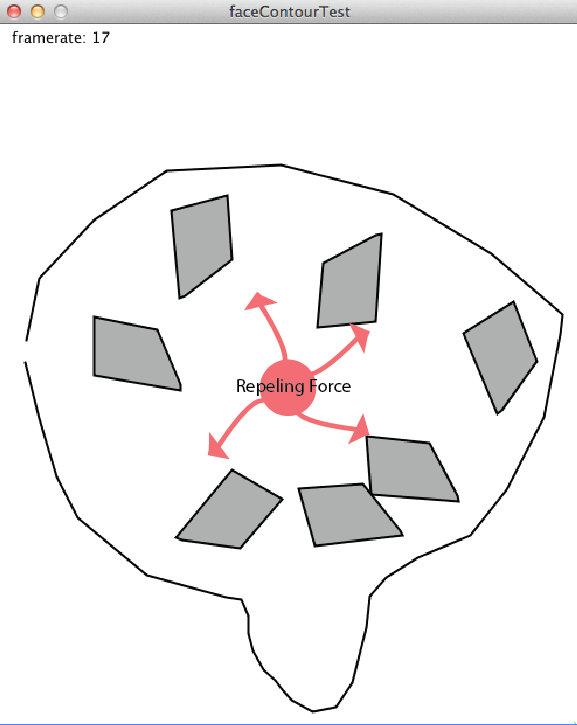

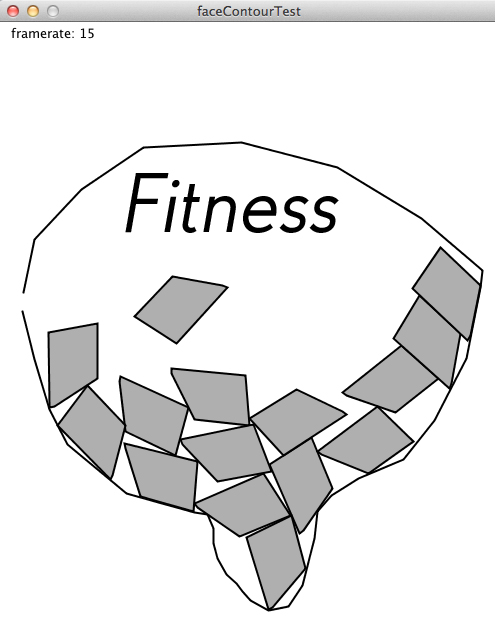

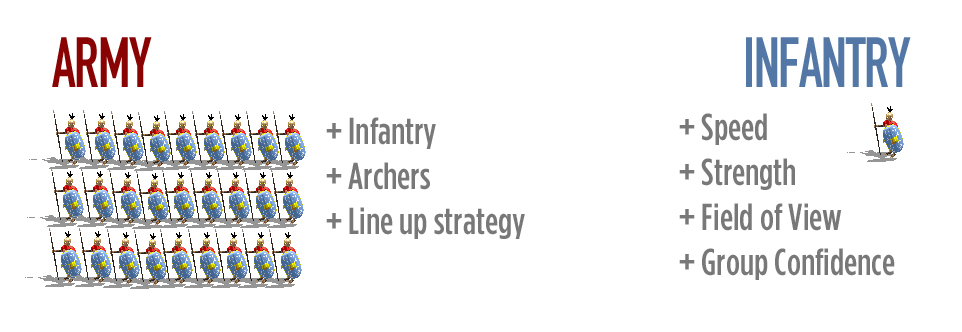

A Braitenberg vehicle is an agent that can autonomously move around. It has primitive sensors reacting to a predefined stimulus and wheels (each driven by its own motor) that function as actuators. In its simplest form, the sensor is directly connected to an effector, so that a sensed signal immediately produces a movement of the wheel. Depending on how sensors and wheels are connected, the vehicle exhibits different behaviors.
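To make the wiring idea concrete, here is a minimal 2d sketch in Python (not the project's code). It assumes a single point light whose brightness falls off with squared distance, two forward-facing sensors, and a differential drive with two wheels; all names and parameter values (`light_intensity`, `step`, `simulate`, `base`, `gain`, `axle`, `reach`, `spread`) are hypothetical and chosen only for illustration.

```python
import math

def light_intensity(px, py, source):
    """Brightness sensed at (px, py); assumed to fall off with squared distance."""
    sx, sy = source
    return 1.0 / (1.0 + (px - sx) ** 2 + (py - sy) ** 2)

def step(state, source, crossed, dt=0.1, base=0.2, gain=5.0,
         axle=0.5, reach=0.5, spread=0.5):
    """Advance one vehicle by one time step; returns the new (x, y, heading)."""
    x, y, heading = state
    # Two sensors sit ahead of the vehicle, angled left (+spread) and right (-spread).
    left = light_intensity(x + reach * math.cos(heading + spread),
                           y + reach * math.sin(heading + spread), source)
    right = light_intensity(x + reach * math.cos(heading - spread),
                            y + reach * math.sin(heading - spread), source)
    # Wiring: each sensor excites one wheel motor, on the same or the opposite side.
    if crossed:
        left_wheel, right_wheel = base + gain * right, base + gain * left
    else:
        left_wheel, right_wheel = base + gain * left, base + gain * right
    # Differential drive: the speed difference between the wheels turns the vehicle.
    speed = 0.5 * (left_wheel + right_wheel)
    turn = (right_wheel - left_wheel) / axle
    heading += turn * dt
    return (x + speed * math.cos(heading) * dt,
            y + speed * math.sin(heading) * dt,
            heading)

def simulate(source, crossed, steps=50):
    """Run one vehicle from the origin and return its trail of states."""
    state = (0.0, 0.0, 0.0)
    trail = [state]
    for _ in range(steps):
        state = step(state, source, crossed)
        trail.append(state)
    return trail
```

With the light ahead and to the left of the vehicle, the crossed wiring turns it toward the light and the uncrossed wiring turns it away. The list returned by `simulate` is the kind of trail that could be drawn as a line.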

The diagram below shows some of the behaviors that result from different connections between sensors and actuators.

source: http://www.it.bton.ac.uk/Research/CIG/Believable%20Agents/

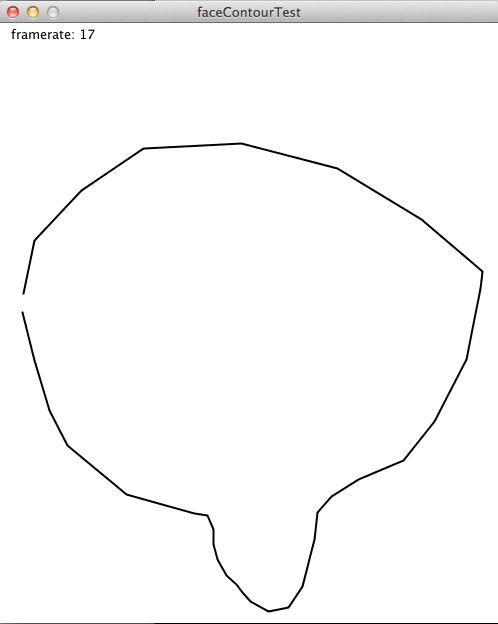

>> the environment

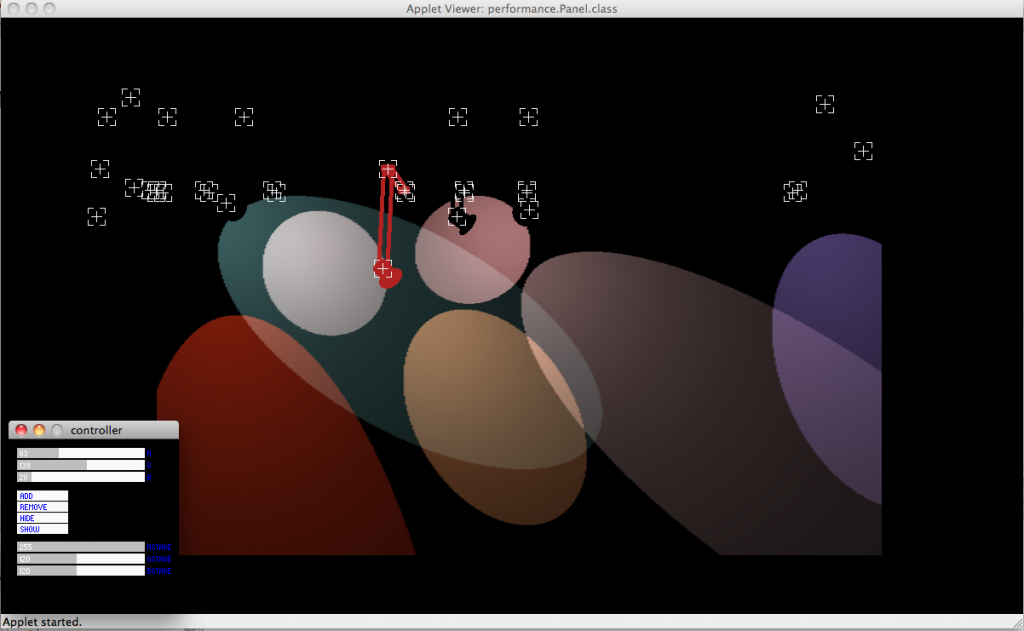

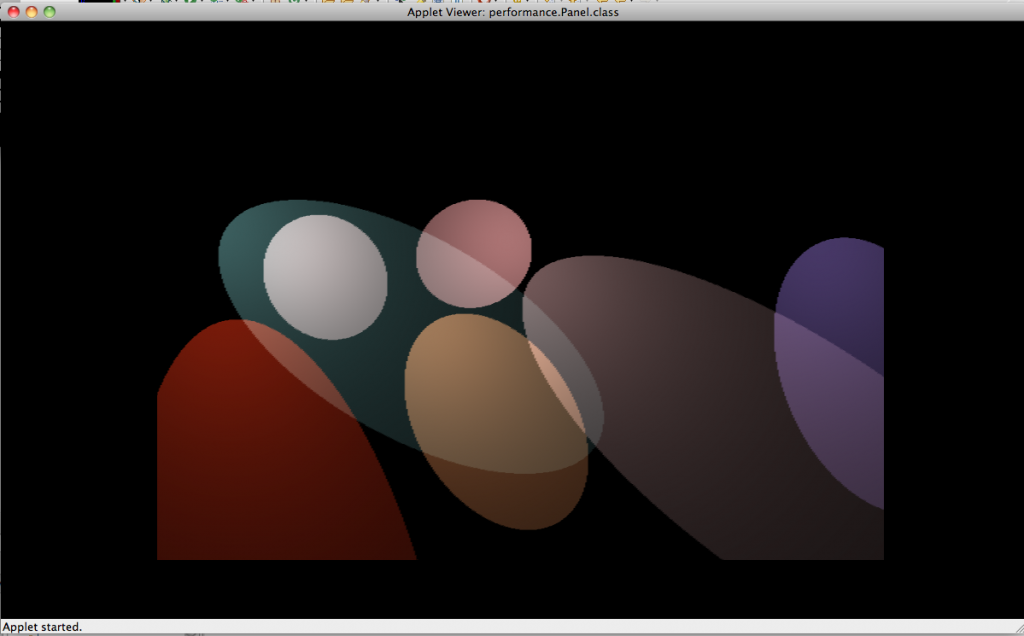

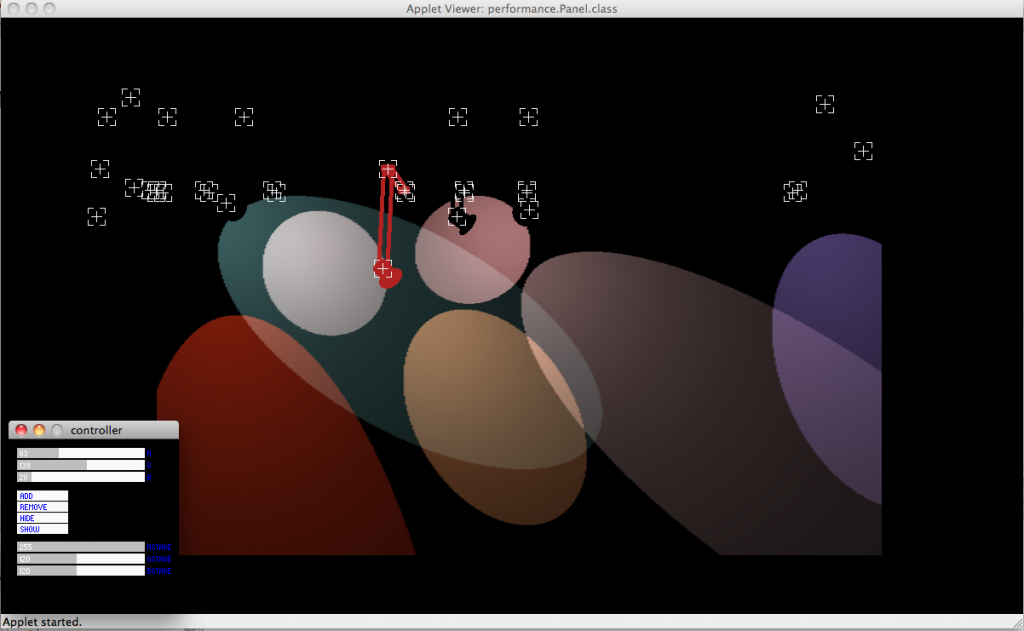

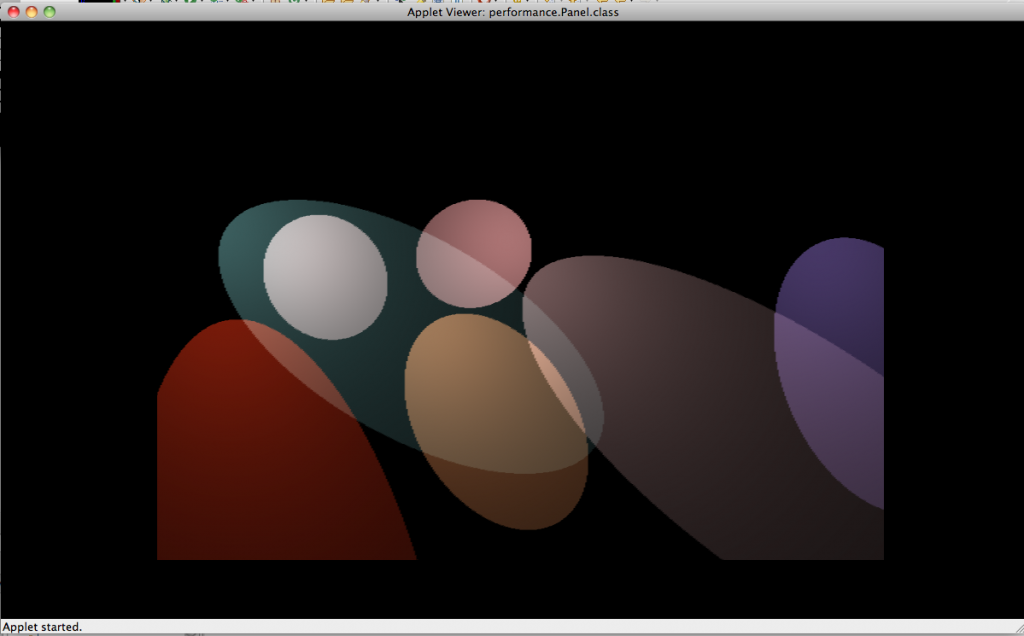

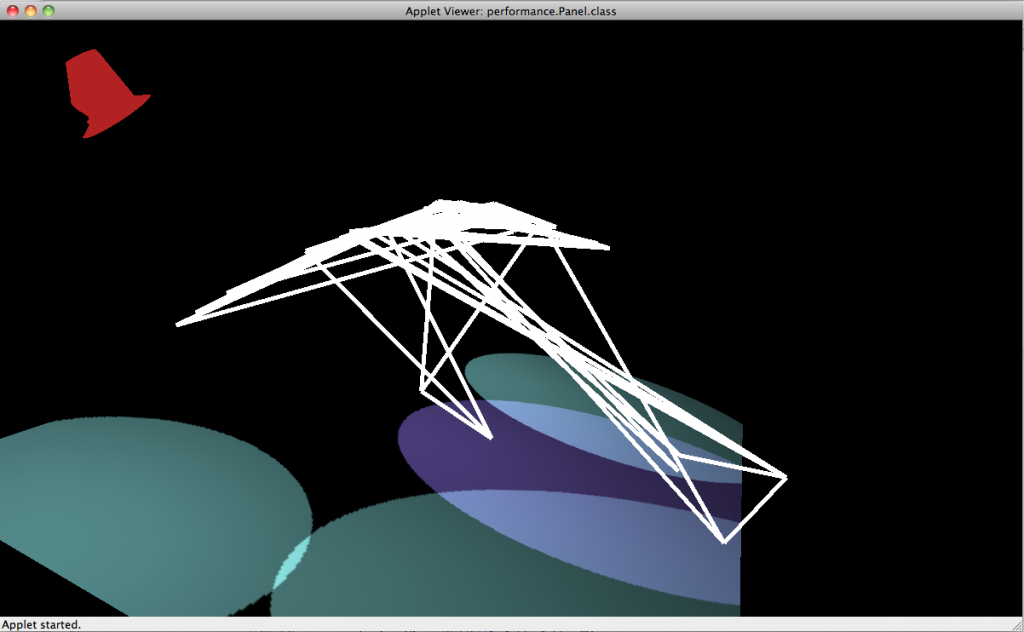

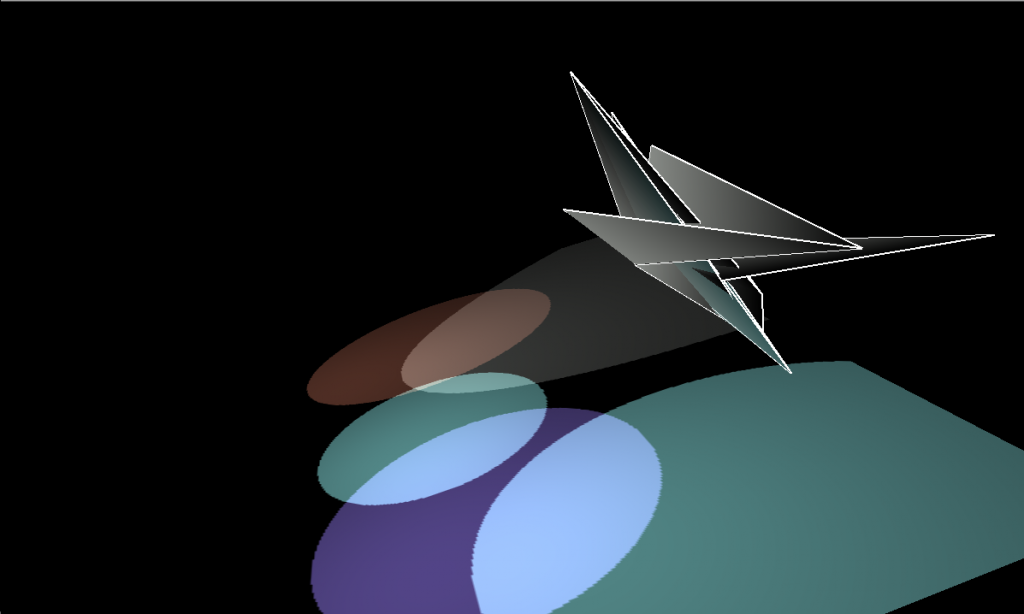

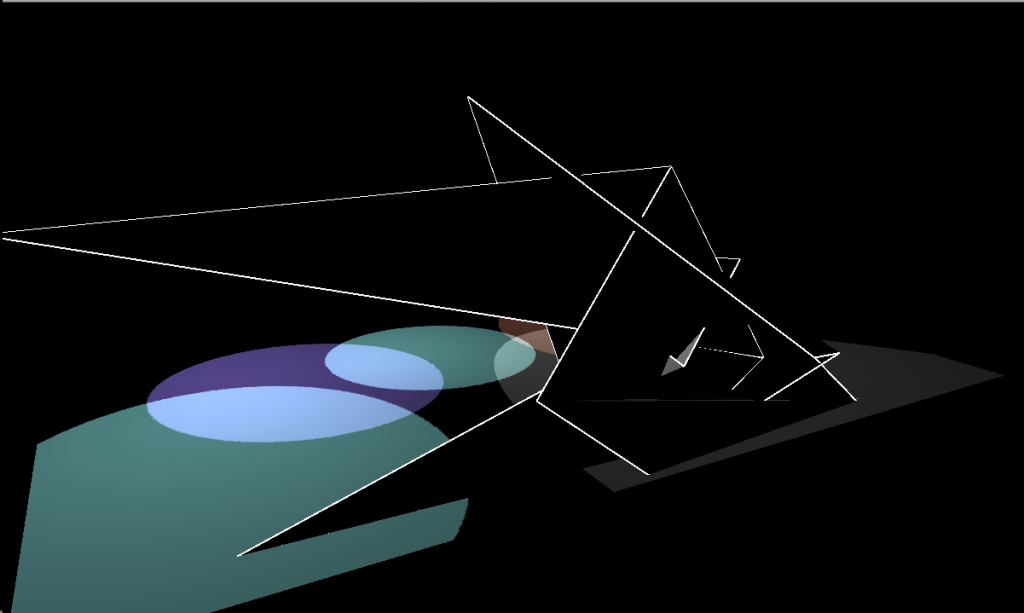

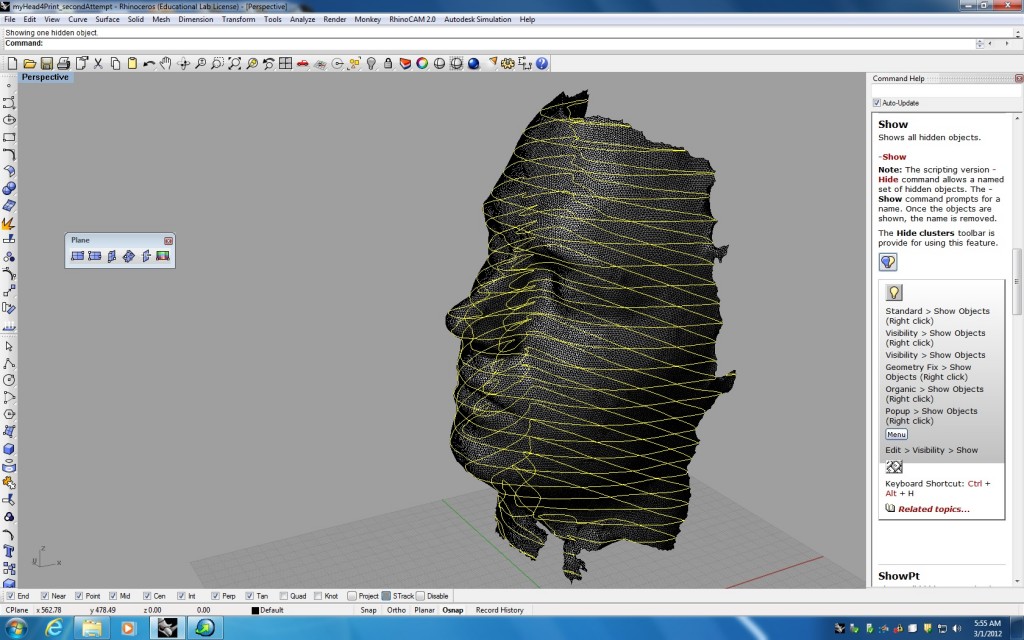

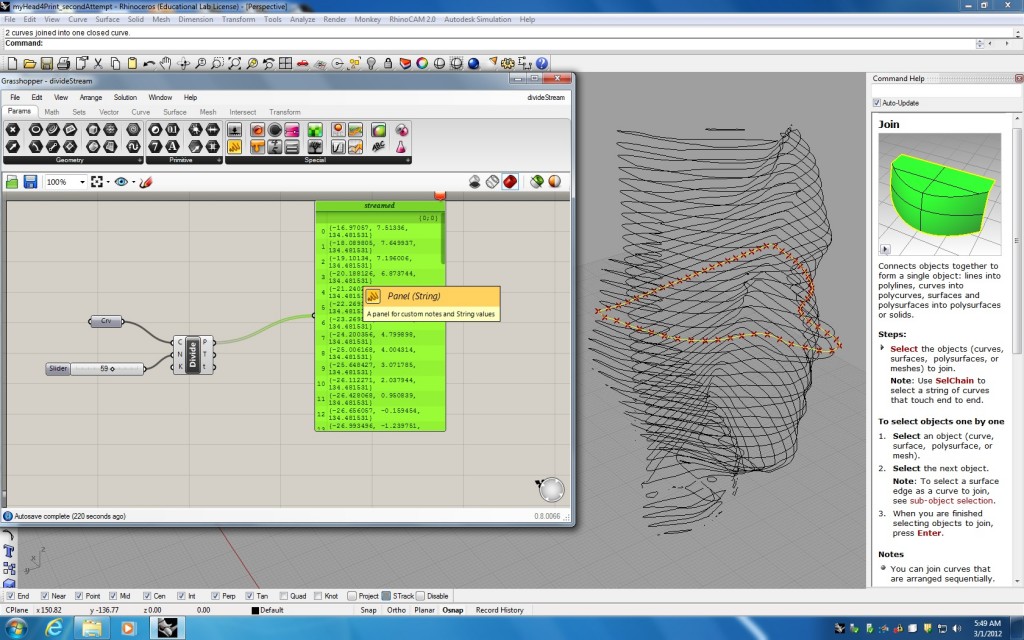

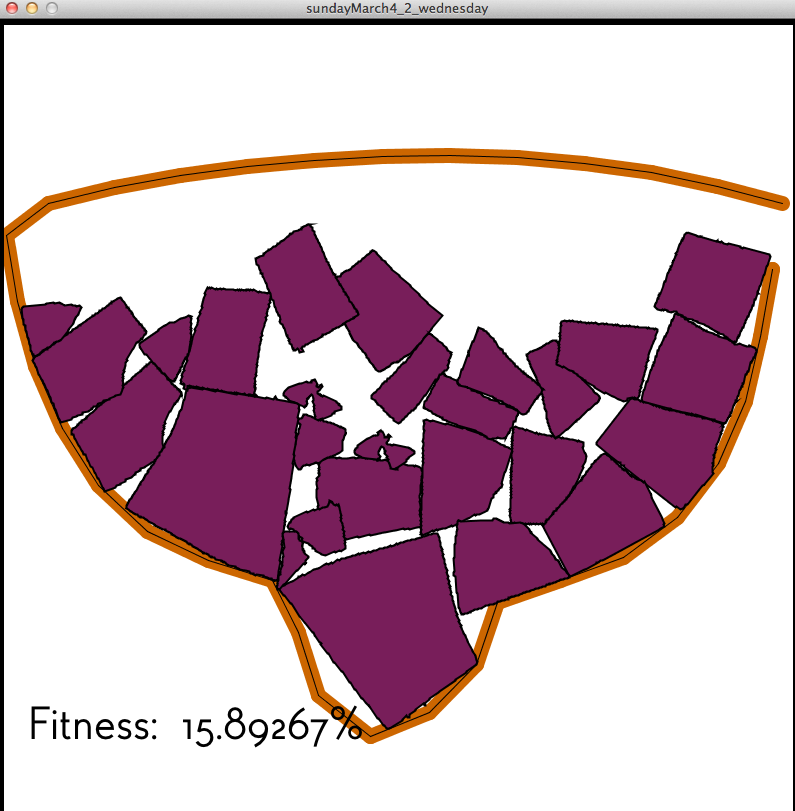

In the current project, the environment that provides stimuli for the vehicles is a 3d stage on which the user interactively places a number of spotlights. The light patterns and colors can be varied to trigger different behaviors.
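One possible way to turn such a stage into a stimulus is sketched below in Python. It is only an assumption about how a spotlight could be modeled: each light is a position, a direction and a cone half-angle, points outside the cone receive nothing, and brightness inside the cone falls off with squared distance. The function names and the falloff formula are hypothetical, and color is left out.

```python
import math

def spotlight_intensity(point, light_pos, light_dir, cone_angle, power=1.0):
    """Brightness a single spotlight contributes at a 3d point."""
    to_point = [p - l for p, l in zip(point, light_pos)]
    dist = math.sqrt(sum(c * c for c in to_point))
    if dist == 0.0:
        return power
    dir_len = math.sqrt(sum(c * c for c in light_dir))
    # Cosine of the angle between the light's axis and the direction to the point.
    cos_theta = sum(a * b for a, b in zip(to_point, light_dir)) / (dist * dir_len)
    if cos_theta < math.cos(cone_angle):
        return 0.0  # the point lies outside the light cone
    return power / (1.0 + dist * dist)

def total_stimulus(point, lights):
    """Sum the contributions of all spotlights; each light is (pos, dir, cone_angle)."""
    return sum(spotlight_intensity(point, pos, direction, angle)
               for pos, direction, angle in lights)

# A light hanging 5 units above the stage, pointing straight down, 30 degree half-angle.
stage_lights = [((0.0, 0.0, 5.0), (0.0, 0.0, -1.0), math.pi / 6)]
under_light = total_stimulus((0.0, 0.0, 0.0), stage_lights)
off_to_side = total_stimulus((10.0, 0.0, 0.0), stage_lights)
```

A vehicle's sensors would sample `total_stimulus` at their own positions, so moving a spotlight changes what every vehicle senses.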

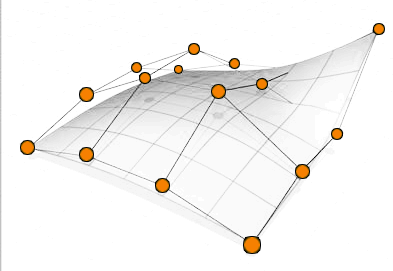

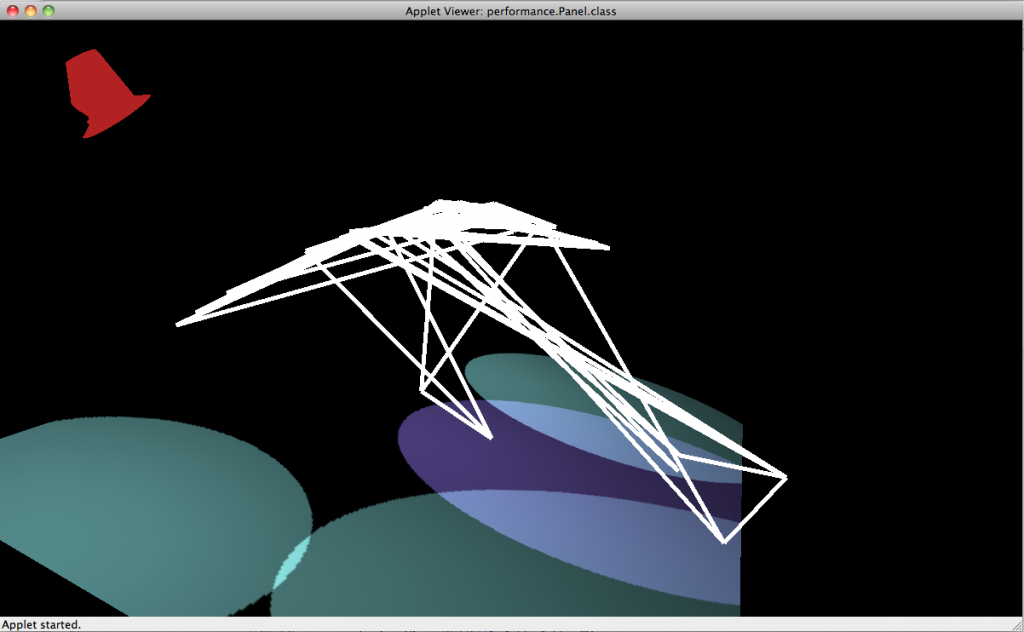

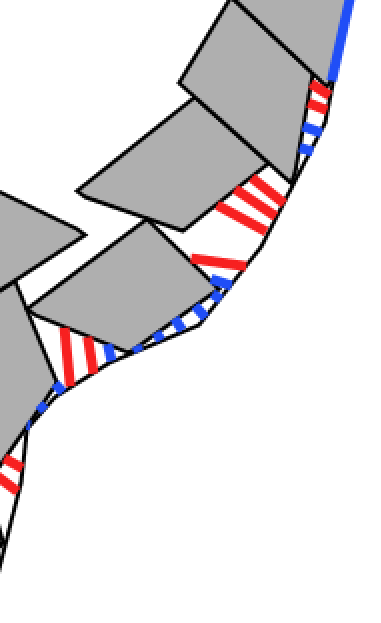

The vehicles can move in 3d space, reacting to the light stimuli. The vehicles are treated as the vertices of lines or the control points of surfaces, which are transformed and distorted over time. The intention is to constrain the freedom of movement of the vehicles by placing springs at selected points. The Toxiclibs, Verlet Physics and PeasyCam libraries are being used.
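The spring idea can be illustrated with a small hand-written Verlet integrator in Python. This is a sketch under assumptions and does not use the Toxiclibs API: the vehicle is a single particle, the light's pull is replaced by a constant force, and the spring is a soft distance constraint to a fixed anchor. `Particle`, `verlet_step`, `apply_spring` and all parameter values are hypothetical.

```python
import math

class Particle:
    """A vehicle reduced to a point; Verlet keeps the current and previous position."""
    def __init__(self, x, y, z):
        self.pos = [x, y, z]
        self.prev = [x, y, z]

def verlet_step(p, force, dt=0.1, drag=0.02):
    """Position Verlet: velocity is implied by the difference pos - prev."""
    for i in range(3):
        velocity = (p.pos[i] - p.prev[i]) * (1.0 - drag)
        p.prev[i] = p.pos[i]
        p.pos[i] += velocity + force[i] * dt * dt

def apply_spring(p, anchor, rest_length, strength=0.5):
    """Pull the particle part of the way back toward rest_length from the anchor."""
    delta = [p.pos[i] - anchor[i] for i in range(3)]
    dist = math.sqrt(sum(d * d for d in delta))
    if dist == 0.0:
        return
    correction = strength * (dist - rest_length) / dist
    for i in range(3):
        p.pos[i] -= delta[i] * correction

def distance(p, anchor):
    return math.sqrt(sum((p.pos[i] - anchor[i]) ** 2 for i in range(3)))

anchor = (0.0, 0.0, 0.0)
pull = (1.0, 0.0, 0.0)           # stand-in for attraction toward a light
free = Particle(1.0, 0.0, 0.0)
tethered = Particle(1.0, 0.0, 0.0)
for _ in range(100):
    verlet_step(free, pull)
    verlet_step(tethered, pull)
    apply_spring(tethered, anchor, rest_length=1.0)
```

After the loop the free particle has drifted far from the anchor, while the tethered one settles slightly beyond the rest length, where the spring balances the pull. Chaining such springs between neighboring vehicles would be one way to keep a line or surface coherent while it deforms.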