kelseyLee – Mobile in Motion

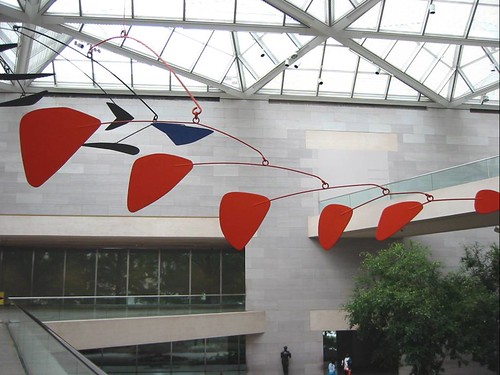

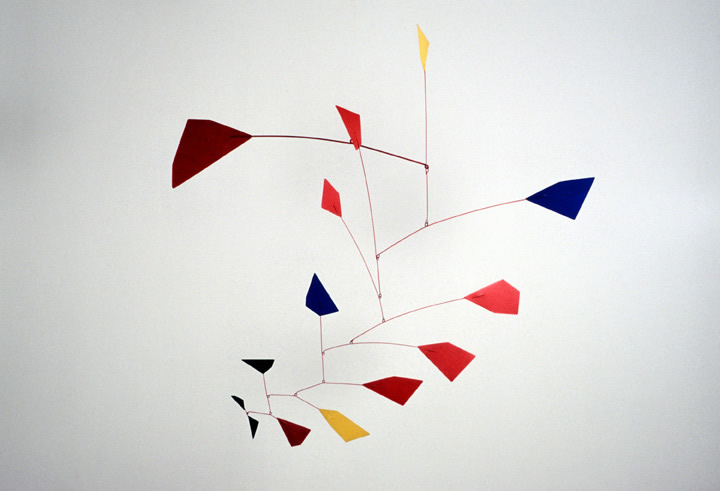

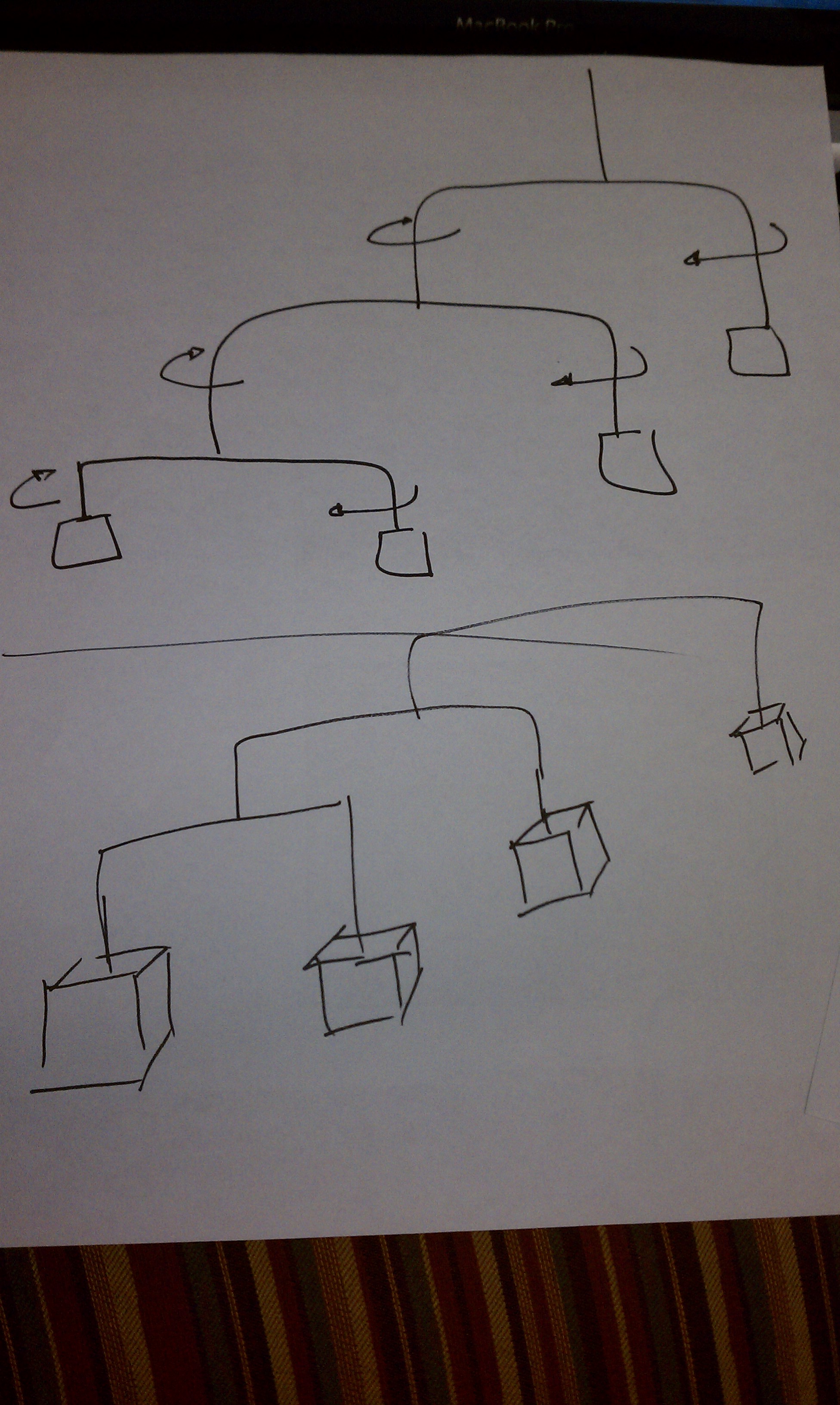

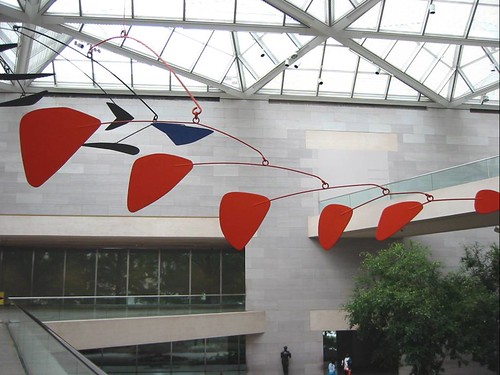

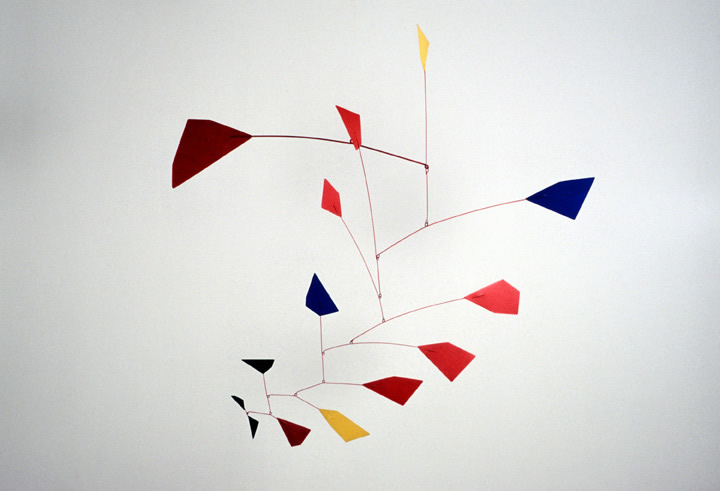

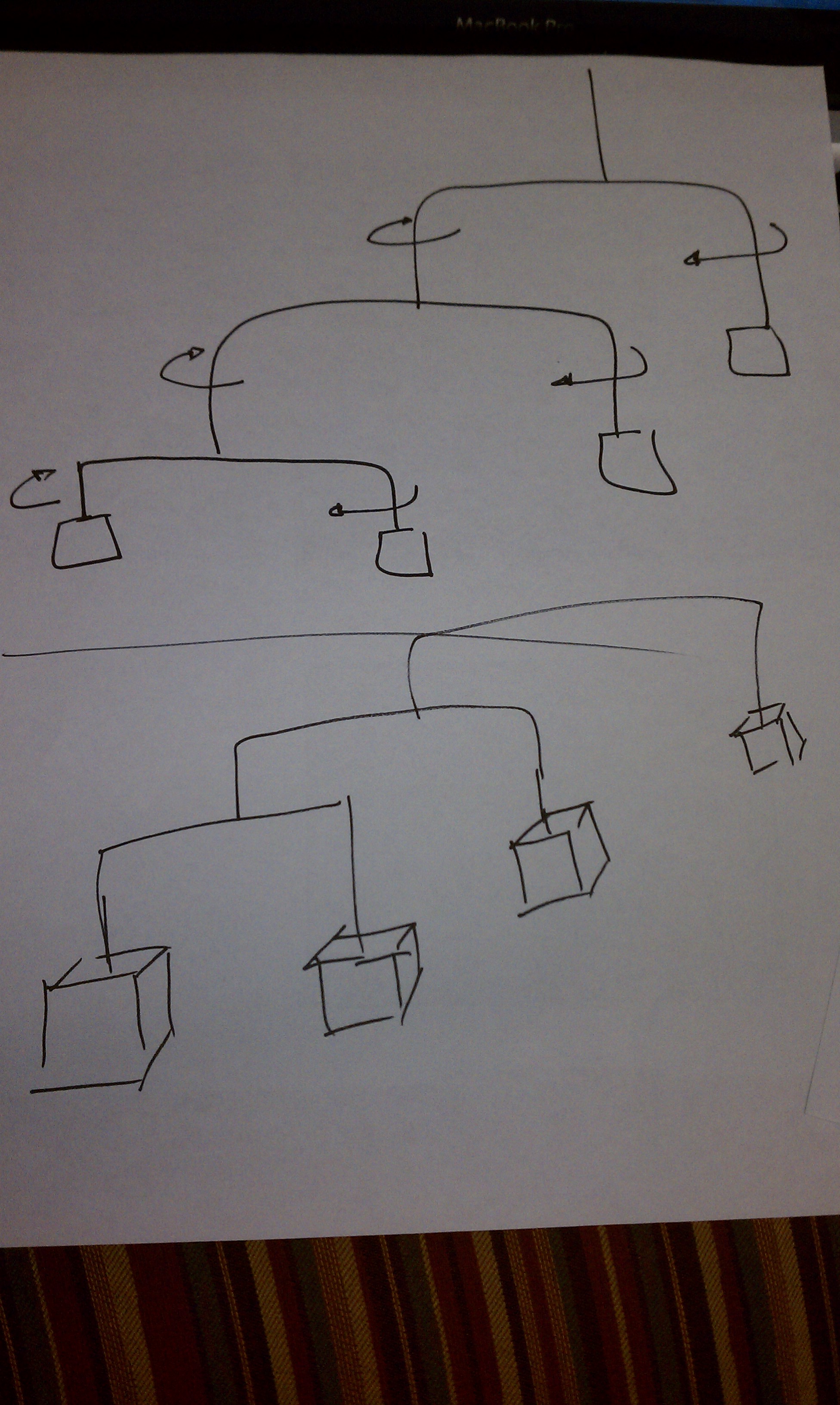

At this point I was inspired to generate a hanging mobile that would dance to the music.

[youtube=https://www.youtube.com/watch?v=WOpIPqEFJcg]

I’m not entirely sure where the idea for this project came from. I was exploring several ideas for using flocking algorithms when I suddenly thought of evolving solutions to the egg drop problem using genetic algorithms.

[vimeo=https://vimeo.com/37727843]

I recall performing this “experiment” more than once during my childhood, but I don’t think I ever constructed a container that would preserve an egg from a one-story fall. There was something very appealing about revisiting this problem in graduate school and finally conquering it.

[vimeo=https://vimeo.com/37725342]

I began this project working with toxiclibs. Its springs and mesh structures seemed like good tools for constructing an egg drop simulation. Its lack of collision detection, however, made it difficult to coordinate the interactions of the egg with the other objects in the simulation. On to Box2D…

Box2D made it pretty easy to detect when the egg had collided with the ground. Determining whether the egg had broken was simply a matter of checking its acceleration: if it exceeded a threshold (determined experimentally), the egg broke.
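The acceleration test can be sketched as below. This is a minimal sketch, assuming the egg's velocity is sampled once per physics step (in the real project it would come from Box2D) and that acceleration is estimated by finite differences; the class name and the threshold value are hypothetical placeholders, not the project's code.

```java
/**
 * Hypothetical breakage check: estimates the egg's acceleration from two
 * successive velocity samples and compares it with a threshold.
 * Velocities would come from the physics engine; the threshold would be
 * tuned by experiment. Both are placeholders here.
 */
final class EggBreakCheck {
    private final double breakThreshold; // acceleration magnitude (assumed units)

    EggBreakCheck(double breakThreshold) {
        this.breakThreshold = breakThreshold;
    }

    /** Finite-difference estimate of the acceleration magnitude over one time step. */
    static double accelerationMagnitude(double vx0, double vy0,
                                        double vx1, double vy1, double dt) {
        double ax = (vx1 - vx0) / dt;
        double ay = (vy1 - vy0) / dt;
        return Math.hypot(ax, ay);
    }

    /** True if the estimated acceleration exceeds the break threshold. */
    boolean isBroken(double vx0, double vy0, double vx1, double vy1, double dt) {
        return accelerationMagnitude(vx0, vy0, vx1, vy1, dt) > breakThreshold;
    }
}
```

For example, an egg falling at 6 units/s that stops within one 1/60 s step experiences roughly 360 units/s², so `new EggBreakCheck(300).isBroken(0, -6, 0, 0, 1.0 / 60)` reports a break.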

What interventions can you make to preserve an egg during a fall? The two obvious solutions are 1) make the egg fall more slowly so it lands softly and 2) pack it in bubble wrap to absorb the force. These were two common solutions I recalled from my childhood. In the simulation I used a balloon to slow the contraption’s fall and packing peanuts inside the box to absorb the impact when it hits the ground. This gave me several parameters I could vary to breed different solutions: the buoyancy of the balloon, to slow the fall; the density of the packing peanuts, to absorb shock; and the packing density of the peanuts in the box. Additionally, I varied the box size and the peanut size, both of which affect how many peanuts fit in the box.
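One way to encode these parameters for breeding is a fixed-length genome, sketched below. This is an illustrative sketch, not the project's code: it assumes each gene is stored in [0, 1] and scaled to a physical range when the contraption is built, and it uses uniform crossover and per-gene random-reset mutation, which are common choices rather than the confirmed ones. The gene names are hypothetical labels for the parameters listed above (plus the container color).

```java
import java.util.Random;

/**
 * Hypothetical genome for one egg-drop contraption. Each gene is a value
 * in [0, 1], scaled to a physical range when the contraption is built.
 */
final class ContraptionGenome {
    static final String[] GENE_NAMES = {
        "balloonBuoyancy", "peanutDensity", "packingDensity",
        "boxSize", "peanutSize", "hue"
    };

    final double[] genes = new double[GENE_NAMES.length];

    static ContraptionGenome random(Random rng) {
        ContraptionGenome g = new ContraptionGenome();
        for (int i = 0; i < g.genes.length; i++) g.genes[i] = rng.nextDouble();
        return g;
    }

    /** Uniform crossover: each gene is copied from one parent chosen at random. */
    ContraptionGenome crossover(ContraptionGenome other, Random rng) {
        ContraptionGenome child = new ContraptionGenome();
        for (int i = 0; i < genes.length; i++) {
            child.genes[i] = rng.nextBoolean() ? genes[i] : other.genes[i];
        }
        return child;
    }

    /** Replaces each gene with a fresh random value with probability `rate`. */
    void mutate(double rate, Random rng) {
        for (int i = 0; i < genes.length; i++) {
            if (rng.nextDouble() < rate) genes[i] = rng.nextDouble();
        }
    }

    /** Maps gene i from [0, 1] onto [min, max]. */
    double scaled(int i, double min, double max) {
        return min + genes[i] * (max - min);
    }
}
```

Keeping every gene in [0, 1] means crossover and mutation never need to know the physical units; only `scaled` does.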

I also varied the color of the container, just to make it slightly more obvious that these were different contraptions.

My baseline for evaluating a contraption’s fitness was how much force beyond the minimum required to break the egg was applied to the egg on impact. A contraption that allowed the egg to be smashed to pieces was less fit than one that barely cracked it. To avoid evolving solutions that were merely gigantic balloons attached to the box, or nothing more than an egg encased in bubble wrap, I associated a cost with each of the contraption’s parameters. Rather than fixing these costs in the application, I made them modifiable through sliders in the user interface so that I could experiment with different values.
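A fitness score combining these two penalties might look like the sketch below. It assumes the excess force and the weighted parameter costs are simply added and mapped into (0, 1] with `1 / (1 + penalty)`; the post does not say how the terms were combined, so treat the formula and the class name as hypothetical. The `costWeights` array stands in for the slider values.

```java
/**
 * Hypothetical fitness score for an egg-drop contraption.
 * Penalty 1: impact force in excess of the egg's breaking force
 *            (zero if the egg survives).
 * Penalty 2: a weighted cost per parameter (the weights play the role
 *            of the UI sliders).
 * A lower total penalty gives a higher fitness, mapped into (0, 1].
 */
final class EggDropFitness {
    static double fitness(double impactForce, double breakForce,
                          double[] params, double[] costWeights) {
        if (params.length != costWeights.length) {
            throw new IllegalArgumentException("params and costWeights differ in length");
        }
        double excessForce = Math.max(0.0, impactForce - breakForce);
        double materialCost = 0.0;
        for (int i = 0; i < params.length; i++) {
            materialCost += costWeights[i] * params[i];
        }
        return 1.0 / (1.0 + excessForce + materialCost);
    }
}
```

With this shape, a smashed egg scores lower than a barely cracked one, and raising a slider makes the corresponding parameter more expensive, pushing evolution away from "giant balloon" or "all bubble wrap" solutions.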

My initial idea for this project was to simulate a line or a crowd by combining flocking and steering algorithms. I planned to set some ground rules and then test how the crowd behaves when extreme characters who act completely differently enter it. Will the crowd learn to adapt? Will it submit to the new character?
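A single queue can be modeled with Reynolds-style "arrive" steering: each agent steers toward a spot a fixed gap behind the agent ahead and slows down as it gets close. The sketch below is an assumption about how such a queue could work, written with plain 2D arrays instead of toxiclibs types; the class name, the constants, and the "line runs along +x" layout are all hypothetical.

```java
/**
 * Hypothetical queue model using "arrive" steering. The head of the line
 * targets the counter; every other agent targets a spot GAP units behind
 * the previous agent (the line is assumed to run along +x).
 */
final class QueueSketch {
    static final double MAX_SPEED = 2.0, MAX_FORCE = 0.1, GAP = 20.0, SLOW_RADIUS = 50.0;

    final double[][] pos, vel;
    final double counterX, counterY;

    QueueSketch(int n, double counterX, double counterY) {
        pos = new double[n][2];
        vel = new double[n][2];
        this.counterX = counterX;
        this.counterY = counterY;
    }

    void step() {
        for (int i = 0; i < pos.length; i++) {
            double tx = (i == 0) ? counterX : pos[i - 1][0] + GAP;
            double ty = (i == 0) ? counterY : pos[i - 1][1];
            double dx = tx - pos[i][0], dy = ty - pos[i][1];
            double dist = Math.hypot(dx, dy);
            if (dist < 1e-9) { vel[i][0] = 0; vel[i][1] = 0; continue; } // already in place
            // Arrive: full speed far away, proportionally slower inside SLOW_RADIUS.
            double speed = dist < SLOW_RADIUS ? MAX_SPEED * dist / SLOW_RADIUS : MAX_SPEED;
            // Steering force = desired velocity - current velocity, capped at MAX_FORCE.
            double steerX = dx / dist * speed - vel[i][0];
            double steerY = dy / dist * speed - vel[i][1];
            double mag = Math.hypot(steerX, steerY);
            if (mag > MAX_FORCE) { steerX *= MAX_FORCE / mag; steerY *= MAX_FORCE / mag; }
            vel[i][0] += steerX; vel[i][1] += steerY;
            pos[i][0] += vel[i][0]; pos[i][1] += vel[i][1];
        }
    }
}
```

An "extreme character" could then be introduced by giving one agent a different target rule (for example, cutting ahead) and watching how the agents behind it respond.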

After playing around with toxiclibs to generate the queue, I came up with an idea that interested me more, so I only got as far as modeling one of the queues:

William Paley was a priest who lived at the turn of the 19th century. He put forward one of the most famous arguments for God’s existence: the belief that everything we see was designed for a purpose (by God, of course). As a rhetorical device, he used the example of a watch, whose parts must be intricate and precisely synchronized for it to function well; in the same way, he argued, so must everything else we see around us on earth and beyond.

I wanted to use this lab assignment to play with evolution and see whether I could create a set of rules that would generate a meaningful image out of a pool of randomly generated images. Following the explanation of genetic algorithms in Daniel Shiffman’s book, I designed a program that tries to reproduce a well-known image starting from a pool of randomly generated images. The only “cheat” here is that the target image itself was used to calculate the fitness of the images throughout the run. Even with that small “cheat”, this is not an easy task! There are many parameters that can be used to fine-tune the algorithm:

1. Pool size – how many images?

2. Mating pool size – how many images are in the mating pool. This parameter is especially important in rounds where some images have little representation: if the mating pool is small, they will be eliminated, and vice versa.

3. Mutation rate – a double-edged sword, as I learned. Too much mutation, and you never reach a relatively stable optimum. Too little, and you get nowhere.

4. Fitness function – the hardest part of the algorithm: coming up with criteria that measure what is “better” than something else. As explained above, in this lab I knew my target, so calculating fitness was much easier. I used color distance: for each pixel in the image, I compared its three color channels with those of the corresponding pixel in the target image. The closer an image is, the higher its fitness, and the bigger its chance to mate in the next round.

5. Last, but not least – performance. These algorithms are time- and CPU-intensive. When I tried a 200×200 image, the computer responded very slowly, so I settled on 100×100 px images as the optimum for the input image.
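The parameters above fit together roughly as in the following sketch. It assumes images are arrays of packed `0xRRGGBB` pixels of the same size as the target, scores fitness by per-channel color distance as described in item 4, and builds a fitness-proportional mating pool in the style of Shiffman's "The Nature of Code". Midpoint crossover and random-reset mutation are assumed choices, and the class and parameter names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/**
 * Minimal image GA sketch. Pool size, mutation rate and mating pool
 * scale correspond to items 1 to 3 above; fitness is item 4.
 */
final class ImageGA {
    final int[] target;
    final Random rng;
    final double mutationRate;
    final int matingPoolScale; // max copies of one image in the mating pool
    List<int[]> population = new ArrayList<>();

    ImageGA(int[] target, int popSize, double mutationRate, int matingPoolScale, long seed) {
        this.target = target;
        this.mutationRate = mutationRate;
        this.matingPoolScale = matingPoolScale;
        this.rng = new Random(seed);
        for (int i = 0; i < popSize; i++) population.add(randomImage());
    }

    int[] randomImage() {
        int[] img = new int[target.length];
        for (int i = 0; i < img.length; i++) img[i] = rng.nextInt(0x1000000);
        return img;
    }

    /** 1.0 for an exact match, 0.0 when every channel is maximally far off. */
    double fitness(int[] img) {
        long dist = 0;
        for (int i = 0; i < img.length; i++) {
            for (int shift = 0; shift <= 16; shift += 8) { // blue, green, red
                int a = (img[i] >> shift) & 0xFF, b = (target[i] >> shift) & 0xFF;
                dist += Math.abs(a - b);
            }
        }
        return 1.0 - dist / (255.0 * 3 * img.length);
    }

    /** Builds the fitness-proportional mating pool, then breeds a full new generation. */
    void nextGeneration() {
        List<int[]> pool = new ArrayList<>();
        for (int[] img : population) {
            int copies = (int) (fitness(img) * matingPoolScale);
            for (int k = 0; k < copies; k++) pool.add(img);
        }
        if (pool.isEmpty()) pool.addAll(population); // every image scored too low to enter
        List<int[]> next = new ArrayList<>();
        for (int n = 0; n < population.size(); n++) {
            int[] a = pool.get(rng.nextInt(pool.size()));
            int[] b = pool.get(rng.nextInt(pool.size()));
            int[] child = new int[target.length];
            int mid = rng.nextInt(target.length); // midpoint crossover
            for (int i = 0; i < child.length; i++) {
                child[i] = (i < mid) ? a[i] : b[i];
                if (rng.nextDouble() < mutationRate) child[i] = rng.nextInt(0x1000000);
            }
            next.add(child);
        }
        population = next;
    }

    double bestFitness() {
        double best = 0;
        for (int[] img : population) best = Math.max(best, fitness(img));
        return best;
    }
}
```

The cost of `fitness` grows with the pixel count times the population size on every generation, which is consistent with the slowdown observed at 200×200.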

The following short movie shows one run of the algorithm. The image is never reproduced exactly: there are always fuzzy colors, and overall it looks “alive”.

The End.

Not a Prayer

I started this project with the idea of generating a group of creatures that would worship the mouse as their creator. I knew the sound was going to be critical. I discovered a pack of phoneme-like sounds from batchku on the Freesound Project and used the Ess library for Processing to string them together into an excited murmur. It didn’t sound much like praying, but I liked the aesthetic. For the visuals I took a cue from Karsten Schmidt’s wonderful project “Nokia Friends” and put the creatures together as a series of springs using the toxiclibs physics library.

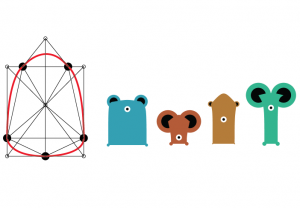

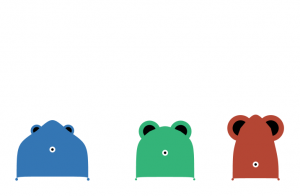

Here are some of my early character tests:

Getting the characters together took a lot of tweaking. I wanted as much variation in the forms as possible, but I also wanted the bodies to be structurally sound. I found that the shapes had a tendency to invert, sending the springs into a wild twirling motion. This was a fun accident, but I wanted to minimize it as much as possible.
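A spring body of this kind can be sketched with Verlet integration, the same integration scheme toxiclibs' physics library uses. The code below is hand-rolled and is not the toxiclibs API; the creature layout (a ring of particles with ring edges, spokes to a center particle, and "brace" springs to every second neighbor) is a hypothetical structure. Extra cross-bracing like this is one common way to keep a spring shape from folding inside out, though the post does not say which fix was used.

```java
import java.util.ArrayList;
import java.util.List;

/** Hand-rolled Verlet spring creature (a stand-in, not toxiclibs). */
final class SpringCreature {
    static final class Particle {
        double x, y, px, py; // current and previous position
        Particle(double x, double y) { this.x = x; this.y = y; px = x; py = y; }
    }

    static final class Spring {
        final Particle a, b;
        final double restLength, strength;
        Spring(Particle a, Particle b, double strength) {
            this.a = a; this.b = b; this.strength = strength;
            restLength = Math.hypot(b.x - a.x, b.y - a.y);
        }
        /** Moves both ends toward the rest length by the fraction `strength`. */
        void relax() {
            double dx = b.x - a.x, dy = b.y - a.y, len = Math.hypot(dx, dy);
            if (len < 1e-12) return;
            double corr = 0.5 * strength * (len - restLength) / len;
            a.x += dx * corr; a.y += dy * corr;
            b.x -= dx * corr; b.y -= dy * corr;
        }
    }

    final List<Particle> particles = new ArrayList<>();
    final List<Spring> springs = new ArrayList<>();

    SpringCreature(double cx, double cy, double radius, int segments, double strength) {
        Particle centre = new Particle(cx, cy);
        particles.add(centre);
        for (int i = 0; i < segments; i++) {
            double angle = 2 * Math.PI * i / segments;
            particles.add(new Particle(cx + radius * Math.cos(angle), cy + radius * Math.sin(angle)));
        }
        for (int i = 0; i < segments; i++) {
            Particle p = particles.get(1 + i);
            springs.add(new Spring(p, particles.get(1 + (i + 1) % segments), strength)); // ring edge
            springs.add(new Spring(p, centre, strength));                                // spoke
            springs.add(new Spring(p, particles.get(1 + (i + 2) % segments), strength)); // brace
        }
    }

    /** One Verlet step with gravity `g` and damping `drag`, then spring relaxation passes. */
    void update(double g, double drag, int iterations) {
        for (Particle p : particles) {
            double vx = (p.x - p.px) * (1 - drag), vy = (p.y - p.py) * (1 - drag);
            p.px = p.x; p.py = p.y;
            p.x += vx; p.y += vy + g;
        }
        for (int k = 0; k < iterations; k++) for (Spring s : springs) s.relax();
    }
}
```

Varying `segments`, `radius`, and per-spring strength gives different body shapes, while the braces keep each one close to its rest geometry after a disturbance.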

Here is the structural design of my creature along with some finished characters:

At this point I focused on the behavior of the creatures. I used attraction forces from toxiclibs to keep the creatures moving nervously around the screen. I also added some interactivity. If the user places the mouse over a creature’s eye, it leaps into the air and lets out an excited gasp. Clicking the mouse applies an upward force to all of the creatures so that you can see them leap or float around the screen.
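The three interactions can be sketched on a single Verlet point per creature, as below. This is plain math rather than toxiclibs' attraction behaviors: the falloff `strength * (1 - d / radius)`, the eye offset and radius, the kick sizes, and the class name are all assumptions. In Verlet integration a sudden velocity change is applied by shifting the previous position, which is what `kick` does; screen coordinates are assumed, so "up" is negative y.

```java
/** Hypothetical behavior layer for one creature (plain math, not toxiclibs). */
final class CritterBehaviour {
    double x, y, px, py;             // Verlet position and previous position
    final double eyeOffsetY = -20;   // assumed eye position relative to the body
    final double eyeRadius = 6;
    private boolean wasOverEye = false;

    CritterBehaviour(double x, double y) { this.x = x; this.y = y; px = x; py = y; }

    /** Adds a velocity change by shifting the previous position. */
    void kick(double dvx, double dvy) { px -= dvx; py -= dvy; }

    /** Pull toward (ax, ay) that fades linearly to zero at `radius`. */
    void attract(double ax, double ay, double radius, double strength) {
        double dx = ax - x, dy = ay - y, d = Math.hypot(dx, dy);
        if (d < 1e-9 || d > radius) return;
        double f = strength * (1 - d / radius);
        kick(dx / d * f, dy / d * f);
    }

    boolean mouseOverEye(double mx, double my) {
        return Math.hypot(mx - x, my - (y + eyeOffsetY)) < eyeRadius;
    }

    /** Per-frame update: leap when the mouse first lands on the eye, then a damped Verlet step. */
    void update(double mx, double my, double gravity, double drag) {
        boolean over = mouseOverEye(mx, my);
        if (over && !wasOverEye) kick(0, -8); // leap; the gasp sound would be triggered here
        wasOverEye = over;
        double vx = (x - px) * (1 - drag), vy = (y - py) * (1 - drag);
        px = x; py = y;
        x += vx; y += vy + gravity;
    }

    /** Mouse click: every creature gets the same upward kick. */
    static void onMousePressed(Iterable<CritterBehaviour> critters) {
        for (CritterBehaviour c : critters) c.kick(0, -12);
    }
}
```

Moving the attractor point slightly every frame is one way to get the nervous wandering; the leap triggers only on entering the eye so that hovering does not launch the creature repeatedly.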

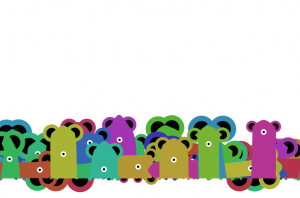

Here is a screen capture of me playing with the project:

[youtube https://www.youtube.com/watch?v=sMfVkc9OhU0&w=480&h=360]