kelseyLee – Mobile in Motion

I started out wanting to generate a visual piece from a song's data. I wanted the appearance to be abstract and simplified, and I really liked the idea of using motion to convey different aspects of a song. One source of inspiration was:

[vimeo=https://vimeo.com/31179423]

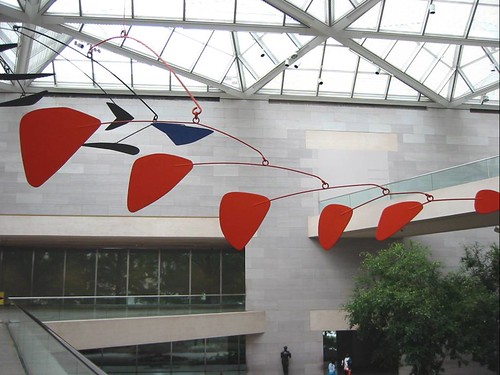

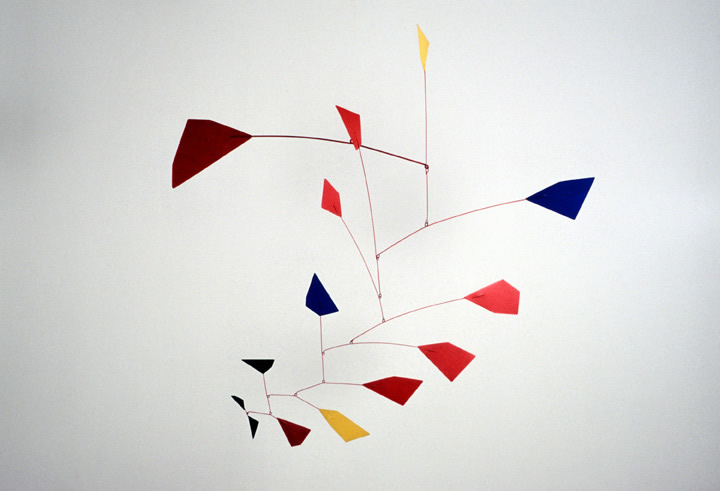

While brainstorming for the project, I happened across an Alexander Calder sculpture at the Pittsburgh airport. Calder is a sculptor whose work I've admired for a long time, and it struck me as strange that while these hanging sculptures seem so lively and free, suspended in space, they never actually move.

At this point I was inspired to generate a hanging mobile that would dance to the music.

I began by looking at a bunch of Calder mobiles, examining how the different tiers fit together.

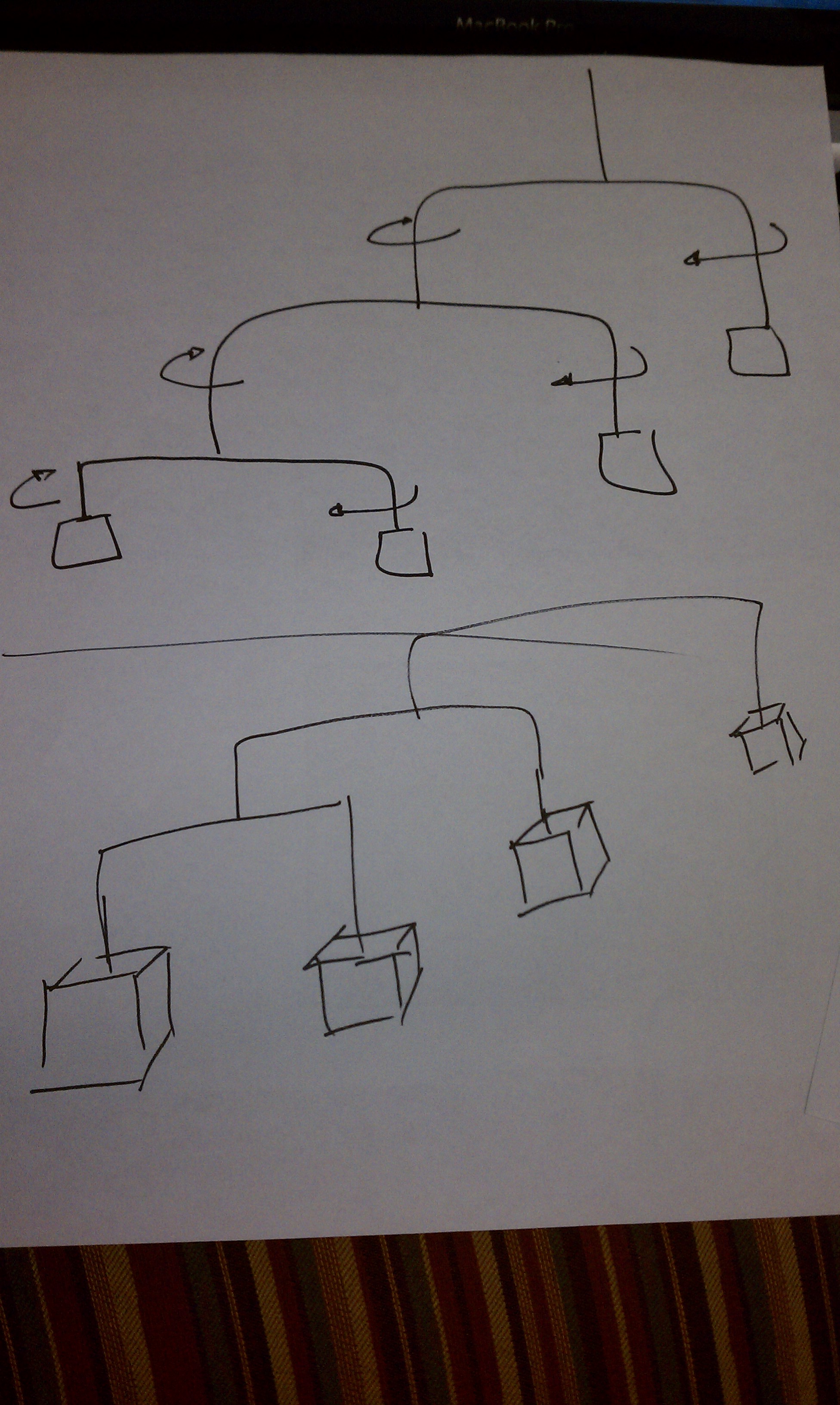

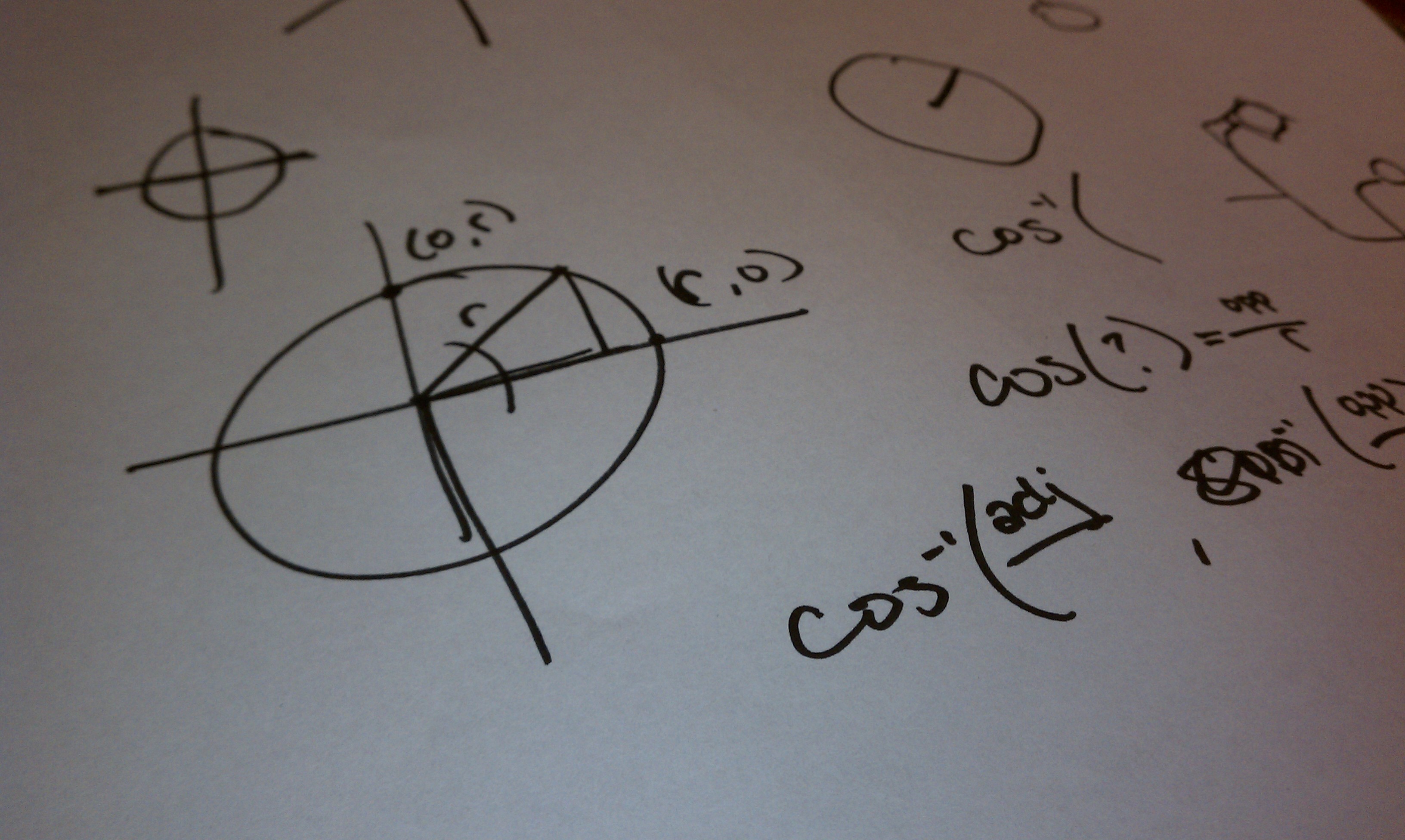

I then examined OpenGL's 3D library to figure out how to draw the shapes in space. After borrowing from a Processing sketch that drew cubes, I needed to figure out how to generate motion. At first it was difficult to think in terms of 3D coordinates, and then to keep each tier connected to the tier above it while it moved about in space. In my piece I store the tiers in an array and calculate the position of the top tier first so that the tier below it can be placed, and so on down the mobile.
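The top-down calculation can be illustrated with a minimal Java sketch (Processing sketches compile to Java). This is not the piece's actual code: the `Tier` class, its fields `armLength`, `drop`, and `angle`, and the rule that each tier hangs straight down from the tip of the arm above it are all assumptions made for illustration.

```java
import java.util.Arrays;

public class MobileChain {
    // One horizontal arm of the mobile (hypothetical model). It spins about the string it hangs from.
    static final class Tier {
        final double armLength; // distance from this tier's hanging point to the tip carrying the next tier
        final double drop;      // length of the string from the tier above down to this tier
        double angle;           // rotation about the vertical (y) axis, in radians

        Tier(double armLength, double drop, double angle) {
            this.armLength = armLength;
            this.drop = drop;
            this.angle = angle;
        }
    }

    /**
     * Walks the array from the top tier down. Each tier's pivot sits directly
     * below the tip of the arm above it, so each position depends on the one
     * computed just before it. Returns one {x, y, z} pivot per tier; y grows
     * downward, as in Processing's default coordinates.
     */
    static double[][] pivots(Tier[] tiers, double[] anchor) {
        double[][] out = new double[tiers.length][];
        double x = anchor[0], y = anchor[1], z = anchor[2];
        for (int i = 0; i < tiers.length; i++) {
            Tier t = tiers[i];
            y += t.drop;                            // hang straight down on the string
            out[i] = new double[] {x, y, z};
            x += t.armLength * Math.cos(t.angle);   // move out to the tip of this arm
            z += t.armLength * Math.sin(t.angle);
        }
        return out;
    }

    public static void main(String[] args) {
        Tier[] tiers = {
            new Tier(100, 20, 0.0),
            new Tier(60, 30, Math.PI / 2),
            new Tier(40, 30, Math.PI),
        };
        for (double[] p : pivots(tiers, new double[] {0, 0, 0})) {
            System.out.println(Arrays.toString(p));
        }
    }
}
```

Here each tier's angle is independent, like a real mobile whose lower tiers hang from flexible strings. In Processing itself, chaining `translate()` and `rotateY()` calls without popping the matrix between tiers would instead make each tier's rotation relative to the tier above it.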

For the music, I used Echonest to analyze Phoenix's "Love Like a Sunset" (Turzi RMX).

Getting the data was particularly difficult because the Processing library for Echonest had version incompatibilities and wasn't really working, so I want to thank Kaushal for helping me work around it to analyze the song I chose.

The data included some interesting values, such as the song's popularity level, but with a deeper search I was also able to access more granular data: a second-by-second analysis of pitch, timbre, and more. Since I wanted to show motion, I focused on the pitch data, which included over 3,500 analysis segments for my song. I planned to time the motion of the mobile to these segments.

Then I ran into difficulties, because the pitch data was actually 12 pitch values per segment, each on a scale of 0 to 1.0, with a new segment every fraction of a second. I couldn't find any documentation about what the pitches were or why 12 of them were associated with each segment. By this point it had taken me so long to prepare the data that I decided to make do with it. I would map a range of pitch values to a specific tier of the sculpture and, whenever a pitch in that range appeared, move that tier of the mobile. With so much pitch data, I simply took the first of the 12 pitch values in each segment and used it to determine which tier would move.
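The pitch-to-tier mapping can be sketched in a few lines of Java. For what it's worth, the Echonest analysis documentation describes each segment's 12 pitch values as a chroma vector: one value per pitch class (C, C#, D, through B), ranging from 0 to 1 and giving that pitch class's relative dominance, so the first value is the strength of C in that segment. The sketch follows the approach described above (bin `pitches[0]` into equal ranges, one per tier); the method names and the equal-width binning are assumptions, and `dominantPitchClass` is a hypothetical alternative that picks the strongest pitch class instead. The segment values in `main` are made up.

```java
public class PitchToTier {
    /** Maps a value in [0, 1] onto one of tierCount equal-width ranges (hypothetical binning). */
    static int tierForPitch(double pitch, int tierCount) {
        double clamped = Math.max(0.0, Math.min(1.0, pitch));
        // A value of exactly 1.0 would land in range tierCount, so fold it into the last tier.
        return Math.min(tierCount - 1, (int) (clamped * tierCount));
    }

    /** Uses only the first of the segment's 12 pitch values, as described in the post. */
    static int tierForSegment(double[] pitches, int tierCount) {
        return tierForPitch(pitches[0], tierCount);
    }

    /** Hypothetical alternative: index of the strongest pitch class (0 = C ... 11 = B). */
    static int dominantPitchClass(double[] pitches) {
        int best = 0;
        for (int i = 1; i < pitches.length; i++) {
            if (pitches[i] > pitches[best]) {
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Made-up segment, for illustration only.
        double[] segment = {0.42, 1.0, 0.1, 0.05, 0.3, 0.2, 0.0, 0.6, 0.15, 0.1, 0.05, 0.2};
        System.out.println(tierForSegment(segment, 5));  // 0.42 * 5 = 2.1, so tier 2
        System.out.println(dominantPitchClass(segment)); // 1 (C#) is the strongest pitch class here
    }
}
```

Using the dominant pitch class would tie each tier to a musical note rather than to how strongly C happens to sound in a segment, which may make the link between the song and the motion easier to see.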

Originally I wanted only one tier to rotate at a time, so I simplified the data to update the tier movements only about once a second. This seemed too choppy, however, so instead I used each pitch's appearance to start the corresponding tier's movement. Ideally I'd like each tier's movement to stop after some time, which would make for more interesting movement patterns, but this works as well. Watching how the sculpture moves as the song progresses, as more tiers become involved and the asynchronous motion of the tiers ebbs and flows, is interesting.
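Starting a tier whenever its pitch appears can be sketched as a small scheduler that walks the segment list in time order. This is a hedged Java sketch, not the piece's code: it assumes each segment's start time in seconds is available (Echonest segments do include a start time) and that a tier has already been chosen per segment. `SegmentTrigger`, `fire`, `step`, the half-life decay, and the kick speed are all hypothetical; the optional decay shows one way to get the "stop after some time" behavior described in the paragraph above. The timings in `main` are made up.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class SegmentTrigger {
    private final double[] startTimes; // segment start times in seconds, in ascending order
    private final int[] tiers;         // tier index chosen for each segment
    private int next = 0;              // first segment that has not fired yet

    SegmentTrigger(double[] startTimes, int[] tiers) {
        this.startTimes = startTimes;
        this.tiers = tiers;
    }

    /** Returns the tier of every segment whose start time has been reached since the last call. */
    List<Integer> fire(double nowSeconds) {
        List<Integer> fired = new ArrayList<>();
        while (next < startTimes.length && startTimes[next] <= nowSeconds) {
            fired.add(tiers[next]);
            next++;
        }
        return fired;
    }

    /**
     * Updates per-tier spin speeds for one frame lasting dt seconds. Existing speeds
     * decay with the given half-life (halfLife <= 0 disables decay, so a tier keeps
     * spinning once started); then every fired tier is set to kickSpeed.
     */
    static void step(double[] speed, List<Integer> fired, double dt, double halfLife, double kickSpeed) {
        double keep = halfLife > 0 ? Math.pow(0.5, dt / halfLife) : 1.0;
        for (int i = 0; i < speed.length; i++) {
            speed[i] *= keep;
        }
        for (int tier : fired) {
            speed[tier] = kickSpeed;
        }
    }

    public static void main(String[] args) {
        // Made-up segment timings and tier choices, for illustration only.
        SegmentTrigger trigger = new SegmentTrigger(
            new double[] {0.0, 0.4, 0.9, 1.5},
            new int[] {0, 2, 2, 1});
        double[] speed = new double[3]; // radians per second, one entry per tier
        double[] angle = new double[3]; // current rotation of each tier, in radians
        double dt = 0.5;                // seconds per simulated frame
        for (double t = 0.0; t <= 2.0; t += dt) {
            List<Integer> fired = trigger.fire(t);
            step(speed, fired, dt, 1.0, Math.PI);
            for (int i = 0; i < angle.length; i++) {
                angle[i] += speed[i] * dt; // advance each tier's rotation
            }
            System.out.printf("t=%.1f fired=%s speeds=%s angles=%s%n",
                t, fired, Arrays.toString(speed), Arrays.toString(angle));
        }
    }
}
```

With `halfLife` set to 0 this reproduces the behavior in the post, where a tier keeps spinning once its pitch has appeared; a positive half-life lets quiet tiers drift to a stop.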

I wanted to show music doing a dance, something it cannot do on its own. I wanted to move away from those electric, space-nebula-filled music visualizers and do something a bit more relatable. Yes, I would have liked more relevant and meaningful data: the actual note patterns of the song, discretized into human-understandable pieces, or at least data supported by documentation. There is definitely room for improvement in this project, but I am happy that I was able to generate motion from music in an inanimate object. I could easily plug in another song's Echonest analysis file and a completely different dance would arise; this visual fingerprinting of songs is what I envisioned for the project from its inception.

[youtube=https://www.youtube.com/watch?v=WOpIPqEFJcg]

Nice presentation of concepts and research

A mobile that has attractive and repellent forces could make a really crazy visualization. Rules could be applied to connections, and each node of the mobile could be a particle with physics forces. Sounds could be representative of physical interactions. Toxiclibs could be useful to get this started quickly. What you have is so very much like a Calder that it misses the opportunity to be a variation on Calder.

+1

Seems like you have put a lot of thought into this project. And more important, you manage to convey that during the presentation. Good!

Sounds like this would be great if it could eventually make its way into being an actual object! <--agree

Looks great, but I'm not sure the connection between the music and the motion is very apparent.

This would be really cool if it were a physical installation that was somehow correlated to ambient sounds.

Looks beautiful! Maybe add the ability to change direction for each of the mobile parts? It could generate somewhat more diverse movement.

The 3D visualization looks really great, but it's hard to see the music in the mobile. I feel like you could have drawn a little more from the plucking strings, for example by having the upper/larger parts of the mobile pulse according to the lower frequencies, or something along those lines.

I am not sure how the mobile correlates with the music. It seems like the mobile moves/rotates just randomly.

Is physics involved in the mobile, to figure out whether it really would move this way or be possible in real life?

^^^ Yeah. Seems like it could potentially get off balance.

^ I concur; is the physics for the Calder mobile simulated (e.g. with an engine like Box2D, Bullet, etc.) or faked (just rotating around... all clockwise)? The concept of buffeting a mobile-like structure with forces from music analysis is a pretty good idea, a great starting point. Using a real physics library would give you MUCH more dimensionality to visually express the impact (literally) of the musical events. But animating these explicitly is going to limit the expressiveness of the visualization. You've got high-dimensional data coming in (from Echonest); you deserve high-dimensional expressivity in the visualization. Maybe you could use wind blowing on the entire structure (simulated in a physics engine) to visualize overall sound strength, etc.

Idea of discreet information. It's a nice idea of subtle visualization that might not spew data at people but rather ease them into it.

I think it would be cool the other way around: clicking to move the different pieces would generate a sound, and maybe each "keypad set" would be a section of a song.

I'm not really seeing a good correlation between the music and the movement.

It seems like you understand the low points. Because the high points are the visual form in 3D... which is hard.

If this is a "generative unit", I'm not sure whether the form is made on the fly per song?

^It is not clear to me either, but I guess it would be interesting if each song had its own mobile structure generated according to the song's characteristics.

The spinning of the mobile is very nice. It's really impressive that you modeled the mobile in 3D.

The continuous circular motion does not lend itself well to correlating with notes in a song.

Looks like basically an FFT over time, mapping average band strength to velocity... One problem is that once they start spinning, it's harder to see the movement of neighbors...

It's almost like replicating the movement of a music box with a model of a kinetic sculpture.

I wonder if you could correlate the speed with how frequently that pitch is played throughout the piece.

Another interesting approach might have been to grow the mobile using a simulation of organic growth that is somehow related to the data from the music piece; then perhaps the natural motion of the mobile would correlate with the piece.

LOVELY!! The moving form is my favorite part.

Well, Alexander Calder's mobiles DO move... which is why they're called "mobiles". Even though they're heavy, they swing around due to air currents.

The Echonest library calculates pitch/timbre information for each beat in the music... hence ~3000 beats for a 3-minute song. It's a very powerful music analysis kit. It sounds like you didn't spend enough time with it, though! (Or its documentation...)

Nice! Is this actual 3D, or are you "faking it"? Could a really loud, fast strong buffet this thing to pieces?

Cool visuals. I think you may have taken the Calder influence a little too strongly, for my tastes. You could use a similar but more abstract branching tree pattern, maybe.

Why random colors???

I agree a physics engine would have helped here: you would get some emergence in the patterns, and different branches would interact with one another. It would be nice to have a higher-quality render too.

I like the visual look and movement of this. I also think of wind when I think of mobiles, and of the weight of each of the different pieces. I wonder if that could be tied to the movement and size of the weights. I think you could do this with a physics library. I also love your inspiration.

I think this is an interesting project.

This looks really cool. Why is one of them blue? (You just answered, nvm.)

I am a little curious about why it always spins in one direction...

This looks really nice!! You could exaggerate the proportions of each of the "branch" pieces a little more to more closely mimic Calder, and maybe get more interesting results when the physics is applied.

Looks like it was a very interesting exploration! Also, Alexander Calder is the bomb. You should watch the videos about his circus. I could see your project going much further if you had more time!

The concept of moving mobiles in response to music is great, but I think the simple rotation isn't the best representation. As Golan just said, it's predictable, but I'm still having trouble relating it to the music. I guess if it's going to be predictable, I should be able to guess what will happen based on what I know of the song. But since Calder's mobiles are abstract to begin with, maybe more abstract influences would be more appropriate.