I’ve had enough of low-brow beer delivery robots. Clearly it is time for an impeccably dressed, charming robot sommelier!

One of the neat things about robots is that they can interact with objects, not just by sensing what's going on around them, but also by acting on the world. Manipulation depends on the robot's physical capabilities and on the physics and material characteristics of the task, and it would ideally include corrections for mistakes or the unexpected.

I spend most of my time thinking about how to design algorithms for machines to interact with people. For this project, I decided to ignore people altogether. Maybe machines just want to hang out with other inanimate objects. It is also a chance to explore a space that might help humans bond with robots… drinking wine.

My Nao robot, aka Data the Robot, is an aspiring robot actor. And as everyone knows, acting is a tough job. Lots of machines show up to casting calls, but only a few lucky electricals make it to the big screen. So obviously they need something that pays the bills along the way.

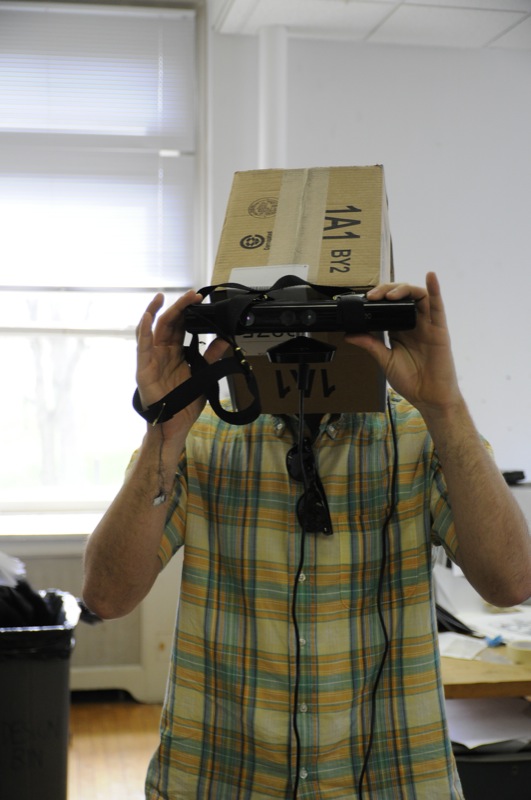

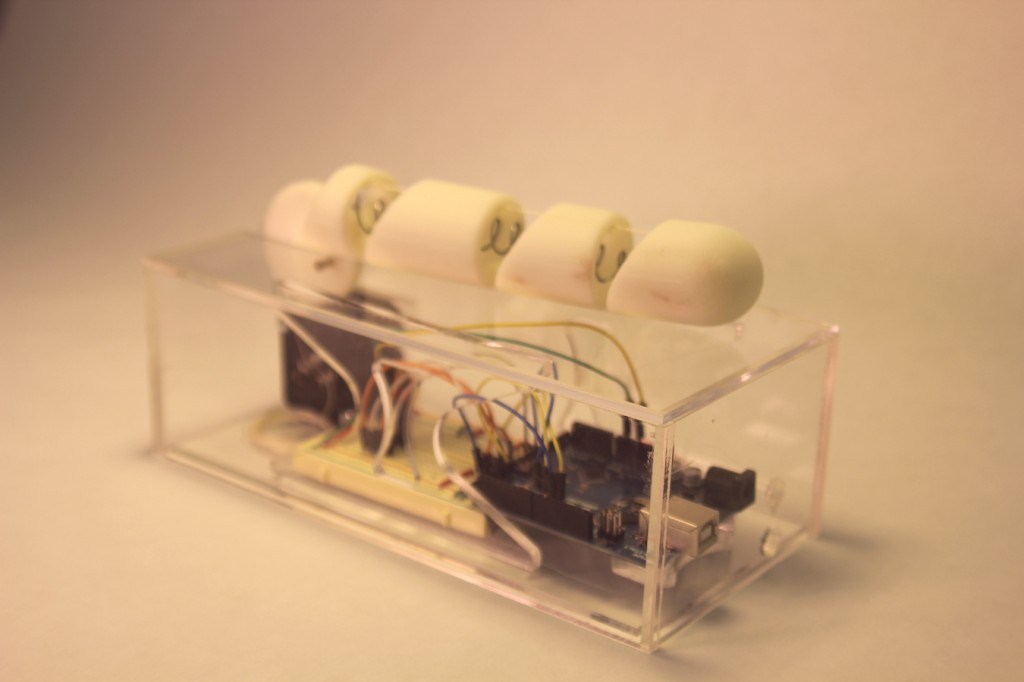

This project is an early exploration of how my robot might earn a couple of extra bucks until he gets his big break. Automation really isn't his thing, and there might be lots of human drama to learn from once the fermented grape juice starts flowing. In this initial work, I spent time exploring grasps and evaluating bottles, then decided to augment the world around the robot.

He's not quite up to real wine bottles yet. The three fingers of each hand are coupled: a single motor behind each hand tugs cables to move the springy digits. Past experience told me that the best grip would be with two hands, but it took some experimentation to figure out how to get the bottle to tilt downward.

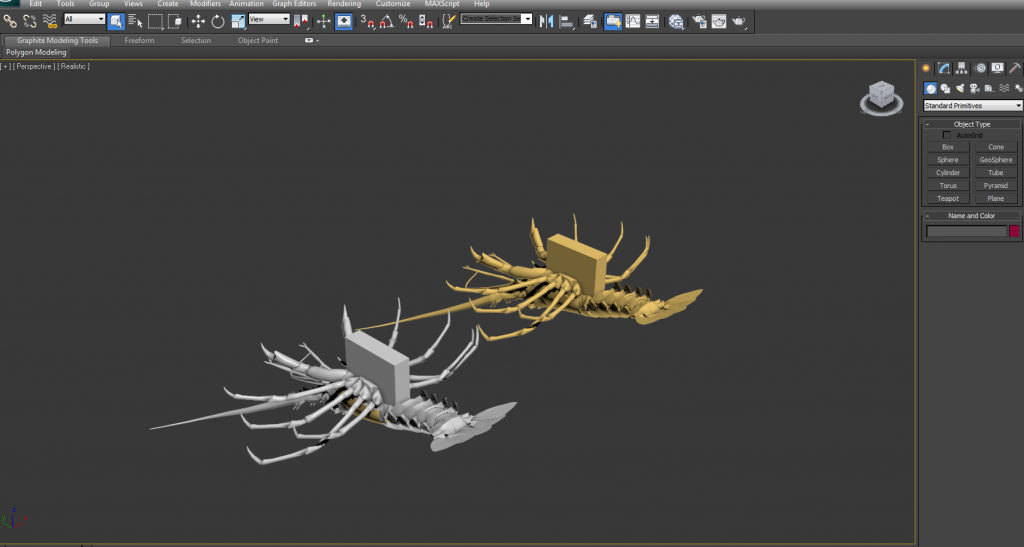

First I tried to get the bottle to tilt to the side, using one hand as the fulcrum and the other as the positioner, but there was never enough grip. Next I tried to use one arm as the fulcrum, which would have justified the napkin over his arm in the first photograph, but that was even less successful. Finally, I modified a small box to provide a cardboard fulcrum while the robot continued to use two hands. One could imagine a specialized bar with built-in apparatus for robots, or alternatively a robot that carries over a portable fulcrum before bringing the wine.

However, even that was not enough with arm motion alone. The closest I got to an (open-loop) solution, featured in the photo above, used the robot's full-body motion: crouching, bending forward, partially rising while still bent (that makes the motors very tired), then standing all the way up.

What I am most interested in modeling in this project is the pour. Even in these initial experiments I see many complexities in releasing and tipping the bottle. Grasping the lower end of the bottle tightly allowed for a stable hold through the initial descent, but when the bottle was fairly close to the fulcrum, I let it fall by loosening the robot's fingers. I posted a short video showing this sequence:

[vimeo=https://vimeo.com/39392005]
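To make the sequence a little more concrete, here is a minimal Python sketch of how the open-loop pour might be written down as data. It is not the code behind the video: the phase names follow the body motions described above, but the durations, the 0-to-1 hand-closure scale, the 3 cm release threshold, and the names `Phase`, `POUR_SCRIPT`, `grip_for_distance`, and `total_duration` are all hypothetical. The grip rule also assumes the robot has some estimate of the bottle's distance to the fulcrum, which the open-loop version did not use.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Phase:
    """One step of a hypothetical open-loop body script."""
    name: str
    duration_s: float  # placeholder timing, not measured on the robot


# Full-body motions in the order described in the post.
POUR_SCRIPT: List[Phase] = [
    Phase("crouch", 2.0),
    Phase("bend_forward", 2.0),
    Phase("partial_rise_still_bent", 3.0),
    Phase("stand_up", 2.0),
]


def grip_for_distance(distance_to_fulcrum_cm: float,
                      release_threshold_cm: float = 3.0,
                      tight: float = 1.0,
                      loose: float = 0.3) -> float:
    """Return a hand closure in [0, 1] (hypothetical scale).

    Hold the bottle tightly during the initial descent, then loosen once it
    is close to the fulcrum so it can fall onto it. The threshold and the
    closure values are guesses, not tuned parameters.
    """
    return tight if distance_to_fulcrum_cm > release_threshold_cm else loose


def total_duration(script: List[Phase]) -> float:
    """Sum the nominal durations of all phases in a script."""
    return sum(phase.duration_s for phase in script)


if __name__ == "__main__":
    for phase in POUR_SCRIPT:
        print(f"{phase.name}: {phase.duration_s} s")
    for d in (10.0, 5.0, 2.0):
        print(f"distance {d} cm -> closure {grip_for_distance(d)}")
```

Keeping the fixed body script separate from the grip rule makes it easy to swap the hand-tuned threshold for something physics-based later.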

When I tested with one finger from each of my own hands, rotation was very useful, but that could be quite difficult to reproduce on the robot. When I tested with the tips of two fingers, rotation no longer came easily, so my entire hand moved with the bottle until it was horizontal. Then I got underneath the back end of the bottle to keep tipping the front end lower and ensure flow.

Next up, adding real physics and algorithmic planning, filming with a proper wine glass and choosing *just* the right vintage. A little romance wouldn’t hurt either.