Duncan Boehle – Interaction Project

Inspiration

I often uncontrollably air-drum while listening to good music, and Kinect seemed like the perfect platform for turning my playing into sound. When I looked around online, however, I mostly found projects that don’t encourage any kind of composition or rhythm, such as simple triggers or theremin-like devices.

Here is one example of a laggy drum kit that I wanted to improve on:

[youtube=https://www.youtube.com/watch?v=47QUUqu4-0I&w=550]

Some other projects I found were really only for synthesizing noise, rather than making music:

[youtube=https://www.youtube.com/watch?v=RHFJJRbBoLw&w=550]

One Xbox 360 game in Kinect Fun Labs does encourage a bit more musicality by having the player drum along to existing music.

[youtube=https://www.youtube.com/watch?v=6hl90pwk_EE&w=550]

I decided to make my project unique by encouraging creative instrumentation and rhythm loops. The goal was to help people compose melodies or beat tracks, so they wouldn’t be limited to just playing around a little for fun.

Process

Because I wanted to control the drum sounds with my hands, I wanted to take advantage of OpenNI’s skeletal tracking rather than write custom blob detection using libfreenect or other libraries. Unfortunately, trying to use OpenNI directly with openFrameworks caused quite a few nightmares, mostly in the form of linker errors.

So rather than fight the compiler when I could be making art, I decided to use OSCeleton to get the skeleton data from OpenNI over to openFrameworks. This worked pretty well: even if openFrameworks didn’t work out, I would only ever have to worry about using an OSC library.

Unfortunately, this added a bit of delay. Even over UDP, inter-process communication is much more indirect than getting the hand joint data straight from the OpenNI library itself.
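OSCeleton sends each joint as its own message, so the openFrameworks side has to pick the hand joints out of the stream. According to OSCeleton’s README, joint messages use the address /joint with type tag sifff (joint name, user ID, then x, y, z), and the hands are named r_hand and l_hand. Check those details against the version you run. This is a minimal sketch, not the project’s actual code. It assumes the OSC library has already decoded each message into a hypothetical JointMessage struct, and it keeps the latest hand positions per user ID so several players can be tracked at once. HandRouter, Hands, and Vec3 are illustrative names only.

```cpp
#include <map>
#include <string>

// Hypothetical decoded form of one OSCeleton joint message (type tag "sifff").
struct JointMessage {
    std::string address;  // e.g. "/joint"
    std::string joint;    // e.g. "r_hand", "l_hand", "head"
    int user;             // OSCeleton user ID
    float x, y, z;        // joint coordinates as sent by OSCeleton
};

struct Vec3 { float x, y, z; };

// Latest known hand positions for one tracked player.
struct Hands {
    Vec3 left{}, right{};
    bool hasLeft = false, hasRight = false;
};

// Filters the joint stream down to hands, keyed by user ID (multiple players).
class HandRouter {
public:
    // Returns true if the message updated a hand position.
    bool handle(const JointMessage& m) {
        if (m.address != "/joint") return false;       // not a joint message
        const bool isLeft = (m.joint == "l_hand");
        const bool isRight = (m.joint == "r_hand");
        if (!isLeft && !isRight) return false;          // ignore head, elbows, etc.

        Hands& h = players_[m.user];                    // creates an entry for a new user
        const Vec3 p{m.x, m.y, m.z};
        if (isLeft) { h.left = p; h.hasLeft = true; }
        else        { h.right = p; h.hasRight = true; }
        return true;
    }

    const std::map<int, Hands>& players() const { return players_; }

private:
    std::map<int, Hands> players_;
};
```

Keying by user ID means a second player who steps in simply gets a new map entry, and every frame’s trigger logic can iterate over `players()`.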

The first step was to figure out how to detect hits on the instruments. The data was initially very noisy, so I had to average the positions, velocities, and accelerations over time to get something close to smooth. I graphed the velocity and acceleration over time to make sure I could distinguish my hits from random noise. I also enabled skeleton tracking for multiple players.
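The smoothing step can be sketched as a fixed-window moving average applied in three stages. The position is averaged first. Velocity and acceleration are then derived by finite differences and averaged again, because differencing amplifies noise. The post doesn’t give its window size or exact filter. MovingAverage, AxisFilter, the window length, and the frame time `dt` are all assumptions here, and a moving average is just one plausible choice of smoother.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Mean of the most recent `window` samples (fewer while the buffer fills up).
class MovingAverage {
public:
    explicit MovingAverage(std::size_t window) : window_(window) { assert(window > 0); }

    float add(float sample) {
        samples_.push_back(sample);
        sum_ += sample;
        if (samples_.size() > window_) {  // drop the oldest sample
            sum_ -= samples_.front();
            samples_.pop_front();
        }
        return static_cast<float>(sum_ / static_cast<double>(samples_.size()));
    }

private:
    std::size_t window_;
    std::deque<float> samples_;
    double sum_ = 0.0;
};

// Smooths one hand coordinate, then derives velocity and acceleration by finite
// differences; each derivative is smoothed again since differencing amplifies noise.
class AxisFilter {
public:
    AxisFilter(std::size_t window, float dt)
        : pos_(window), vel_(window), acc_(window), dt_(dt) {}

    void update(float raw) {
        const float p = pos_.add(raw);
        if (hasPos_) {
            // position_ still holds the previous smoothed position here
            const float v = vel_.add((p - position_) / dt_);
            if (hasVel_) acceleration_ = acc_.add((v - velocity_) / dt_);
            velocity_ = v;
            hasVel_ = true;
        }
        position_ = p;
        hasPos_ = true;
    }

    float position() const { return position_; }
    float velocity() const { return velocity_; }
    float acceleration() const { return acceleration_; }

private:
    MovingAverage pos_, vel_, acc_;
    float dt_;  // seconds per frame, e.g. 1/30 for the Kinect's 30 fps
    float position_ = 0.0f, velocity_ = 0.0f, acceleration_ = 0.0f;
    bool hasPos_ = false, hasVel_ = false;
};
```

The trade-off is latency. On a steadily moving hand, an N-frame moving average lags by (N-1)/2 frames. With a 3-frame window and a ramp of 0, 1, ..., 9, the smoothed position reads 8 instead of 9. At 30 fps, a 5-frame window costs about 67 ms per stage, and chaining position, velocity, and acceleration stages stacks those delays.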

For the first version of the beat kit, I tried using a threshold on acceleration to trigger sounds. Even though this seemed more natural based on my own air-drumming habits, the combined latency of Kinect processing, OSC, and the smoothed acceleration derivation made it feel very unresponsive. For now, I fell back on the common strategy of spatial collision triggers.
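A spatial collision trigger can be sketched as an axis-aligned box per instrument, checked against each hand every frame. The sound fires only on the frame the hand enters the box. Resting a hand inside the box therefore doesn’t retrigger it every frame, and pulling the hand out re-arms the pad. This is an illustrative sketch, not the project’s code. Pad, PadTrigger, Point3, and the box coordinates are hypothetical, and you would keep one PadTrigger per pad per hand.

```cpp
#include <string>
#include <utility>

struct Point3 { float x, y, z; };

// Hypothetical instrument pad: an axis-aligned box in tracker coordinates.
struct Pad {
    std::string sound;  // hypothetical sample name, e.g. "snare"
    Point3 lo, hi;      // opposite corners of the box

    bool contains(const Point3& p) const {
        return p.x >= lo.x && p.x <= hi.x &&
               p.y >= lo.y && p.y <= hi.y &&
               p.z >= lo.z && p.z <= hi.z;
    }
};

// Fires once when a hand enters the pad, not on every frame it stays inside.
class PadTrigger {
public:
    explicit PadTrigger(Pad pad) : pad_(std::move(pad)) {}

    // Returns true only on the frame the hand crosses into the box.
    bool update(const Point3& hand) {
        const bool inside = pad_.contains(hand);
        const bool hit = inside && !wasInside_;  // rising edge
        wasInside_ = inside;
        return hit;
    }

    const std::string& sound() const { return pad_.sound; }

private:
    Pad pad_;
    bool wasInside_ = false;
};
```

Unlike the acceleration threshold, this check uses raw or lightly smoothed positions. It doesn’t wait on two rounds of differencing, which is why it tends to feel more immediate.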

Here’s the video of the first version, with all of the debug data visible in the background.

[vimeo 39395149 w=550&h=412]
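The post doesn’t describe how the rhythm loops are implemented, although one of the comments credits quantization for making this kit more musical than other Kinect drum machines. One common approach, assumed here, is to snap each hit to the nearest step of a fixed-tempo loop and replay that step on every pass. QuantizedLoop, the tempo, and the step counts are hypothetical. This is a minimal sketch, not the project’s method.

```cpp
#include <cmath>
#include <string>
#include <vector>

// Hypothetical fixed-length step loop (e.g. 16 sixteenth-note steps at 120 BPM).
class QuantizedLoop {
public:
    QuantizedLoop(double bpm, int stepsPerBeat, int steps)
        : stepSeconds_(60.0 / bpm / stepsPerBeat), grid_(steps) {}

    // Snap a hit at time t (seconds since the loop started) to the nearest step,
    // wrapping so a hit just before the loop end lands on step 0.
    int stepFor(double t) const {
        const long n = std::lround(t / stepSeconds_);
        const long size = static_cast<long>(grid_.size());
        return static_cast<int>(((n % size) + size) % size);
    }

    // Record a hit so it replays on every pass through the loop.
    void record(double t, const std::string& sound) {
        grid_[stepFor(t)].push_back(sound);
    }

    // Sounds to play when playback reaches step s.
    const std::vector<std::string>& soundsAt(int s) const { return grid_[s]; }

private:
    double stepSeconds_;
    std::vector<std::vector<std::string>> grid_;
};
```

Snapping to the nearest step also hides some of the tracking latency from the listener. A hit that arrives a few tens of milliseconds late still lands on the intended step, as long as the delay is under half a step (62.5 ms at 120 BPM with sixteenth notes).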

Looking Forward

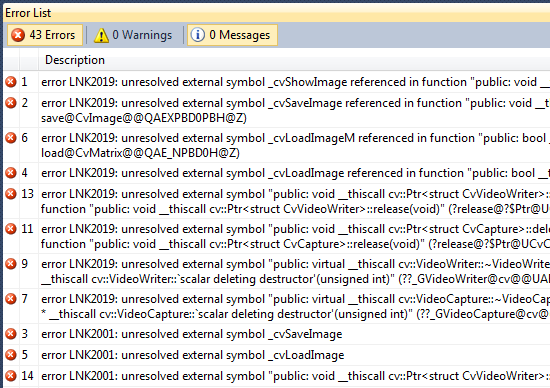

I was very frustrated by the linker errors that forced me to use OSC for getting the Kinect data. Immediately after the presentation I found Synapse, an open-source openFrameworks application that, like OSCeleton, uses OpenNI for tracking. Using that codebase as a starting point, I could have just one process consuming the Kinect data. That might remove some of the latency that kept me from using acceleration or velocity thresholds effectively. I could also greatly improve the UI and instrument layout, and add more controls for changing instruments and modifying the looping tracks.

Nice choice of sounds, would love to play around with this. Also, it’d be great to record people’s movement when they interact; that could make for a cool second project.

This is a very nice project. Looks like a lot of fun to play with. I think you could use fewer instruments, so that players don’t have to look as much and can just get a feel for where the elements of the kit are.

As someone who probably annoys his officemates with his air drumming, I really like the idea of this project. The thing I think is possibly awkward is that the orientation of the controls loses the feel of drumming. But as a new kind of performance percussion instrument, I love it. In fact, it’s possibly more entertaining this way than as a plain air drum kit.

I want one!

This reminds me of a project (a game) that my friend made with Kinect: https://www.youtube.com/watch?feature=player_embedded&v=_GiYWqM-hMc

And I think recording the sequence is what makes the game.

Great that you also thought about the visual feedback from the instrument labels.

Haha, clearly this has been very fun for all of us. You could work on the interface a bit.

A good, cheap, accessible, go-anywhere drum set is perfect for kit drummers who are always playing wherever they are. Sitting or standing, drummers are always tapping away. Could this become a mobile, wearable device (like the third-person-perspective head cam) so drummers can actually hear different percussion sounds? AR.

Nice story building: it sets the expectations for the project and makes it interesting. I also like the inspiration videos.

Yeah…acceleration data is notoriously noisy, whether from an image or from an accelerometer.

The quantization is what really makes this “better” (from a musical perspective) than the other Kinect drum machines you showed

It would be nice to have longer loops or the ability to switch patterns (without a full reset)

But as Golan said, there are a lot of directions you can extend this in.

For the final version of this… it could be cool to record the music/beats created and, if users liked the result, give them the option to print out the score for what they just created.