HeatherKnight – Sommelier – Project4

I’ve had enough of low-brow beer delivery robots. Clearly it is time for an impeccably dressed, charming robot sommelier!

One of the neat things about robots is that they can interact with objects, not just by sensing what’s going on around them, but also by acting on the world. Manipulation depends on the robot’s physical capabilities and on the physics and material characteristics of the task, and it would ideally include corrections for mistakes or the unexpected.

I spend most of my time thinking about how to design algorithms for machines to interact with people. For this project, I decided to ignore people altogether. Maybe machines just want to hang out with other inanimate objects. It is also a chance to explore a space that might help humans bond with robots… drinking wine.

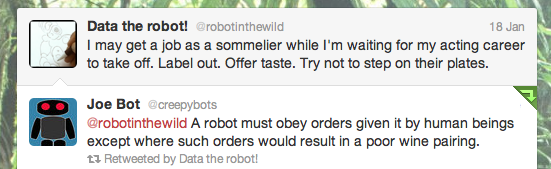

My Nao robot, aka Data the Robot, is an aspiring robot actor. And as everyone knows, acting is a tough job. Lots of machines showing up at the castings, but only a few lucky electricals make it to the big screen. So obviously they need something that pays the bills along the way.

This project is an early exploration of how my robot might earn a couple extra bucks until he gets his big break. Automation really isn’t his thing, and there might be lots of human drama to learn from once the fermented grape juice starts flowing. In this initial work, I spent time exploring grasps and evaluating bottles, then decided to augment the world around the robot.

He’s not up to real wine bottles quite yet. The three fingers of each hand are coupled, controlled by a single motor behind each hand, which tugs cables to move the springy digits. Past experience told me that the best grip would be with two hands, but it took some experimentation to figure out how to get the bottle to tilt downward.

First I tried to get the bottle to tilt to the side, using one hand as the fulcrum and the other as the positioner, but there was never enough grip. Next I tried to use one arm as the fulcrum, giving excuse to the napkin over his arm in the initial photograph, but that was even less successful. Finally, I modified a small box to provide a cardboard fulcrum while the robot continues to use two hands. One could imagine a specialized bar with built-in apparatus for robots, or conversely the robot carrying a portable fulcrum over before bringing the wine.

However, even that was not sufficient with arm motion alone. The closest I got to an (open-loop) solution, featured in the photo above, used the robot’s full body motion: crouching, bending forward, partially rising while still bending (that makes the motors very tired), then rising all the way up.

What I am most interested in modeling in the project is the pour. Even in these initial experiments I see many complexities in releasing and tipping the bottle. Grasping the lower end of the bottle tightly allowed for a stable hold through the initial descent, but when the bottle was fairly close to the fulcrum, I let it fall by loosening the robot’s fingers. I posted a short video showing this sequence:

[vimeo=https://vimeo.com/39392005]

When I was testing with one finger from each of my own hands, rotation was very useful, but that could be quite difficult on the robot. When I tested with the tips of two fingers, rotation no longer came easily, so my entire hand moved with the bottle until it was in the horizontal position, then I got underneath the back side of the bottle to continue tipping the front side lower and ensure flow.

Next up, adding real physics and algorithmic planning, filming with a proper wine glass and choosing *just* the right vintage. A little romance wouldn’t hurt either.

I’m glad you took this assignment as an opportunity to connect/push your doctoral research. That’s ideal.

This robot is kind of hot. What research was done to develop the emotional expression of R2-D2? I think it has been a topic of discussion that R2-D2 has a tremendous amount of expression based on very simple movements, lights, and mostly sound. What’s most interesting about it is that there was no need to fully anthropomorphise R2-D2, and we still have emotional response to ‘him’, or ‘her’, or it. +1 This is further reinforced by the fact that the anthropomorphic C3PO receives much less empathy. Do we feel less empathic toward him because he is annoying, or because it’s normal and acceptable to dislike certain ‘people’?

Would love to see more of this robot stuff in your future work.

^^ I agree!

I am not sure I understood if there’s a logic or relation to any kind of input the robot gets. Or are the narrations you gave during the presentation just your interpretation of the robot’s randomly generated facial / “body” expressions.

I think there’s a lot of potential in where this project is going and understand there is a lot of work that goes into getting the responses and simulated emotions to be realistic. I think it could be interesting to see this very non-human robot emulating human interaction. There’s something creepy and intriguing about that.

Tank has a girlfriend?!

Thank god our robot overlords have finally arrived.

http://www.iwatchstuff.com/2011/02/14/great-gatsby-game.jpg

Eyebrows. Eyebrows are the easiest way to express changes of/give off emotion and I think they will help a lot. Eyes and mouth are a little more subtle. (http://ars.sciencedirect.com/content/image/1-s2.0-S0165032797001122-gr1.gif)

^^ good point

^^ Agreed (somewhat related video: https://vimeo.com/5552957)

So are you shifting from a state machine to a gradient space? So you need some kind of other system to tell it when to shift from emotion to emotion, and how far down that shift you are. So right now you have personal space. Maybe they could respond to your expression, noise… oh god this is a huge project.

Her reactions remind me of some girls I know.^ ^ lol

Humans trying to understand the generative emotions is really intriguing. Though I wish you had explained it more in the beginning. At the same time, I did find myself trying to read the emotions of the robot because it simply resembled a human face. I wonder if there would have been a different effect with a more abstract face (like a Furby). You’re right about the story aspect,

Do you listen to radioLab? If not, check out this podcast, it’s realllyyy interesting: “Talking to Machines” http://feeds.wnyc.org/radiolab

+1 for RadioLab

Explanation would be made more clear with diagrams. When you said that you were maneuvering through quadrangles, I initially thought you meant a 2D “emotion-space” (happy-sad-anger-fear) rather than actual physical quadrangles (NE-NW-SW-SE).

The occasional eye-rolling is pretty funny.

This is a good start in terms of creating the illusion of a rich interior emotional life of the robot. The main limitation now to my emotional suspension of disbelief, IMHO, is the linearity of the movements (stop-start-stop) without easing.
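A minimal sketch of the easing idea, in Python. It assumes a single servo angle is being interpolated between two poses; the function names and the smoothstep curve are illustrative choices, not anything taken from the robot's actual code.

```python
def ease_in_out(t: float) -> float:
    """Smoothstep easing: 0 at t=0, 1 at t=1, with zero slope at both ends."""
    t = max(0.0, min(1.0, t))  # clamp so callers can overshoot safely
    return t * t * (3.0 - 2.0 * t)


def eased_path(start: float, end: float, steps: int) -> list[float]:
    """Positions from start to end over `steps` intervals, eased at both ends."""
    return [start + (end - start) * ease_in_out(i / steps) for i in range(steps + 1)]


if __name__ == "__main__":
    # Hypothetical eyebrow servo moving from 0 to 40 degrees in 8 frames.
    for angle in eased_path(0.0, 40.0, 8):
        print(f"{angle:6.2f}")
```

The frames near the start and end are closer together than the ones in the middle, so the motion accelerates and decelerates rather than going stop-start-stop.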

I wonder if you can use genetic algorithms and input from people to see what stories align with emotions, to see if there’s an optimal emotion-face set for a particular emotion, or even tie words to the movements she’s making.

What kind of random walk model are you using? Is it kind of like a first-order Markov chain? The point is to get away from states, but I think you need some kind of general state space (e.g. think of a state as a square or circle in the continuous space instead of a fixed point) to really tell stories. If you have state spaces, then you could look into higher order chains to try and make the stories more evident and cover a large spectrum of emotions. While you can project stories onto the motions you have, I still got the sense in many of the videos that the face was just randomly walking back and forth between happy and sad.
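One way to picture the "state as a region" idea is sketched below in Python. Everything here is an assumption for illustration: the unit-square emotion space, the four region names, their centers and radii, and the uniform random step. It is not the model used in the project.

```python
import math
import random

# Hypothetical circular regions in a unit emotion space: (center_x, center_y, radius).
REGIONS = {
    "happy": (0.8, 0.8, 0.25),
    "sad": (0.2, 0.2, 0.25),
    "angry": (0.2, 0.8, 0.25),
    "calm": (0.8, 0.2, 0.25),
}


def region_of(x, y):
    """Return the name of the region containing (x, y), or None if in between."""
    for name, (cx, cy, r) in REGIONS.items():
        if math.hypot(x - cx, y - cy) <= r:
            return name
    return None


def random_walk(steps, step_size=0.05, seed=None):
    """Continuous random walk starting at the center, clamped to the unit square."""
    rng = random.Random(seed)
    x, y = 0.5, 0.5
    path = []
    for _ in range(steps):
        x = min(1.0, max(0.0, x + rng.uniform(-step_size, step_size)))
        y = min(1.0, max(0.0, y + rng.uniform(-step_size, step_size)))
        path.append((x, y))
    return path


def story(path):
    """Sequence of regions visited, skipping gaps and collapsing repeats."""
    labels = []
    for x, y in path:
        name = region_of(x, y)
        if name is not None and (not labels or labels[-1] != name):
            labels.append(name)
    return labels


if __name__ == "__main__":
    print(story(random_walk(2000, seed=7)))
```

The face still moves continuously, but the region labels give a discrete sequence that a higher-order chain could later be fitted to or steered by.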

I think that understanding emotion is really interesting in the realm of autism research, I wonder if the generative nature of your robot’s emotions could be used for autism therapy

Maybe using non-linear navigation through the state space would give more human results? Not sure if this would work, but maybe you could use some kind of physics engine to drive the facial expressions, like there is a ‘gravity’ pulling the robot towards a certain pole of the emotion space, and the other elements of its face follow in tow. I feel like our emotional states and our various facial components have a certain inertia to them. Experimenting with algorithms along this route might be worthwhile. I really like your line of inquiry here. Reminds me of Cynthia Breazeal and her crazy furby bot. This is less creepy, the motor sounds and abstract face make it seem more honest.
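A rough Python sketch of the "gravity plus inertia" suggestion, modeled as a damped spring. The `Feature` class, the stiffness and damping values, and the mouth/brow pairing are all hypothetical, chosen only to show one feature being pulled toward a target while another trails behind it.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    """One facial degree of freedom with position and velocity (hypothetical)."""
    position: float = 0.0
    velocity: float = 0.0


def step(feature, target, stiffness=20.0, damping=6.0, dt=0.02):
    """Advance one semi-implicit Euler step of a damped spring toward target."""
    accel = stiffness * (target - feature.position) - damping * feature.velocity
    feature.velocity += accel * dt
    feature.position += feature.velocity * dt
    return feature.position


if __name__ == "__main__":
    mouth = Feature()
    brow = Feature()
    for frame in range(100):
        step(mouth, 1.0)             # mouth is pulled toward the "happy" pole
        step(brow, mouth.position)   # brow follows the mouth, so it lags behind
        if frame % 20 == 0:
            print(f"frame {frame:3d}  mouth={mouth.position:.3f}  brow={brow.position:.3f}")
```

Because each feature carries velocity, a change of target produces a gradual swing rather than an instant jump, and chained features arrive slightly later than the one they follow.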

So, the interesting thing here for me is the human perception of what these random motions mean. The robot is extremely expressive, or appears to be. I think adding your own commentaries or having a series of people dictate their emotional interpretation would add depth to your documentation and could be pretty funny.

did the robot consume all the champagne in the background?

I think teeth would be helpful in showing emotion. In your thought process about the fake smile, it is hard to read into a fake smile. I think how much of the teeth show, and how they overlap, tells you whether a person’s smile is real or fake.

the eyerolls are really good and add the most expression I think.

Does random state generation constitute a generative algorithm? Or is the generative algorithm in our heads–psychological interpretation… I think this is the most interesting thing that even with total randomness, we’re able to come up with interpretations and narratives.+1

I think a few iconic states with a finite state machine and weighted transitions between states may be one way to explore it.
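As a sketch of what that could look like in Python: the state names and weights below are made up for illustration, and picking the next state from weights that depend only on the current state is the same thing as a first-order Markov chain.

```python
import random

# Hypothetical iconic states with weighted transitions (weights per row sum to 1).
TRANSITIONS = {
    "neutral": {"happy": 0.5, "sad": 0.3, "eye_roll": 0.2},
    "happy": {"neutral": 0.7, "eye_roll": 0.3},
    "sad": {"neutral": 0.8, "happy": 0.2},
    "eye_roll": {"neutral": 1.0},
}


def next_state(state, rng):
    """Pick the next state using the weights attached to the current one."""
    options = TRANSITIONS[state]
    return rng.choices(list(options), weights=list(options.values()), k=1)[0]


def run(start, steps, seed=None):
    """Generate a sequence of states of length steps + 1, beginning at start."""
    rng = random.Random(seed)
    states = [start]
    for _ in range(steps):
        states.append(next_state(states[-1], rng))
    return states


if __name__ == "__main__":
    print(" -> ".join(run("neutral", 12, seed=4)))
```

Tuning the weights changes how often each expression shows up, and easing between the poses for consecutive states would keep the motion from looking mechanical.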

Agree about implementing easing as golan suggested.

i like her eye movements, they are very expressive. it is kind of hard to really get a sense of how it is to interact with it, it seems like more of a personal experience?

^ I agree – the pupils and blinking make it seem much more human, even if everything else doesn’t look human.

it’s really interesting to deal with the dexterity limitations of the robot, something I never really thought about before.

It’s very fun to see your robotics work. It looks like a very interesting (and important) problem to try to figure out how to get your robot to pour you wine.

At last, a practical use for robots!

I like robots and food and wine and things. that’s about all I’ve got on this.

i don’t understand why you have water in the cup in the video- where did it come from? you should try putting water in the bottle to make it more convincing, there are also issues with feeling the water flow through the bottle and feedback that we as humans have when we pour. i wonder how the robot would do with that

good to know that IACD is contributing to alcoholics everywhere. And they say electronic art isn’t accessible to the masses.

A journey of a thousand drinks begins with a single robo-step.

Interesting how much of the problem is related to the specific design (biology) of your robot.

But it’s clear you’ve learned a ton about how to pour a bottle.

You didn’t get into “why data” rather than finding a more capable robot<-- he needs a job :-] Nice stuff, I'd like to see more on the interaction end. Maybe show a cheeky voice command beginning the automation in your video.