For this blog post, I wanted to go into detail about GANbreeder (soon to be renamed Artbreeder), my favorite software art tool/project and one I have used extensively. GANbreeder is a web application developed by Joel Simon, a BCSA alum, that allows users to interact with the latent space of BigGAN.

BigGAN is a generative adversarial network (GAN) trained at a much larger scale than earlier GANs, with more parameters and larger training batches. As a result, its images achieve a higher Inception score, a metric that rewards both how recognizable and how diverse a generative network's output is. On GANbreeder, users can mix "genes" (ImageNet categories such as 'Siamese cat' and 'coffeepot'), and BigGAN will create novel images within the latent space between those categories. By "editing genes" (adjusting sliders), the user can change how heavily each category is weighted in the input the network is conditioned on. The user can also "crossbreed" two earlier GANbreeder creations to mix their categories into one image, or spawn "children": randomized variations on the gene values that tweak the image slightly.
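To make the "genes" idea a little more concrete, here is a minimal Python sketch of what crossbreeding and spawning children could look like, assuming a genome is simply a set of category weights plus a latent noise vector. This is not GANbreeder's actual code: the `crossbreed` and `spawn_child` functions, the dictionary layout, and the Gaussian mutation step are all hypothetical, and the step where these values are fed into the BigGAN generator is left out entirely.

```python
import random

# Hypothetical genome layout (an assumption, not GANbreeder's real format):
#   "classes": ImageNet category name -> weight (weights sum to 1)
#   "z":       a short latent noise vector


def normalize(weights):
    """Rescale category weights so they sum to 1 (left unchanged if all are zero)."""
    total = sum(weights.values())
    if total <= 0:
        return dict(weights)
    return {name: w / total for name, w in weights.items()}


def crossbreed(a, b, t=0.5):
    """Linearly blend two genomes: t=0 returns a's genes, t=1 returns b's."""
    names = sorted(set(a["classes"]) | set(b["classes"]))
    mixed = {
        name: (1 - t) * a["classes"].get(name, 0.0) + t * b["classes"].get(name, 0.0)
        for name in names
    }
    z = [(1 - t) * za + t * zb for za, zb in zip(a["z"], b["z"])]
    return {"classes": normalize(mixed), "z": z}


def spawn_child(parent, strength=0.1, rng=random):
    """Make a slightly mutated copy by adding Gaussian noise to every gene."""
    classes = {
        name: max(0.0, w + rng.gauss(0, strength))  # weights can't go negative
        for name, w in parent["classes"].items()
    }
    z = [v + rng.gauss(0, strength) for v in parent["z"]]
    return {"classes": normalize(classes), "z": z}


if __name__ == "__main__":
    cat = {"classes": {"Siamese cat": 1.0}, "z": [0.0, 1.0]}
    pot = {"classes": {"coffeepot": 1.0}, "z": [2.0, -1.0]}
    hybrid = crossbreed(cat, pot, t=0.5)
    print(hybrid["classes"])  # {'Siamese cat': 0.5, 'coffeepot': 0.5}
    child = spawn_child(hybrid, strength=0.1, rng=random.Random(1))
    print(child["classes"])
```

Linear blending is just the simplest choice for the sketch; a real tool might mix the latent vectors differently or clamp the mutation size to keep children recognizable.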

What I find most inspiring about GANbreeder as a project is the magical, surreal quality of the images that come out of it. These dreamlike images provoke responses ranging from awe to fear, and they call into question our straightforward sense of how meaning is created in the mind. Perceptual information in the visual system provokes memories and emotions in other parts of the brain. But where in this process does a slightly misshapen dog make us feel so deeply uncomfortable?

As a tool, GANbreeder is inspiring because it democratizes a cutting-edge technology: the user doesn't have to write a single line of code, much less hold a graduate degree in ML. I've been interested in AI art since high school, but coding doesn't come naturally to me, so I have this project to thank for keeping my interest alive and helping me get a sense of what work I want to make.

From a conceptual standpoint, GANbreeder raises complicated questions about authorship. I chose the categories that make up 'my' creations and messed with sliders, but who 'made' the resulting image? Was it me, Joel Simon, the researchers who developed BigGAN, or the network itself? What about Ian Goodfellow, who is said to have 'invented' GANs in 2014, or all the researchers going back to the early days of AI? Consider the dispute between Danielle Baskin, an artist and active GANbreeder user, and Alexander Reben, who had a near-exact copy of one of Baskin's generated 'works' painted through a commissioned painting service. At the time, GANbreeder was anonymous, but Simon has since implemented user profiles. It's not clear whether this will settle the question of authorship or merely complicate it further. As the case of Edmond de Belamy showed, any given person or group's ownership of AI artwork is tenuous at best.

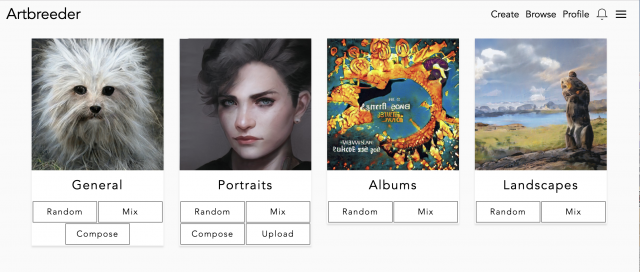

Simon is currently at work on a GANbreeder overhaul. Not only will the project be renamed Artbreeder, it will also expand to include more categories, an improved ML approach (BigGAN-deep), and specific models for better generation of album covers, anime faces, landscapes, and portraits. I'm in the Artbreeder beta, and I still think the standard BigGAN model ('General') produces the most exciting images. Maybe it's because the lack of commonality between the categories leads to weirder and more unexpected imagery. But overall, as a sort of participatory, conceptual AI art project, GANbreeder is one of my favorite things created in the last two years.

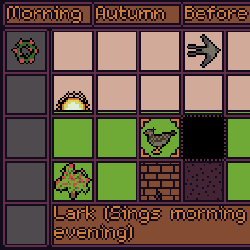

Here's a collection of the GANbreeder creations I'm most satisfied with (I like to make weird little animals).

There isn't a singular artist who I would say is making the 'best' GANbreeder work, but you can find great stuff on the Reddit page or the featured page on the site.