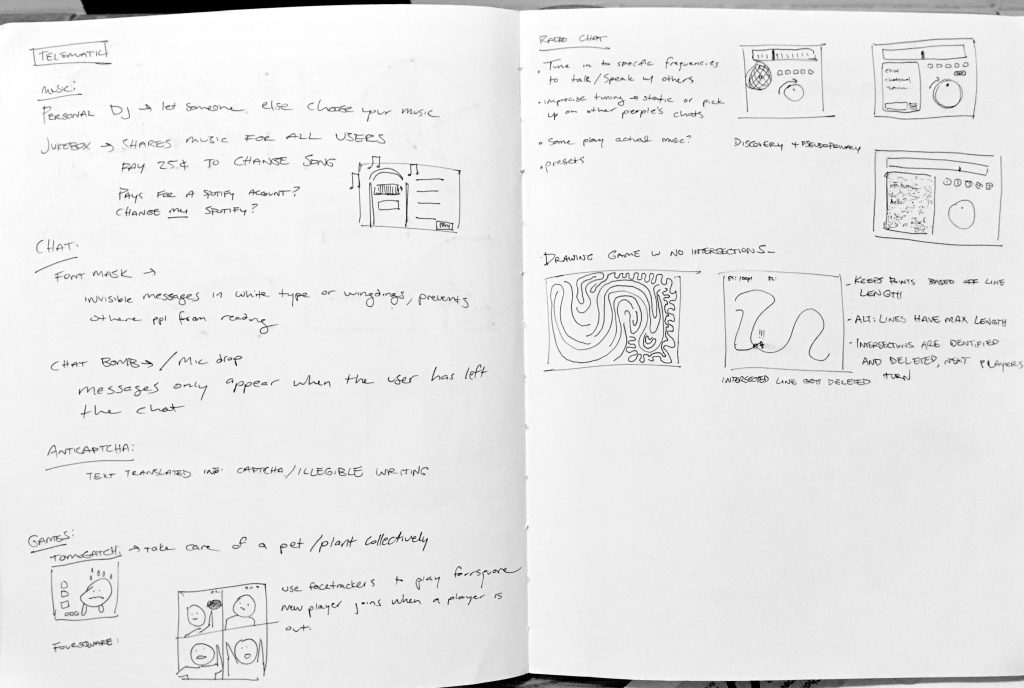

Moood is a collaborative listening(ish) experience that connects multiple users to each other and to Spotify, using socket.io, node.js, p5.js, and Spotify's APIs.

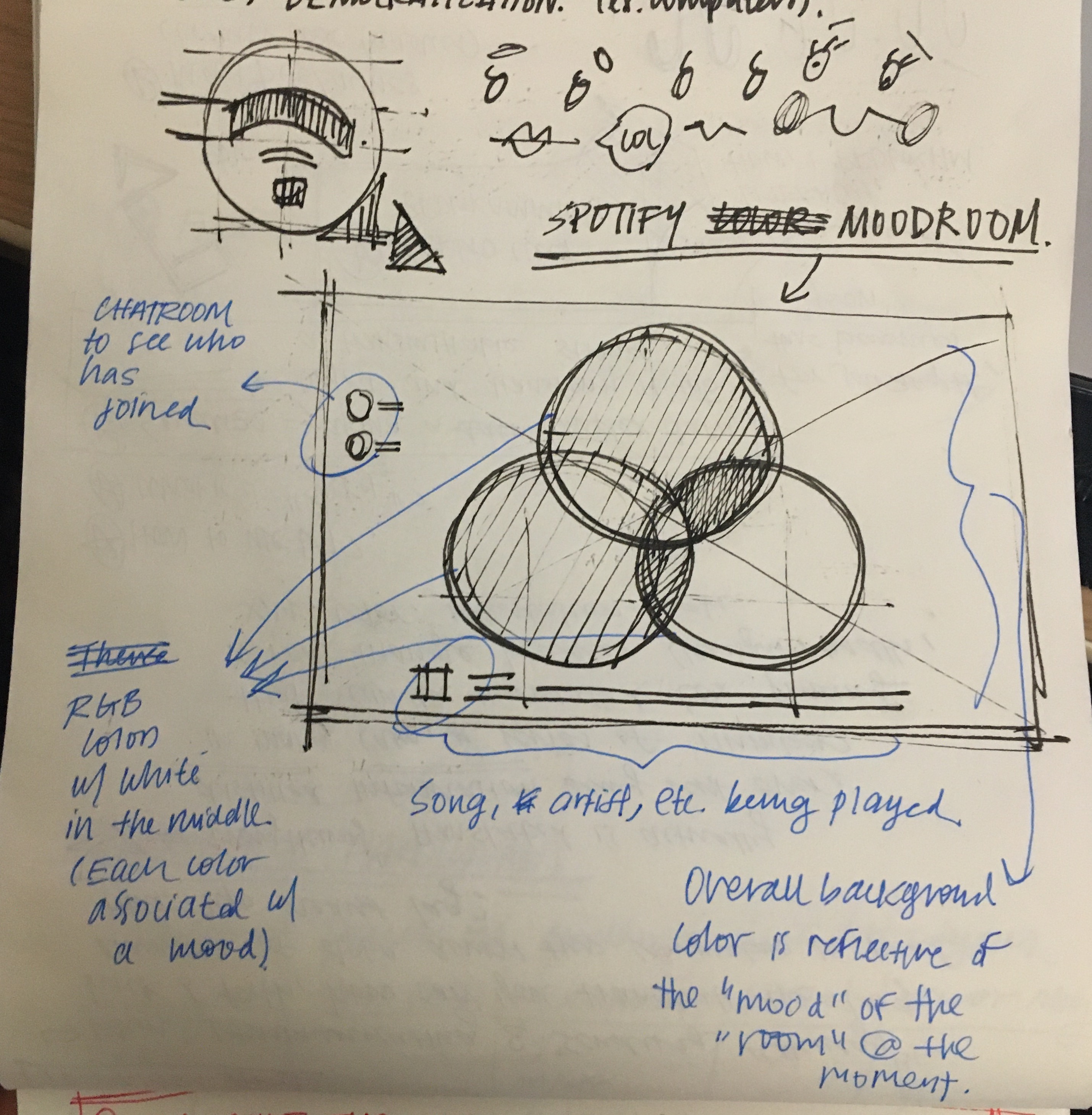

In this "group chatroom" of sorts, users remotely and asynchronously input information (song tracks), and each has an equal ability to influence the power (color, or mood) of the room.

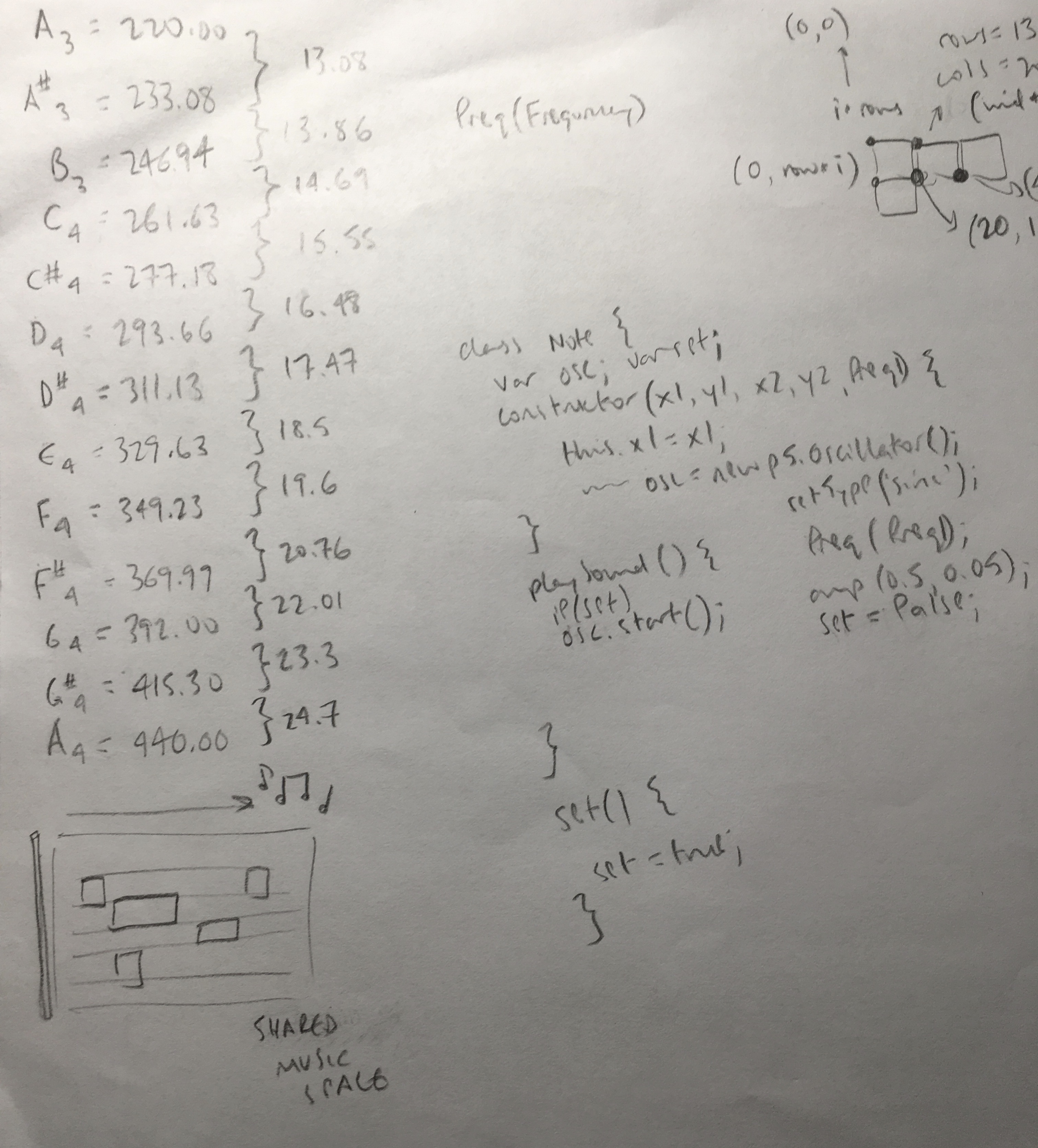

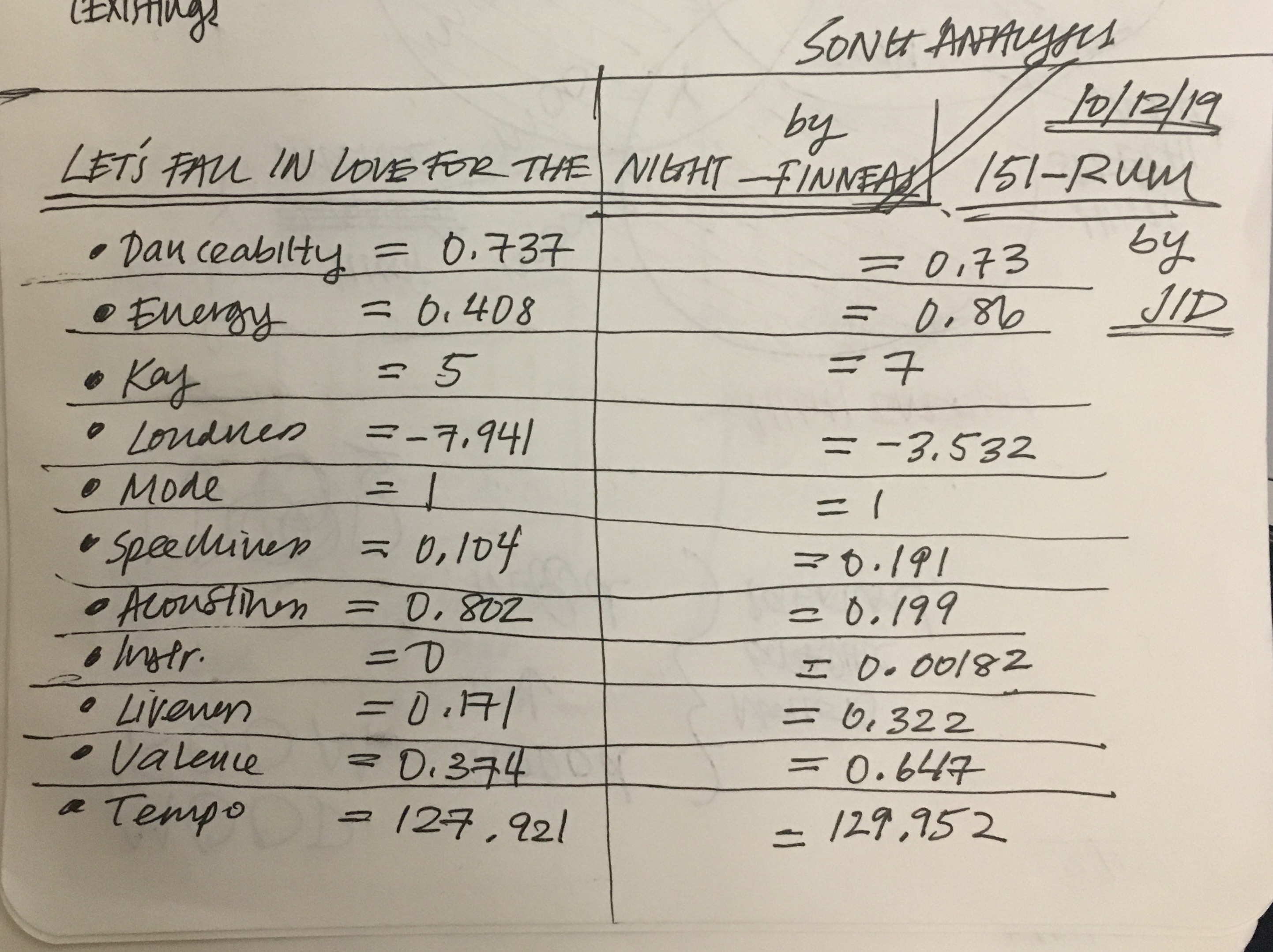

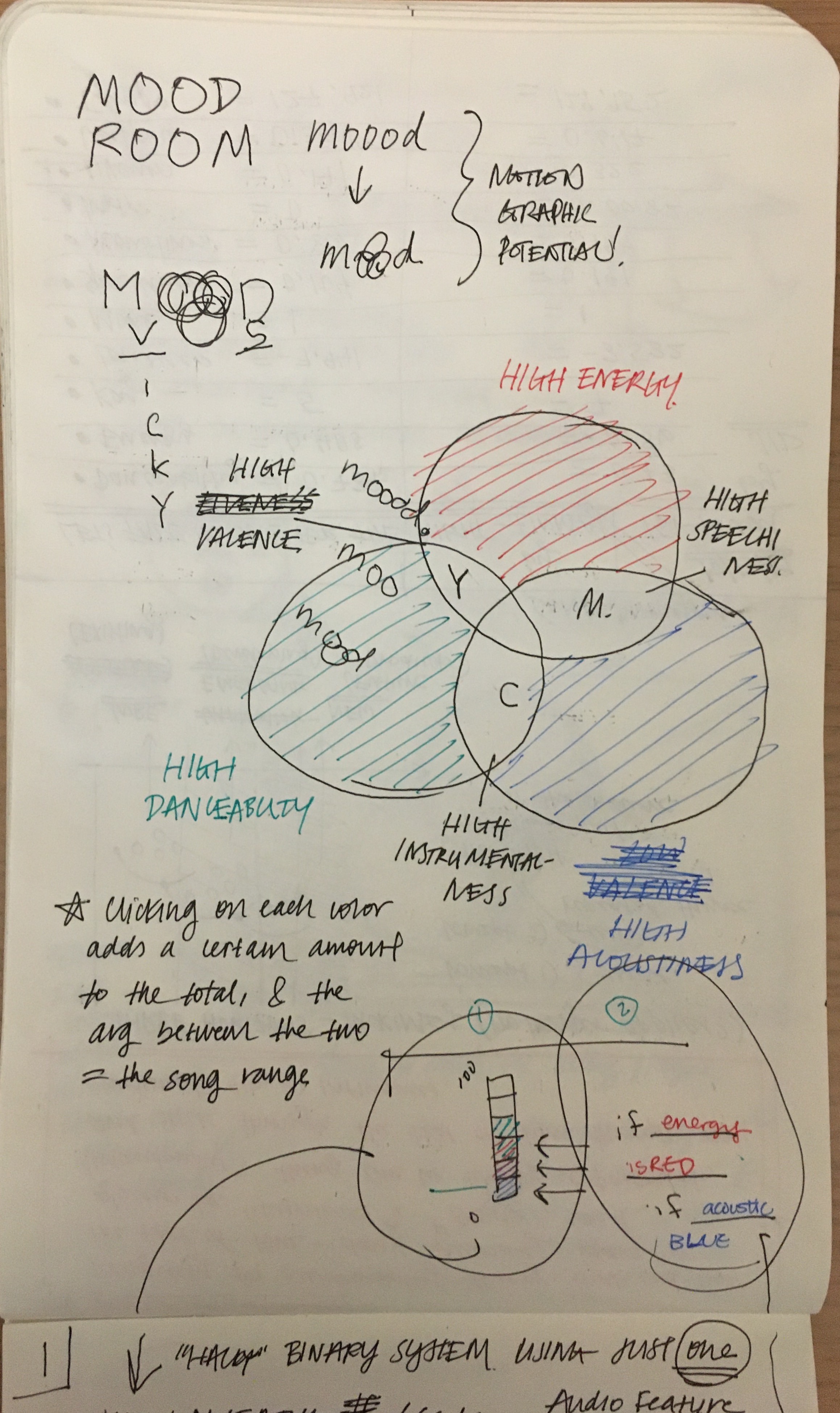

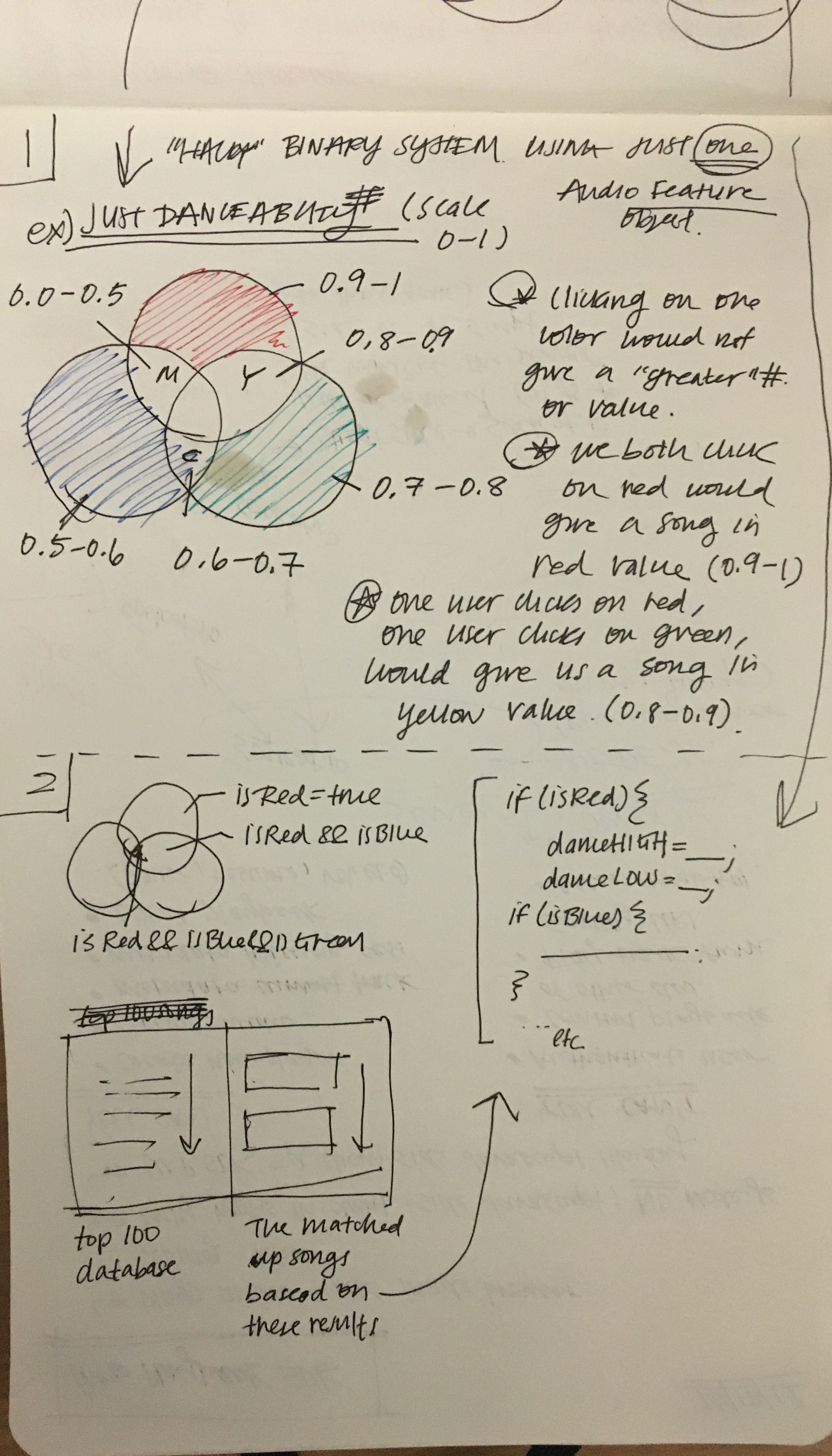

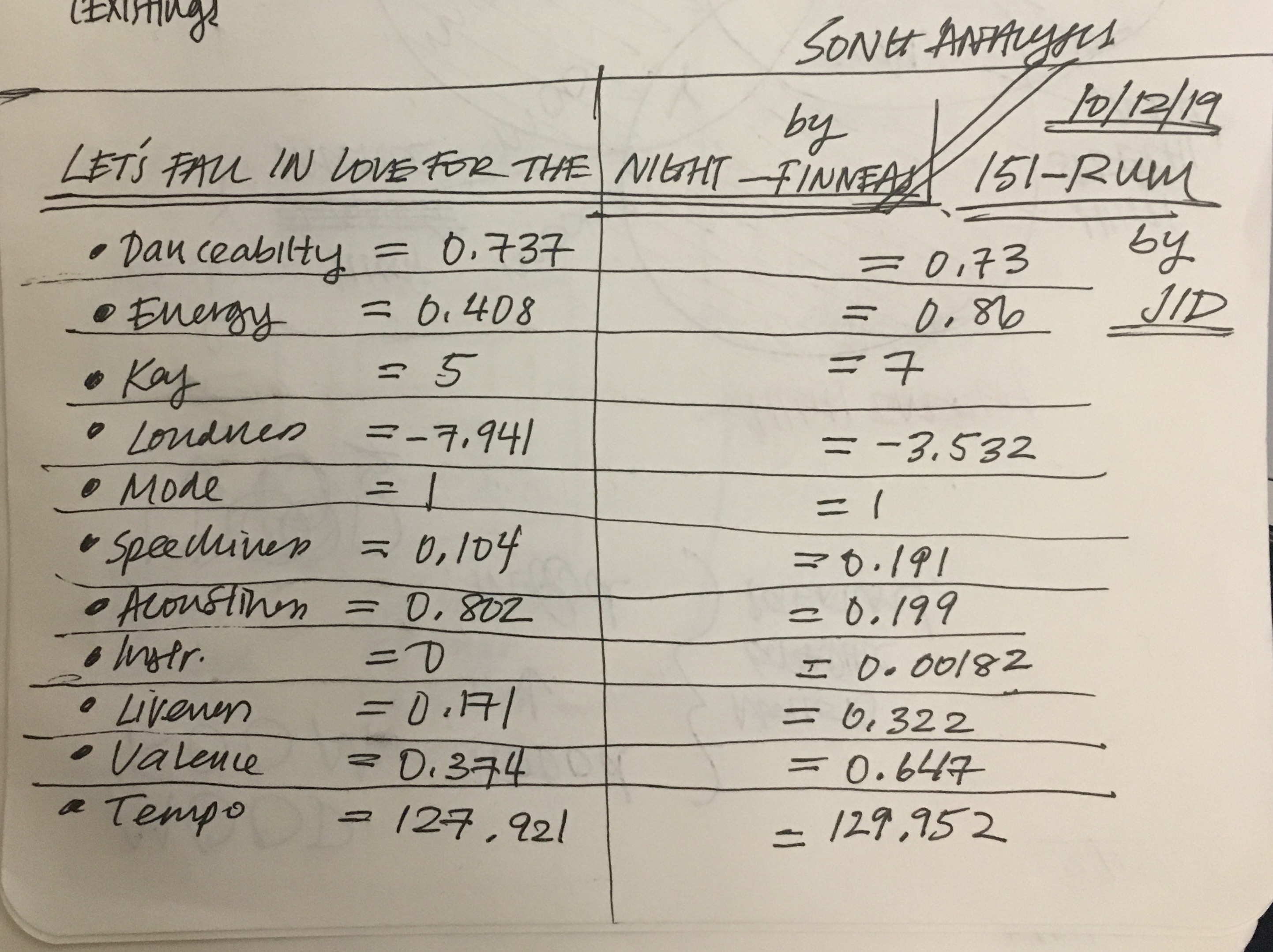

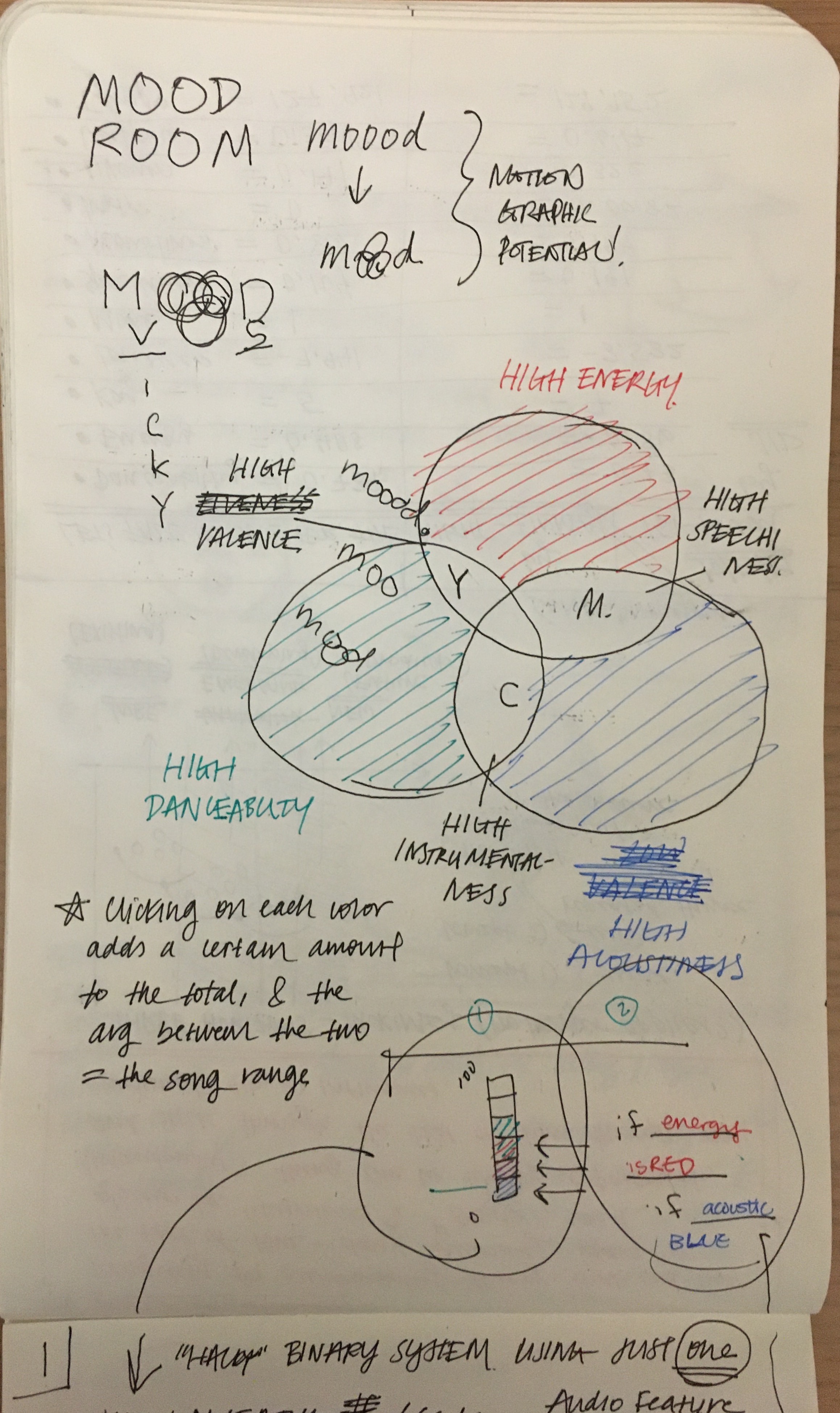

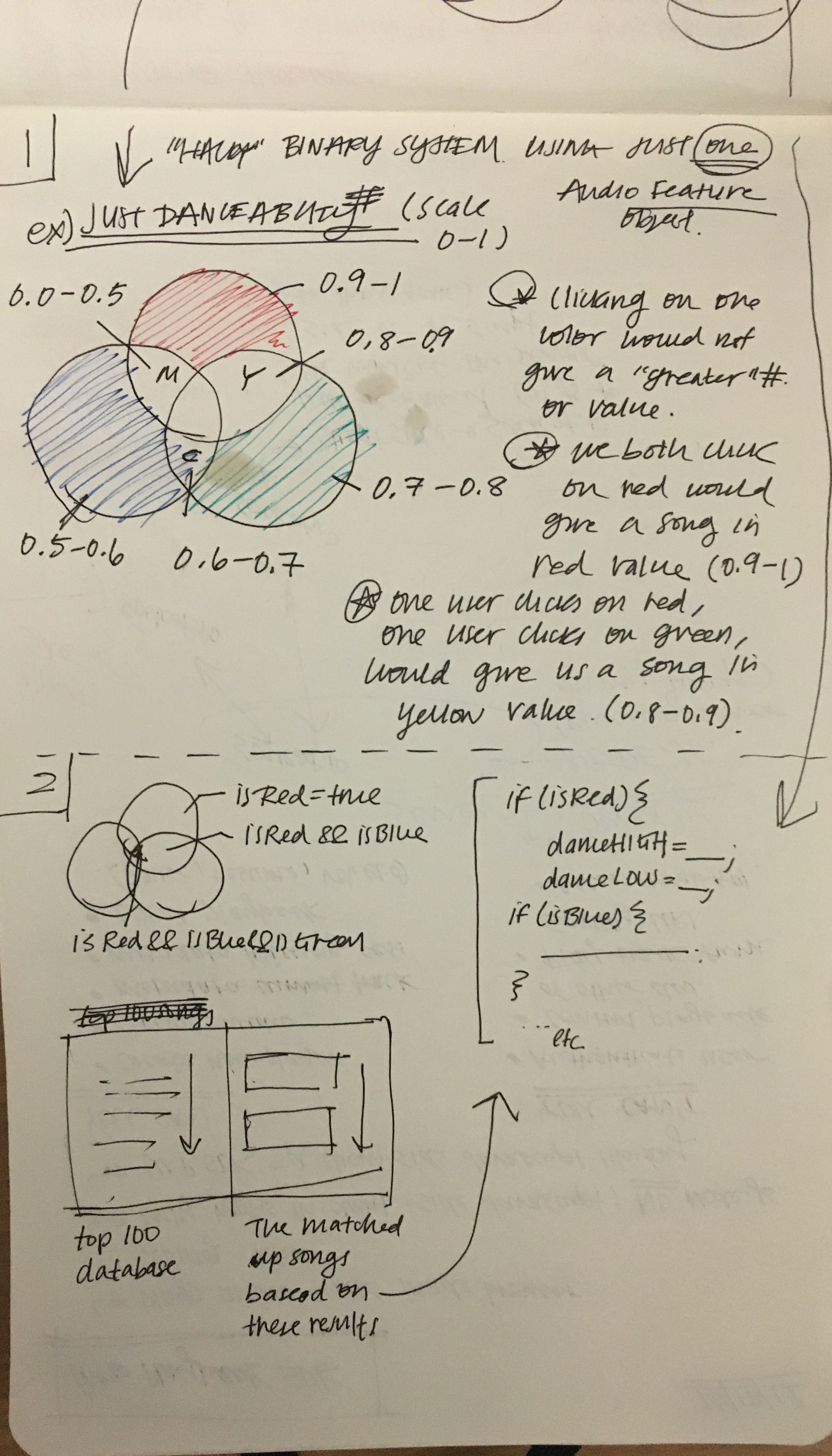

This first iteration, as it currently stands, is a bare-minimum collaborative Spotify platform. Users type a song track (which must be available on Spotify); the track is sent to a server, which passes it on to Spotify. Spotify then analyzes the track based on six audio features:

1. Valence (a measure describing the musical positiveness)
2. Danceability (a measure describing how suitable a track is for dancing, based on a combination of musical elements including tempo, rhythm stability, beat strength, and overall regularity)
3. Energy (a perceptual measure of intensity and activity)
4. Acousticness (a confidence measure of whether the track is acoustic)
5. Instrumentalness (a prediction of whether a track contains no vocals)
6. Liveness (a detection of the presence of an audience in the recording)

These six audio features are then mapped onto a color scale and dictate the color gradient shown on the screen, which is then broadcast to all clients, including the sender.
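To make the feature-to-color step more concrete, here is a minimal sketch of one way it could work. This is not the exact mapping we used: the function names (`toChannel`, `featuresToGradient`) and the pairing of features to color channels are assumptions made purely for illustration. It relies only on the fact that Spotify reports each of these six features as a number between 0.0 and 1.0.

```javascript
// Hypothetical mapping: the pairing of features to channels is an
// assumption for illustration, not the project's actual color scale.
// Spotify reports each of these six features as a number from 0.0 to 1.0.

// Clamp a feature value to [0, 1] and scale it to an 8-bit color channel.
function toChannel(value) {
  const clamped = Math.min(1, Math.max(0, value));
  return Math.round(clamped * 255);
}

// Build two RGB gradient stops from the six audio features:
// the first three features color the start, the last three color the end.
function featuresToGradient(features) {
  const start = [features.valence, features.danceability, features.energy].map(toChannel);
  const end = [features.acousticness, features.instrumentalness, features.liveness].map(toChannel);
  return { start, end };
}

// Example with made-up feature values for an upbeat, non-acoustic track.
const exampleGradient = featuresToGradient({
  valence: 0.8,
  danceability: 0.6,
  energy: 1.0,
  acousticness: 0.2,
  instrumentalness: 0.0,
  liveness: 0.4,
});
console.log(exampleGradient);
```

On the server, a gradient like this could then be sent to everyone in the room. With socket.io, calling `io.emit` with an event name of your choosing (say, a hypothetical `"mood"` event) delivers the payload to every connected client, including the one who submitted the track.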

At this stage, users can share the songs they are currently listening to and shape how the "mood" of the room is represented by changing the room's color. Color is an extremely expressive and emotional visual cue, and it ties in beautifully with music.

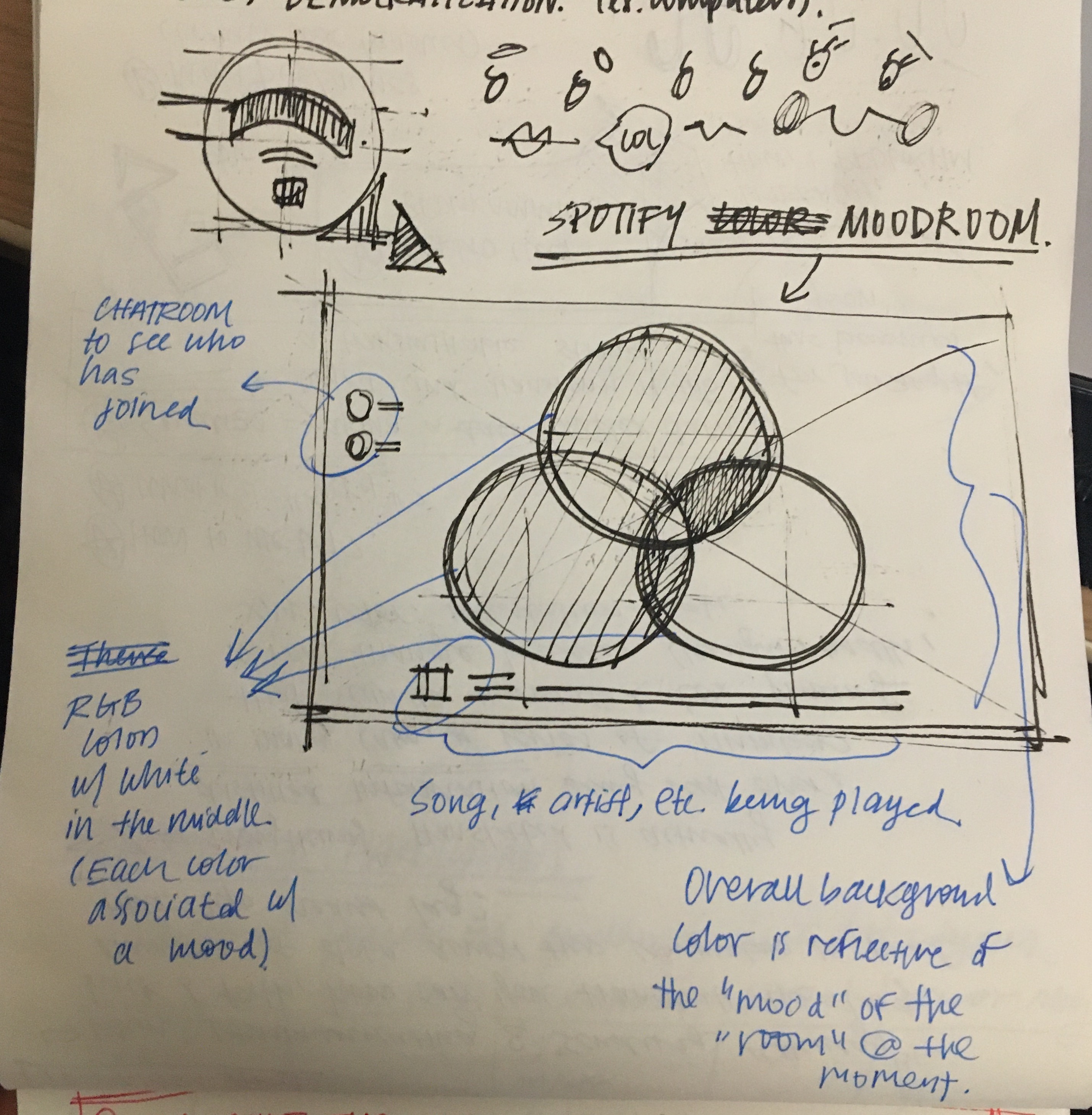

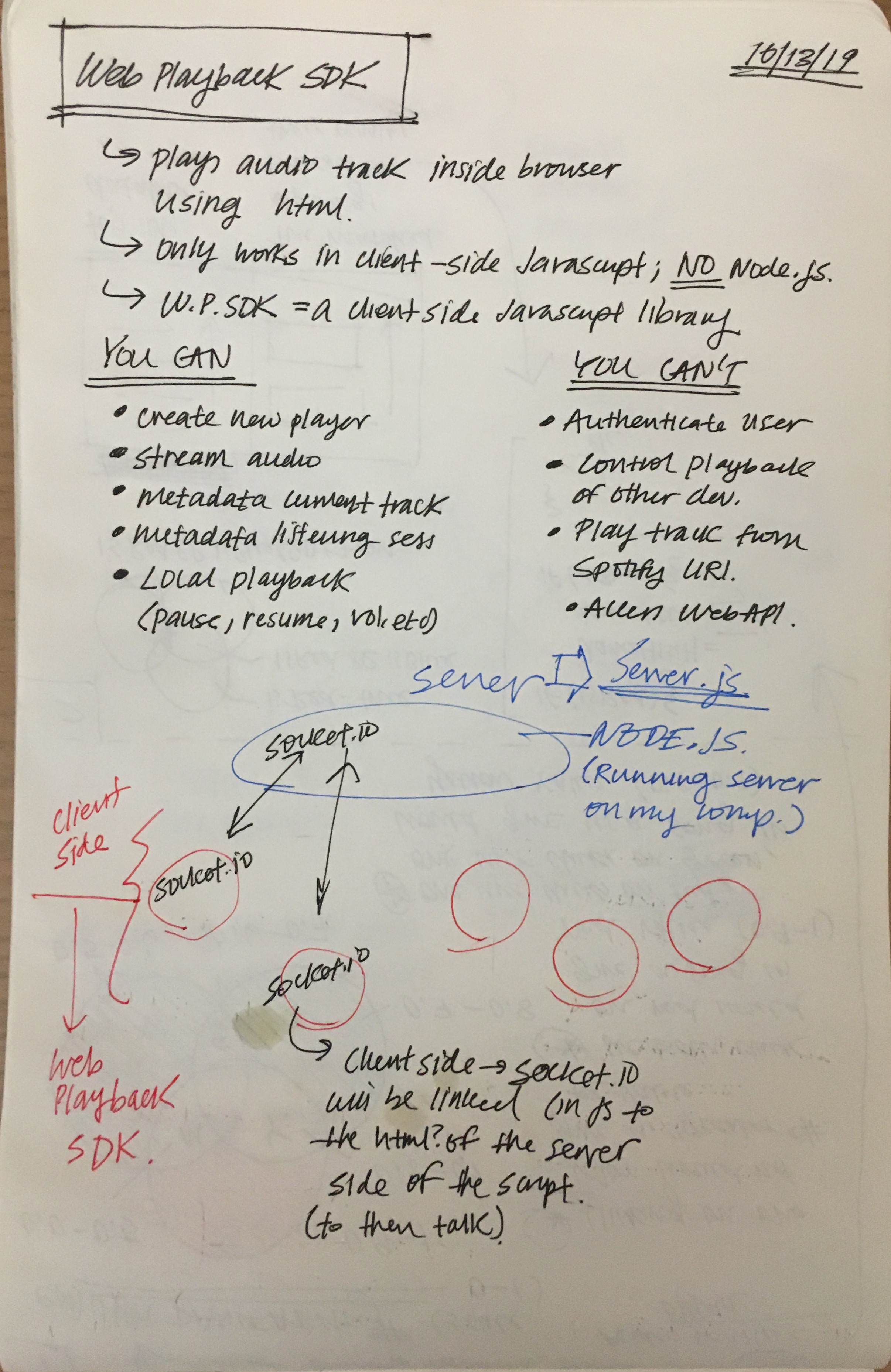

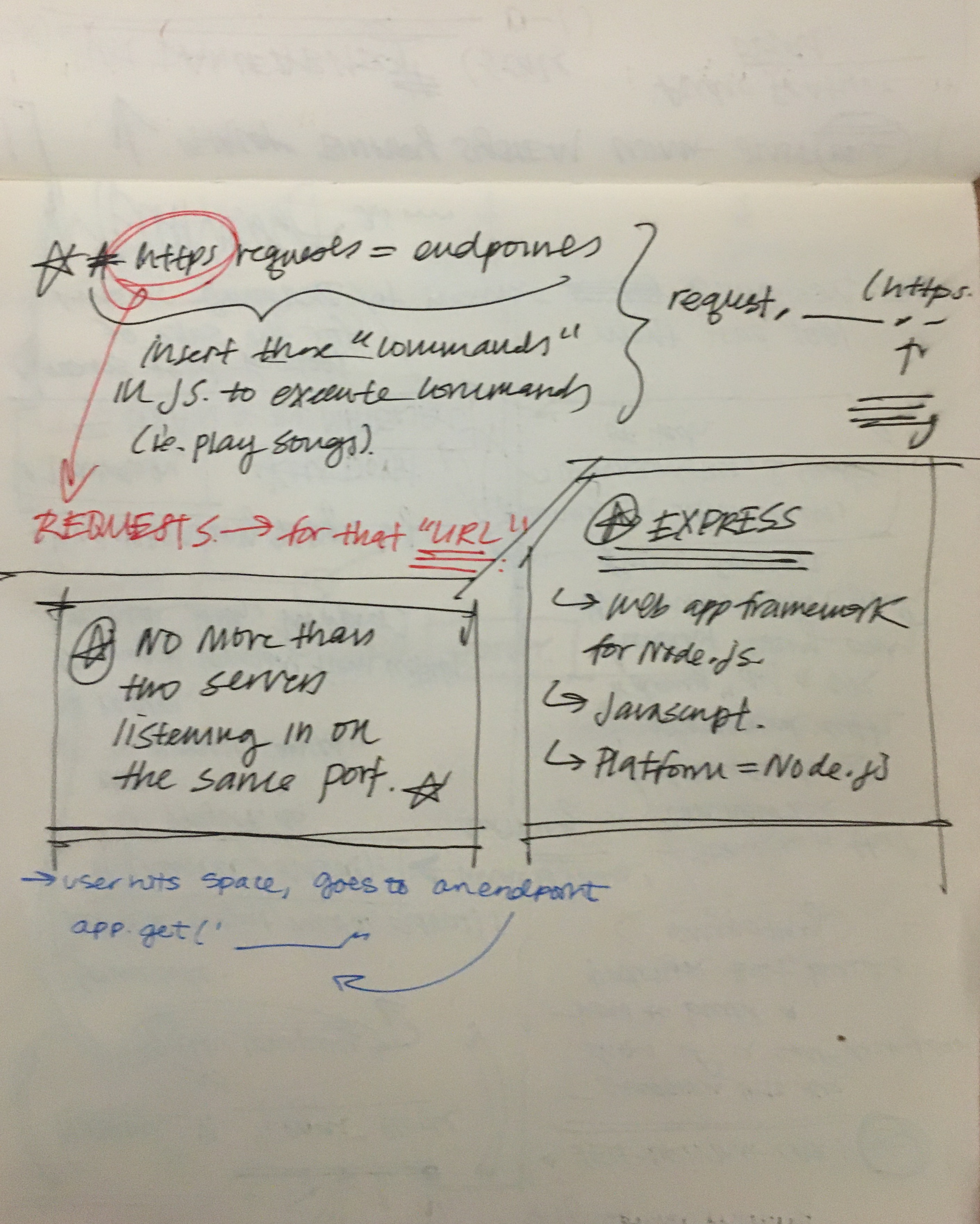

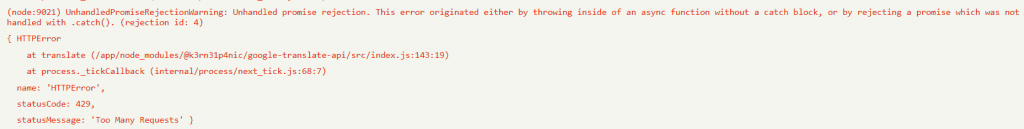

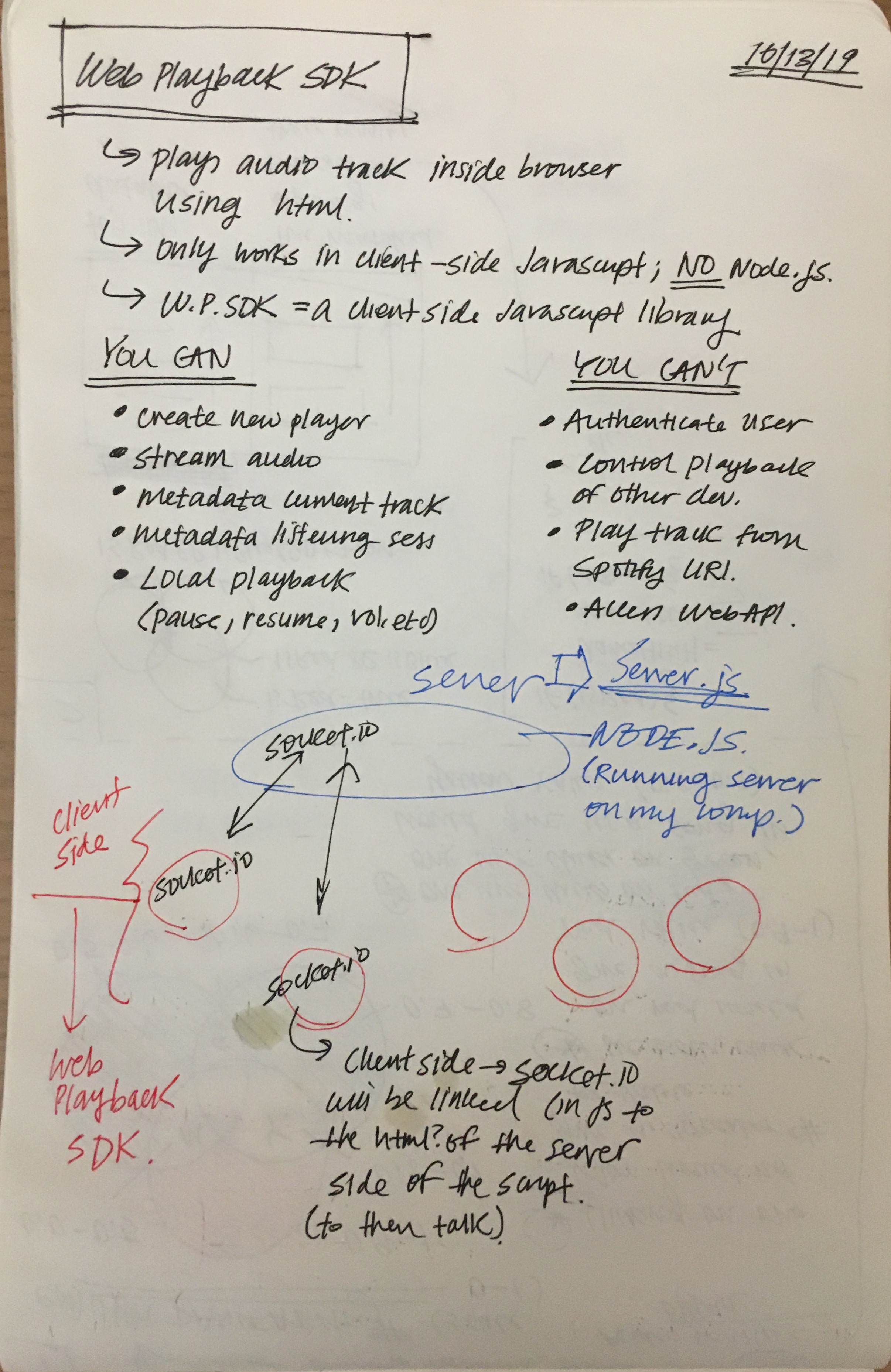

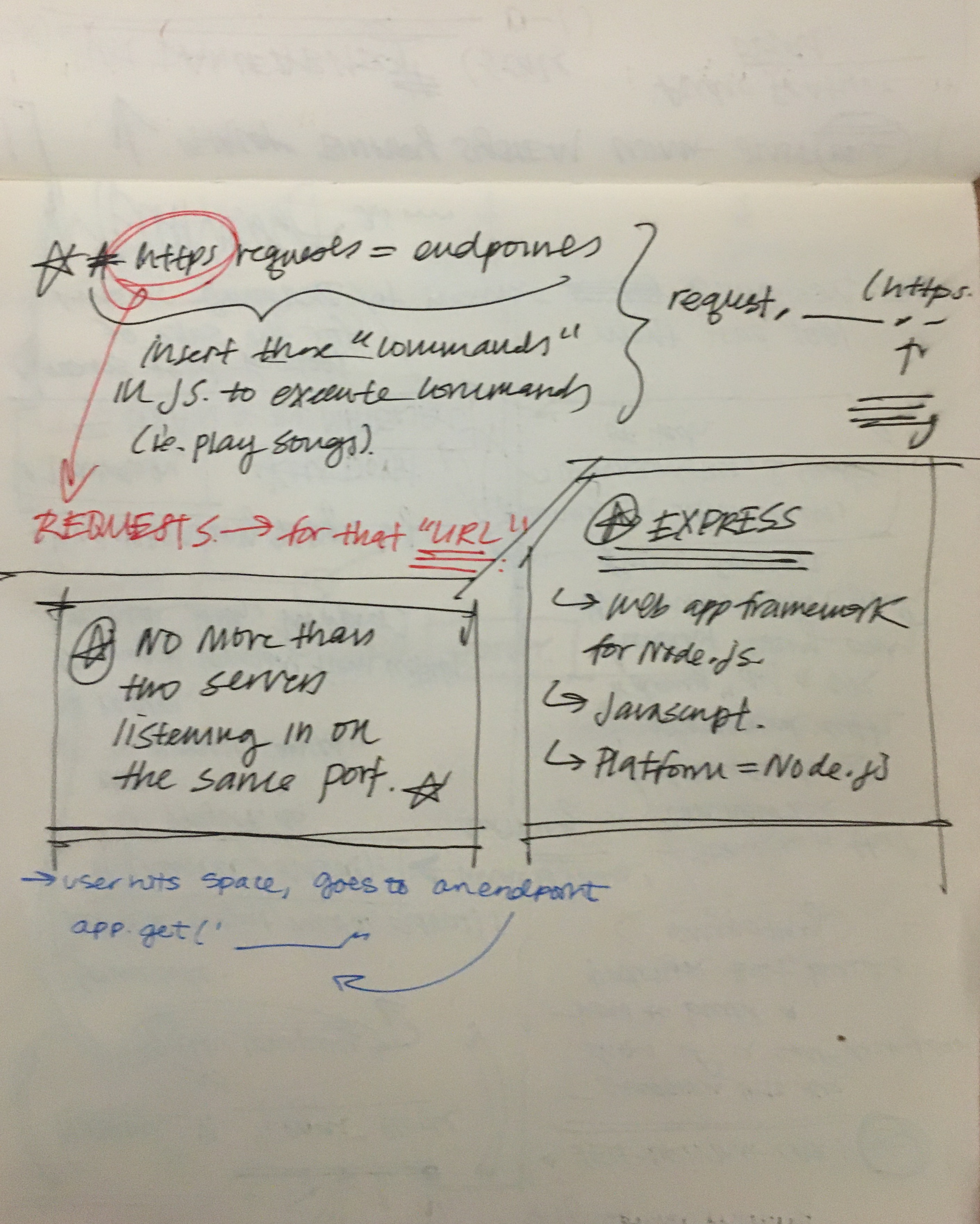

Our initial idea was a lot more ambitious; however, we ran into several (an understatement lol) issues. The original plan was to create a web player environment consisting of the 3 RGB colors and their CMY overlaps, with white in the middle. Users would be able to click on different colors, and the combination / toggle of the colors would trigger different songs to be played, based on our mapping of colors to the Spotify API endpoints used above (in our current iteration). Users would then be able to dictate the visual mood of the room, as well as its audio mood, by mixing colors and playing different songs.

First, there was the issue of setting up user authorization; there are several different types of it, some of which were not compatible with certain code, and others of which had time limits. Next, there was the issue of handling playback through the Spotify Web API, versus the Spotify Playback SDK, versus Spotify Connect. The SDK did not allow for collaboration with node.js, but the other two ended up creating issues with overlapping sockets, listening ports, and so on. We were also unable to figure out how to pull specific songs out of select playlists, but we could only dip into that issue because the others were more pressing. Because the communication involves not only a server and clients but also an entirely separate party (Spotify), the code often conflicted where those pieces intersected.
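As a rough illustration of the color toggles in that original plan, the sketch below shows how three on/off RGB lights combine additively into the CMY overlaps and white. The names `MIX_NAMES` and `mixToggles` are hypothetical, and the step of choosing a song for each resulting color is left out, since we never got that part working.

```javascript
// Hypothetical sketch of the planned color toggles: each of the three RGB
// lights is either on or off, and overlapping lights mix additively.
// Keys are the red, green, blue toggle states written as bits.
const MIX_NAMES = {
  "000": "black",
  "100": "red",
  "010": "green",
  "001": "blue",
  "110": "yellow",
  "101": "magenta",
  "011": "cyan",
  "111": "white",
};

// Return the name and RGB value of the color produced by the active toggles.
function mixToggles({ red = false, green = false, blue = false } = {}) {
  const states = [red, green, blue];
  const bits = states.map((on) => (on ? "1" : "0")).join("");
  const rgb = states.map((on) => (on ? 255 : 0));
  return { name: MIX_NAMES[bits], rgb };
}

// Red and green overlapping give yellow; all three together give white.
console.log(mixToggles({ red: true, green: true }));
console.log(mixToggles({ red: true, green: true, blue: true }));
```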

That being said, because we have managed to get over the main hill of having all these parties communicate with each other, we want to keep working on this project to incorporate music (duh). It is quite sad that a project revolving around Spotify and music as a social experience is missing the actual audio part.

by Sabrina Zhai and Vicky Zhou

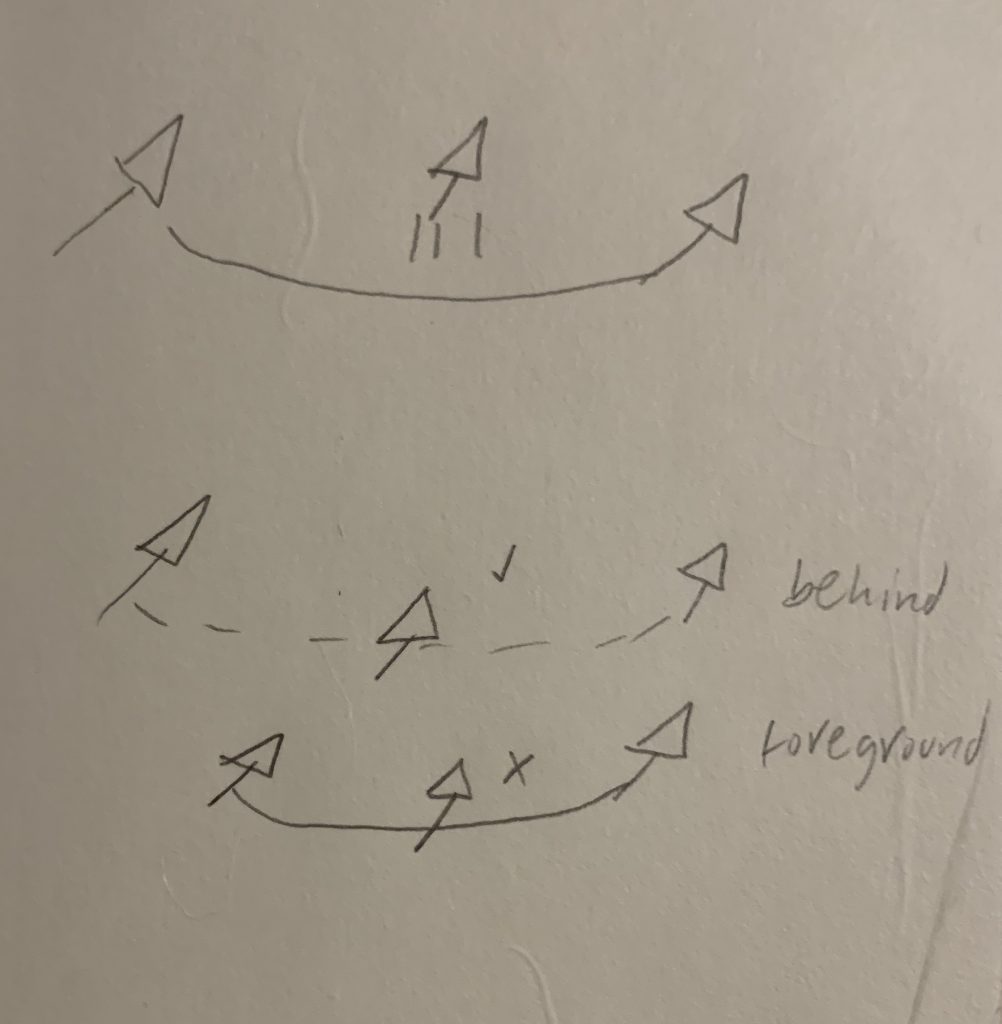

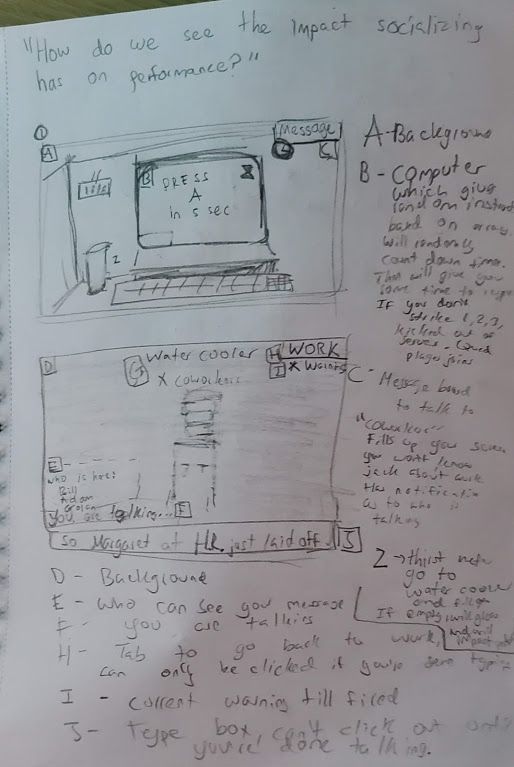

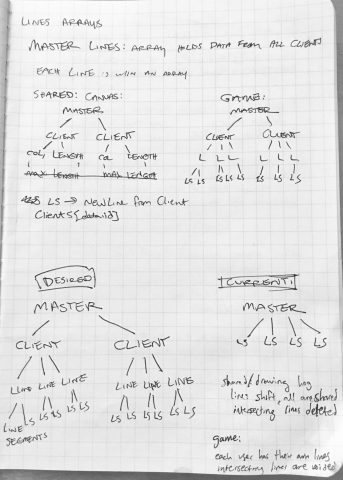

The concept for this project was a multiplayer game of jumprope within the browser. The idea was that each player has their own role (jumper, swinger).
