For our project, we created a scene straight out of every spy movie: a room full of lasers. We were able to move the lines we drew around, but stepping over them and having people walk through them didn't work as well.

Author: sovid

sovid & lubar – VR in-class assignment

Our lassie is a-wobblin' on a wee hoos!

We used our own models and rigged them in Mixamo, all the while having a darn good time.

sovid – Unity Tutorials

I have worked with Unity in the past, mainly from an asset standpoint, so I worked through some intermediate tutorials to learn new techniques: an intermediate scripting tutorial and an intermediate lighting and rendering tutorial. They were more conceptual than most tutorials, so there wasn't much to screenshot, but I came away with a good idea of how to apply these techniques when bringing my own models and scripts into Unity.

sovid – Situated Eye

The sketch can be found here.

To use:

Toggle the training information by tapping 'z'.

Toggle the hand instructions by tapping 'm'.

For this project, I was interested in creating a virtual theremin: much like a real theremin, the positions of the user's right hand control the note on the scale, and a slight wiggle controls the vibrato. I used an adapted Image Classifier to train my program on the hand positions, and looked at a point tracker by Kevin McDonald to track the hand for the vibrato. My main issue was finding lighting and backgrounds that made the image classifier work reliably; I built a lot of strange sets and stands to make it work, so it's a very location-dependent project.
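The note-plus-vibrato mapping described above can be sketched roughly as follows. This is a minimal illustration in Python, not the original p5 sketch: it assumes the classifier outputs a label index from 0 to 7 standing for the degrees of a major scale, and that the point tracker supplies the hand's recent x positions in pixels. The names `note_frequency`, `vibrato_depth`, and `theremin_frequency`, and all the constants (root pitch, maximum depth, wiggle range, vibrato rate), are hypothetical.

```python
import math
from statistics import pstdev
from typing import List

# Assumption: the image classifier's labels are integers 0..7, one per degree of a major scale.
MAJOR_SCALE_SEMITONES = [0, 2, 4, 5, 7, 9, 11, 12]
ROOT_HZ = 261.63  # middle C; an arbitrary choice for this sketch


def note_frequency(label_index: int) -> float:
    """Equal-tempered frequency for a scale degree: root * 2^(semitones / 12)."""
    semitones = MAJOR_SCALE_SEMITONES[label_index]
    return ROOT_HZ * 2 ** (semitones / 12)


def vibrato_depth(recent_x: List[float], max_depth_hz: float = 6.0,
                  full_wiggle_px: float = 20.0) -> float:
    """Map the hand's wiggle (population std. dev. of recent tracked x positions)
    to a vibrato depth in Hz, saturating at max_depth_hz."""
    if len(recent_x) < 2:
        return 0.0
    wiggle = pstdev(recent_x)
    return max_depth_hz * min(wiggle / full_wiggle_px, 1.0)


def theremin_frequency(label_index: int, recent_x: List[float], t: float,
                       vibrato_rate_hz: float = 5.0) -> float:
    """Instantaneous pitch at time t (seconds): base note plus sinusoidal vibrato."""
    base = note_frequency(label_index)
    depth = vibrato_depth(recent_x)
    return base + depth * math.sin(2 * math.pi * vibrato_rate_hz * t)


# A steady hand on the lowest scale degree gives a plain middle C.
print(theremin_frequency(0, [100.0, 100.0, 100.0], t=0.0))
```

Measuring the wiggle as the spread of recent positions means a perfectly steady hand produces no vibrato, while a larger wiggle deepens it up to the cap.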

sovid – ML Toe Dipping

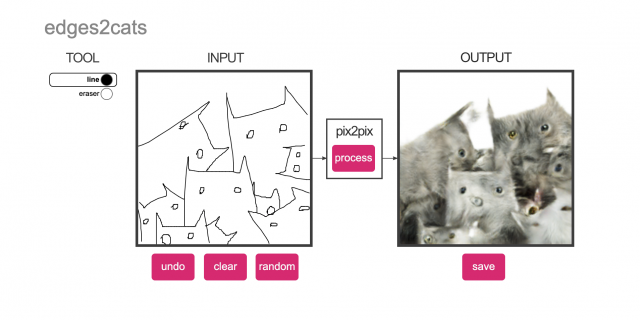

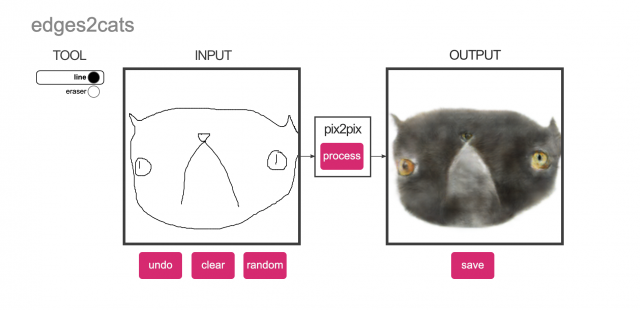

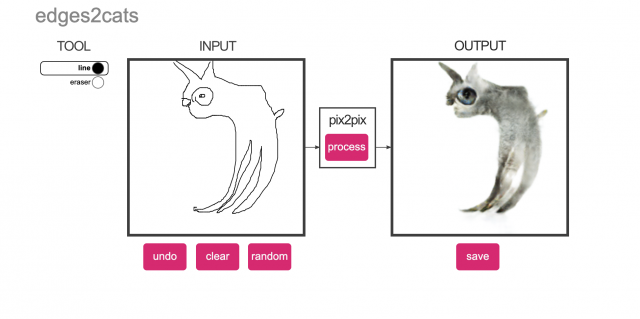

edges2cats

I found it interesting to see how the algorithm distinguished a nose from an eye, especially in sketches that weren't trying to resemble a cat.

GanPaint

original:

new:

ArtBreeder

an ocean in the field

French horn funghi

I found the portrait mode especially useful for character design: from a single photo, you could create a whole family of people who were clearly related.

Infinite Patterns

original:

new:

TalkToTransformer

GoogleAI

I played around with the "How New Yorker are You" experiment, in which two players compete to be crowned the realest New Yorker by answering questions based on tweets sent from NYC over the past year. The closer your responses are to the topic, and the faster you respond, the higher you score.

sovid – Telematic

Unfortunately, I wasn't able to get this project fully working with the server on Glitch. In this post, I'm sharing my attempts and the parts that did end up working.

Shown above is an example of the convex hull algorithm I implemented in the p5 editor, with some mouse interaction. I wanted to create a generative gem or some other abstract shape from the cursors of every client visiting the page, but as these things sometimes go, I couldn't get it working on Glitch. I liked the idea of a shape growing and shrinking as a group of people collaborate on its formation, while the trail of points each cursor leaves behind keeps a history of every move made.
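The post doesn't say which convex hull algorithm the p5 sketch used. As one common option, here is a minimal Python sketch of Andrew's monotone chain, which sorts the points and then builds the lower and upper halves of the hull, discarding any point that would make a clockwise (or straight) turn. In the Glitch version, the input would hypothetically be the cursor positions of all connected clients.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def cross(o: Point, a: Point, b: Point) -> float:
    """Z-component of (a - o) x (b - o); positive means o -> a -> b turns counter-clockwise."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: List[Point]) -> List[Point]:
    """Andrew's monotone chain: hull vertices in counter-clockwise order (y-up axes),
    with duplicate and collinear boundary points removed."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    # Lower hull: sweep left to right, popping points that don't make a left turn.
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)

    # Upper hull: the same sweep, right to left.
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)

    # The last point of each chain is the first point of the other, so drop it once.
    return lower[:-1] + upper[:-1]


# Four corners of a square plus one interior point: the interior point is dropped.
print(convex_hull([(0, 0), (2, 0), (2, 2), (0, 2), (1, 1)]))
```

Because p5's canvas has y pointing down, the "counter-clockwise" order above appears clockwise on screen; for drawing the shape with `beginShape()`/`vertex()`, either order works.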

The sketch in the p5 editor can be found here, and the sketch I ended up on in Glitch can be found here.

sovid – CriticalInterface

"An interface is designed within a cultural context and in turn designs cultural contexts."

Some assignments for this tenet:

- Visit 4Chan and make a friend

- Think about yourself as being from a different culture and check if the interface works equal to you.

- Visit websites from other cultures (Where are you from? ...) and check if the interface works equal to you.

The idea of struggling with a foreign interface (aside from the obvious language barrier) interests me because the context in which an interface was made matters. For better or for worse, many interfaces are built with a specific demographic in mind. This is the case in many AAA video games: with content created for the stereotypical straight, white male player, game interfaces reflect ideas meant to appeal to that audience and, in turn, enforce a certain type of culture in video games.

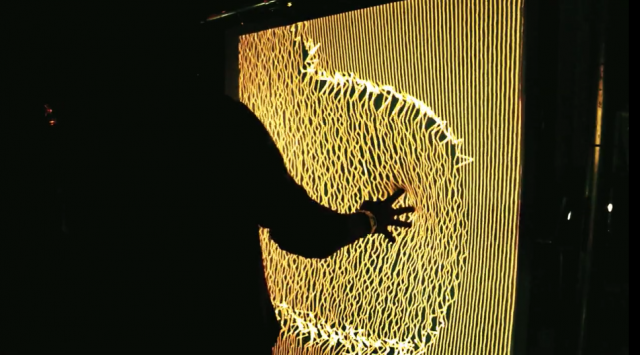

sovid – Looking Outwards04

For this Looking Outwards, I chose a work by Aaron Sherwood and Mike Allison in which visuals are projected onto a sheet of stretched spandex. When a person presses against the spandex, the visuals respond to their hand, forming a flame-like ripple. Some of the visuals work better than others, but I liked that the user actually feels something touching them as they press inward, rather than a projection simply reacting to the space around them.

Firewall from Aaron Sherwood on Vimeo.

sovid – Techniques

I chose the drawing exercise from the P5 examples. It appealed to me because the lines created by clicking and dragging the mouse take on the added behavior of particles, with each point acting as a particle.

From the libraries, I looked further into the 3D library, in particular Canvas3D, which lets you create shapes and patterns in 2D and turn them into 3D, much like an SVG image in Maya.

The Glitch block I found most useful was the Model Viewer. Since most of my work involves 3D models, I wanted to know how I could use them in a browser.

sovid – Body

I really can't explain the design inspiration for this one. I mainly wanted to play around with 3D in P5, and having just watched 'A Fish Called Wanda' and modeled a cowboy hat for fun, I decided to combine them all. The program tracks the rotation of your face and monitors your blinks, and the model follows that data. Working with multiple colors proved difficult, especially because I couldn't use the textures I had on the model, since its UV maps didn't work in P5. Using the BRFv4 face tracker, I used the rotation value to move the model and identified the points for each eye. Each eye had six points: two on the top, two on the bottom, and one at each corner.

I was able to track blinks by computing the eye aspect ratio (EAR) from the six points on each eye, following research by Soukupová and Čech.
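Soukupová and Čech define the eye aspect ratio for landmarks p1 to p6 as EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖): the two vertical lid distances over twice the corner-to-corner width. It stays roughly constant while the eye is open and drops toward zero as it closes. Below is a minimal Python sketch of that calculation plus a simple threshold-based blink counter. It assumes the six points are already ordered as in the paper (p1 and p4 at the corners, p2 and p3 on the upper lid, p5 and p6 on the lower lid); the mapping from BRFv4's landmark indices is not shown, and `BlinkCounter`, the 0.2 threshold, and the two-frame minimum are hypothetical choices that would need tuning. The paper itself also trains a classifier over a window of EAR values; a fixed threshold is the simpler baseline.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six landmarks ordered p1..p6: p1, p4 are the corners,
    p2, p3 the upper lid, p5, p6 the lower lid (p2 above p6, p3 above p5)."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


class BlinkCounter:
    """Counts a blink when the average EAR of both eyes stays below `threshold`
    for at least `min_frames` consecutive frames and then rises again."""

    def __init__(self, threshold: float = 0.2, min_frames: int = 2):
        self.threshold = threshold
        self.min_frames = min_frames
        self.closed_frames = 0
        self.blinks = 0

    def update(self, left_eye: Sequence[Point], right_eye: Sequence[Point]) -> bool:
        """Feed one frame of landmarks; returns True on the frame a blink completes."""
        ear = (eye_aspect_ratio(left_eye) + eye_aspect_ratio(right_eye)) / 2.0
        if ear < self.threshold:
            self.closed_frames += 1
            return False
        blinked = self.closed_frames >= self.min_frames
        if blinked:
            self.blinks += 1
        self.closed_frames = 0
        return blinked
```

Requiring a few consecutive low-EAR frames keeps a single noisy frame from the face tracker from registering as a blink.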