Collaborated with vingu.

Check out post here:

/vingu/12/04/final/

60212: INTERACTIVITY & COMPUTATION

CMU School of Art, Fall 2019 • Prof. Golan Levin

Please see the following post for project documentation:

View the project here:

Sorry for the late post!

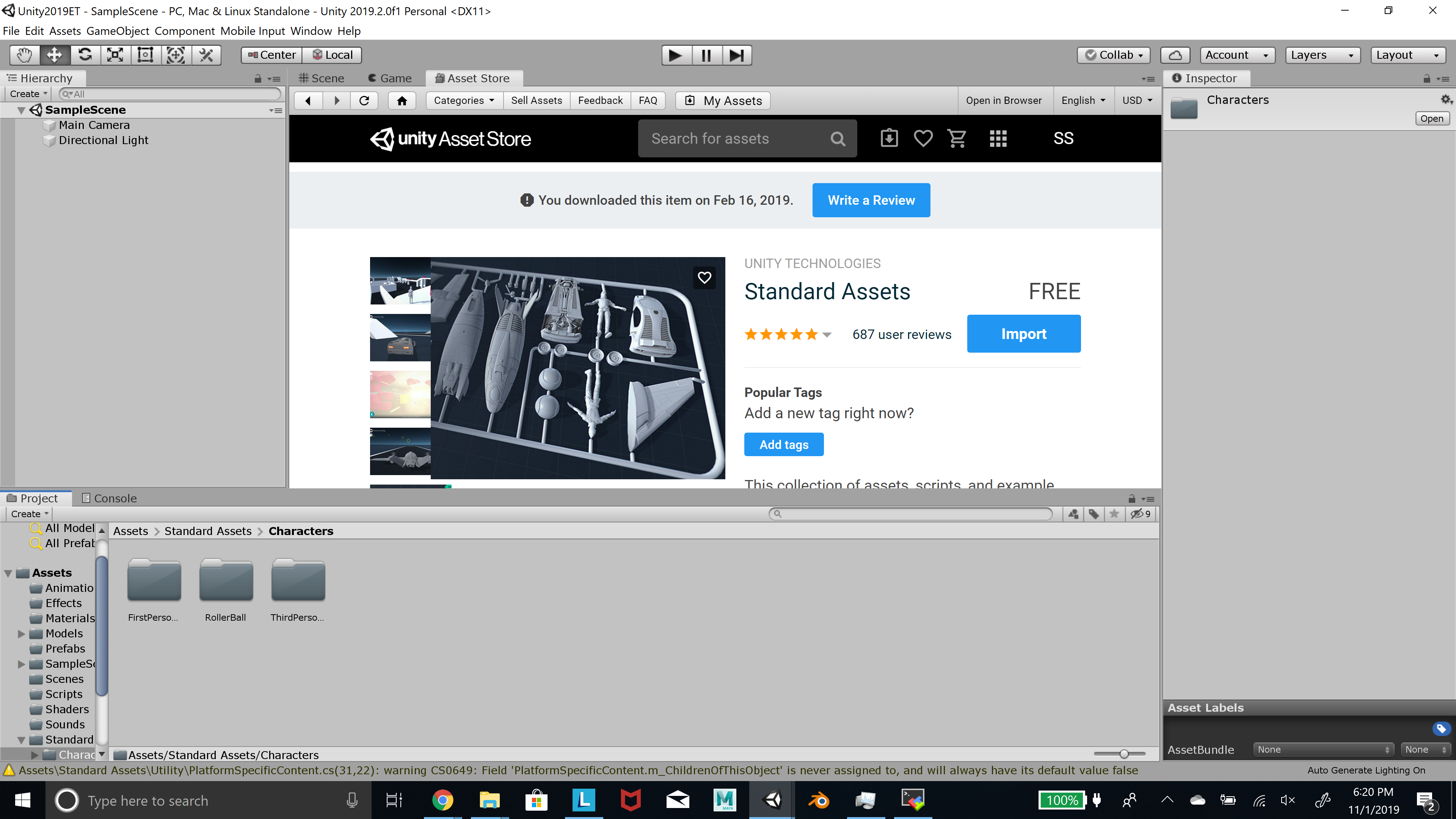

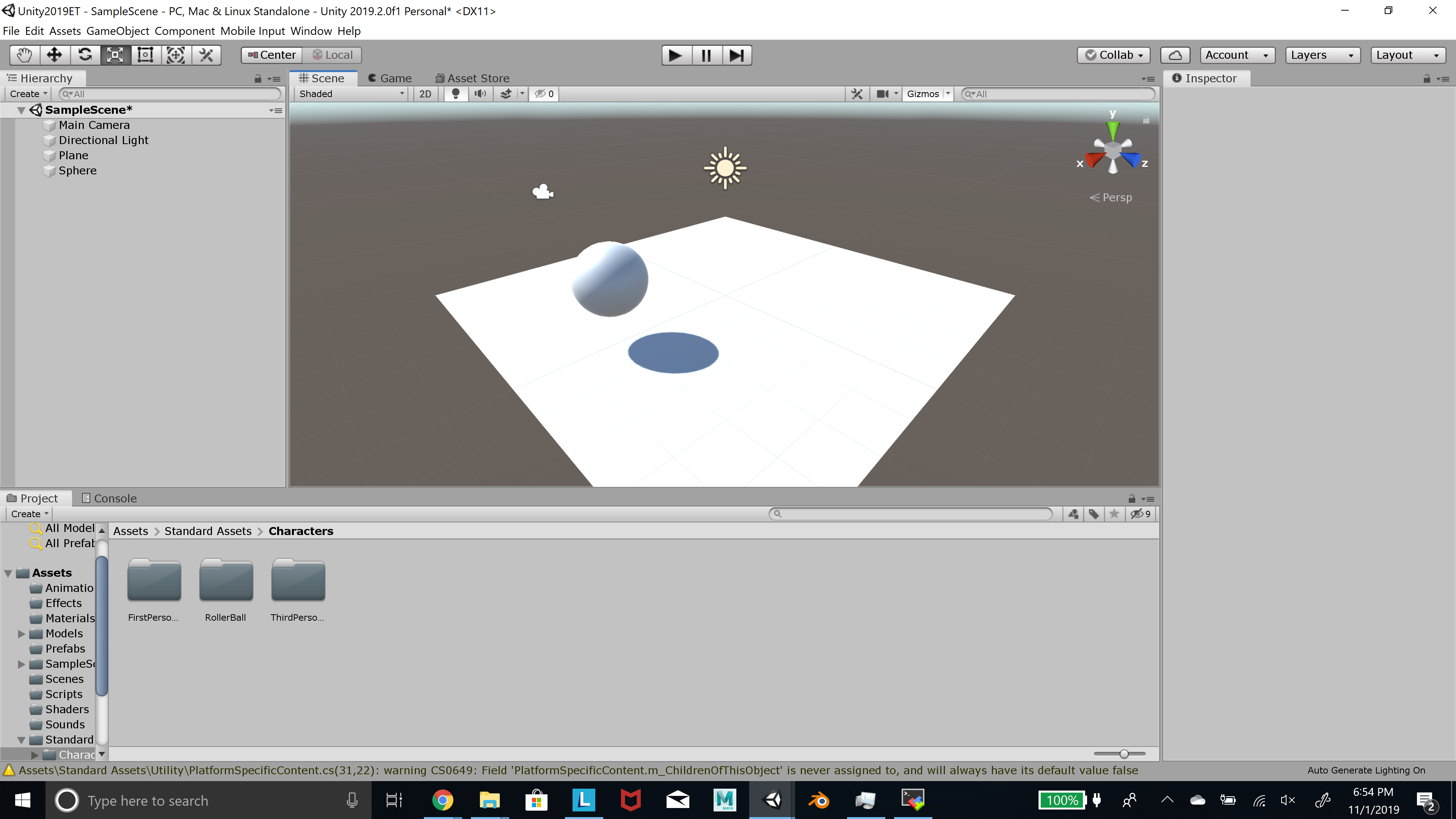

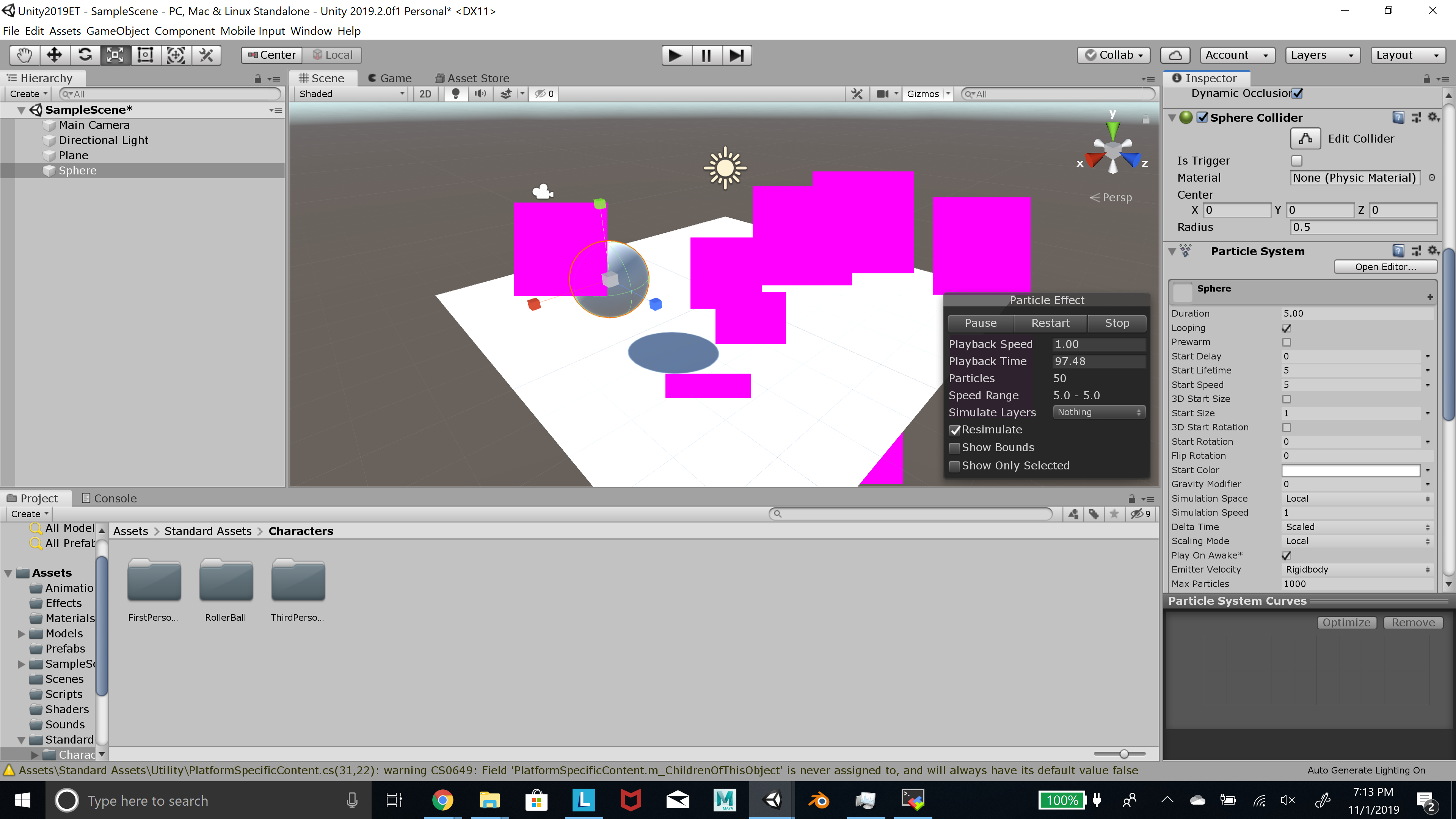

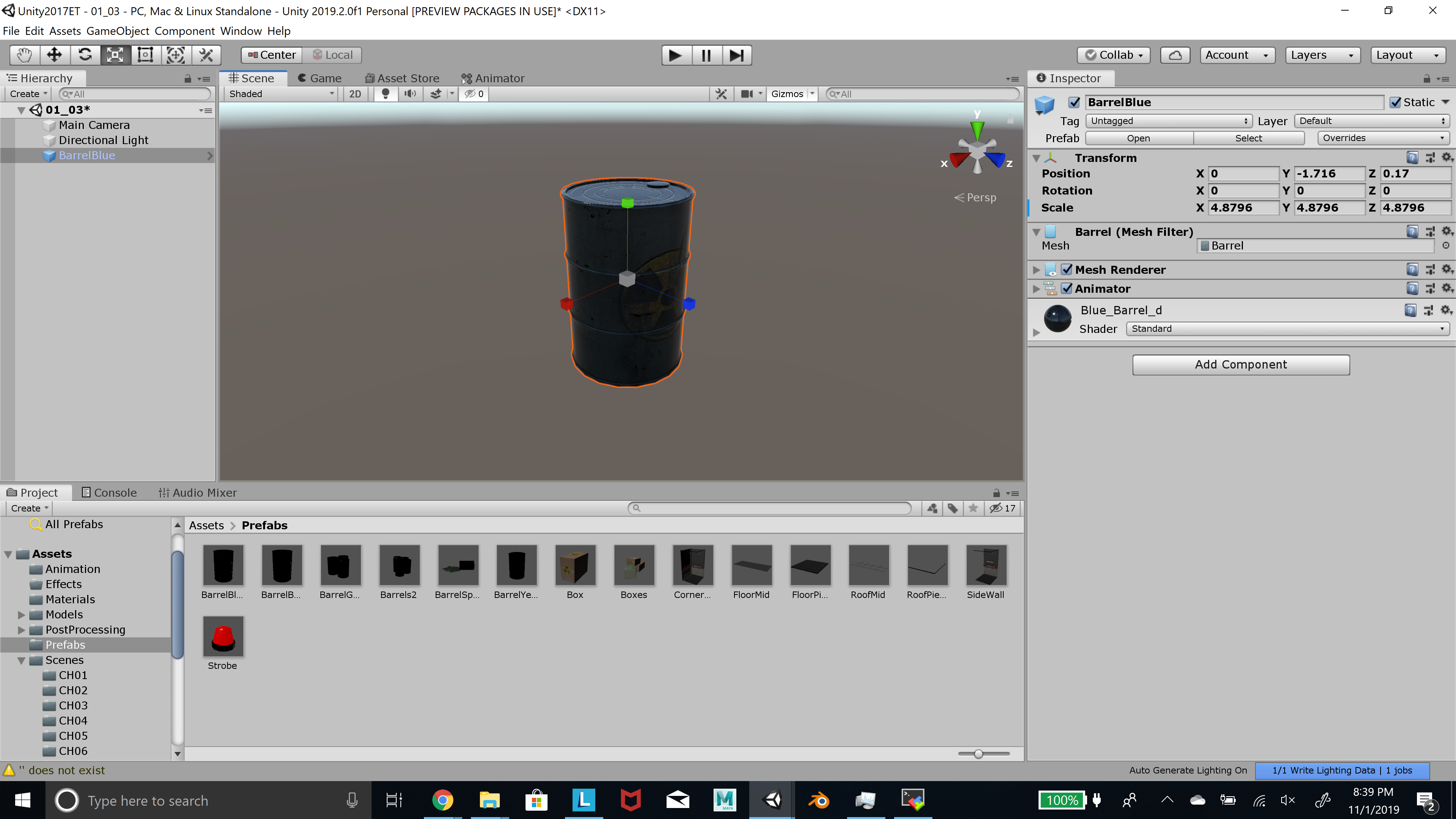

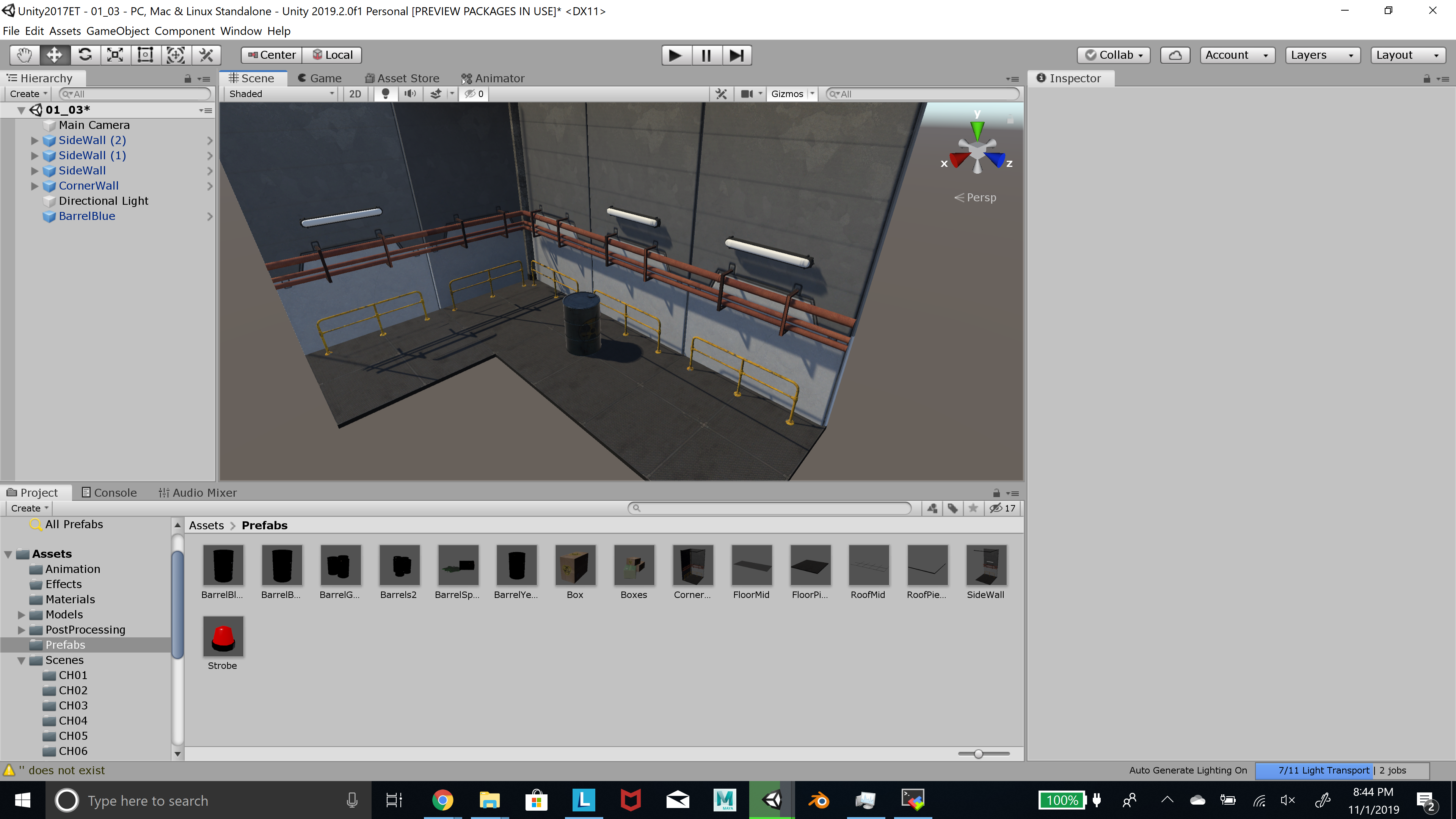

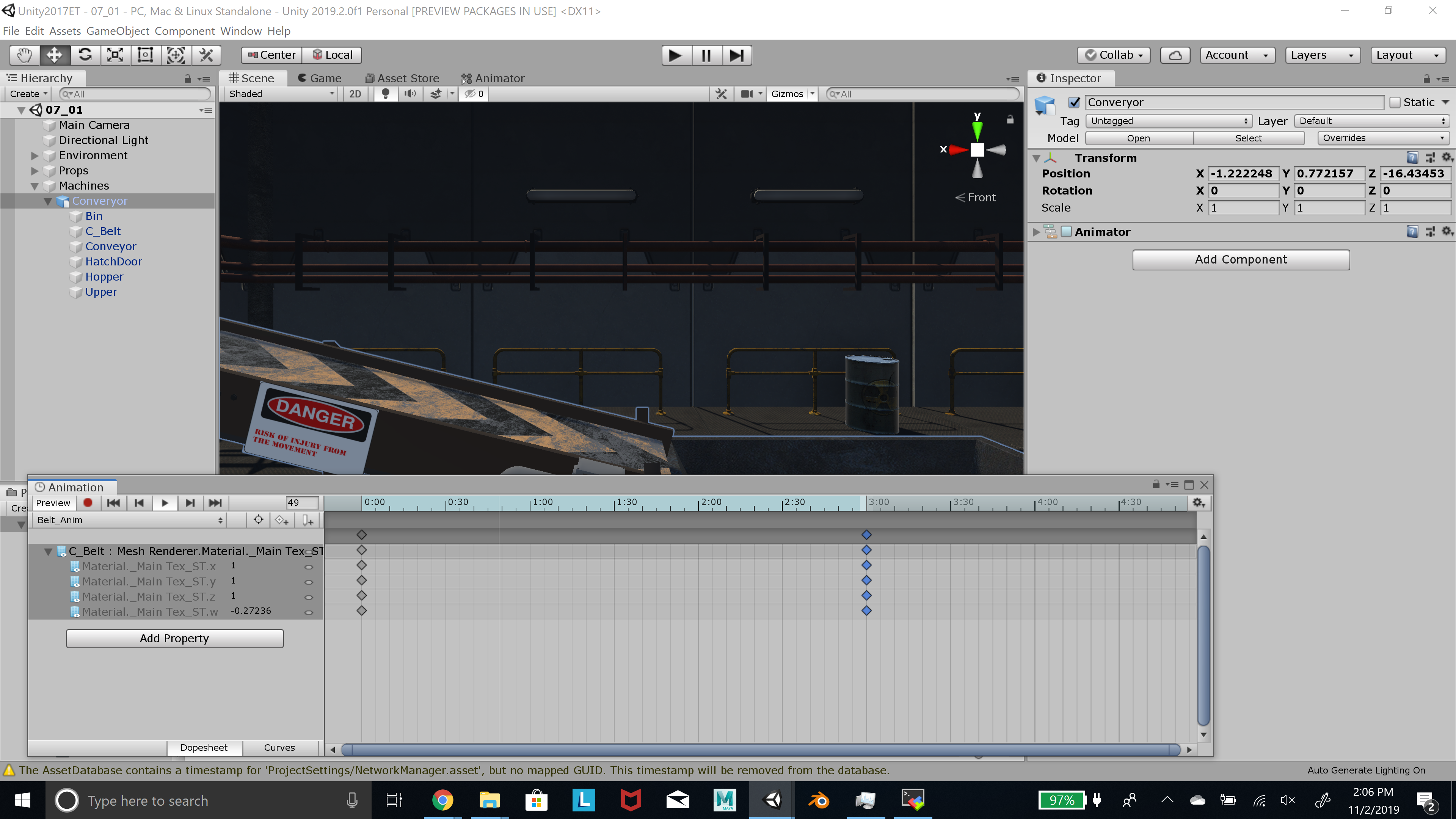

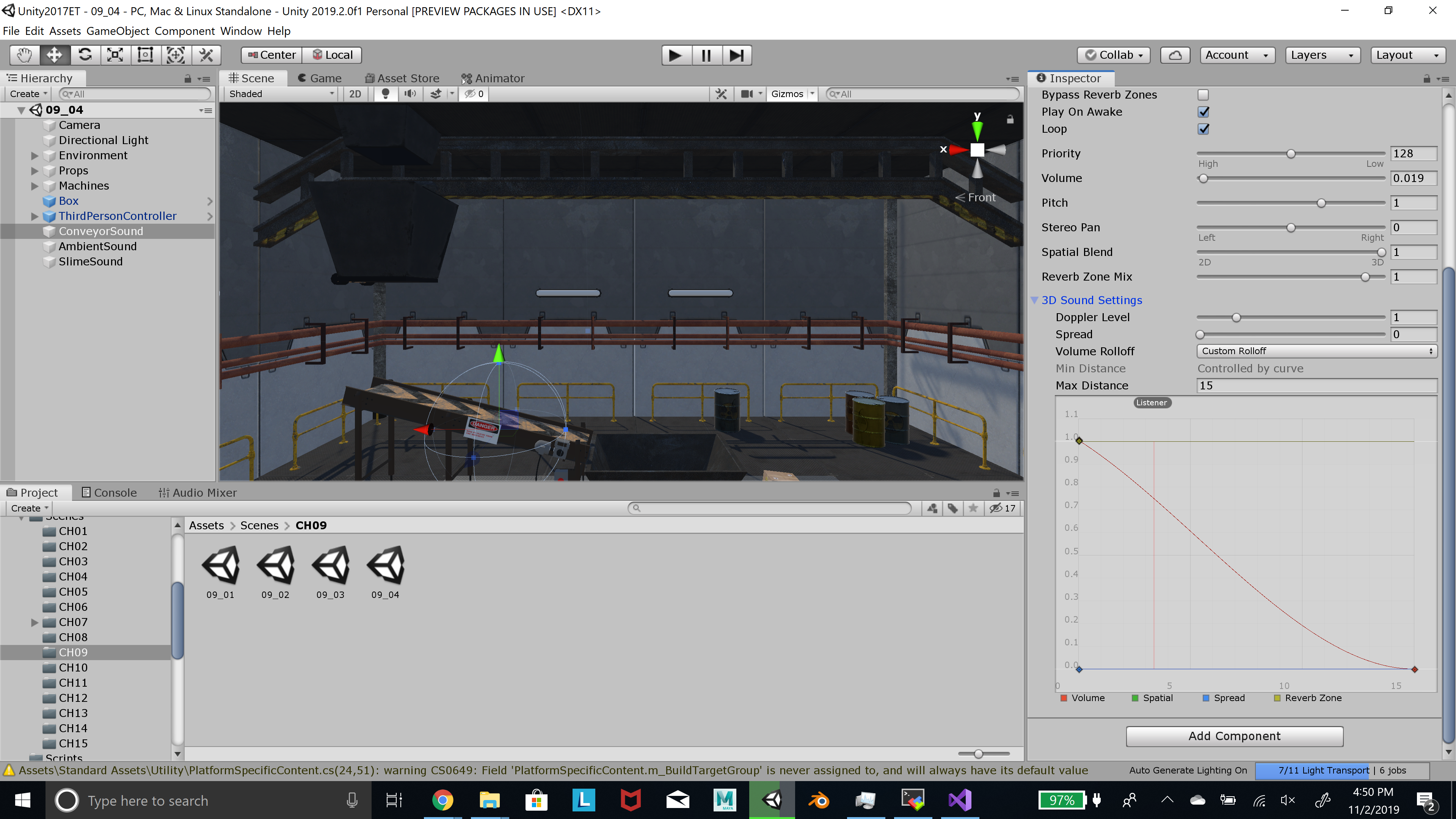

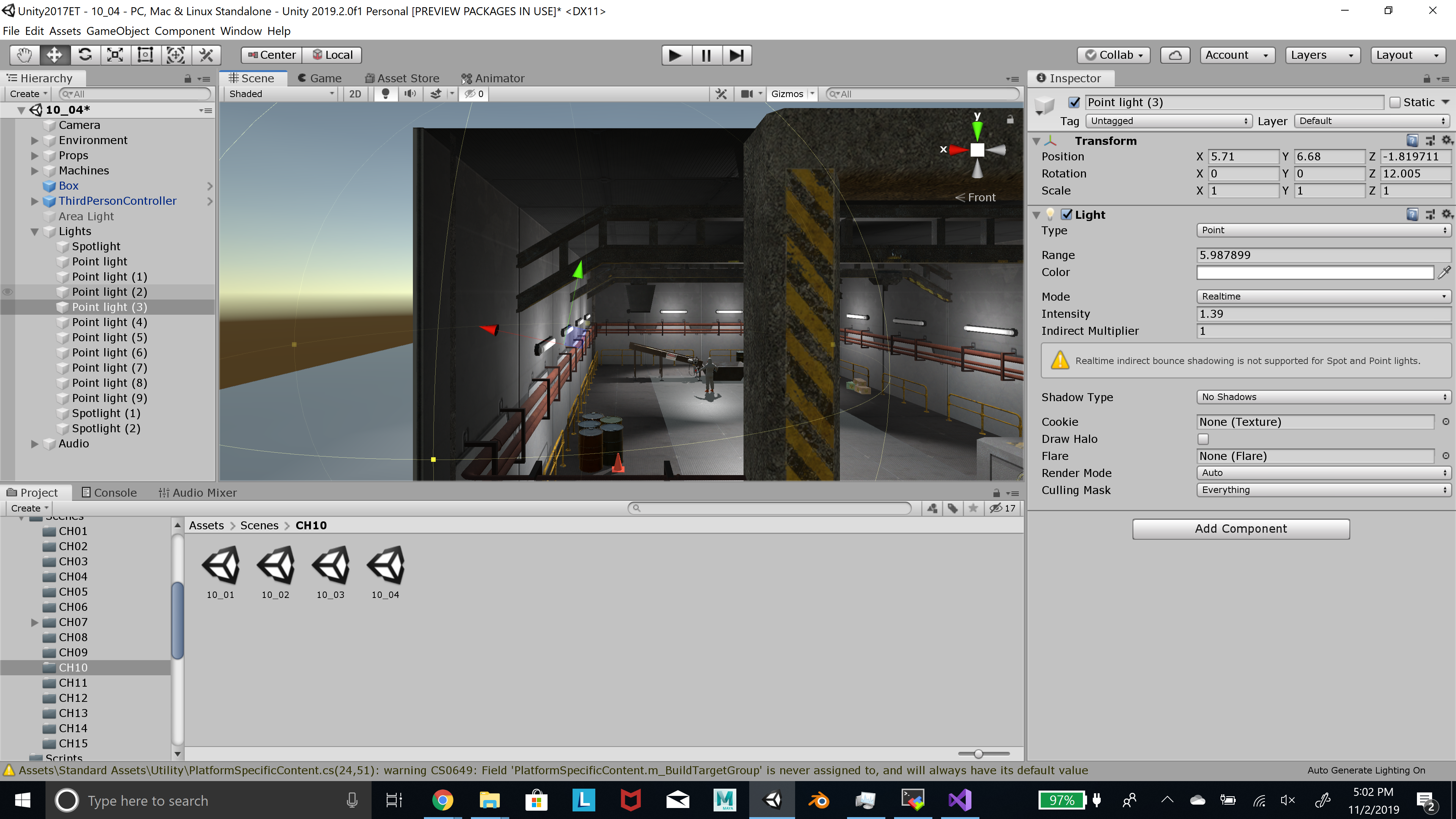

I've been somewhat busy the past few days, but I did finish the first tutorial on time, and just didn't get a chance to upload the tutorial screenshots. I have some basic scripting knowledge, but I'll try to finish the second tutorial before the next project gets script-heavy.

I worked with @meh on this project.

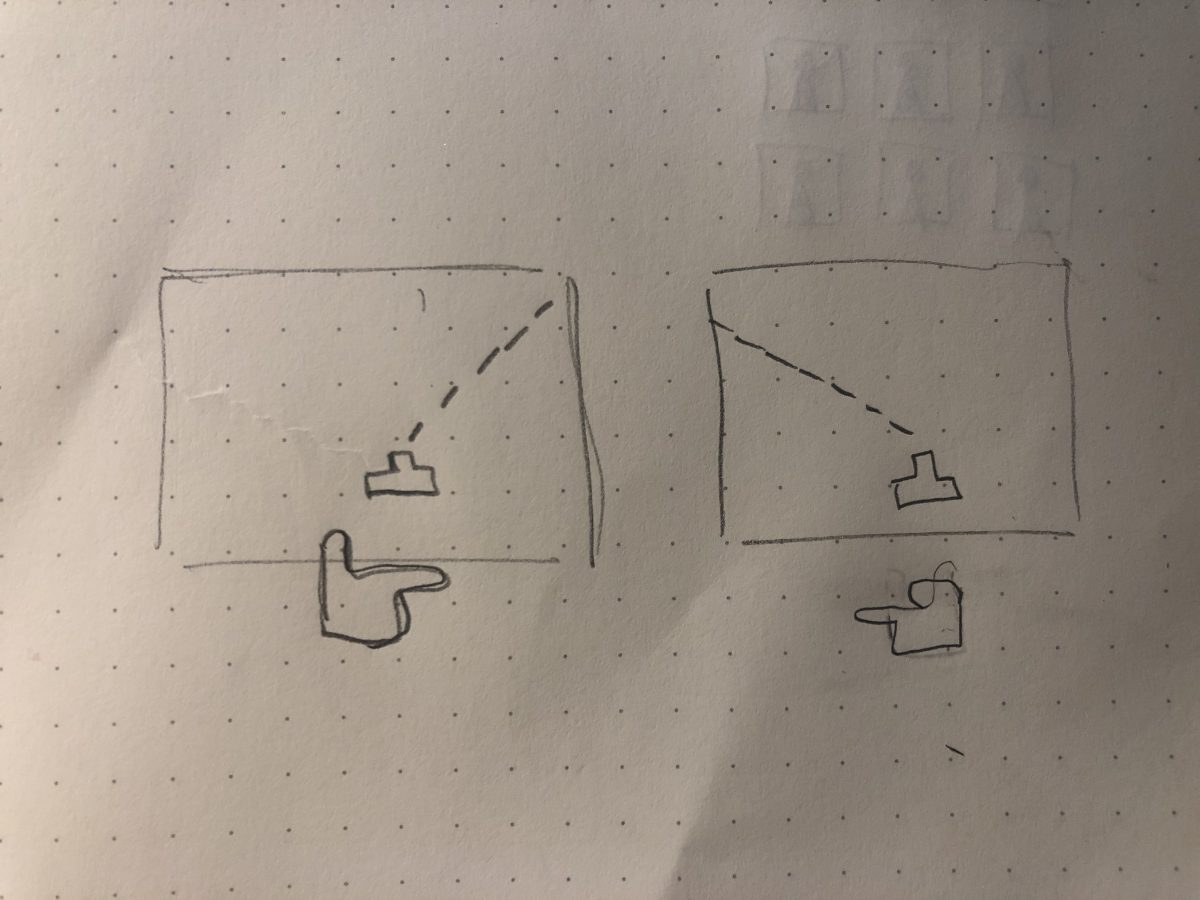

We decided to do something simple: use ml5.js's feature extractor and send its prediction data to Space Invaders, so that a person could play the game with just their hand. We used a 2-dimensional image regressor, tracking both the orientation of the hand (to move the spaceship) and the thumb's position (to trigger the blaster). We also ported a JavaScript version of Space Invaders that we found online into a p5.js environment (the code was provided from here and here).
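The mapping from regressor output to game input can be pictured roughly as below. This is a minimal sketch, not the code we actually ran: it assumes each of the two regression values arrives as a number between 0 and 1, and the function name `controlsFromPrediction`, the field names `tilt` and `thumb`, and the 0.5 firing threshold are all hypothetical.

```javascript
// Hypothetical helper: turn two regression outputs (assumed to be in 0..1)
// into game controls. Names and the threshold are illustrative assumptions.
function controlsFromPrediction(prediction, canvasWidth, fireThreshold = 0.5) {
  // Clamp so a noisy prediction cannot push the ship off-screen.
  const clamp01 = (v) => Math.min(1, Math.max(0, v));
  const tilt = clamp01(prediction.tilt);
  const thumb = clamp01(prediction.thumb);
  return {
    shipX: tilt * canvasWidth,   // hand orientation -> horizontal position
    fire: thumb > fireThreshold, // thumb position -> blaster trigger
  };
}
```

Keeping this step as a plain function means the game loop only ever sees `shipX` and `fire`, regardless of how the predictions are produced.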

Unfortunately, we weren't able to figure out how to save and load the model, so all of our code had to live in the same sketch, with the game mode triggered by a specific key press once training was over. As a result, the game mode lags severely, but it is functional and still playable. If we had more time on this project, we would have tried to port our ML training values into a game of our own making, but we are still somewhat satisfied with the overall result.

This process was very relaxing and gave me a feeling of control.

The blending between different textures when they were added was very aesthetic.

Mixing faces was a lot more fun than I thought it would be; it was like creating a new person from scratch.

The infinite tiling program didn't take any of my images without raising errors, so I didn't really have a custom output image for this part.

At the end of the day, the sky will still come down."

There are a lot of signs all around the city and on the outskirts that people are ready for rain this Saturday.

Many are holding blankets to protect the windows and cars.

The biggest threat this weekend is rain.

Sometimes I leave my homework for a bit and come back to it, and I think that's the first step to change.

So that's how I started this class. I just wanted to make it better for the next generation. And then a couple of months ago I was inspired to do this again after seeing that this happened all over the United States: students of a certain age who had never been exposed to the art scene at their school were really impressed by the artistry of the pieces I presented.

So after two months of showing art to people, I was like, "Yes, this actually works." I knew that people were getting their first taste of art history. And also, it was fun. But I really really didn't think that I'd be able to create this art class in this way, with that kind of energy. I think that's why there have been people like me who go to school where the art class isn't as successful, where the art classes aren't as well-rounded.

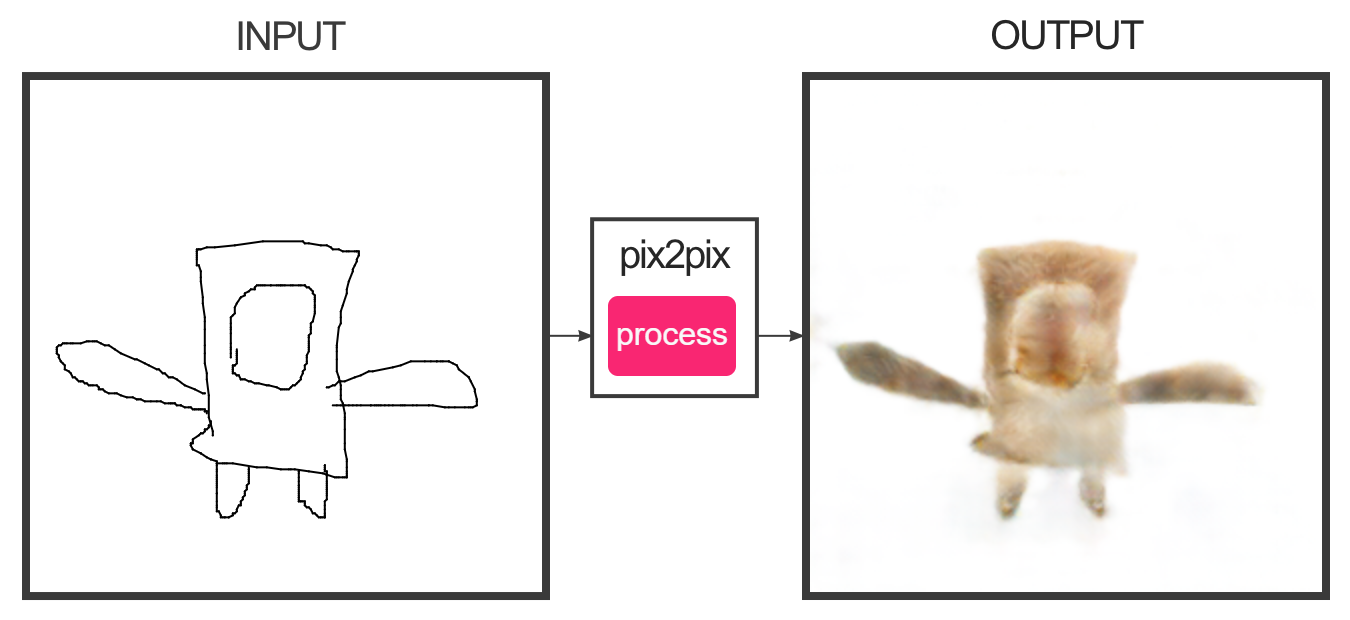

I experimented with the Google Quick Draw game. It was fun to play a sort of speed-pictionary where both the guesser and the artist are under pressure to guess or draw the given prompt.

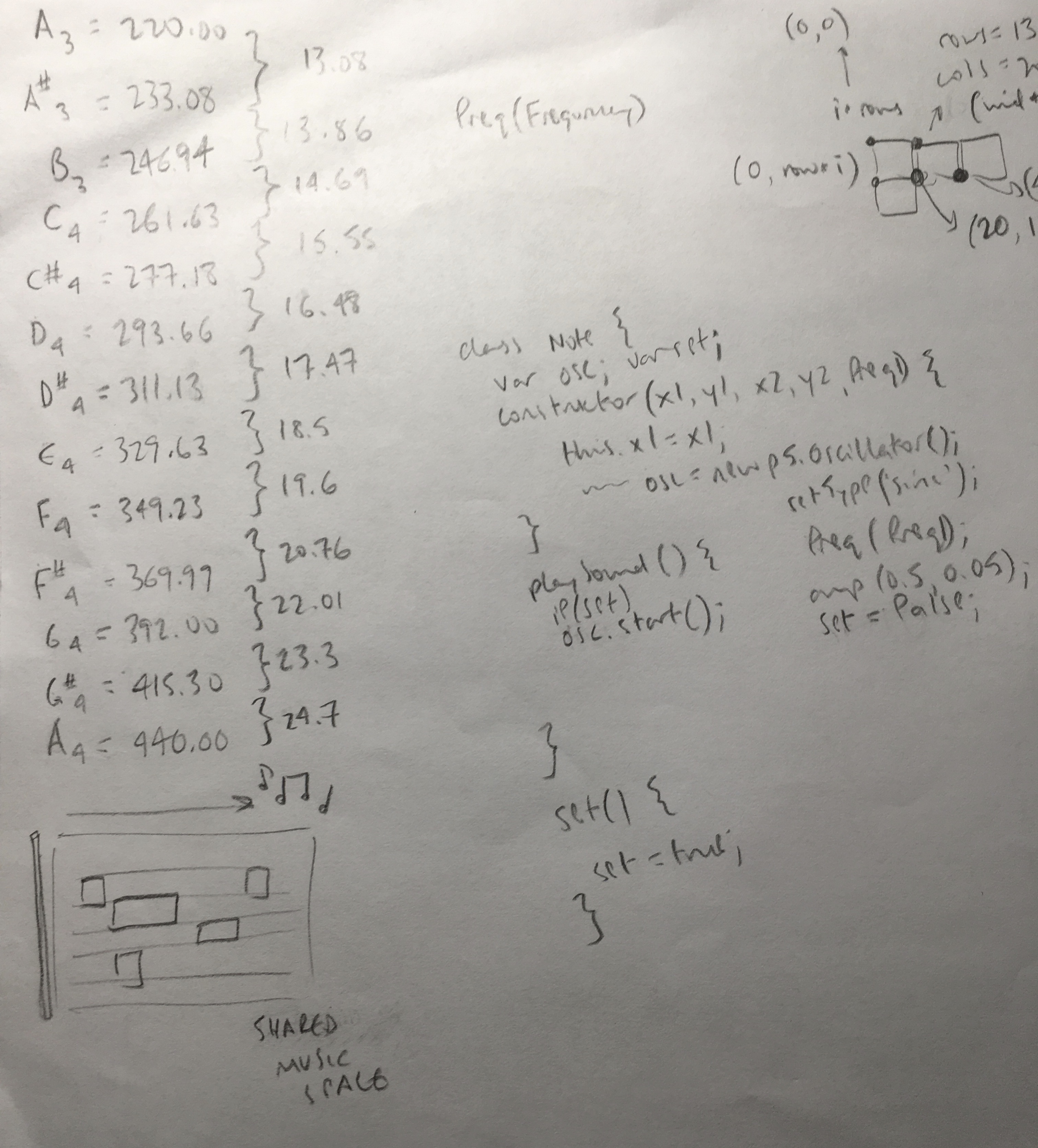

A Simple Music-Based Chatroom

https://glitch.com/edit/#!/harmonize?path=public/sketch.js:124:17

I edited the project after making the .gif, to make it easier to visualize what sound each client was making.

My main idea behind this project was to make a music-creating service that multiple people could edit at once, like a Google Doc. I first thought of using clicks on specific spaces to record a note, but I later realized it would be more engaging to map certain keys on the keyboard to piano keys. As such, the keys from a to k map to the notes from A3 to A4, and w, r, t, u, and i map to the sharps/flats.
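The key mapping can be sketched as a lookup table plus the equal-temperament formula. This is an illustration rather than the exact code in the project: it assumes "a to k" means the home row (a, s, d, f, g, h, j, k) and standard tuning with A4 at 440 Hz, and the names `KEY_TO_SEMITONE` and `keyToFrequency` are hypothetical.

```javascript
// Semitone offsets above A3 for each key. The home-row layout (a s d f g h j k)
// is an assumption based on the description; w r t u i are the sharps/flats.
const KEY_TO_SEMITONE = {
  a: 0,  // A3
  w: 1,  // A#3
  s: 2,  // B3
  d: 3,  // C4
  r: 4,  // C#4
  f: 5,  // D4
  t: 6,  // D#4
  g: 7,  // E4
  h: 8,  // F4
  u: 9,  // F#4
  j: 10, // G4
  i: 11, // G#4
  k: 12, // A4
};

const A3_HZ = 220; // A3 in standard tuning (A4 = 440 Hz)

// Equal temperament: each semitone multiplies the frequency by 2^(1/12).
function keyToFrequency(key) {
  const semitone = KEY_TO_SEMITONE[key.toLowerCase()];
  if (semitone === undefined) return null; // unmapped key: play nothing
  return A3_HZ * Math.pow(2, semitone / 12);
}
```

In a p5.js sketch, the result could be passed to an oscillator's `freq()` from inside `keyPressed()`, skipping keys that return `null`.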

Following Golan's suggestion, and because users were generally confused about which column each note would land in, I've changed it so that each client's notes only appear within an assigned column, and changing the note played only changes the row. Each user's "cursor" is now constrained to that column. Also, the text in the left-most column tells you which key to press to play that row's note.

My network model is effectively many-to-one(-to-many). I'm taking multiple clients' music data and note location, and sharing it with all clients on the same canvas. The project is synchronous, so people will be "communicating" in real-time. Participants are unknown, as there is no recorded form of identification, so communication across the server is anonymous.

The biggest problem for my project was getting the oscillator values to be emitted from and to multiple clients. I couldn't directly emit an oscillator as an object with socket.emit, so with some help, I found that I needed to emit just the parameters for the functions each client's oscillator used, and create a new oscillator to play that frequency whenever socket.on fired. This will definitely lead to memory leaks, but it was the best hack I had for this problem. An earlier problem was that draw() updates 60 times per second by default, which was too fast for the note changes to actually be visible on each client's screen. This was an easy fix: I used frameRate(5) to bring it down to a visually manageable number.
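The idea of sending parameters instead of the oscillator itself can be sketched as follows. This is not the project's code, and it deliberately differs from what I did in one respect: it keeps one oscillator per client and reuses it, which is one possible way around the leak. It assumes Node's built-in `EventEmitter` as a stand-in for the socket so it runs without a server; the event name `"note"`, the `clientId` field, and the placeholder `makeOscillator` are hypothetical.

```javascript
const { EventEmitter } = require("events");

// Stand-in for a socket connection so the sketch runs without a server.
// With a real socket, `emit` and `on` would be called on the socket instead.
const socket = new EventEmitter();

// Sender side: emit plain, serializable parameters instead of the oscillator.
function sendNote(clientId, freq, amp) {
  socket.emit("note", { clientId, freq, amp });
}

// Receiver side: keep one oscillator per client and reuse it, rather than
// creating a new one for every incoming message.
const oscillators = new Map();

function makeOscillator() {
  // Placeholder object; in p5.sound this would be `new p5.Oscillator()`.
  return { freq: 0, amp: 0 };
}

socket.on("note", ({ clientId, freq, amp }) => {
  if (!oscillators.has(clientId)) {
    oscillators.set(clientId, makeOscillator());
  }
  const osc = oscillators.get(clientId);
  osc.freq = freq; // with p5.sound: osc.freq(freq)
  osc.amp = amp;   // with p5.sound: osc.amp(amp)
});
```

With this shape, the number of oscillators grows with the number of clients rather than with the number of notes played.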

A problem that I found unsolvable was that Glitch kept saying that all p5.js objects or references were undefined, even though I included p5 in my HTML file. I later found out why: p5.js was defined on the browser side (so the code ran and the p5 elements were visible), but the Glitch server did not contain a reference to p5, so it would throw an error.

This tenet was the most interesting, mostly because of how it was worded: it seemed to imply that some supernatural or cosmic force is necessary in creating an interface. The idea of an interface being two different things simultaneously once again brought to mind Schrödinger's cat, specifically how it is not distinguishable as either thing until it is used in some way, and does not truly show its capabilities until the user "performs" either it or with it. The tenet also emphasizes the work put into making an interface, such as the research behind whether the physics will work, the final presentation, and its practicality, and thus it is both rendered and emergent. The first example of this that comes to mind is telematic art, where people can interact with others almost on the other side of the globe through a simple screen and some complex programming.

Tangible Media Group - AUFLIP

This piece encourages the user and creates a positive environment where they can successfully learn a new skill, which in this case is doing a front or back flip. The real-time data monitoring allows for immediate positive reinforcement when a task is done well, and some suggestions when a task is not done at the best quality. I like that research was done on the entire process of doing a successful front or back flip, so that the device could be produced at the best and most accurate quality. The fact that it was tested and further augmented shows that the artists really cared about the practicality of the piece and wanted to ensure that people who used it were satisfied. What intrigues me the most about this project, therefore, is actually the practicality and functionality of the piece, and how it can be applied to teach other kinds of cool skills to beginners.

No video is available on the website, only images.

Ray Casting

This was a very interesting concept, and could add a whole new depth of interactivity in fields like VR or AR. As an enthusiast in those fields, this example provided some inspiration for what I can achieve in 3D (specifically with p5.js).

p5.scribble

This library adds even more direct interaction with the user, and creates a friendly environment where the user can communicate with the machine, where the machine has to interpret individuality in users, forming a basis for machine learning capabilities.

Cesium Viewer

Has applications for large scale 3D projects and immense attention to detail, which is useful especially in VR/AR projects trying to emulate the experience of the real world.