Update 2/11/13

Woo! The good news is that I figured out that I don’t need to split the image and can just serve chunks of it at a time using some more complex Sifteo commands, which eliminates the blind spots and should enable me to scroll smoothly using tilting gestures. The slightly bad news is that I need to rewrite a lot of stuff, so I’ve posted a new list below.

1. Get the newest image from a Dropbox or Git repository (Probably trivial)

2. Write processing script to resize and convert images and autogenerate a Lua script (Needs a rewrite…)

3. Regularly run the processing script, re-compile, and re-upload to the Sifteo base (Maybe not too hard)

4. Figure out how to rotate images (Done by rotating the display orientation, which is a cleaner approach.)

5. Figure out how to pan around a larger image with one cube, and then make this tilt-dependent. (Done, but needs to be smoother. Takes a leaf from both the sensor demo and the stars demo.)

6. Fix some weird scrolling issues and do image edge detection and handling (In progress)

—— These are optional but will let me do images that are 4x larger ——

7. Devise a scheme for managing asset groups better on the limited cube resources (tough but interesting)

8. Devise a scheme to predict which asset group will be needed next and load in a timely manner to keep the interaction smooth (Hard but very interesting and possibly publishable)
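The tilt-driven panning and edge handling in steps 5 and 6 can be sketched in a few lines. This is only an illustration in Python, not the Sifteo C++ code itself: `pan_step`, `speed`, and `dead_zone` are hypothetical names, and the tilt reading is assumed to be already normalized to the range -1 to 1 on each axis. The only hardware fact it relies on is the 128x128 pixel cube screen.

```python
SCREEN = 128  # a Sifteo cube screen is 128x128 pixels


def clamp(value, low, high):
    """Keep value inside the closed range [low, high]."""
    return max(low, min(high, value))


def pan_step(pos, tilt, image_size, speed=4, dead_zone=0.1, screen=SCREEN):
    """Move the visible window by one frame and stop it at the image edges.

    pos        -- (x, y) of the window's top-left corner, in image pixels
    tilt       -- (tx, ty), each assumed to be normalized to [-1, 1]
    image_size -- (width, height) of the full image, in pixels
    speed      -- hypothetical pixels-per-frame at full tilt
    dead_zone  -- hypothetical threshold below which tilt is ignored
    """
    x, y = pos
    tx, ty = tilt

    # Ignore tiny tilts so the picture does not drift when the cube is at rest.
    if abs(tx) < dead_zone:
        tx = 0
    if abs(ty) < dead_zone:
        ty = 0

    # The window may travel no further than (image size - screen size).
    max_x = max(0, image_size[0] - screen)
    max_y = max(0, image_size[1] - screen)

    return (clamp(x + tx * speed, 0, max_x),
            clamp(y + ty * speed, 0, max_y))
```

Calling `pan_step` once per frame with the latest tilt reading gives scrolling that halts cleanly at each border instead of wrapping or showing garbage past the edge. Scaling the step by tilt magnitude, rather than using a fixed step, is one possible way to make the motion feel smoother.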

2/10/13

This post is meant as a living document to track my status on Project 2. In my sketch, I made a list of steps that needed to be completed for the project, along with their estimated difficulty. Now that I’ve made some progress and added a few things to the list as well, I figure I’ll update this post regularly to reflect where I’m at.

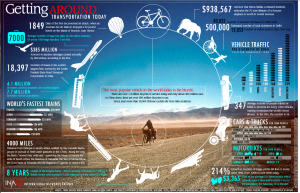

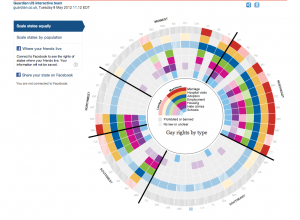

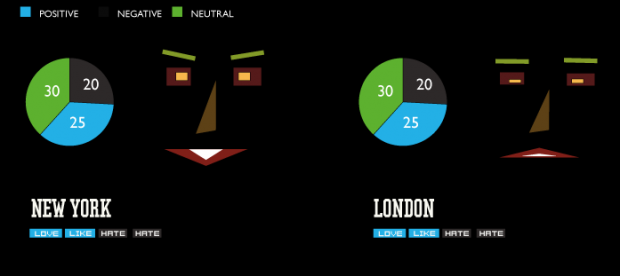

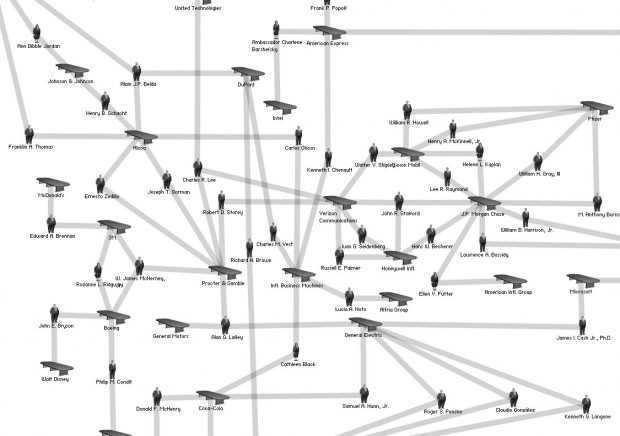

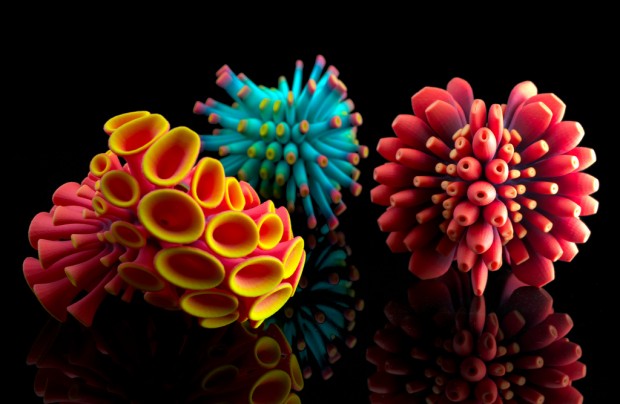

The general idea of the project is to create a system to allow children to explore large images on a small scale by using arrangements of Sifteo cubes as windows through which to view the larger picture. This is an extension of my Project 1 work with Sifteo cubes.

1. Get the newest image from a Dropbox or Git repository (Probably trivial)

2. Write processing script to chop images and autogenerate a Lua script (Done. Also generates a short .h file to store the number of rows and columns.)

3. Regularly run the processing script, re-compile, and re-upload to the Sifteo base (Maybe not too hard)

4. Figure out how to rotate images (Done. There may be more elegant ways to do this.)

5. Figure out how to pan around a larger image with one cube, and then make this tilt-dependent. (probably tough but essential to a good interaction.)

6. Devise a scheme for managing asset groups better on the limited cube resources (tough but interesting)

7. Devise a scheme to predict which asset group will be needed next and load in a timely manner to keep the interaction smooth (Hard but very interesting and possibly publishable)
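For a sense of what the chopping script in step 2 involves, here is a minimal Python sketch of the bookkeeping: working out the tile grid, the crop box for each tile, and the text of the generated Lua and .h files. It is not the actual script. All function names, the `Tile_r_c` naming scheme, the `TILE_ROWS`/`TILE_COLS` macros, and the `group{}` / `image{"..."}` lines (written from memory of the Sifteo asset script style) are assumptions. The crop itself is left out; each box could be passed to an image library's crop call.

```python
TILE = 128  # one tile per 128x128 cube screen


def grid_shape(width, height, tile=TILE):
    """Rows and columns of tiles needed to cover the image (ceiling division)."""
    rows = -(-height // tile)
    cols = -(-width // tile)
    return rows, cols


def tile_boxes(width, height, tile=TILE):
    """Yield (row, col, (left, top, right, bottom)) for every tile.

    Boxes on the right and bottom edges are clipped to the image, so those
    tiles may be smaller than a full screen and might need padding.
    """
    rows, cols = grid_shape(width, height, tile)
    for r in range(rows):
        for c in range(cols):
            left, top = c * tile, r * tile
            right = min(left + tile, width)
            bottom = min(top + tile, height)
            yield r, c, (left, top, right, bottom)


def make_lua(rows, cols, prefix="tile"):
    """Text of an asset script: one group, then one image entry per tile.

    The exact syntax is an assumption, not checked against the Sifteo SDK.
    """
    lines = ["TileAssets = group{}"]
    for r in range(rows):
        for c in range(cols):
            lines.append('Tile_%d_%d = image{"%s_%d_%d.png"}' % (r, c, prefix, r, c))
    return "\n".join(lines) + "\n"


def make_header(rows, cols):
    """Text of a short .h file recording the grid size (macro names are made up)."""
    lines = ["#pragma once",
             "#define TILE_ROWS %d" % rows,
             "#define TILE_COLS %d" % cols]
    return "\n".join(lines) + "\n"
```

For a 300x256 image, `grid_shape(300, 256)` gives 2 rows and 3 columns, and the last column's tiles are only 44 pixels wide, which is the kind of edge case the script has to decide how to handle.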