This project, concerned with augmenting human action and/or perception, explores interactive feedback in the context of high-bandwidth, continuous, real-time human signals: gesture, speech, body movement, or any other measurable property or behavior. You will develop, for example, a gesture processor, a drawing program, a transformative mirror, an audiovisual instrument, or some other system that allows a participant to experience themselves and the world in a new way.

Here are some libraries/environments that might be useful for this project:

OpenCV

OpenCV is an open-source computer vision library used by researchers, artists, roboticists, game companies, and others all over the world. There are bindings for almost every creative media arts toolkit.

Processing

- Hypermedia OpenCV library for Processing & examples

OpenFrameworks

- ofxOpenCV: included with OF

- ofxCv: Kyle McDonald’s OpenCV 2 wrapper, which is easier to use and has lots of examples

Cinder

Max

- cv.jit: computer vision for Jitter

Pure Data

- pdp_opencv & pix_opencv: for GEM, Mac/Linux only

Kinect

The Microsoft Kinect is an inexpensive commercial depth-sensing camera. You can get both an RGB image and a 3D depth map out of it. Some libraries use this depth map to track people by abstracting them into simplified skeletons.
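
When skeleton tracking isn't available, a common starting point is to threshold the depth map so that only things within a chosen distance band remain, then follow the center of that region. The sketch below assumes the depth frame has already been copied out of your Kinect library into a 2D list of millimeter values, with 0 meaning "no reading". The function names and the millimeter convention are illustrative assumptions, not any particular library's API, so check what your wrapper actually returns.

```python
from typing import List, Optional, Tuple

# Hypothetical helpers: a depth frame is assumed to be a 2D list of
# distances in millimetres, with 0 meaning "no reading".
DepthFrame = List[List[int]]
Mask = List[List[bool]]


def threshold_mask(depth: DepthFrame, near_mm: int, far_mm: int) -> Mask:
    """Keep pixels whose depth lies in [near_mm, far_mm], ignoring missing (0) readings."""
    return [[d > 0 and near_mm <= d <= far_mm for d in row] for row in depth]


def mask_centroid(mask: Mask) -> Optional[Tuple[float, float]]:
    """Return the mean (x, y) pixel position of the kept pixels, or None if none were kept."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, kept in enumerate(row):
            if kept:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)
```

For a 3-row by 4-column frame where only the four central pixels fall between 500 and 1200 mm, `mask_centroid(threshold_mask(frame, 500, 1200))` gives `(1.5, 1.5)`. Tracking that point from frame to frame is a crude but responsive stand-in for a hand or body position.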

There are currently three main libraries for working with the Kinect:

- libfreenect: reverse-engineered open source C library

- OpenNI: PrimeSense library with skeleton tracking

- Microsoft Kinect for Windows: official Microsoft SDK with skeleton tracking, Windows only

There are wrappers around some of these libraries for most creative coding environments. Here are a few:

Processing

- Daniel Shiffman’s Kinect in Processing

- simple-openni

OpenFrameworks

- ofxKinect: uses open source freenect library, simple to use, no skeleton tracking, works on Mac/Win/Linux

- ofxOpenNI: uses PrimeSense OpenNI library, more difficult to use, has skeleton tracking, may not work, YMMV

- ofxMSKinect: uses MS Kinect SDK and provides skeleton tracking, Windows only

Cinder

Max

There are also a few pre-built Kinect apps that can stream skeleton data over OSC, so you don’t have to work with the Kinect libraries directly:

- Synapse: Win/Mac; there is a Processing template you might use

- OSCeleton: Win/Mac/Linux
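
Both tools send plain OSC messages, so it helps to know what one looks like on the wire. Below is a minimal Python sketch of OSC 1.0 message encoding and decoding, limited to int32, float32, and string arguments; it does not handle bundles or other type tags. The `/joint` address carrying a joint name, a user id, and x/y/z values is a hypothetical example, so check the Synapse or OSCeleton documentation for the addresses and argument layouts they actually send. In practice, an existing OSC library for your environment will do this work for you.

```python
import struct
from typing import List, Tuple, Union

OscArg = Union[int, float, str]


def _pad_string(s: str) -> bytes:
    """OSC-string: ASCII bytes, a NUL terminator, then NULs up to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def _read_string(packet: bytes, offset: int) -> Tuple[str, int]:
    """Read an OSC-string at offset; return it and the offset just past its padding."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    size = end - offset + 1  # characters plus the terminator
    return text, offset + size + (-size % 4)


def encode_message(address: str, args: List[OscArg]) -> bytes:
    """Build an OSC message: address, type tag string, then big-endian arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):  # bool is a subclass of int; not handled here
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad_string(address) + _pad_string(tags) + payload


def decode_message(packet: bytes) -> Tuple[str, List[OscArg]]:
    """Parse a single OSC message (not a bundle) into (address, arguments)."""
    address, offset = _read_string(packet, 0)
    tags, offset = _read_string(packet, offset)
    if not tags.startswith(","):
        raise ValueError("missing type tag string")
    args: List[OscArg] = []
    for tag in tags[1:]:  # skip the leading ','
        if tag == "i":
            args.append(struct.unpack_from(">i", packet, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", packet, offset)[0])
            offset += 4
        elif tag == "s":
            value, offset = _read_string(packet, offset)
            args.append(value)
        else:
            raise ValueError(f"type tag {tag!r} not handled in this sketch")
    return address, args
```

Round-tripping the hypothetical `encode_message("/joint", ["head", 1, 0.5, 0.25, 2.0])` produces a 40-byte packet that decodes back to the same address and arguments. Floats travel as 32-bit values, so values that float32 cannot represent exactly will come back slightly rounded.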

ARToolKit

ARToolKit (the Augmented Reality Toolkit) is a library for finding and tracking image markers; it also provides a 3D transform for drawing content to fit over each marker. You will need to use the markers provided with the library.
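
The 3D transform is what makes drawn content stick to a marker: points modeled in the marker's own coordinate system are multiplied by the marker's pose matrix to land in camera space. This sketch assumes the pose arrives as a row-major 4x4 homogeneous matrix. That layout and the function names are assumptions, and real wrappers differ (OpenGL-style matrices, for instance, are often column-major), so check your library's convention before reusing the math.

```python
from typing import List, Sequence, Tuple

# Assumed layout: 4 rows of 4 numbers, row-major, acting on column vectors.
Matrix4 = Sequence[Sequence[float]]
Vec3 = Tuple[float, float, float]


def transform_point(pose: Matrix4, p: Vec3) -> Vec3:
    """Apply a 4x4 homogeneous transform to a 3D point (taking w = 1)."""
    x, y, z = p
    ox, oy, oz, ow = (row[0] * x + row[1] * y + row[2] * z + row[3] for row in pose)
    # Divide by w; for a rigid marker pose the bottom row is (0, 0, 0, 1), so w stays 1.
    return (ox / ow, oy / ow, oz / ow)


def place_on_marker(pose: Matrix4, vertices: List[Vec3]) -> List[Vec3]:
    """Hypothetical helper: move content from marker coordinates into camera coordinates."""
    return [transform_point(pose, v) for v in vertices]
```

With a pose that rotates 90 degrees about z and translates by (10, 0, 100), the marker-space point (1, 0, 0) maps to (10, 1, 100). In a real sketch you would usually hand the matrix to your renderer instead of transforming vertices yourself.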

Processing

- NyARToolkit (a tutorial is available)

OpenFrameworks

- ofxARToolkitPlus, Mac only

Cinder

Max

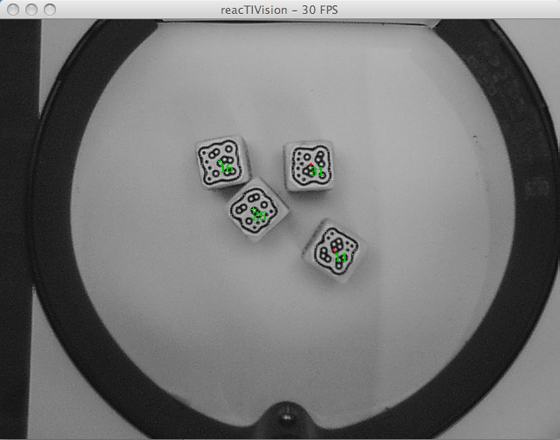

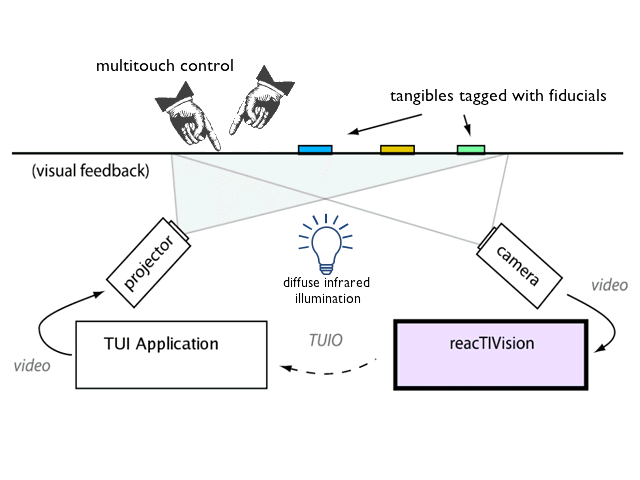

reacTIVision

reacTIVision is a fiducial marker tracking application that streams marker data using the TUIO protocol over OSC. It was developed for the reacTable project and is 2D only, since it is optimized for use with an IR camera and a projector mounted underneath the projection surface. The markers are attached to objects, which are placed face down on the projection surface.
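
TUIO describes the table as a stream of state messages rather than discrete add/remove events, so a client keeps its own list of live objects. The sketch below assumes OSC messages have already been decoded and unpacked from their bundles. It also assumes `/tuio/2Dobj` `set` messages follow the TUIO 1.1 argument order `set s i x y a X Y A m r` (session id, fiducial id, normalized position, angle, then velocities and accelerations), and that `alive` lists the session ids still on the surface. The class and field names are hypothetical; verify the argument order against the TUIO specification.

```python
from dataclasses import dataclass
from typing import Dict, List, Union

OscArg = Union[str, int, float]


@dataclass
class TuioObject:  # hypothetical container, not part of any TUIO library
    session_id: int
    fiducial_id: int  # which printed marker this is
    x: float          # normalized 0..1 across the surface
    y: float
    angle: float      # radians


class ObjectTracker:
    """Keeps the set of live fiducials from decoded /tuio/2Dobj messages."""

    def __init__(self) -> None:
        self.objects: Dict[int, TuioObject] = {}

    def handle(self, address: str, args: List[OscArg]) -> None:
        if address != "/tuio/2Dobj" or not args:
            return
        command = args[0]
        if command == "set":
            # Assumed order: s i x y a X Y A m r; velocities and accelerations are ignored.
            s, i, x, y, a = args[1:6]
            self.objects[s] = TuioObject(s, i, x, y, a)
        elif command == "alive":
            alive = set(args[1:])
            for sid in list(self.objects):
                if sid not in alive:
                    del self.objects[sid]  # object was lifted off the surface
        # "fseq" (frame sequence number) is ignored in this sketch.
```

Treating `alive` as the source of truth for removals, instead of waiting for an explicit "removed" message, matches TUIO's state-based design and tolerates dropped UDP packets.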

See the reacTIVision page for the app, along with plenty of examples and templates for Processing, OF, Max, Pure Data, etc.

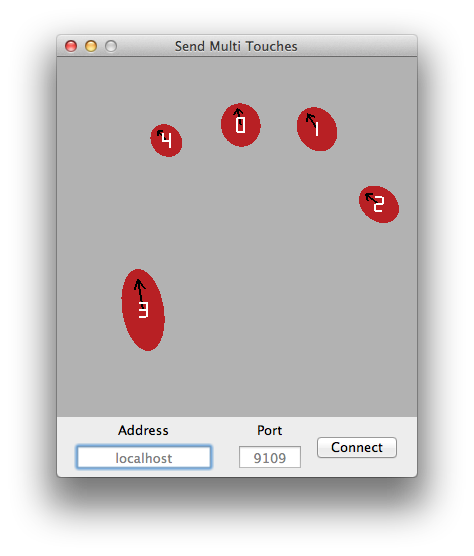

SendMultiTouches

A project from IACD 2012, Duncan Boehle’s SendMultiTouches is a small Mac-only app that streams multitouch data from a MacBook trackpad via OSC. Examples for Flash, Processing, and Pure Data are included.
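
Receiving any of these OSC streams starts with a UDP socket bound to the port the sender targets. This Python sketch binds a socket, waits for one datagram, and reads the leading OSC-string, which is a message's address or `#bundle` for a bundle. The function names are hypothetical, and the `/touch` address used in the usage note is a placeholder; check SendMultiTouches' own examples for the port and addresses it actually uses.

```python
import socket
from typing import Tuple


def open_listener(port: int, host: str = "127.0.0.1") -> socket.socket:
    """Bind a UDP socket; OSC over UDP carries one message or bundle per datagram."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def osc_address(packet: bytes) -> str:
    """Return the leading OSC-string: a message's address, or '#bundle' for a bundle."""
    return packet[: packet.index(b"\x00")].decode("ascii")


def receive_one(sock: socket.socket, timeout: float = 1.0) -> Tuple[str, bytes]:
    """Wait up to `timeout` seconds for one datagram; return (address, raw packet)."""
    sock.settimeout(timeout)
    packet, _sender = sock.recvfrom(65535)
    return osc_address(packet), packet
```

Passing port 0 to `open_listener` asks the operating system for any free port, which is handy for testing on one machine; for a real sender, bind to the port it is configured to send to, and use `"0.0.0.0"` as the host if the data comes from another computer.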